Hidden Markov models

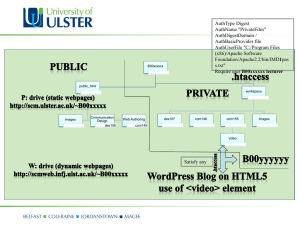

advertisement

Hidden Markov Models

Reading:

Russell and Norvig, Chapter 15, Sections 15.1-15.3

Recall for Bayesian networks:

General question: Given query variable X and observed evidence

variable values e, what is P(X|e)?

P ( X | e)

P ( X , e)

(definitio

n of conditiona

l probabilit

y)

P (e )

P ( X , e)

P ( X , e, y )

1

P (e )

(where Y are the non - evidence

variables

other than

y

y

P ( z | parents

z { X , e , y }

( Z ))

(semantics

of Bayesian

networks)

X)

Dynamic Bayesian Networks

• General dynamic Bayesian network: any number of random

variables, which can be discrete or continuous

• Observations are taken in time steps.

• At each time step, observe some of the variables (evidence

variables). Other variables are unobserved or “hidden”.

Simple example of HMM

(adapted from Russell and Norvig, Chapter 15)

You are a graduate student in a windowless office with no

phone and your network connection is down. The only way

you can get information about the weather outside is whether

or not your advisor shows up carrying an umbrella.

HMM for this scenario: Evidence variable: Umbrella {T, F}

Hidden variable Rain {T, F}

Raint-1

P(Raint)

T

0.7

F

0.3

Raint-1

Umbrellat-1

Raint

Umbrellat

P(Rain0 =T ) = 0.5

Raint+1

Umbrellat+1

Raint

P(Umbrellat)

T

0.9

F

0.2

t

P ( R 0 , R 1 ,..., R t , U 0 , U 1 ,..., U t ) P ( R 0 ) P ( R i | R i 1 ) P (U i | R i )

i 1

Markov model since Rt depends only on Rt-1.

Inference in Hidden Markov Models

• Inference tasks:

– Filtering (or monitoring): Computing belief

state―posterior distribution over current state, given all

evidence to date:

P ( X t | e 1:t )

“Given that my advisor has had an umbrella for the last

three days, what’s the probability it is raining today?”

Inference in Hidden Markov Models

– Prediction: Computing posterior distribution over the

future state, given all evidence to date:

P ( X t k | e 1:t ), k 0

“Given that my advisor has had an umbrella for the last

three days, what’s the probability it will rain the day after

tomorrow?”

– Smoothing (or hindsight): Computing posterior

probability over a past state, given all evidence up to the

present:

P ( X k | e 1:t ), 0 k t

“Given that my advisor has had an umbrella for the last

three days, what’s the probability it rained yesterday?

– Most likely explanation: Given a sequence of

observations, finding the sequence of states most likely to

have generated those observations:

arg max P ( x 1:t | e 1:t )

x 1:t

“Given that my advisor has had an umbrella for the last

three days, what’s the most likely sequence of weather over

the past 3 days?”

Inference algorithms

• Filtering: Can use recursive estimation

P ( X t 1 | e 1:t 1 ) P ( X t 1 | e 1:t , e t 1 ) (dividing

up the evidence)

P ( e t 1 | X t 1 , e 1:t ) P( X t 1 | e 1:t ) (by Bayes rule)

P ( e t 1 | X t 1 ) P( X t 1 | e 1:t ) (evidence

at t 1 depends only on hidden state at t 1)

Inference algorithms

• Filtering: Can use recursive estimation

P ( X t 1 | e 1:t 1 ) P ( X t 1 | e 1:t , e t 1 ) (dividing

up the evidence)

P ( e t 1 | X t 1 , e 1:t ) P( X t 1 | e 1:t ) (by Bayes rule)

P ( e t 1 | X t 1 ) P( X t 1 | e 1:t ) (evidence

The value of the first term,

network.

at t 1 depends only on hidden state at t 1)

P ( e t 1 | X t 1 ) ,

is given explicitly in the

Inference algorithms

• Filtering: Can use recursive estimation

P ( X t 1 | e 1:t 1 ) P ( X t 1 | e 1:t , e t 1 ) (dividing

up the evidence)

P ( e t 1 | X t 1 , e 1:t ) P( X t 1 | e 1:t ) (by Bayes rule)

P ( e t 1 | X t 1 ) P( X t 1 | e 1:t ) (evidence

The value of the first term,

network.

at t 1 depends only on hidden state at t 1)

P ( e t 1 | X t 1 ) ,

is given explicitly in the

The value of the second term is:

P( X t 1 | e 1:t )

P (X

xt

t 1

| x t , e 1:t ) P ( x t | e 1:t )

Inference algorithms

• Filtering: Can use recursive estimation

P ( X t 1 | e 1:t 1 ) P ( X t 1 | e 1:t , e t 1 ) (dividing

up the evidence)

P ( e t 1 | X t 1 , e 1:t ) P( X t 1 | e 1:t ) (by Bayes rule)

P ( e t 1 | X t 1 ) P( X t 1 | e 1:t ) (evidence

The value of the first term,

network.

at t 1 depends only on hidden state at t 1)

P ( e t 1 | X t 1 ) ,

is given explicitly in the

The value of the second term is:

P( X t 1 | e 1:t )

P (X

t 1

| x t , e 1:t ) P ( x t | e 1:t )

xt

Thus:

P ( X t 1 | e 1:t 1 ) P ( e t 1 | X t 1 ) P ( X t 1 | x t , e 1:t ) P ( x t , e 1:t )

xt

P ( e t 1 | X t 1 ) P ( X t 1 | x t ) P ( x t , e 1:t ) using the Markov property

xt

Inference algorithms

From the network, we have

everything except P(xt, e1:t).

Can estimate

recursively.

Thus:

P ( X t 1 | e 1:t 1 ) P ( e t 1 | X t 1 ) P ( X t 1 | x t , e 1:t ) P ( x t , e 1:t )

xt

P ( e t 1 | X t 1 ) P ( X t 1 | x t ) P ( x t , e 1:t ) using the Markov property

xt

Umbrella example:

• Day 1: Umbrella1 = U1 = T

Prediction t = 0 to t = 1:

P ( R1 )

P(R

1

| r0 ) P ( r0 ) 0 . 7 , 0 . 3 0 . 5 0 . 3 , 0 . 7 0 . 5

r0 { T , F }

0 .5 ,0 .5

Updating with evidence for t=1:

P ( R1 | u 1 ) P ( u 1 | R1 ) P ( R1 ) 0 . 9 , 0 . 2 0 . 5 , 0 . 5

0 . 45 , 0 . 1 0 . 818 , 0 . 182

Prediction t = 1 to t = 2:

P ( R 2 | u1 )

P(R

2

| r1 ) P ( r1 | u 1 ) 0 . 7 , 0 . 3 0 . 818 0 . 3 , 0 . 7 0 . 182 0 . 627 , 0 . 373

r1

Updating with evidence for t=2:

P ( R1 | u 1:2 ) P ( u 2 | R 2 ) P ( R 2 | u 1 ) 0 . 9 , 0 . 2 0 . 627 , 0 . 373

0 . 565 , 0 . 075 0 . 883 , 0 . 117

Why does probability of rain increase from day 1 to day 2?

Hidden Markov Models: Matrix Representations

• Transition model: P(Xt | Xt1) = T (SS matrix) where

Ti , j P ( X t j | X t 1 i )

• For umbrella model:

T

0 .7

T P ( X t | X t 1 )

0 .3

F

0 .3 T

0 .7 F

• Sensor model: P(et | Xt = i ) =O (SS diagonal matrix) where

O i, j

P ( e t | X t i ), i j

0 otherwise

• For umbrella model:

0 .9

O P ( e t | X t )

0

0

0 .2

Speech Recognition

• Task: Identify sequence of words uttered by speaker, given

acoustic signal.

• Uncertainty introduced by noise, speaker error, variation in

pronunciation, homonyms, etc.

• Thus speech recognition is viewed as problem of probabilistic

inference.

•

Speech recognition typically makes three assumptions:

1. Process underlying change is itself “stationary”

i.e., state transition probabilities don’t change

2. Current state X depends on only a finite history of

previous states (“Markov assumption”).

– Markov process of order n: Current state depends

only on n previous states.

3. Values et of evidence variables depend only on current

state Xt. (“Sensor model”)

Speech Recognition

• Input: acoustic signal

• Inference: P(words | signal)

• Bayes rule: P(words | signal) = P(signal | words) P(words)

• P(signal | words): acoustic model

– pronunciation model (for each word, distribution over

possible phone sequences)

– signal model (distribution of features of acoustic signal

over phones)

• P(words): language model – prior probability of each

utterance (e.g., bigram model)

Russell and Norvig, Artificial Intelligence: A Modern Approach, Chapter 15

Phone model

P( phone | frame features) = P(frame features| phone) P(phone)

P(frame features| phone) often represented by Gaussian mixture

model

Pronunciation model

Now we want

P (words|phones1:t ) = P(phones1:t | words) P(words)

Represent P(phones1:t | words) as an HMM

More Generally:

Components of an HMM

Raint-1

P(Raint)

T

0.7

F

0.3

Raint-1

Umbrellat-1

Raint

Umbrellat

P(Rain0 =T ) = 0.5

Raint+1

Umbrellat+1

Raint

P(Umbrellat)

T

0.9

F

0.2

Model consists of sequence of hidden states, sequence of observation states,

probability of each hidden state given previous hidden state, probability of each

hidden state given current observation, and prior probability of first hidden state.

Possible states:

S = {S1, ..., SN}

Raint-1

P(Raint)

T

0.7

F

0.3

Raint-1

Umbrellat-1

Raint

Umbrellat

P(Rain0 =T ) = 0.5

Raint+1

Umbrellat+1

Raint

P(Umbrellat)

T

0.9

F

0.2

Model consists of sequence of hidden states, sequence of observation states,

probability of each hidden state given previous hidden state, probability of each

hidden state given current observation, and prior probability of first hidden state.

State transition

probabilities

A = [aij],

aij=P(qt+1=Sj|qt=Si)

Raint-1

P(Raint)

T

0.7

F

0.3

Raint-1

Umbrellat-1

Raint

Umbrellat

P(Rain0 =T ) = 0.5

Raint+1

Umbrellat+1

Raint

P(Umbrellat)

T

0.9

F

0.2

Model consists of sequence of hidden states, sequence of observation states,

probability of each hidden state given previous hidden state, probability of each

hidden state given current observation, and prior probability of first hidden state.

Raint-1

P(Raint)

T

0.7

F

0.3

Raint-1

P(Rain0 =T ) = 0.5

Possible

observationsRain

Rain

t

t+1

(or “emissions”):

V={v1, ..., vM}

Umbrellat-1

Umbrellat

Umbrellat+1

Raint

P(Umbrellat)

T

0.9

F

0.2

Model consists of sequence of hidden states, sequence of observation states,

probability of each hidden state given previous hidden state, probability of each

hidden state given current observation, and prior probability of first hidden state.

Raint-1

P(Raint)

T

0.7

F

0.3

Raint-1

Umbrellat-1

Raint

Umbrellat

Observation (emission)

probabilities:

P(Rain0 =T ) = 0.5

B = [bj(m)]

bj(m)=P(Ot=vm|qt=Si)

Raint+1

Umbrellat+1

Raint

P(Umbrellat)

T

0.9

F

0.2

Model consists of sequence of hidden states, sequence of observation states,

probability of each hidden state given previous hidden state, probability of each

hidden state given current observation, and prior probability of first hidden state.

Initial state probabilities:

= [i]

i =P(q1=Si)

Raint-1

P(Raint)

T

0.7

F

0.3

Raint-1

Umbrellat-1

Raint

Umbrellat

P(Rain0 =T ) = 0.5

Raint+1

Umbrellat+1

Raint

P(Umbrellat)

T

0.9

F

0.2

Model consists of sequence of hidden states, sequence of observation states,

probability of each hidden state given previous hidden state, probability of each

hidden state given current observation, and prior probability of first hidden state.

Learning an HMM

Baum-Welch algorithm (also known as “forward-backward algorithm),

similar to Expectation-Maximization