Data Screaming! - KolobKreations


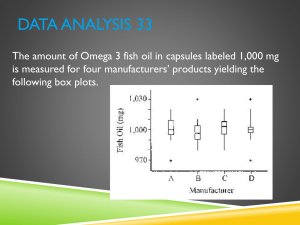

Data Screaming!
Validating and Preparing your data
Lyytinen & Gaskin

Data Screening
• Data screening (known to us as “data screaming”) ensures your data is “clean” and ready to go before you conduct your planned statistical analyses.
• Data must always be screened to ensure it is reliable and valid for testing the type of causal theory you have planned.
• Screening and cooking are not synonymous: screening is like preparing the best ingredients for your gourmet food!

Necessary Data Screening To Do:
• Handle missing data
• Address outliers and influentials
• Meet multivariate statistical assumptions for alternative tests (scales, n, normality, covariance)

Statistical Problems with Missing Data
• If you are missing much of your data, this can cause several problems; e.g., the software can’t calculate the estimated model.
• EFA, CFA, and path models require a certain minimum number of data points in order to compute estimates, and each missing data point reduces your valid n by 1.
• Greater model complexity (number of items, number of paths) and improved power require larger samples.

Logical Problem with Missing Data
• Missing data may indicate systematic bias, because respondents may have skipped particular questions in your survey for a common cause (poor formulation, sensitivity, etc.).
• For example, if you ask about gender, and females are less likely to report their gender than males, then you will have “male-biased” data. Perhaps only 50% of the females reported their gender, but 95% of the males did.
• If you use gender as a moderator in your causal models, then you will be heavily biased toward males, because you will not end up using the responses from females who did not report their gender. You may also have a biased sample of female respondents.
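The arithmetic behind the gender example can be sketched in a few lines of Python. This is only an illustration: the 50% and 95% reporting rates come from the slide, while the sample sizes (100 females, 100 males) and the function name `observed_female_share` are assumptions made up for the example.

```python
def observed_female_share(n_female, n_male, female_rate, male_rate):
    """Share of females among respondents who actually reported their gender."""
    reported_female = n_female * female_rate
    reported_male = n_male * male_rate
    return reported_female / (reported_female + reported_male)


# Hypothetical sample: 100 females and 100 males (the sizes are an assumption;
# only the 50% and 95% reporting rates come from the slide).
true_share = 100 / (100 + 100)
observed_share = observed_female_share(100, 100, 0.50, 0.95)

print(f"true female share:     {true_share:.1%}")
print(f"observed female share: {observed_share:.1%}")
```

Under these assumed sample sizes, females make up half of the sample but only about 34.5% of the records that have a usable gender value, which is the “male-biased” data the slide warns about.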
Detecting Missing Values
[Figure: screenshot with numbered steps 1 to 3]

Handling Missing Data
• Missing less than 10% from a variable or respondent is typically not problematic (unless you lose specific items, or one end of the tail)
• Method for handling missing data:
  – >10%: Just don't use that variable/respondent, unless doing so takes you below an acceptable n
  – <10%: Impute if not categorical
  – Warning: If you remove too many respondents, you will introduce response bias
• If the DV is missing, then there is little you can do with that record
• One alternative is to impute and run models with and without missing data to see how sensitive the result is

Imputation Methods (Hair, table 2-2)
• Use only valid data
  – No imputation, just use valid cases or variables
  – In SPSS: Exclude Pairwise (variable), Listwise (case)
• Use known replacement values
  – Match missing value with similar case’s value
• Use calculated replacement values
  – Use variable mean, median, or mode
  – Regression based on known relationships
• Model-based methods
  – Iterative two-step estimation of value and descriptives to find the most appropriate replacement value

Mean Imputation in SPSS
[Figure: SPSS screenshot with numbered steps 1 to 4]
2. Include each variable that has values that need imputing
3. For each variable you can choose the new name (for the imputed column) and the type of imputation

Best Method – Prevention!
• Short surveys (pre-testing critical!)
• Easy to understand and answer survey items (pre-testing critical)
• Force completion (incentives, technology)
• Bribe/motivate (iPad drawing)
• Digital surveys (rather than paper)
• Put dependent variables at the beginning of the survey!

Order for handling missing data
1. First decide which variables are going to be used in the model
2. Then handle missing data based on that set of variables
3. Then decide the method to handle missing data (see Hair Chapter 2)

Outliers and Influentials
• Outliers can influence your results, pulling the mean away from the median.
• Outliers also affect distributional assumptions and often reflect false or mistaken responses
• Two types of outliers:
  – outliers for individual variables (univariate)
    • Extreme values for a single variable
  – outliers for the model (multivariate)
    • Extreme (uncommon) values for a correlation

Detecting Univariate Outliers
[Figure: boxplot annotated with “Mean”, “Outliers!”, “50% should fall within the box”, and “99% should fall within this range”]

Handling Univariate Outliers
• Univariate outliers should be examined on a case-by-case basis.
• If the outlier is truly abnormal, and not representative of your population, then it is okay to remove it. But this requires careful examination of the data points
  – e.g., you are studying dogs, but somehow a cat got hold of your survey
  – e.g., someone answered “1” for all 75 questions on the survey
• However, just because a data point doesn’t fit comfortably with the distribution does not make it a candidate for removal

Detecting Multivariate Outliers
• Multivariate outliers are sets of data points (tuples) that do not fit the standard sets of correlations exhibited by the other data points in the dataset with regard to your causal model.
• For example, if all but one person in the dataset report that diet has a positive effect on weight loss, but this one guy reports that he gains weight when he diets, then his record would be considered an outlier.
• To detect these influential multivariate outliers, you need to calculate the Mahalanobis d-squared.
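For readers who want to see what the Mahalanobis d-squared measures, here is a minimal two-variable sketch in plain Python. It is not the AMOS procedure: the data (`diet_score`, `weight_loss`) are invented to mirror the diet example, the function names are hypothetical, and the p-value uses a chi-square approximation with 2 degrees of freedom, which may not match the p1 column in AMOS exactly.

```python
import math
from statistics import mean


def mahalanobis_d2_two_vars(xs, ys):
    """Mahalanobis d-squared of each (x, y) row from the sample centroid."""
    n = len(xs)
    mx, my = mean(xs), mean(ys)
    # Sample variances and covariance (n - 1 denominator).
    sxx = sum((x - mx) ** 2 for x in xs) / (n - 1)
    syy = sum((y - my) ** 2 for y in ys) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)
    det = sxx * syy - sxy ** 2
    d2 = []
    for x, y in zip(xs, ys):
        dx, dy = x - mx, y - my
        # Quadratic form with the closed-form inverse of the 2x2 covariance matrix.
        d2.append((syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det)
    return d2


def chi2_upper_tail_2df(value):
    """P(chi-square with 2 df > value); with 2 df this equals exp(-value / 2)."""
    return math.exp(-value / 2)


# Hypothetical data: ten people lose more weight the more they diet;
# the last person diets a lot but gains weight.
diet_score = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 9]
weight_loss = [1.1, 1.9, 3.2, 3.8, 5.1, 6.0, 6.9, 8.2, 8.8, 10.1, -2.0]

d2 = mahalanobis_d2_two_vars(diet_score, weight_loss)
p_values = [chi2_upper_tail_2df(v) for v in d2]

# Rows whose approximate p-value falls below .05 are candidates for inspection.
flagged = [row for row, p in enumerate(p_values) if p < 0.05]
print(flagged)
```

The point of the sketch is that the last row is not extreme on either variable alone; it stands out only because it contradicts the correlation between the two variables.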
(Easy in AMOS)
[Figure: AMOS Mahalanobis output; the observation numbers are row numbers from SPSS]
Anything less than .05 in the p1 column is abnormal, and is a candidate for inspection

Handling Multivariate Outliers
• Create a new variable in SPSS called “Outlier”
  – Code 0 where the Mahalanobis p1 value is > .05
  – Code 1 where the Mahalanobis p1 value is < .05
• I have a tool for this if you want…
• Then in AMOS, when selecting data files, use “Outlier” as a grouping variable, with the grouping value set to 0
  – This then runs your model with only non-outliers

Before and after removing outliers
[Figure: model results BEFORE (N=340) and AFTER (N=295)]
Even after you remove outliers, the Mahalanobis will come up with a whole new set of outliers, so these should be checked on a case-by-case basis, using the Mahalanobis as a guide for inspection.

“Best Practice” for outliers
• In general, it is a bad idea to remove outliers, unless they are truly “abnormal” and do not represent accurate observations from the population. The logic of removal needs to be based on the semantics of the data
• Removing outliers (especially en masse, as demonstrated with the Mahalanobis values) is risky because it decreases your ability to generalize: you do not know the cause of this type of variance, and it may be more than just noise.

Statistical Assumptions
Part of data screening is ensuring you meet the four main statistical assumptions for multivariate data analysis:
1. Normality
2. Homoscedasticity
3. Linearity
4. Multicollinearity
These assumptions are intended to hold for scalar and continuous variables, rather than categorical (we prefer gender to be bimodal)

Normality
• Normality refers to the distributional assumptions of a variable.
• We usually assume in covariance-based models that the data is normally distributed, even though many times it is not!
• Other methods, like PLS or binomial regression, do not require such assumptions
• t tests and F tests assume normal distributions
• Normality is assessed in many ways: shape, skewness, and kurtosis (flat/peaked).
• Normality issues affect small sample sizes (<50) much more than large sample sizes (>200)
[Figure: example distributions illustrating shape (bimodal, flat), skewness, and kurtosis]

Tests for Skewness and Kurtosis
[Figure: screenshot with numbered steps 1 to 3]
• Relaxed rule:
  – Skewness > 1 = positive (right) skewed
  – Skewness < -1 = negative (left) skewed
  – Skewness between -1 and 1 is fine
• Strict rule:
  – Abs(Skewness) > 3*Std. error = Skewed
  – Same for Kurtosis

Tests for Normality
[Figure: screenshot with numbered steps 1 to 4]
SPSS: Analyze > Explore > Plots > Normality
*Neither of these variables would be considered normally distributed according to the Kolmogorov-Smirnov (KS) or Shapiro-Wilk (SW) measures, but a visual inspection shows that role conflict (left) is roughly normal and participation (right) is positively skewed. So, ALWAYS conduct visual inspections!

Fixing Normality Issues
• Fix flat distribution with:
  – Inverse: 1/X
• Fix negative skewed distribution with:
  – Squared: X*X
  – Cubed: X*X*X
• Fix positive skewed distribution with:
  – Square root: SQRT(X)
  – Logarithm: LG10(X)

Before and After Transformation
[Figure: histograms of a negative skewed variable before and after the cubed transformation]

Homoscedasticity
• Homoscedasticity is a nasty word that helps impress your listeners!
• If a relationship has this property, it means that the DV exhibits consistent variance across different levels of the IV.
• A simple way to determine whether a relationship is homoscedastic is to do a scatter plot with the IV on the x-axis and the DV on the y-axis.
• If the plot shows a linear pattern with an even spread of points around it, and has a substantial R-square, we have homoscedasticity!
• If there is no linear pattern, or the spread changes across levels of the IV, and the R-square is low, then the relationship is heteroscedastic.

Scatterplot approach

Linearity
• Linearity refers to the consistent slope of change that represents the relationship between an IV and a DV.
• If the relationship between the IV and the DV is radically inconsistent, then your data is not linear, and this will throw off your SEM analyses
• Sometimes you can achieve linearity with transformations (e.g., log-linear).
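Since transformations come up both here and in the normality slides, the following sketch shows the strict skewness rule (Abs(Skewness) > 3*Std. error) applied before and after a LG10 transformation. It is an illustration outside SPSS: the data are invented, the function names are hypothetical, and the skewness and standard-error formulas are the adjusted sample versions commonly reported by statistics packages, assumed here rather than taken from the slides.

```python
import math


def skewness_and_se(values):
    """Return (adjusted sample skewness, standard error of skewness)."""
    n = len(values)
    m = sum(values) / n
    m2 = sum((v - m) ** 2 for v in values) / n  # second central moment
    m3 = sum((v - m) ** 3 for v in values) / n  # third central moment
    g1 = m3 / m2 ** 1.5
    # Small-sample adjustment of the moment-based skewness g1.
    skew = g1 * math.sqrt(n * (n - 1)) / (n - 2)
    se = math.sqrt(6 * n * (n - 1) / ((n - 2) * (n + 1) * (n + 3)))
    return skew, se


def is_skewed_strict(values):
    """Strict rule from the slide: Abs(Skewness) > 3 * Std. error."""
    skew, se = skewness_and_se(values)
    return abs(skew) > 3 * se


# Hypothetical positively skewed variable (long right tail).
raw = [1, 1, 1, 2, 2, 2, 2, 3, 3, 3, 4, 4, 5, 6, 8, 12, 20, 35, 60, 100]
logged = [math.log10(v) for v in raw]  # the LG10(X) fix for positive skew

for label, data in (("raw", raw), ("log10", logged)):
    skew, se = skewness_and_se(data)
    print(f"{label}: skewness={skew:.2f}, 3*SE={3 * se:.2f}, "
          f"skewed={is_skewed_strict(data)}")
```

With this made-up variable the raw values fail the strict rule and the log-transformed values pass it; real data will not always behave so neatly, which is why the slides insist on visual inspection as well.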
[Figure: two example scatterplots, labeled “Good” and “Bad”]

Multicollinearity
• Multicollinearity is not desirable in regressions (but desirable in factor analysis!).
• It means that independent variables are too highly correlated with each other and share too much variance
• It influences the accuracy of estimates for the DV and inflates error terms for the DV (Hair).
• How much unique variance does the black circle actually account for?

Detecting Multicollinearity
• An easy way to check this is to calculate a Variance Inflation Factor (VIF) for each independent variable: run a multiple regression using one of the IVs as the dependent variable, regressing it on all the remaining IVs. Then swap out the IVs one at a time.
• The rules of thumb for the VIF are as follows:
  – VIF < 3; no problem
  – VIF > 3; potential problem
  – VIF > 5; very likely problem
  – VIF > 10; definitely problem

Handling Multicollinearity
[Figure: VIF output from two tests]
Loyalty 2 and Loyalty 3 seem to be too similar in both of these tests
Dropping Loyalty 2 fixed the problem
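The swap-one-IV-at-a-time procedure can be sketched in plain Python as follows. This is a minimal illustration, not the SPSS output shown on the slide: each IV is regressed on the others by ordinary least squares and the VIF is computed as 1 / (1 - R-square). The variable names borrow the Loyalty labels from the slide, but the values are invented, and the helper names (`solve_linear_system`, `r_squared`, `vif_table`) are hypothetical.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def r_squared(y, predictors):
    """R-square of an OLS regression of y on the predictors (with intercept)."""
    n = len(y)
    cols = [[1.0] * n] + predictors
    # Normal equations: (X'X) beta = X'y
    xtx = [[sum(p * q for p, q in zip(ci, cj)) for cj in cols] for ci in cols]
    xty = [sum(p * q for p, q in zip(ci, y)) for ci in cols]
    beta = solve_linear_system(xtx, xty)
    fitted = [sum(bk * col[i] for bk, col in zip(beta, cols)) for i in range(n)]
    y_mean = sum(y) / n
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - y_mean) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


def vif_table(ivs):
    """Return {name: VIF}; each IV in turn is regressed on all the others."""
    result = {}
    for name, column in ivs.items():
        others = [col for other, col in ivs.items() if other != name]
        result[name] = 1 / (1 - r_squared(column, others))
    return result


# Hypothetical survey items; loyalty2 and loyalty3 are nearly identical.
ivs = {
    "loyalty1": [3, 5, 2, 6, 4, 7, 1, 5, 3, 6],
    "loyalty2": [2, 4, 6, 3, 7, 5, 1, 6, 4, 2],
    "loyalty3": [2, 4, 6, 3, 7, 5, 2, 6, 4, 1],
}
vifs_before = vif_table(ivs)
vifs_after = vif_table({k: v for k, v in ivs.items() if k != "loyalty2"})

for name, value in vifs_before.items():
    print(f"before: {name} VIF = {value:.2f}")
for name, value in vifs_after.items():
    print(f"after dropping loyalty2: {name} VIF = {value:.2f}")
```

In this invented dataset the two near-duplicate items both exceed the VIF > 10 threshold, and dropping one of them brings the remaining VIFs below 3, which mirrors the Loyalty 2 and Loyalty 3 case on the slide.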