The Edge of Smartness - University of Calgary

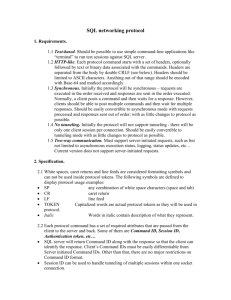

The Edge of Smartness

Carey Williamson

iCORE Chair and Professor

Department of Computer Science

University of Calgary

Email: carey@cpsc.ucalgary.ca

May 14, 2011

Networks Conference

Copyright © 2005 Department of Computer Science


Main Message

• Now, more than ever, we need “smart edge” devices to enhance the performance, functionality, and efficiency of the Internet

[Figure: two end-system protocol stacks (Application, Transport, Network, Data Link, Physical) connected through the core network]

Talk Outline

• The End-to-End Principle: Revisited

• The Smart Edge: Motivation and Definition

• Example 1: Redundant Traffic Elimination

• Example 2: TCP Incast Problem

• Example 3: Speed Scaling Systems

• Future Outlook and Opportunities

• Questions and Discussion

The End-to-End Principle

• Central design tenet of the Internet (simple core)

• Represented in design of TCP/IP protocol stack

• Wikipedia: Whenever possible, communication

protocol operations should be defined to occur

at the end-points of a communications system

• Some good reading:

– J. Saltzer, D. Reed, and D. Clark, “End-to-End

Arguments in System Design”, ACM ToCS, 1984

– M. Blumenthal and D. Clark, “Rethinking the Design

of the Internet: The end to end arguments vs. the

brave new world”, ACM ToIT, 2001

Internet Protocol Stack

[Figure: protocol stack columns (Application, Transport, Network, Data Link, Physical) for the end hosts and an intermediate router]

The End-to-End Principle: Revisited

• Claim: The ongoing evolution of the Internet is

blurring our notion of what an end system is

• This is true for both client side and server side

– Client: mobile phones, proxies, middleboxes, WLAN

– Server: P2P, cloud, data centers, CDNs, Hadoop

• When something breaks in the Internet protocol

stack, we have to find a suitable retrofit to make

it work properly

• We have done this repeatedly for decades,

and will likely keep doing it again and again!

(Selected) Existing Examples

• Mobility: Mobile IP, MoM, Home/Foreign Agents

• Small devices: mobile portals, content transcoding

• Web traffic volume: proxy caching, CDNs

• Wireless: I-TCP, Proxy TCP, Snoop TCP, cross-layer

• IP address space: Network Address Translation (NAT)

• Multi-homing: smart devices, cognitive networks, SDR

• Big data: P2P file sharing, BT, download managers

• P2P file sharing: traffic classification, traffic shapers

• Security concerns: firewalls, intrusion/anomaly detection

• Intermittent connectivity: delay-tolerant networks (DTN)

• Deep space: inter-planetary IP

The Smart Edge

• Putting new functionality in a “smart edge”

device seems like a logical choice, for reasons

of performance, functionality, efficiency, security

• What is meant by “smart”?

– Interconnected: one or more networks; define basic

information units; awareness of location/context

– Instrumented: suitably represent user activities;

location, time, identity, and activity; perf metrics

– Intelligent: provisioning, management, adaptation;

appropriate decision-making in real-time

Example 1:

Redundant Traffic Elimination

Motivation for RTE

• A lot of the data content carried on the Internet

today is (partially) redundant

• Examples:

– Spam email that we receive (CIBC, RBC, …)

– Regular email that we receive (drafts)

– Web pages that we visit (U of X)

• It would be nice to avoid having to send this

redundant data more than once (especially on

low-bandwidth links!)

Basic Principles of RTE

• If you can “remember” what you have

sent before, then you don’t have to

send another copy

• Redundant Traffic Elimination (RTE)

• Done using a dictionary of chunks and

their associated fingerprints

• Examples:

– Joke telling by certain CS professors

– Data deduplication in storage systems (90%)

– “WAN Optimization” in networks (20%)

A Toy Example

Mary had a little lamb
Its fleece was white as snow
And everywhere that Mary went
That lamb was sure to go
It followed her to school one day
Which was against the rule
It made the children laugh and play
To see a lamb at school

Mary had a little lamb
A little pork, a little ham
Mary had a little lamb
And then she had dessert!

Chunk Granularity Issue

• Object: large potential savings, but exact

hits will be very rare

• Paragraph: very few repeats though

• Sentence: some repeats, some savings

• Chunk: “just right” size and savings

• Word: lots of repeats, small savings

• Letter: finite alphabet, many hits, but

relatively high overhead to encode
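The granularity trade-off can be made concrete on the second toy text. The sketch below only counts how often a unit repeats an earlier one at line, word, and letter granularity; it does not model the encoding overhead mentioned above, and the helper name `repeat_fraction` is an illustrative assumption, not something from the talk.

```python
def repeat_fraction(units):
    """Fraction of units that duplicate some earlier unit in the sequence."""
    units = list(units)
    return 1 - len(set(units)) / len(units)


# Second toy text from the previous slide, lower-cased so "A" and "a" match.
TEXT = (
    "Mary had a little lamb\n"
    "A little pork, a little ham\n"
    "Mary had a little lamb\n"
    "And then she had dessert!"
).lower()

by_line = repeat_fraction(TEXT.split("\n"))          # coarse units: few repeats
by_word = repeat_fraction(TEXT.split())              # finer units: more repeats
by_letter = repeat_fraction(TEXT.replace("\n", ""))  # finest units: mostly repeats

if __name__ == "__main__":
    print(f"line:   {by_line:.2f}")
    print(f"word:   {by_word:.2f}")
    print(f"letter: {by_letter:.2f}")
```

Finer units repeat more often, but each hit saves fewer bytes, which is why an intermediate chunk size is the practical choice.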

Redundant Traffic Elimination (RTE)

• Purpose: Use bottleneck link more efficiently

• Basic idea: Use a cache of data chunks to avoid transmitting identical chunks more than once

• Works within and across files

• Combines caching and chunking

• RTE process:

– Divide IP packet into chunks

– Select a subset of chunks

– Store a cache of chunks at two ends of a network link or path

– Transfer only chunks that are not cached

[Figure: a packet containing Chunk A, Chunk B, and Chunk C, annotated with the distance and overlap between chunks, and a chunk cache that maps FP A, FP B, FP C to the corresponding chunks, where FP A = fingerprint (Chunk A)]
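The RTE process can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the system described in the talk: chunks are non-overlapping and 8 bytes long, every chunk is selected, fingerprints are truncated SHA-1 digests, and collisions are ignored. The names `encode`, `decode`, and `fingerprint` are hypothetical.

```python
import hashlib

CHUNK_SIZE = 8  # assumption: tiny chunks so the toy text produces several of them


def fingerprint(chunk: bytes) -> bytes:
    """Short fingerprint of a chunk (assumption: first 8 bytes of SHA-1)."""
    return hashlib.sha1(chunk).digest()[:8]


def encode(data: bytes, seen: set) -> list:
    """Sender side: emit raw chunks the first time, fingerprints afterwards."""
    tokens = []
    for i in range(0, len(data), CHUNK_SIZE):
        chunk = data[i:i + CHUNK_SIZE]
        fp = fingerprint(chunk)
        if fp in seen:
            tokens.append(("ref", fp))     # receiver already holds this chunk
        else:
            seen.add(fp)
            tokens.append(("raw", chunk))  # must cross the link once
    return tokens


def decode(tokens: list, cache: dict) -> bytes:
    """Receiver side: rebuild the data, caching every raw chunk it sees."""
    parts = []
    for kind, value in tokens:
        if kind == "raw":
            cache[fingerprint(value)] = value
            parts.append(value)
        else:
            parts.append(cache[value])
    return b"".join(parts)


if __name__ == "__main__":
    seen, cache = set(), {}
    line = b"Mary had a little lamb\n"
    first = encode(line, seen)
    second = encode(line, seen)
    assert decode(first, cache) == line
    assert decode(second, cache) == line
    print([kind for kind, _ in second])
```

The sender keeps only fingerprints while the receiver keeps the chunks themselves, so the second transmission of the same line consists entirely of references.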

Background on RTE

• Proposed by [Spring and Wetherall 2000]

– Intended to augment Web caching

– Proposed for IP packet level redundancy elimination

– Found up to 54% redundancy in Web traffic

– Applied to high-speed wired links (WAN Optimization)

• Chunking used in storage systems to avoid storing redundant data (data deduplication)

• Can also apply this approach in WLAN context:

– Increasing demand for wireless broadband

– Plenty of CPU power, cheap storage available

– Wireless traffic content similar to wired traffic

– More efficient use of constrained wireless channel

Some References on RTE

• N. Spring and D. Wetherall, “A Protocol-Independent Technique for

Eliminating Redundant Network Traffic”, ACM SIGCOMM 2000

• A. Anand et al., “Packet Caches on Routers: The Implications of

Universal Redundant Traffic Elimination”, ACM SIGCOMM 2008

• A. Anand et al., “Redundancy in Network Traffic: Findings and

Implications”, ACM SIGMETRICS 2009

• A. Anand et al., “SmartRE: An Architecture for Coordinated Network-wide Redundancy Elimination”, ACM SIGCOMM 2009

• B. Aggarwal et al., “EndRE: An End-System Redundancy

Elimination Service for Enterprises”, USENIX NSDI 2010

• E. Halepovic et al., “DYNABYTE: A Dynamic Sampling Algorithm for

Redundant Content Detection”, IEEE ICCCN 2011

• E. Halepovic et al., “Enhancing Redundant Network Traffic

Elimination”, under review, 2011

RTE Process Pipeline

• Improve traditional RTE

• Exploit traffic non-uniformities:

– Packet size (bypass technique)

– Chunk popularity (new cache management scheme)

– Content type (content-aware RTE)

• Up to 50% more detected redundancy

[Figure: flowcharts of the current and proposed RTE pipelines between NIC and forwarding. Current: packet, chunking, fingerprinting, chunk expansion, FIFO cache management. Proposed: adds the decision points “Large enough?”, “Overlap OK?”, and “Content promising?”, and uses non-FIFO cache management.]

Fixed-size Chunks with Overlap

• Traditional RTE uses variable-sized chunks with expansion

– After detecting a chunk match, the matching region is expanded

– Need to store whole packets in cache

– Need full packet cache at both ends of the link

– Constrained to FIFO replacement policy

• Replaced with fixed-size chunks (64 bytes) and overlap

– Store chunks only in cache, not whole packets (less overhead)

– Full cache needed only at receiver, fingerprints only at sender

– Allows alternative cache management schemes

• Benefits of fixed-size chunks with overlap:

– Simpler technique with lower storage overhead

– Detects 9-14% more redundancy compared to 13% with

“expansion”
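One plausible reading of fixed-size chunks with overlap is sketched below. Only the 64-byte chunk size comes from the slide. The sampling rule (keep a window when its CRC-32 is divisible by `SAMPLE_MOD`) and the overlap limit `MAX_OVERLAP` are assumptions made for illustration, and `select_chunks` is a hypothetical name.

```python
import zlib

CHUNK_SIZE = 64   # from the slide
MAX_OVERLAP = 32  # assumption: selected chunks may share at most this many bytes
SAMPLE_MOD = 8    # assumption: keep roughly one window in eight


def select_chunks(payload: bytes) -> list:
    """Return (offset, chunk) pairs of fixed-size, possibly overlapping chunks."""
    selected = []
    last_start = None
    for start in range(len(payload) - CHUNK_SIZE + 1):
        window = payload[start:start + CHUNK_SIZE]
        # Content-based sampling: the same bytes are selected wherever they appear.
        if zlib.crc32(window) % SAMPLE_MOD != 0:
            continue
        # Enforce a minimum distance so consecutive chunks do not overlap too much.
        if last_start is not None and start - last_start < CHUNK_SIZE - MAX_OVERLAP:
            continue
        selected.append((start, window))
        last_start = start
    return selected


if __name__ == "__main__":
    payload = bytes(range(256)) * 4
    for offset, chunk in select_chunks(payload)[:5]:
        print(offset, len(chunk))
```

Because every chunk has the same length, the cache can store chunks instead of whole packets, which is what frees the replacement policy from FIFO order.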

LSA Cache Replacement

• Frequency-based cache replacement (not FIFO)

• Exploit non-uniform chunk popularity

• Replace chunks that contributed least to savings

– Track savings by chunks, not cache hits (overlap)

– New metric: “total bytes saved” per chunk

– LFU-like, may cause cache pollution

– Need “aging” factor: purge entire cache!

• Least Savings with Aging (LSA) improves

detected redundancy by up to 12%
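A minimal sketch of a least-savings cache with aging follows. The eviction metric (total bytes saved per chunk) and the purge-everything form of aging come from the bullets above. The trigger for a purge is not stated in the talk, so purging every `purge_interval` insertions is an assumption, and the class and method names are hypothetical.

```python
class LSACache:
    """Chunk cache that evicts the chunk with the least total bytes saved."""

    def __init__(self, capacity: int, purge_interval: int):
        self.capacity = capacity
        self.purge_interval = purge_interval  # assumption: purge every N insertions
        self.chunks = {}   # fingerprint -> chunk bytes
        self.saved = {}    # fingerprint -> total bytes saved so far
        self.inserts = 0

    def credit(self, fp, nbytes: int) -> None:
        """Record that a match on this chunk avoided sending nbytes."""
        if fp in self.saved:
            self.saved[fp] += nbytes

    def insert(self, fp, chunk: bytes) -> None:
        if fp in self.chunks:
            return
        self.inserts += 1
        if self.inserts % self.purge_interval == 0:
            # Aging: drop everything so stale but once-popular chunks cannot linger.
            self.chunks.clear()
            self.saved.clear()
        elif len(self.chunks) >= self.capacity:
            victim = min(self.saved, key=self.saved.get)
            del self.chunks[victim]
            del self.saved[victim]
        self.chunks[fp] = chunk
        self.saved[fp] = 0


if __name__ == "__main__":
    cache = LSACache(capacity=2, purge_interval=100)
    cache.insert("a", b"A" * 64)
    cache.insert("b", b"B" * 64)
    cache.credit("a", 64)          # chunk "a" has saved 64 bytes, "b" none
    cache.insert("c", b"C" * 64)   # evicts "b", the least valuable chunk
    print(sorted(cache.chunks))
```

Crediting bytes saved instead of counting hits matters when chunks overlap, since one hit may save far fewer than a full chunk's worth of bytes.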

RTE in Wireless Traffic

• Using traces of campus WLAN traffic

• RTE applied to aggregate wireless traffic

– Savings comparable to inbound aggregate campus

traffic, but higher for outbound direction by about 30%

– Why? Inbound traffic mix similar for campus and

WLAN traffic, but differs for outbound (more P2P)

• RTE applied to individual WLAN user traffic

– 65% of users have up to 10% redundancy in traffic

– 30% of users have 10-50% redundant traffic

– 5% of users have 50% or more redundancy

Main Sources of Redundancy

| Type | Value | Description | Example |
|---|---|---|---|
| Nulls | 57.1% | Consecutive null bytes | 0x00000000 |
| Text | 16.7% | Plain text (English) | Gnutella |
| HTTP | 7.3% | HTTP directives | Content-Type: |
| Mixed | 6.2% | Plain text and other chars | 14pt font |
| Binary | 5.8% | Random characters | 0x27c46128 |
| HTML | 3.7% | HTML code fragments | <HTML> <p> |
| Char+1 | 3.2% | Repeated text chars | AAAAAAAz |

Content-Aware RTE

• Improvement techniques are nice, but chunk selection is still random

• Tackle the fundamental problem of RTE: selecting the most redundant data chunks

• Content-based vs. random selection

• Exploit non-uniform content in data traffic

• RTE savings contribution by different data chunks:

– Null strings: 57%

– Text-based: 31%

– Binary, Mixed: 12%

• Select more text-based chunks

• Bypass binary data

Example with 6-byte chunks (1 of 2)

Normal selection:

HTTP/1.1 200 OK<CRLF>

Server: Apache/2.2.11 (Unix)<CRLF>

Last-Modified: Mon, 25 Jan 2010 16:19:01 GMT<CRLF>

ETag: "a7046c-a6e-47dff86f24740"<CRLF>

Accept-Ranges: bytes<CRLF>

Content-Length: 2670<CRLF>

Cache-Control: maxage=3600<CRLF>

X-UA-Compatible: IE=EmulateIE7<CRLF>

Content-Type: image/png<CRLF>

Date: Fri, 29 Jan 2010 19:30:05 GMT<CRLF>

<CRLF><CRLF>‰PNG <CRLF><CRLF>IHDR Œ . ÍÊé gAMA ÖØÔOX2

tEXtSoftware Adobe ImageReadyqÉe< PLTE÷ì|''"áá®×Õ–{{iþú•

òò¼-'$þôrââ°ÆĤóóºþövîï»·«Sš•E¾¾•âÕ\±±ŽþòpKKChhYÿðmåå±YYG“’t’’‚ÿïk„„s

žžƒ<;4ôô¼ÓÔ«¢¢†uudóð¬–

“ŒDD:ëë¹33,÷÷½æ浜šŠÚÚ«\\UJE@ŠŠqÖÕ¦þ÷”»ºšÿón²²•

GECøëjŽŽy÷÷¾–

•zì쵦¦‹““zÝÝ

Example with 6-byte chunks (2 of 2)

Content-aware selection:

HTTP/1.1 200 OK <CRLF>

Server: Apache/2.2.11 (Unix) <CRLF>

Last-Modified: Mon, 25 Jan 2010 16:19:01 GMT <CRLF>

ETag: "a7046c-a6e-47dff86f24740" <CRLF>

Accept-Ranges: bytes <CRLF>

Content-Length: 2670 <CRLF>

Cache-Control: maxage=3600 <CRLF>

X-UA-Compatible: IE=EmulateIE7 <CRLF>

Content-Type: image/png <CRLF>

Date: Fri, 29 Jan 2010 19:30:05 GMT <CRLF>

<CRLF><CRLF>‰PNG <CRLF><CRLF>IHDR Œ . ÍÊé gAMA ÖØÔOX2

tEXtSoftware Adobe ImageReadyqÉe< PLTE÷ì|''"áá®×Õ–{{iþú•

òò¼-,'$þôrââ°ÆĤóóºþövîï»·«Sš•E¾¾•âÕ\±±ŽþòpKKChhYÿðmåå±YYG“’t’’‚ÿïk„„

sžžƒ<;4ôô¼ÓÔ«¢¢†uudóð¬–

“ŒDD:ëë¹33,÷÷½æ浜šŠÚÚ«\\UJE@ŠŠqÖÕ¦þ÷”»ºšÿón²²

GECøëjŽŽy÷÷¾–

•zì쵦¦‹““zÝÝ

Entropy-based bypass

• Lower entropy means higher redundancy

• Select from chunks with entropy of 5.3 or less

• Problem: CPU time required

[Figure: percentage of chunks (0% to 25%) vs. entropy (3 to 6) for HTML, PDF, and MP3 content]
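The entropy test can be sketched as follows, assuming the usual Shannon entropy over byte values, measured in bits per byte. With that definition a 64-byte chunk has entropy between 0 and 6, which matches the range of the plot. The 5.3 threshold is from the slide; the function names are illustrative.

```python
import math
from collections import Counter

ENTROPY_THRESHOLD = 5.3  # from the slide


def entropy(chunk: bytes) -> float:
    """Shannon entropy of the byte values in a chunk, in bits per byte."""
    if not chunk:
        return 0.0
    n = len(chunk)
    return -sum((c / n) * math.log2(c / n) for c in Counter(chunk).values())


def is_candidate(chunk: bytes) -> bool:
    """Keep low-entropy chunks; bypass chunks that look random."""
    return entropy(chunk) <= ENTROPY_THRESHOLD


if __name__ == "__main__":
    print(entropy(b"\x00" * 64))        # all bytes identical
    print(entropy(bytes(range(64))))    # 64 distinct byte values
    print(is_candidate(b"Content-Type: image/png\r\n" * 3))
```

The per-chunk histogram and logarithms are the source of the CPU cost noted above.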

Textiness-based bypass

• “Textiness”: proportion of plain text characters in a chunk

• Computationally simple

• Select from chunks with textiness of at least 0.9

• Modest CPU demands

• Similar RTE savings

[Figure: percentage of chunks (0% to 80%) vs. textiness value (0 to 1.0) for HTML and MP3 content]
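A textiness check needs only one pass over the chunk. In this sketch, “plain text characters” are taken to be printable ASCII plus tab, line feed, and carriage return; that definition is an assumption, since the talk does not spell it out. The 0.9 threshold is from the slide.

```python
# Assumption: printable ASCII plus tab, LF, and CR count as plain text.
TEXT_BYTES = frozenset(range(0x20, 0x7F)) | {0x09, 0x0A, 0x0D}
TEXTINESS_THRESHOLD = 0.9  # from the slide


def textiness(chunk: bytes) -> float:
    """Proportion of bytes in the chunk that are plain text characters."""
    if not chunk:
        return 0.0
    return sum(b in TEXT_BYTES for b in chunk) / len(chunk)


def is_candidate(chunk: bytes) -> bool:
    """Select mostly-text chunks; bypass binary data."""
    return textiness(chunk) >= TEXTINESS_THRESHOLD


if __name__ == "__main__":
    print(textiness(b"Content-Type: image/png\r\n"))
    print(textiness(b"\x89\x00\xff\xfe"))
    print(is_candidate(b"ab\x00\x00"))
```

A membership test per byte is much cheaper than building a histogram and taking logarithms, which is the appeal over the entropy-based bypass.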

RTE Summary

• Improves traditional RTE savings by up to 50%

• Techniques can be used individually or together

• RTE very beneficial for wireless traffic

– 30% of users have 10-50% redundant traffic

• Proposed a novel content-aware RTE

– Improve RTE savings by up to 38%

• Challenges of content-aware RTE

– Needs refinement to be able to work on real traces, or

exploit an appropriate traffic classification scheme

– Needs improvement in execution time

Example 2:

The TCP Incast Problem

Motivation

• Emerging IT paradigms

– Data centers, grid computing, HPC, multi-core

– Cluster-based storage systems, SAN, NAS

– Large-scale data management “in the cloud”

– Data manipulation via “services-oriented computing”

• Cost and efficiency advantages from IT trends,

economy of scale, specialization marketplace

• Performance advantages from parallelism

– Partition/aggregation, Hadoop, multi-core, etc.

– Think RAID at Internet scale! (1000x)

Problem Formulation

How to provide high goodput for data center applications?

• High-speed, low-latency network (RTT ≤ 0.1 ms)

• Highly-multiplexed link (e.g., 1000 flows)

• Highly-synchronized flows on bottleneck link

• Limited switch buffer size (e.g., 100 packets)

Result: TCP retransmission timeouts and TCP throughput degradation

Some References on TCP Incast

• A. Phanishayee et al., “Measurement and Analysis of TCP

Throughput Collapse in Cluster-based Storage Systems”,

Proceedings of FAST 2008

• Y. Chen et al., “Understanding TCP Incast Throughput Collapse in

Datacenter Networks”, WREN 2009

• V. Vasudevan et al., “Safe and Effective Fine-grained TCP

Retransmissions for Datacenter Communication”, SIGCOMM 2009

• M. Alizadeh et al., “Data Center TCP”, ACM SIGCOMM 2010

• A. Shpiner et al., “A Switch-based Approach to Throughput Collapse

and Starvation in Data Centers”, IEEE IWQoS 2010

• M. Podlesny et al., “An Application-Level Solution to the TCP-incast

Problem in Data Center Networks”, IEEE IWQoS 2011

Effect of Timer Granularity

Finer granularity definitely helps a lot!
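A back-of-the-envelope sketch suggests why the retransmission timer matters so much here. All numbers are assumptions chosen for illustration and do not come from the talk: a 1 MB block, a 1 Gb/s link, and exactly one timeout stall per block. The 200 ms value is a commonly used minimum RTO (for example, the Linux default), compared against a hypothetical 1 ms fine-grained timer.

```python
def goodput_mbps(block_bytes: int, link_mbps: float, rto_s: float,
                 timeouts: int = 1) -> float:
    """Goodput of one block transfer that idles for `timeouts` RTO periods."""
    bits = block_bytes * 8
    transfer_s = bits / (link_mbps * 1e6)   # time actually spent sending
    stalled_s = timeouts * rto_s            # time spent waiting for the timer
    return bits / (transfer_s + stalled_s) / 1e6


if __name__ == "__main__":
    block = 1_000_000   # assumption: 1 MB block
    link = 1000.0       # assumption: 1 Gb/s bottleneck link
    for label, rto in (("coarse 200 ms", 0.200), ("fine 1 ms", 0.001)):
        print(f"{label}: {goodput_mbps(block, link, rto):.1f} Mb/s")
```

With an RTT of about 0.1 ms, a 200 ms timer idles the link for thousands of round trips after a single loss, so in this toy model the stall time, not the transfer time, dominates goodput.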

Application-layer Scheduling

Start time of the response from the i-th server:

Solution

Analytical Model

Effect of Number of Servers

• Note non-monotonic behaviour! (ceiling functions)

Summary: TCP Incast Problem

• Data centers have specific network characteristics

• TCP-incast throughput collapse problem emerges

• Solutions:

– Tweak TCP parameters for this environment

– Redesign TCP for this environment

– Rewrite applications for this environment

– Smart edge coordination for uploads/downloads

Example 3:

Speed Scaling Systems

Motivation

• Computer systems performance evaluation

research has traditionally considered throughput,

response time, delay as performance metrics

• In modern computer and communication systems,

energy consumption, dollar cost, and sustainability

are becoming more important

• Dynamic Voltage and Frequency Scaling (DVFS)

is well-supported in modern processors, but not

used particularly effectively

• Growing research interest in “CPU Speed Scaling”

Some References on Speed Scaling

• M. Weiser et al., “Scheduling for Reduced CPU Energy”, OSDI 1994

• F. Yao et al., “A Scheduling Model for Reduced CPU Energy”,

Proceedings of IEEE FOCS 1995

• N. Bansal et al., “Speed Scaling to Manage Energy and

Temperature”, JACM, Vol. 54, 2007

• N. Bansal et al., “Speed Scaling with an Arbitrary Power Function”,

Proceedings of ACM-SIAM SODA 2007

• D. Snowdon et al., “Koala: A Platform for OS-level Power

Management”, Proceedings of ACM EuroSys 2009

• S. Albers, “Energy-Efficient Algorithms”, CACM, May 2010

• L. Andrew et al., “Optimality, Fairness, and Robustness in Speed

Scaling Designs”, Proceedings of ACM SIGMETRICS 2010

• A. Gandhi, “Optimality Analysis of Energy-Performance Tradeoff for

Server Farm Management”, IFIP Performance 2010

A Toy Example

• Consider 5 jobs (with no specific deadlines)

• Scheduling policies:

– FCFS, PS, SRPT

• Simple simulator of single-CPU system

• Plot number of active jobs in system vs time

• Plot number of active bytes in system vs time

| Job (i) | Arrival (Ai) | Size (Si) |
|---|---|---|
| 0 | 1.0 | 5 |
| 1 | 2.2 | 2 |
| 2 | 2.8 | 3 |
| 3 | 3.5 | 1 |
| 4 | 4.7 | 4 |
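A single-CPU simulator for this toy workload fits in a short event-driven loop. The sketch below is not the simulator used for the talk. It reports completion times instead of plotting, it runs at unit speed by default, and the `speed_fn` hook (speed as a function of the number of active jobs) is a hypothetical way to experiment with speed scaling.

```python
JOBS = [(1.0, 5), (2.2, 2), (2.8, 3), (3.5, 1), (4.7, 4)]  # (arrival, size)
EPS = 1e-9


def simulate(jobs, policy, speed_fn=lambda n_active: 1.0):
    """Return the completion time of each job under FCFS, PS, or SRPT."""
    remaining = [size for _, size in jobs]
    done = [None] * len(jobs)
    order = sorted(range(len(jobs)), key=lambda i: jobs[i][0])
    nxt = 0        # index into order of the next job to arrive
    active = []    # job ids in the system, in arrival order
    t = 0.0
    while nxt < len(order) or active:
        if not active:                       # idle: jump to the next arrival
            t = max(t, jobs[order[nxt]][0])
        while nxt < len(order) and jobs[order[nxt]][0] <= t + EPS:
            active.append(order[nxt])
            nxt += 1
        if policy == "FCFS":
            served = [active[0]]
        elif policy == "SRPT":
            served = [min(active, key=lambda i: remaining[i])]
        elif policy == "PS":
            served = list(active)            # equal share for every active job
        else:
            raise ValueError(f"unknown policy: {policy}")
        rate = speed_fn(len(active)) / len(served)
        # Advance to the next event: a completion or the next arrival.
        dt = min(remaining[i] for i in served) / rate
        if nxt < len(order):
            dt = min(dt, jobs[order[nxt]][0] - t)
        for i in served:
            remaining[i] -= rate * dt
        t += dt
        for i in list(active):
            if remaining[i] <= EPS:
                done[i] = t
                active.remove(i)
    return done


def mean_response(jobs, done):
    return sum(d - a for (a, _), d in zip(jobs, done)) / len(jobs)


if __name__ == "__main__":
    for policy in ("FCFS", "PS", "SRPT"):
        finish = simulate(JOBS, policy)
        print(policy, [round(f, 2) for f in finish],
              round(mean_response(JOBS, finish), 2))
```

At unit speed all three policies finish the last job at the same time, because each is work-conserving; they differ in how the waiting is distributed across jobs.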

No Speed Scaling

[Figure: results for FCFS, PS, and SRPT]

Dynamic Speed Scaling

[Figure: results for FCFS, PS, and SRPT]

Speed Scaling Summary

• A widely-applicable problem

– Small-scale: desktops, multi-core, wireless devices

– Large-scale: enterprise networks, data centers

• Mechanisms available, but policies unclear

• Interesting tradeoffs between fairness,

efficiency, cost, optimality, and robustness

• Much more work remains to be done

– Universal fairness metrics

– Worst-case bounds vs average-case performance

– Dynamic energy pricing...

Concluding Remarks

• We need “smart edge” devices to enhance the performance, functionality, security, and efficiency of the Internet (now more than ever!)

[Figure: two end-system protocol stacks (Application, Transport, Network, Data Link, Physical) connected through the core network]

Future Outlook and Opportunities

• Traffic classification

• QoS management

• Load balancing

• Security and privacy

• Cloud computing

• Virtualization everywhere

• Cognitive radio networks

• Smart Applications on Virtual Infrastructure (SAVI)

For More Information

• C. Williamson, “The Edge of Smartness”,

Workshop on Data Center Performance

(DCPerf 2011), Minneapolis, MN, June 2011

• Web site: http://www.cpsc.ucalgary.ca/~carey

• Email: carey@cpsc.ucalgary.ca

• Questions and Discussion?
