Apriori Algorithm

advertisement

Big Data Analysis

Technology

University of Paderborn

L.079.08013 Seminar: Cloud Computing and Big

Data Analysis (in English)

Summer semester 2013

June 12, 2013

Tobias Hardes (6687549) –

Tobias.Hardes@gmail.com

Table of content

Introduction

Definitions

Background

Example

Related Work

Research

Main Approaches

Association Rule Mining

MapReduce Framework

Conclusion

June 12, 2013

2

4 Big keywords

June 12, 2013

3

Big Data vs. Business Intelligence

How can we predict cancer

early enough to treat it

successfully?

How Can I make significant

profit on the stock market next

month?

Docs.oralcle.com

Which is the most profitable branch of

our supermarket?

In a specific country?

During a specific period of time

June 12, 2013

4

Background

home.web.cern.ch

June 12, 2013

5

Big Science – The LHC

600 million times per second, particles collide within the Large

Hadron Collider (LHC)

Each collision generate new particles

Particles decay in complex way

Each collision is detected

The CERN Data Center reconstruct this collision event

15 petabytes of data stored every year

Worldwide LHC Computing Grid (WLCG) is

used to crunch all of the data

home.web.cern.ch

June 12, 2013

6

Data Stream Analysis

- Just in time analysis of data.

- Sensor networks

- Analysis for a certain time (last 30 seconds)

http://venturebeat.com

June 12, 2013

7

Complex event processing (CEP)

- Provides queries for streams

- Usage of „Event Processing Languages“ (EPL)

- select avg(price) from

StockTickEvent.win:time(30 sec)

Tumbling Window

(Slide = WindowSize)

Window

Sliding Window

(Slide < WindowSize)

https://forge.fi-ware.eu

Slide

June 12, 2013

8

Complex Event Processing - Areas of

application

- Just in time analysis Complexity of algorithms

- CEP is used with Twitter:

- Identify emotional states of users

- Sarcasm?

June 12, 2013

9

Related Work

June 12, 2013

10

Big Data in companies

June 12, 2013

11

Principles

- Statistics

- Probability theory

- Machine learning

Data Mining

- Association rule learning

- Cluster analysis

- Classificiation

June 12, 2013

12

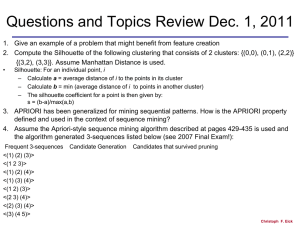

Association Rule Mining – Cluster analysis

Association Rule Mining

Is soda purchased

with bananas?

-Relationships between items

-Find associations,

correlations or causal

structures

-Apriori algorithm

-Frequent Pattern (FP)-Growth

algorithm

June 12, 2013

13

Cluster analysis – Classification

Cluster Analysis

-Classification of similar

objects into classes

-Classes are defined during

the clustering

-k-Means

-K-Means++

June 12, 2013

14

Research and future work

- Performance, performance, performance…

-

Passes of the data source

Parallelization

NP-hard problems

….

- Accuracy

- Optimized solutions

June 12, 2013

16

Example

- Apriori algorithm: n+1 database scans

- FP-Growth algorithm: 2 database scans

June 12, 2013

17

Distributed computing – Motivation

- Complex computational tasks

- Serveral terabytes of data

- Limited hardware resources

Prof. Dr. Erich Ehses (FH Köln)

Google‘s MapReduce framework

June 12, 2013

18

Main approaches

http://ultraoilforpets.com

June 12, 2013

20

Structure

- Association rule mining

- Apriori algorithm

- FP-Growth algorithm

- Googles MapReduce

June 12, 2013

21

Association rule mining

- Identify items that are related to other items

- Example: Analysis of baskets in an online shop

or in a supermarket

http://img.deusm.com/

June 12, 2013

22

Terminology

- A stream or a database with n elements: S

- Item set: 𝑰 = {𝒊𝟏 , 𝒊𝟐 , … , 𝒊𝒏 }

- Frequency of occurrence of an item set: Φ(A)

- Association rule 𝑨

B:

𝑨 ∈ 𝑰 𝒂𝒏𝒅 𝑩 ∈ 𝑰, 𝑨 ∩ 𝑩 = ∅

- Support: 𝐬𝐮𝐩 𝑨 =

𝜱(𝑨)

|𝑺|

- Confidence: 𝒄𝒐𝒏𝒇(𝑨

B) =

𝒔𝒖𝒑(𝑨 ∪𝑩)

𝒔𝒖𝒑(𝑨)

June 12, 2013

23

Example

- Rule:

„If a basket contains cheese and chocolate,

then it also contains bread“

𝐶ℎ𝑒𝑒𝑠𝑒, 𝑐ℎ𝑜𝑐𝑜𝑙𝑎𝑡𝑒

{𝑏𝑟𝑒𝑎𝑑}

- 6 of 60 transactions contains cheese and

𝜱(𝑨)

𝟔

chocolate 𝒔𝒖𝒑 𝑨 =

= = 𝟏𝟎%

|𝑺|

𝟔𝟎

- 3 of the 6 transactions contains bread

𝒄𝒐𝒏𝒇(𝑨

B) =

𝒔𝒖𝒑 𝑨 ∪𝑩

𝒔𝒖𝒑 𝑨

=

𝟑

𝟔

= 𝟓𝟎%

June 12, 2013

24

Common approach

- Disjoin the problem into two tasks:

1. Generation of frequent item sets

•

Find item sets that satisfy a minimum support value

𝜱(𝑨)

𝐦𝐢𝐧_𝐬𝐮𝐩 ≤ 𝐬𝐮𝐩 𝑨 =

|𝑺|

2. Generation of rules

•

Find Confidence rules using the item sets

𝒎𝒊𝒏_𝒄𝒐𝒏𝒇 ≤ 𝒄𝒐𝒏𝒇(𝑨

𝒔𝒖𝒑(𝑨 ∪ 𝑩)

B) =

𝒔𝒖𝒑(𝑨)

June 12, 2013

25

Aprio algorithm – Frequent item set

Input:

Minimum support:

Datasource:

min_sup

S

June 12, 2013

26

Apriori – Frequent item sets (I)

Generation of frequent item sets : min_sup = 2

TID Transaction

1

(B,C)

2

(B,C)

3

(A,C,D)

4

(A,B,C,D)

5

(B,D)

22

A1

B12

43

C 42

1

3

D3

1

2

https://www.mev.de/

{}

June 12, 2013

27

Apriori – Frequent item sets (II)

Generation of frequent item sets : min_sup = 2

TID Transaction

1

(B,C)

2

(B,C)

3

(A,C,D)

4

(A,B,C,D)

5

(B,D)

ACD 2

Candidates

Candidates

AB 1

AC 2

A2

BCD 1

AD 2

BC 3

BD 2

B4

C4

D3

L3

CD 2

L2

L1

https://www.mev.de/

{}

June 12, 2013

28

Apriori Algorithm – Rule generation

-

Uses frequent item sets to extract high-confidence rules

-

Based on the same principle as the item set generation

-

Done for all

frequent item set Lk

June 12, 2013

29

Example: Rule generation

TID

Items

T1

{Coffee; Pasta; Milk}

T2

{Pasta; Milk}

T3

{Bread; Butter}

T4

{Coffee; Milk; Butter}

T5

{Milk; Bread; Butter}

sup 𝐵𝑢𝑡𝑡𝑒𝑟

conf 𝐵𝑢𝑡𝑡𝑒𝑟

𝐵𝑢𝑡𝑡𝑒𝑟

𝑀𝑖𝑙𝑘

Φ(𝐵𝑢𝑡𝑡𝑒𝑟 ∪ 𝑀𝑖𝑙𝑘) 2

𝑀𝑖𝑙𝑘 =

= = 40%

|𝑆|

5

𝑠𝑢𝑝(𝐵𝑢𝑡𝑡𝑒𝑟 ∪ 𝑀𝑖𝑙𝑘) 40%

𝑀𝑖𝑙𝑘 =

=

= 66%

sup(𝐵𝑢𝑡𝑡𝑒𝑟)

60%

June 12, 2013

30

Summary Apriori algorithm

- n+1 scans of the database

- Expensive generation of the candidate item set

- Implements level-wise search using frequent

item property.

- Easy to implement

- Some opportunities for specialized optimizations

June 12, 2013

31

FP-Growth algorithm

- Used for databases

- Features:

1.

2.

Requires 2 scans of the database

Uses a special data structure – The FP-Tree

Build the FP-Tree

Extract frequent item sets

- Compression of the database

- Devide this database and apply data mining

June 12, 2013

32

Construct FP-Tree

TID

Items

1

{a,b}

2

{b,c,d}

3

{a,c,d,e}

4

{a,d,e}

5

{a,b,c}

6

{a,b,c,d}

7

{a}

8

{a,b,c}

9

{a,b,d}

10

{b,c,e}

d:1

June 12, 2013

33

Extract frequent itemsets (I)

- Bottom-up strategy

- Start with node „e“

- Then look for „de“

- Each path is processed

recursively

- Solutions are merged

June 12, 2013

34

Extract frequent itemsets (II)

-

Is e frequent?

- Is de frequent?

- …

- Is ce frequent?

- ….

- Is be frequent?

- ….

- Is ae frequent?

- …..

Using subproblems to identify

frequent itemsets

Φ(e) = 3 – Assume the minimum support was set to 2

June 12, 2013

35

Extract frequent itemsets (III)

1. Update the support count along the prefix

path

2. Remove Node e

3. Check the frequency of the paths

Find item sets with

de, ce, ae or be

June 12, 2013

36

Apriori vs. FP-Growth

- FP-Growth has some advantages

-

Two scans of the database

No expensive computation of candidates

Compressed datastructure

Easier to parallelize

W. Zhang, H. Liao, and N. Zhao, “Research on the fp growth algorithm

about association rule mining

June 12, 2013

37

MapReduce

- Map and Reduce functions are expressed by a

developer

- map(key, val)

- Emits new key-values p

- reduce(key, values)

- Emits an arbitrary output

- Usually a key with one value

June 12, 2013

45

MapReduce – Word count

June 12, 2013

46

User

Programm

(1)fork

(1)fork

(7) return

(1)fork

Master

(2) assign

(4) local write

(3) read

(2) assign

(5) RPC

worker

Worker for blue keys

worker

worker

worker

worker

Worker for red keys

worker

worker

worker

Input files

Map

phase

Intermediate

files

Worker for yellow keys

Reduce

Shuffle

phase

Output files

Conclusion: MapReduce (I)

- MapReduce is design as a batch processing

framework

- No usage for ad-hoc analysis

- Used for very large data sets

- Used for time intensive computations

- OpenSource implementation: Apache Hadoop

http://hadoop.apache.org/

June 12, 2013

48

Conclusion

June 12, 2013

49

Conclusion (I)

- Big Data is important for research and in daily

business

- Different approaches

- Data Stream analysis

- Complex event processing

- Rule Mining

- Apriori algorithm

- FP-Growth algorithm

June 12, 2013

50

Conclusion (II)

- Clustering

- K-Means

- K-Means++

- Distributed computing

- MapReduce

- Performance / Runtime

-

Multiple minutes

Hours

Days…

Online analytical processing for Big Data?

June 12, 2013

51

Thank you

for your attention

Appendix

Big Data definitions

Every day, we create 2.5

quintillion bytes of …. .

Big data is high-volume, high-velocity

This data comes from

and high-variety information assets

everywhere: sensors used to

that demand cost-effective, innovative

gather climate information,

posts to social media sites,

forms of information processing for

digital pictures and videos,

enhanced insight and decision making.

purchase transaction

(Gartner Inc.)

records, and cell phone GPS

signals to name a few. This

data is big data.

(IBM Corporate )

Big data” refers to datasets whose size is

beyond the ability of typical database software

tools to capture, store, manage, and analyze.

(McKinsey & Company)

June 12, 2013

54

Big Data definitions

Every day, we create 2.5

quintillion bytes of …. .

Big data is high-volume, high-velocity

This data comes from

and high-variety information assets

everywhere: sensors used

that demand cost-effective, innovative

to gather climate

information, posts to social

forms of information processing for

media sites, digital pictures

enhanced insight and decision making.

and videos, purchase

(Gartner Inc.)

transaction records, and cell

phone GPS signals to name

a few. This data is big data.

(IBM Corporate )

Big data” refers to datasets whose size is

beyond the ability of typical database software

tools to capture, store, manage, and analyze.

(McKinsey & Company)

June 12, 2013

55

Complex Event Processing – Windows

Tumbling Window

Sliding Window

-Moves as much as the

window size

-Slides in time

Tumbling Window

(Slide = WindowSize)

Window

-Buffers the last x elements

Sliding Window

(Slide < WindowSize)

Slide

June 12, 2013

56

MapReduce vs. BigQuery

June 12, 2013

57

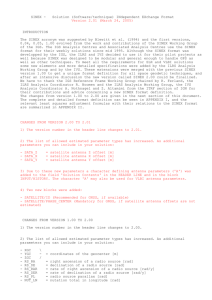

Apriori Algorithm (Pseudocode)

-

𝑳𝟏 ← 𝒊𝒕𝒆𝒎𝒔𝒆𝒕𝒔

-

for (𝑘 = 2; 𝐿𝑘−1 ; 𝑘 + +) 𝐝𝐨

-

𝐶𝑘 = 𝑎𝑝𝑟𝑖𝑜𝑟𝑖𝐺𝑒𝑛 𝐿𝑘−1

-

for each 𝐼 ∈ 𝑆 do

-

𝐶𝑟 ← 𝑠𝑢𝑏𝑠𝑒𝑡(𝐶𝑘 , 𝐼)

-

for each 𝑐 ∈ 𝐶𝑟 do

-

-

𝑐. 𝑐𝑜𝑢𝑛𝑡 + +

end for

-

end for

-

if 𝑐. 𝑐𝑜𝑢𝑛𝑡 ≥ 𝑚𝑖𝑛 _𝑠𝑢𝑝 then

-

-

𝐿𝑘 = 𝐿 𝑘 ∪ 𝑐

end if

-

end for

-

return 𝑳𝒌

June 12, 2013

58

Apriori Algorithm (Pseudocode)

-

𝑳𝟏 ← 𝒊𝒕𝒆𝒎𝒔𝒆𝒕𝒔

-

for (𝑘 = 2; 𝐿𝑘−1 ; 𝑘 + +) 𝐝𝐨

-

𝐶𝑘 = 𝑎𝑝𝑟𝑖𝑜𝑟𝑖𝐺𝑒𝑛 𝐿𝑘−1

-

for each 𝐼 ∈ 𝑆 do

-

𝐶𝑟 ← 𝑠𝑢𝑏𝑠𝑒𝑡(𝐶𝑘 , 𝐼)

-

for each 𝑐 ∈ 𝐶𝑟 do

-

-

𝑐. 𝑐𝑜𝑢𝑛𝑡 + +

end for

-

end for

-

if 𝑐. 𝑐𝑜𝑢𝑛𝑡 ≥ 𝑚𝑖𝑛 _𝑠𝑢𝑝 then

-

-

𝐿𝑘 = 𝐿 𝑘 ∪ 𝑐

end if

-

end for

-

return 𝑳𝒌

June 12, 2013

59

Apriori Algorithm (Pseudocode)

-

𝑳𝟏 ← 𝒊𝒕𝒆𝒎𝒔𝒆𝒕𝒔

-

for (𝑘 = 2; 𝐿𝑘−1 ; 𝑘 + +) 𝐝𝐨

-

𝐶𝑘 = 𝑎𝑝𝑟𝑖𝑜𝑟𝑖𝐺𝑒𝑛 𝐿𝑘−1

-

for each 𝐼 ∈ 𝑆 do

-

𝐶𝑟 ← 𝑠𝑢𝑏𝑠𝑒𝑡(𝐶𝑘 , 𝐼)

-

for each 𝑐 ∈ 𝐶𝑟 do

-

-

𝑐. 𝑐𝑜𝑢𝑛𝑡 + +

end for

-

end for

-

if 𝑐. 𝑐𝑜𝑢𝑛𝑡 ≥ 𝑚𝑖𝑛 _𝑠𝑢𝑝 then

-

-

𝐿𝑘 = 𝐿 𝑘 ∪ 𝑐

end if

-

end for

-

return 𝑳𝒌

June 12, 2013

60

Apriori Algorithm (Pseudocode)

-

𝑳𝟏 ← 𝒊𝒕𝒆𝒎𝒔𝒆𝒕𝒔

-

for (𝑘 = 2; 𝐿𝑘−1 ; 𝑘 + +) 𝐝𝐨

-

𝐶𝑘 = 𝑎𝑝𝑟𝑖𝑜𝑟𝑖𝐺𝑒𝑛 𝐿𝑘−1

-

for each 𝐼 ∈ 𝑆 do

-

𝐶𝑟 ← 𝑠𝑢𝑏𝑠𝑒𝑡(𝐶𝑘 , 𝐼)

-

for each 𝑐 ∈ 𝐶𝑟 do

-

-

𝑐. 𝑐𝑜𝑢𝑛𝑡 + +

end for

-

end for

-

if 𝑐. 𝑐𝑜𝑢𝑛𝑡 ≥ 𝑚𝑖𝑛 _𝑠𝑢𝑝 then

-

-

𝐿𝑘 = 𝐿 𝑘 ∪ 𝑐

end if

-

end for

-

return 𝑳𝒌

June 12, 2013

61

June 12, 2013

62

Distributed computing of Big Data

CERN‘s Worldwide LHC Computing Grid (WLCG) launched in

2002

Stores, distributes and analyse the 15 petabytes of data

140 centres across 35 countries

June 12, 2013

63

Apriori Algorithm – 𝑎𝑝𝑟𝑖𝑜𝑟𝑖𝐺𝑒𝑛 Join

- Do not generate not too many candidate item

sets, but making sure to not lose any that do turn

out to be large.

- Assume that the items are ordered (alphabetical)

- {a1, a2 , … ak-1} = {b1, b2 , … bk-1}, and ak < bk,

{a1, a2 , … ak, bk} is a candidate k+1-itemset.

June 12, 2013

64

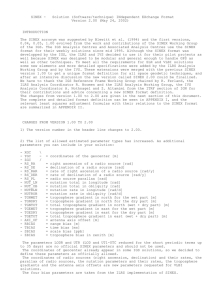

Big Data vs. Business Intelligence

Big Data

Business Intelligence

Large and complex data sets

Temporal, historical, …

Difficult to process and to

analyse

Used for deep analysis and

reporting:

How can we predict cancer

early enough to treat it

successfully?

How Can I make significant

profit on the stock market

next month?

Transformed Data

Historical view

Easy to process and to

analyse

Used for reporting:

Which is the most profitable

branch of our supermarket?

Which postcodes suffered

the most dropped calls in

July?

June 12, 2013

65

Improvement approaches

- Selection of startup parameters for algorithms

- Reducing the number of passes over the database

- Sampling the database

- Adding extra constraints for patterns

- Parallelization

June 12, 2013

66

Improvement approaches – Examples

June 12, 2013

67

Example: FA-DMFI

- Algorithm for Discovering frequent item sets

- Read the database once

- Compress into a matrix

- Frequent item sets are generated by cover relations

Further costly computations are avoided

June 12, 2013

68

K-Means algorithm

1. Select k entities as the initial centroids.

2. (Re)Assign all entities to their closest centroids.

3. Recompute the centroid of each newly

assembled cluster.

4. Repeat step 2 and 3 until the centroids do not

change or until the maximum value for the

iterations is reached

June 12, 2013

69

Solving approaches

- K-Means cluster is NP-hard

- Optimization methods to handle NP-hard

problems (K-Means clustering)

June 12, 2013

70

Examples

- Apriori algorithm: n+1 database scans

- FP-Growth algorithm: 2 database scans

- K-Means: Exponential runtime

- K-Means++: Improve startup parameters

June 12, 2013

71

Google‘s BigQuery

Upload

Upload the data set

to the Google

Storage

http://glenn-packer.net/

Process

Import data to tables

Analyse

Run queries

June 12, 2013

72

The Apriori algorithm

- Most known algorithm for rule mining

- Based on a simple principle:

- „If an item set is frequent, then all subsets of this

item are also frequent“

- Input:

- Minimum confidence: min_conf

- Minimum support:

min_sup

- Data source:

S

June 12, 2013

73

Apriori Algorithm – aprioriGen

- Generates a candidate item set 𝐿𝑘+1 that might

by larger

- Join: Generation of the item set

- Prune: Elimination of item sets with

support(𝐼𝑗 ) < 𝑚𝑖𝑛 _𝑠𝑢𝑝

June 12, 2013

74

Apriori Algorithm – Rule generation -- Example

- {Butter, milk, bread} {cheese}

- {Butter, meat, bread} {cola}

{Butter, bread} {cheese, cola}

June 12, 2013

75

How to improve the Apriori algorithm

- Hash-based itemset counting: A k-itemset

whose corresponding hashing bucket count is

below the threshold cannot be frequent.

- Sampling: mining on a subset of given data

- Dynamic itemset counting:

June 12, 2013

76

Construction of FP-Tree

- Compressed representation of the database

- First scan

- Get the support of every item and sort them by the

support count

- Second scan

- Each transaction is mapped to a path

- Compression is done if overlapping path are

detected

- Generate links between same nodes

- Each node has a counter Number of mapped

transactions

June 12, 2013

77

FP-Growth algorithm

Calculate the

support count of

each item in S

Sort items in

decreasing support

counts

Read transaction t

hasNext

Create new nodes

labeled with the

items in t

Set the frequency

count to 1

No overlapped

prefix found

Overlapped prefix

found

Increment the

frequency count for

each overlapped

item

Create new nodes

for none overlapped

items

Create additional

path to common

items

return

June 12, 2013

78