Linking Science to Technology Vol. 2 Methodological Framework


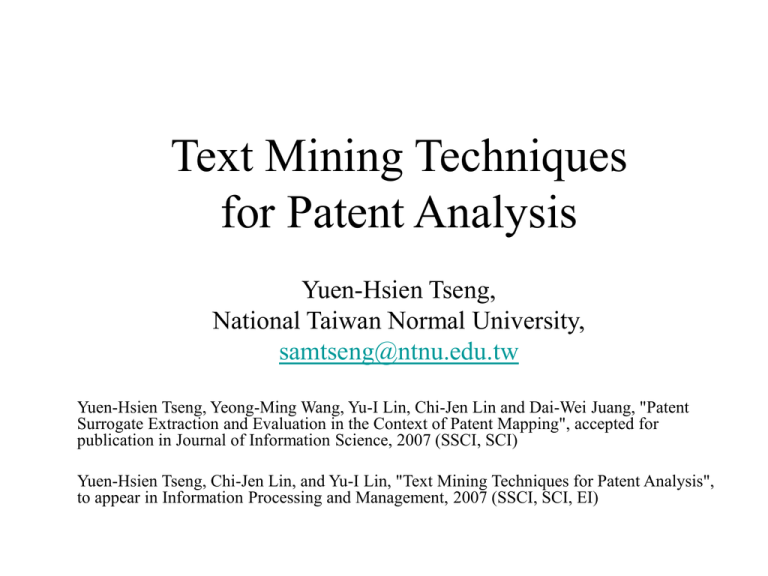

Text Mining Techniques for Patent Analysis
Yuen-Hsien Tseng, National Taiwan Normal University, samtseng@ntnu.edu.tw

• Yuen-Hsien Tseng, Yeong-Ming Wang, Yu-I Lin, Chi-Jen Lin and Dai-Wei Juang, "Patent Surrogate Extraction and Evaluation in the Context of Patent Mapping", accepted for publication in Journal of Information Science, 2007 (SSCI, SCI)
• Yuen-Hsien Tseng, Chi-Jen Lin, and Yu-I Lin, "Text Mining Techniques for Patent Analysis", to appear in Information Processing and Management, 2007 (SSCI, SCI, EI)

Outline
• Introduction
• A General Methodology
• Technique Details
• Technique Evaluation
• Application Example
• Discussions
• Conclusions

Introduction – Why Patent Analysis?
• Patent documents contain 90% of research results
  – valuable to the following communities: industry, business, law, and policy-making
• If carefully analyzed, they can:
  – reduce R&D time and cost by 60% and 40%, respectively
  – show technological details and relations
  – reveal business trends
  – inspire novel industrial solutions
  – help make investment policy

Introduction – Gov. Efforts
• Patent analysis (PA) has received much attention since 2001
  – Korea: to develop 120 patent maps in 5 years
  – Japan: patent mapping competition in 2004
  – Taiwan: more and more patent maps (PM) have been created
• Example: "carbon nanotube" (CNT)
  – 5 experts dedicated more than 1 month
• Asian countries, such as China, Japan, Korea, Singapore, and Taiwan, have invested various resources in patent analysis
• PA requires a lot of human effort
  – Assisting tools are greatly needed

Typical Patent Analysis Scenario
1. Task identification: define the scope, concepts, and purposes of the analysis task.
2. Searching: iteratively search, filter, and download related patents.
3. Segmentation: segment, clean, and normalize the structured and unstructured parts.
4. Abstracting: analyze the patent contents to summarize their claims, topics, functions, or technologies.
5. Clustering: group or classify the analyzed patents based on some extracted attributes.
6. Visualization: create technology-effect matrices or topic maps.
7. Interpretation: predict technology or business trends and relations.

Technology-Effect Matrix
• To make decisions about future technology development
  – by seeking opportunities in the sparse cells
• To inspire novel solutions
  – by understanding how patents are related, so as to learn how novel solutions were invented in the past and can be invented in the future
• To predict business trends
  – by showing the trend distribution of major competitors in this map
[Table: Part of the T-E matrix (from STIC) for "Carbon Nanotube". The technology and effect (function) headings include Gas reaction, Manufacture, Catalyst, Arc discharging, Application, Display, Material, Carbon nanotube, Performance, Purity, Electricity, Product, and FED; cells list US patent numbers such as 5346683, 6129901, 5424054, 5780101, 6181055, 6190634, 6221489, 6333016, 6331262, 6232706, 6339281, 5916642, 6346775, 5889372, 5967873, …]

Topic Map of Carbon Nanotube
25 docs. : 0.228054 (emission:180.1, field:177.2, emitter:157.1, cathode:108.4, field emission: 88.0)
  + 23 docs. : 0.424787 (emitter:187.0, emission:141.9, field:141.4, cathode:129.0, field emission:104.7)
    + 19 docs. : 0.693770 (emitter:139.7, field emission:132.0, cathode: 96.0, electron: 67.1, display: 61.9)
      + ID=2 : 7 docs., 0.09 (cathode:0.58, source:0.56, display:0.50, field emission:0.45, vacuum:0.43)
      + ID=1 : 12 docs., 0.07 (emitter:0.67, emission:0.60, field:0.57, display:0.40, cathode:0.38)
    + ID=11 : 4 docs., 0.13 (chemic vapor deposition:0.86, sic:0.56, grow:0.44, plate:0.42, thicknes:0.42)
  + ID=19 : 2 docs., 0.21 (electron-emissive:1.00, carbon film:0.70, compromise:0.70, emissive material ...
13 docs. : 0.240830 (energy: 46.8, circuit: 34.0, junction: 33.3, device: 26.0, element: 24.9)
  + 9 docs. : 0.329811 (antenna: 31.0, energy: 29.5, system: 29.4, electromagnetic: 25.0, granular: 20.6)
    + ID=4 : 5 docs., 0.07 (wave:0.77, induc:0.58, pattern:0.45, nanoscale:0.44, molecule:0.35)
    + ID=15 : 4 docs., 0.12 (linear:0.86, antenna:0.86, frequency:0.74, optic antenna:0.70, …)
  + ID=10 : 4 docs., 0.06 (cool:0.70, sub-ambient:0.70, thermoelectric cool apparatuse:0.70, nucleate:0.70, ...

Text Mining – Definition
• Knowledge discovery is often regarded as a process of finding implicit, previously unknown, and potentially useful patterns
  – Data mining: from structured databases
  – Text mining: from a large text repository
• In practice, text mining (TM) involves a series of user interactions with text mining tools to explore the repository and find such patterns.
• Once supplemented with additional information and interpreted by experienced experts, these patterns can become important intelligence for decision-making.

Text Mining Process for Patent Analysis

A General Methodology
• Document preprocessing
  – Collection creation
  – Document parsing and segmentation
  – Text summarization
  – Document surrogate selection
• Indexing
  – Keyword/phrase extraction
  – Morphological analysis
  – Stop word filtering
  – Term association and clustering
• Topic clustering
  – Term selection
  – Document clustering/categorization
  – Cluster title generation
  – Category mapping
• Topic mapping
  – Trend map
  – Query map
  – Aggregation map
  – Zooming map

Example: A US Patent Document
• See the example, or this URL:
  – http://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO1&Sect2=HITOFF&d=PALL&p=1&u=%2Fnetahtml%2FPTO%2Fsrchnum.htm&r=1&f=G&l=50&s1=5,695,734.PN.&OS=PN/5,695,734&RS=PN/5,695,734

Download and Parsing into DBMS

NSC Patents
• 612 US patents whose assignee contains "National Science Council", downloaded on 2005/06/15

Distribution of NSC Patents
[Chart: number of NSC patents (0–120) per application year (Apply_Year, 1987–2003), stacked by series labelled A–H]

Document Parsing and Segmentation
• Data conversion
  – Parsing unstructured texts and citations into structured fields in a DBMS
• Document segmentation
  – Partition the full patent texts into 6 segments: abstract, application, task, summary, feature, claim
  – Only 9 empty segments among the 6×92 = 552 CNT patent segments => 1.63%
  – Only 79 empty segments among the 6×612 = 3672 NSC patent segments => 2.15%

NPR (Non-Patent Reference) Parsing for Most-Frequently Cited Journals and Citation Age Distribution
JouTitle | CitedCount
Appl. Phys. Lett. | 23
Plant Molecular Biology | 22
IEEE Electron Device Letters | 20
Nature | 17
Bio/Technology | 16
Journal of Virology | 15
J. Biological Chemistry | 11
IEEE | 11
Plant Physiol. | 10
IEEE Journal of Solid-State Circuits | 10
J. Appl. Phys. | 9
Macromolecules | 8
Mol. Gen. Genet. | 8
J. Electroanal. Chem. | 8
Proc. Nat'l Acad. Sci. | 8
J. Chem. Soc. | 8
Science | 8
Applied Optics | 8
Electronics Letters | 7
Jpn. J. Appl. Phys. | 7
[Chart: Citation Age Distribution: number of patents (0–25) vs. citation age (0–13), with series labelled by year, 1988–2002]
Data are for 612 NSC patents.

Automatic Summarization
• Segment the doc. into paragraphs and sentences
• Assess sentences, considering their
  – positions
  – clue words
  – title words
  – keywords
• Select sentences
  – Sort by weight and select the top-k sentences
• Assemble the selected sentences
  – Concatenate the sentences in their original order
Clue words: advantage, avoid, cost, costly, decrease, difficult, effectiveness, efficiency, goal, important, improved, increase, issue, limit, needed, overhead, performance, problem, reduced, resolve, shorten, simplify, suffer, superior, weakness
Sentence weight:
$$ weight(S) = \left( \sum_{w \in keywords \cup title\_words} \frac{tf_w}{avgtf} \right) \times FS \times \prod_{w \in clue\_words} P $$
where the sum runs over the keywords and title words occurring in S, tf_w is the term frequency of w, avgtf is an average term frequency used for normalization, and FS and P are the factors for the sentence's position and for its clue words, respectively.

Example: Auto-summarization, MS Word (blue) vs. Ours (red)
TITLE (Patent No.: 6,862,710) Internet navigation using soft hyperlinks
BACKGROUND OF THE INVENTION
Many existing systems have been developed to enable a user to navigate through a set of documents, in order to find one or more of those documents which are particularly relevant to that user's immediate needs. For example, HyperText Mark-Up Language (HTML) permits web page designers to construct a web page that includes one or more "hyperlinks" (also sometimes referred to as "hot links"), which allow a user to "click-through" from a first web page to other, different web pages. Each hyperlink is associated with a portion of the web page, which is typically displayed in some predetermined fashion indicating that it is associated with a hyperlink. While hyperlinks do provide users with some limited number of links to other web pages, their associations to the other web pages are fixed, and cannot dynamically reflect the state of the overall web with regard to the terms that they are associated with. Moreover, because the number of hyperlinks within a given web page is limited, when a user desires to obtain information regarding a term, phrase or paragraph that is not associated with a hyperlink, the user must employ another technique. One such existing technique is the search engine.
Search engines enable a user to search the World Wide Web ("Web") for documents related to a search query provided by the user. Typical search engines operate through a Web Browser interface. Search engines generally require the user to enter a search query, which is then compared with entries in an "index" describing the occurrence of terms in a set of documents that have been previously analyzed, for example by a program referred to sometimes as a "web spider". Entry of such a search query requires the user to provide terms that have the highest relevance to the user as part of the search query. However, a user generally must refine his or her search query multiple times using ordinary search engines, responding to the search results from each successive search. Such repeated searching is time consuming, and the format of the terms within each submitted query may also require the user to provide logical operators in a non-natural language format to express his or her search. For the above reasons, it would be desirable to have a system for navigating through a document set, such as the Web, which allows a user to freely search for documents related to terms, phrases or paragraphs within a web page without relying on hyperlinks within the web page. The system should further provide a more convenient technique for internet navigation than is currently provided by existing search engine interfaces.
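The sentence-scoring steps of the Automatic Summarization slide can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the reconstructed weight formula (keyword/title-word term frequencies normalized by avgtf, times a position factor FS, times P for each clue word), and the values fs=2.0 and p=1.5, the regex-based sentence splitting, and the choice of avgtf as the mean document frequency of the scoring words are all hypothetical.

```python
import re
from collections import Counter

# Clue words listed on the "Automatic Summarization" slide.
CLUE_WORDS = {
    "advantage", "avoid", "cost", "costly", "decrease", "difficult",
    "effectiveness", "efficiency", "goal", "important", "improved",
    "increase", "issue", "limit", "needed", "overhead", "performance",
    "problem", "reduced", "resolve", "shorten", "simplify", "suffer",
    "superior", "weakness",
}


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


def summarize(text, keywords, title_words, k=3, fs=2.0, p=1.5):
    """Extractive summary sketch.

    fs: hypothetical boost for the first sentence of a paragraph (position).
    p:  hypothetical multiplier applied once per clue-word occurrence.
    """
    doc_tf = Counter(tokenize(text))
    scoring_words = {w.lower() for w in keywords} | {w.lower() for w in title_words}
    # Assumed normalizer: mean document tf of the scoring words that occur.
    present = [doc_tf[w] for w in scoring_words if doc_tf[w] > 0]
    avgtf = sum(present) / len(present) if present else 1.0

    # (original index, sentence, is-first-sentence-of-paragraph)
    sentences = []
    for para in re.split(r"\n\s*\n", text.strip()):
        for j, sent in enumerate(re.split(r"(?<=[.!?])\s+", para.strip())):
            if sent:
                sentences.append((len(sentences), sent, j == 0))

    def weight(sent, first):
        words = tokenize(sent)
        base = sum(doc_tf[w] for w in words if w in scoring_words) / avgtf
        clue_hits = sum(1 for w in words if w in CLUE_WORDS)
        return base * (fs if first else 1.0) * (p ** clue_hits)

    # Sort by weight and keep the top-k sentences.
    top = sorted(sentences, key=lambda s: weight(s[1], s[2]), reverse=True)[:k]
    # Reassemble the selected sentences in their original order.
    return " ".join(sent for _, sent, _ in sorted(top))
```

For example, `summarize(background_text, ["hyperlinks", "search"], ["internet", "navigation", "soft", "hyperlinks"], k=3)` would return the three highest-weighted sentences of a background section, in document order. Multiplying by P once per clue word is one reading of the product term; an additive bonus would be an equally plausible design.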
Evaluation of Each Segment
• abs: the 'Abstract' section of each patent
• app: FIELD OF THE INVENTION
• task: BACKGROUND OF THE INVENTION
• sum: SUMMARY OF THE INVENTION
• fea: DETAILED DESCRIPTION OF THE PREFERRED EMBODIMENT
• cla: Claims section of each patent
• seg_ext: summaries from each of the sets abs, app, task, sum, and fea
• full: full texts from each of the sets abs, app, task, sum, and fea

Evaluation Goal
• Analyze a human-crafted patent map to see which segments contain more important terms
• Purposes:
  – allow analysts to spot the relevant segments more quickly when classifying patents in the map
  – provide insights that may improve automated clustering and/or categorization when creating the map

Evaluation Method
• In the manual creation of a technology-effect matrix, it is helpful to be able to quickly spot the keywords that can be used to classify the patents in the map.
• Once the keywords or category features are found, patents can usually be classified without reading the whole text.
• Thus a segment or summary that retains as many important category features as possible is preferable.
• Our evaluation is therefore designed to reveal which segments contain the most such features compared to the others.

Patent Maps for Evaluation
Abbr. | Patent Map | Num. of Doc. | Num. of Cat. in the Tech. Taxonomy | Num. of Cat. in the Effect Taxonomy
CNT | Carbon Nanotube | 92 | 21 | 9
QDF | Quantum-Dot Fluorescein Detection | 11 | 5 | 6
QDL | Quantum-Dot LED | 27 | 10 | 5
QDO | Quantum-Dot Optical Sensor | 19 | 10 | 3
NTD | Nano Titanium Dioxide | 417 | 17 | 22
MCM | Molecular Motors | 79 | 21 | 9
All patent maps are from STPI.

Empty segments in the six patent maps
Map | abs | app | task | sum | fea | cla | Total empty segments | Total segments | Empty rate
CNT | 0 | 1 | 2 | 5 | 1 | 0 | 9 | 552 | 1.63%
QDF | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 66 | 0.00%
QDL | 0 | 0 | 0 | 1 | 1 | 0 | 2 | 162 | 1.23%
QDO | 0 | 1 | 1 | 2 | 0 | 0 | 4 | 114 | 3.51%
NTD | 0 | 62 | 74 | 85 | 103 | 0 | 324 | 2502 | 12.95%
MCM | 0 | 1 | 2 | 1 | 1 | 0 | 5 | 474 | 1.05%

Feature Selection
• Well studied in machine learning
• Best feature selection algorithms
  – Chi-square, information gain, …
• But to select only a few features, the correlation coefficient is better than chi-square
Contingency table for term T and category C:
Category C \ Term T | Yes | No
Yes | TP | FN
No | FP | TN
$$ \chi^2(T,C) = \frac{(TP \cdot TN - FN \cdot FP)^2}{(TP+FN)(FP+TN)(TP+FP)(FN+TN)} $$
$$ Co(T,C) = \frac{TP \cdot TN - FN \cdot FP}{\sqrt{(TP+FN)(FP+TN)(TP+FP)(FN+TN)}} $$
• Co = 1 if FN = FP = 0, TP ≠ 0, and TN ≠ 0
• Since χ²(T,C) = Co(T,C)², chi-square discards the sign: it gives a term the same score for a category and for its complement, whereas the correlation coefficient separates positively from negatively associated terms (see the table below).

Best and worst terms by chi-square and correlation coefficient
Chi-square, Construction | Chi-square, Non-Construction | Correlation coefficient, Construction | Correlation coefficient, Non-Construction
engineering 0.6210 | engineering 0.6210 | engineering 0.7880 | equipment 0.2854
improvement 0.1004 | improvement 0.1004 | improvement 0.3169 | procurement 0.2231
… | … | … | …
kitchen 0.0009 | kitchen 0.0009 | communiqué -0.2062 | improvement -0.3169
update 0.0006 | update 0.0006 | equipment -0.2854 | engineering -0.7880
Data are from a small real-world collection of 116 documents with only two exclusive categories, construction vs. non-construction, in civil engineering tasks.

Some feature terms and their distribution in each set for the category FED in CNT
sc(term): number of sets in which the term is listed; ss(term): sum of the term's correlation coefficients over those sets; rel: "yes" for terms judged relevant. Each segment cell lists an integer (unlabelled in the extracted slide) followed by the term's correlation coefficient co(term) in that segment.
term | sc | ss | abs | app | task | sum | fea | cla | seg_ext | full | rel
emit | 8 | 4.86 | 12 / 0.62 | 13 / 0.58 | 21 / 0.55 | 17 / 0.59 | 22 / 0.70 | 14 / 0.61 | 20 / 0.63 | 27 / 0.59 | yes
emission | 8 | 5.07 | 20 / 0.69 | 17 / 0.59 | 31 / 0.62 | 21 / 0.73 | 34 / 0.63 | 20 / 0.63 | 33 / 0.64 | 40 / 0.54 | yes
display | 8 | 5.06 | 9 / 0.50 | 12 / 0.62 | 22 / 0.64 | 14 / 0.61 | 24 / 0.71 | 10 / 0.62 | 23 / 0.68 | 34 / 0.68 |
cathode | 8 | 3.86 | 12 / 0.39 | 9 / 0.42 | 27 / 0.48 | 14 / 0.54 | 30 / 0.53 | 15 / 0.51 | 25 / 0.52 | 41 / 0.47 |
For the following terms, the segment positions of the cells were not recoverable; values are listed in their original order:
pixel | 7 | 3.14 | 3 / 0.33, 8 / 0.46, 3 / 0.33, 12 / 0.62, 2 / 0.27, 5 / 0.43, 17 / 0.72 |
screen | 5 | 1.74 | 2 / 0.27, 2 / 0.27, 8 / 0.37, 18 / 0.43, 19 / 0.41 | yes
electron | 5 | 1.71 | 27 / 0.31, 25 / 0.40, … | yes
voltage | 4 | 1.48 | 52 / 0.39, … |
Note: The correlation coefficients in each segment correlate with the set counts of the ordered features: the larger the set count, the larger the correlation coefficient in each segment.

Occurrence distribution of 30 top-ranked terms in each set for some categories in CNT
category | T_No | abs | app | task | sum | fea | cla | seg_ext | full
Carbon nanotube | 30 | 15/50.0% | 12/40.0% | 14/46.7% | 20/66.7% | 13/43.3% | 19/63.3% | 20/66.7% | 13/43.3%
FED | 30 | 16/53.3% | 14/46.7% | 22/73.3% | 19/63.3% | 21/70.0% | 19/63.3% | 21/70.0% | 22/73.3%
device | 30 | 21/70.0% | 17/56.7% | 9/30.0% | 16/53.3% | 7/23.3% | 19/63.3% | 17/56.7% | 8/26.7%
Derivation | 30 | 14/46.7% | 6/20.0% | 7/23.3% | 11/36.7% | 8/26.7% | 13/43.3% | 13/43.3% | 11/36.7%
electricity | 30 | 12/40.0% | 10/33.3% | 10/33.3% | 10/33.3% | 8/26.7% | 8/26.7% | 13/43.3% | 12/40.0%
purity | 30 | 12/40.0% | 12/40.0% | 7/23.3% | 20/66.7% | 9/30.0% | 17/56.7% | 18/60.0% | 14/46.7%
High surface area | 30 | 19/63.3% | 13/43.3% | 13/43.3% | 17/56.7% | 8/26.7% | 9/30.0% | 16/53.3% | 8/26.7%
magnetic | 30 | 18/60.0% | 11/36.7% | 6/20.0% | 14/46.7% | 14/46.7% | 13/43.3% | 15/50.0% | 13/43.3%
energy storage | 30 | 16/53.3% | 17/56.7% | 13/43.3% | 17/56.7% | 6/20.0% | 10/33.3% | 21/70.0% | 12/40.0%
M_Best_Term_Coverage(Segment, Category), i.e., the fraction of the M best terms of category c that occur in segment s:
$$ MBTC(s,c) = \frac{1}{M} \sum_{term \in s \cap c} 1 $$

Occurrence distribution of manually ranked terms in each set for some categories in CNT
category | T_No | abs | app | task | sum | fea | cla | seg_ext | full
Carbon nanotube | 4 | 3/75.0% | 2/50.0% | 2/50.0% | 3/75.0% | 2/50.0% | 2/50.0% | 3/75.0% | 2/50.0%
FED | 7 | 6/85.7% | 6/85.7% | 6/85.7% | 4/57.1% | 6/85.7% | 4/57.1% | 6/85.7% | 5/71.4%
device | 2 | 2/100.0% | 1/50.0% | 0/0.0% | 1/50.0% | 1/50.0% | 2/100.0% | 1/50.0% | 0/0.0%
electricity | 2 | 2/100.0% | 2/100.0% | 2/100.0% | 2/100.0% | 1/50.0% | 2/100.0% | 0/0.0% | 0/0.0%
purity | 2 | 2/100.0% | 2/100.0% | 0/0.0% | 1/50.0% | 1/50.0% | 1/50.0% | 0/0.0% | 1/50.0%
High surface area | 8 | 6/75.0% | 2/25.0% | 3/37.5% | 5/62.5% | 1/12.5% | 2/25.0% | 4/50.0% | 1/12.5%
magnetic | 5 | 3/60.0% | 1/20.0% | 2/40.0% | 1/20.0% | 3/60.0% | 0/0.0% | 4/80.0% | 3/60.0%
energy storage | 2 | 2/100.0% | 2/100.0% | 1/50.0% | 2/100.0% | 1/50.0% | 1/50.0% | 2/100.0% | 0/0.0%
R_Best_Term_Coverage(Segment, Category), i.e., the fraction of the R human-judged relevant terms of category c that occur in segment s:
$$ RBTC(s,c) = \frac{1}{R} \sum_{term \in s \cap c_{rel}} 1 $$

Occurrence distribution of terms in each segment averaged over all categories in CNT
(nc: number of categories; nt: number of terms per category)
Taxonomy | nc | nt | abs | app | task | sum | fea | cla | seg_ext | full
Effect | 9 | M=30 | 52.96% | 41.48% | 37.41% | 53.33% | 34.81% | 47.04% | 57.04% | 41.85%
Effect* | 8 | 4 | 86.96% | 66.34% | 45.40% | 64.33% | 51.03% | 54.02% | 55.09% | 30.49%
Tech | 21 | M=30 | 49.37% | 25.56% | 26.51% | 56.51% | 34.44% | 46.51% | 56.03% | 40.95%
Tech* | 17 | 4.5 | 59.28% | 29.77% | 23.66% | 49.43% | 34.46% | 60.87% | 44.64% | 32.17%
$$ MBTC(s) = \frac{1}{|Cat|} \sum_{c \in Cat} \frac{1}{M} \sum_{term \in s \cap c} 1 \qquad RBTC(s) = \frac{1}{|Cat|} \sum_{c \in Cat} \frac{1}{R} \sum_{term \in s \cap c_{rel}} 1 $$

Maximum correlation coefficients in each set averaged over all categories in CNT
Taxonomy | nc | nt | abs | app | task | sum | fea | cla | seg_ext | full
Effect | 9 | M=30 | 0.58 | 0.49 | 0.54 | 0.55 | 0.55 | 0.57 | 0.56 | 0.55
Effect* | 8 | 4.0 | 0.52 | 0.43 | 0.39 | 0.52 | 0.48 | 0.47 | 0.44 | 0.33
Tech | 21 | M=30 | 0.64 | 0.58 | 0.65 | 0.66 | 0.66 | 0.67 | 0.68 | 0.68
Tech* | 17 | 4.5 | 0.47 | 0.29 | 0.34 | 0.44 | 0.35 | 0.51 | 0.43 | 0.42
*: denotes values calculated from human-judged relevant terms

[Chart: Term-covering rates for the M best terms (M = 10, 30, 50) for the effect taxonomy in CNT, per set: abs, app, task, sum, fea, cla, seg_ext, full]
[Chart: Term-covering rates for the M best terms (M = 10, 30, 50) for the technology taxonomy in CNT, per set: abs, app, task, sum, fea, cla, seg_ext, full]
[Charts: Term-covering rates for the M best terms (M = 10, 30, 50) per set (abs through full) for QDF: Quantum Dot Fluorescein Detection, QDL: Quantum Dot LED, QDO: Quantum Dot Optical Sensor, NTD: Nano Titanium Dioxide, and MCM: Molecular Motors]

Findings
• Most important category features (ICFs) ranked by correlation coefficient occur in the "segment extracts", the Abstract section, and the SUMMARY OF THE INVENTION section.
• Most ICFs selected by humans occur in the Abstract section or the Claims section.
• The "segment extracts" lead to more top-ranked ICFs than the "full texts", regardless of whether the category features are selected manually or automatically.
• According to the correlation coefficient, the ICFs selected automatically are better at discriminating a document's categories than those selected manually.

Implications
• Text summarization techniques help in patent analysis and organization, whether performed automatically or manually.
• To determine a patent's category quickly from only a few terms, one should first read the Abstract section and the SUMMARY OF THE INVENTION section
• Alternatively, one should first read the "segment extracts" prepared by a computer

Text Mining Process for Patent Analysis
• Document preprocessing
  – Collection creation
  – Document parsing and segmentation
  – Text summarization
  – Document surrogate selection
• Indexing
  – Keyword/phrase extraction
  – Morphological analysis
  – Stop word filtering
  – Term association and clustering
• Topic clustering
  – Term selection
  – Document clustering/categorization
  – Cluster title generation
  – Category mapping
• Topic mapping
  – Trend map
  – Query map
  – Aggregation map
  – Zooming map

Ideal Indexing for Topic Identification
[Figure: layered diagram relating indexing units (alphabet, word, phrase, term, concept, category), processing steps (byte isolation, character identification, word segmentation and word disambiguation, phrase extraction, POS tagging, morphological analysis with a stemmer, syntactic processing with a grammar analyzer, concept processing by clustering and feature extraction, category classification, understanding), and human-prepared semantic resources (language knowledge, lexicon, tagged corpus, linguistic knowledge, thesaurus/WordNet, training data, existing DB)]
No processing may result in low recall; more processing may cause false drops.

Example: Extracted Keywords and Their Associated Terms
1. Yuen-Hsien Tseng, Chi-Jen Lin, and Yu-I Lin, "Text Mining Techniques for Patent Analysis", to appear in Information Processing and Management, 2007 (SSCI and SCI)
2. Yuen-Hsien Tseng, "Automatic Cataloguing and Searching for Retrospective Data by Use of OCR Text", Journal of the American Society for Information Science and Technology, Vol. 52, No. 5, April 2001, pp. 378-390. (SSCI and SCI)

Clustering Methods
• Clustering is a powerful technique for detecting topics and their relations in a collection.
• Clustering techniques:
  – HAC: Hierarchical Agglomerative Clustering
  – K-means
  – MDS: Multi-Dimensional Scaling
  – SOM: Self-Organizing Map
• Many open source packages are available
  – A similarity measure must be defined to use them
• Similarities
  – Co-words: common words shared between items
  – Co-citations: common citations between items

Document Clustering
• The effectiveness of clustering relies on
  – how terms are selected
    • affects effectiveness the most
    • automatic, manual, or hybrid
    • users have more confidence in the clustering results if they select the terms themselves, but this is costly
    • manual verification of the selected terms is recommended whenever possible
    • recent trends:
      – Text clustering with extended user feedback, SIGIR 2006
      – Near-duplicate detection by instance-level constrained clustering, SIGIR 2006
  – how terms are weighted
    • Boolean or TF×IDF
  – how similarities are measured
    • Cosine, Dice, Jaccard, etc.
• Direct HAC document clustering may be prohibitive due to its complexity, since it needs the similarity of every pair of documents

Term Clustering
• Single terms are often ambiguous; a group of near-synonym terms can be more specific in topic
• Goal: reduce the number of terms for ease of topic detection, concept identification, generation of a classification hierarchy, or trend analysis
• Term clustering followed by document categorization
  – allows large collections to be clustered
• Methods:
  – Keywords: maximally repeated words or phrases, extracted by a patented algorithm (Tseng, 2002)
  – Related terms: keywords that often co-occur with other keywords, extracted by association mining (Tseng, 2002)
  – Simset: a set of keywords having common related terms, extracted by term clustering

Multi-Stage Clustering
• Single-stage clustering easily produces a skewed distribution
• Ideally, in multi-stage clustering, terms or documents can be clustered into concepts, which in turn can be clustered into topics or domains.
• In practice, we need to browse the whole topic tree to find the desired concepts or topics.
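The document-clustering choices listed above (Boolean or TF×IDF weighting; cosine, Dice, or Jaccard similarity) and the complete-link grouping later applied to the NSC patents can be sketched as follows. This is a naive, minimal sketch assuming pre-tokenized documents and a hypothetical stopping threshold; it is not the tool used in the study. Its repeated all-pairs comparisons also show why direct HAC over a large collection can be prohibitive.

```python
import math
from collections import Counter
from itertools import combinations


def tfidf(docs):
    """TF x IDF vectors (dicts) for a list of token lists."""
    n = len(docs)
    df = Counter(t for d in docs for t in set(d))
    return [{t: tf * math.log(n / df[t]) for t, tf in Counter(d).items()} for d in docs]


def cosine(a, b):
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def dice(a, b):
    # Boolean weighting: only term presence (the dict keys) matters.
    sa, sb = set(a), set(b)
    return 2 * len(sa & sb) / (len(sa) + len(sb)) if sa or sb else 0.0


def jaccard(a, b):
    sa, sb = set(a), set(b)
    return len(sa & sb) / len(sa | sb) if sa or sb else 0.0


def complete_link(vectors, sim, threshold):
    """Agglomerative clustering with complete linkage.

    Cluster-to-cluster similarity is the *minimum* pairwise item similarity;
    merging stops when no pair of clusters reaches `threshold` (hypothetical).
    """
    pair_sim = {(i, j): sim(vectors[i], vectors[j])
                for i, j in combinations(range(len(vectors)), 2)}

    def s(i, j):
        return pair_sim[(min(i, j), max(i, j))]

    clusters = [[i] for i in range(len(vectors))]
    while len(clusters) > 1:
        best, best_pair = -1.0, None
        for x, y in combinations(range(len(clusters)), 2):
            link = min(s(i, j) for i in clusters[x] for j in clusters[y])
            if link > best:
                best, best_pair = link, (x, y)
        if best < threshold:
            break
        x, y = best_pair  # x < y, so deleting y leaves index x valid
        clusters[x] += clusters[y]
        del clusters[y]
    return clusters
```

Usage: `complete_link(tfidf(token_lists), cosine, 0.2)` returns clusters as lists of document indices. Complete linkage tends to produce compact clusters because a single dissimilar pair blocks a merge; single or average linkage would be drop-in alternatives in the same loop.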
[Figure: multi-stage clustering hierarchy: terms or docs. → concepts → topics]

Cluster Descriptors Generation
• One important step in helping analysts interpret the clustering results is to generate a summary title or cluster descriptors for each cluster.
• CC (correlation coefficient) is used
• But CC^0.5 or CC×TFC yield better results
• See
  – Yuen-Hsien Tseng, Chi-Jen Lin, Hsiu-Han Chen and Yu-I Lin, "Toward Generic Title Generation for Clustered Documents," Proceedings of Asia Information Retrieval Symposium, Oct. 16-18, Singapore, pp. 145-157, 2006. (Lecture Notes in Computer Science, Vol. 4182, SCI)

Mapping Cluster Descriptors to Categories
• More generic title words cannot be generated automatically
  – 'Furniture' is a generic term for beds, chairs, tables, etc. But if 'furniture' does not occur in the documents, there is no way to yield it as a title word unless additional knowledge resources, such as thesauri, are used
• See also Tseng et al., AIRS 2006

Search WordNet for Cluster Class
• Using an external resource to get cluster categories
  – For each of the 352 (0.005) or 328 (0.001) simsets generated from 2714 terms
  – Submit the simset heads to WordNet to get their hypernyms (upper-level hypernyms as categories)
  – Accumulate the occurrences of each of these categories
  – Rank these categories by occurrence
  – Select the top-ranked categories as candidates for topic analysis
  – These top-ranked categories still need manual filtering
  – Current results are not satisfactory
• Next, try searching scientific literature databases that support topic-based search and that have the needed categories

Mapping Cluster Titles to Categories
• Search Stanford's InfoMap
  – http://infomap.stanford.edu/cgi-bin/semlab/infomap/classes/print_class.pl?args=$term1+$term2
• Search WordNet directly
  – Results similar to InfoMap
  – Higher recall, lower precision than InfoMap
  – Yields meaningful results only when the terms are of high quality
• Search Google Directory: http://directory.google.com/
  – Often yields: "Your search did not match any documents."
  – Or a wrong category:
    • Ex1: submit "CMOS dynamic logics", get 'Computers > Programming > Languages > Directories'
    • Ex2: submit "laser, wavelength, beam, optic, light", get 'Business > Electronics and Electrical > Optoelectronics and Fiber' and 'Health > Occupational Health and Safety > Lasers'
• Searching WordNet yields better results, but they are still unacceptable
D:\demo\File>perl -s wntool.pl
=>0.1816 : device%1
=>0.1433 : actinic_radiation%1 actinic_ray%1
=>0.1211 : signal%1 signaling%1 sign%3
=>0.0980 : orientation%2
=>0.0924 : vitality%1 verve%1

NSC Patents
• 612 US patents whose assignee is NSC
• NSC sponsors most academic research
  – and owns the patents resulting from that research
• Documents in the collection are
  – knowledge-diversified (cover many fields)
  – long (2000 words on average)
  – full of advanced technical details
• Hard for any single analyst to analyze them
• This motivates the need to generate generic titles

Text Mining from NSC Patents
• Download NSC patents from the USPTO with assignee = National Science Council
• Automatic key-phrase extraction
  – Terms that occur more than once can be extracted
• Automatic segmentation and summarization
  – 20072 keywords from full texts vs. 19343 keywords from the 5 segment summaries
  – The 5 segment abstracts contain more category-specific terms than full texts (Tseng, 2005)
• Automatic index compilation
  – The occurrence frequency of each term in each document was recorded
  – More than 500,000 terms (words, phrases, digits) among the 612 documents were recorded in 72 seconds

Text Mining from NSC Patents: Clustering Methods
• Term similarity is based on common co-occurrence terms
  – Phrases and co-occurrence terms are extracted with Tseng's algorithm [JASIST 2002]
• Document similarity is based on common terms
• The complete-link method is used to group similar items

Cluster Info.
ID=180, sim=0.19, descriptors: standard:0.77, mpeg:0.73, audio:0.54
Term | DF | Co-occurrence Terms
AUDIO | 9 | standard, high-fidelity, MPEG, technique, compression, signal, Multi-Channel
MPEG | 4 | standard, algorithm, AUDIO, layer, audio decoding, architecture
audio decoding | 3 | MPEG, architecture
standard | 31 | AUDIO, MPEG
compression | 29 | apparatus, AUDIO, high-fidelity, Images, technique, TDAC, high-fidelity audio, signal, arithmetic coding

Term Clustering of NSC Patents
• Results:
  – From 19343 keywords, remove those with df > 200 (36) or df = 1 (12330), and those that have no related terms (4263), resulting in 2714 terms
    • The number of terms with df > 5 is 2800
  – 352 (0.005) or 328 (0.001) simsets were generated from the 2714 terms
• Good cluster (format: term : df : related terms):
  – 180 : 5 terms, 0.19 (standard:0.77, mpeg:0.73, audio:0.54)
  – AUDIO : 9 : standard, high-fidelity, MPEG, technique, compression, signal, Multi-Channel.
  – MPEG : 4 : standard, algorithm, AUDIO, layer, audio decoding, architecture.
  – audio decoding : 3 : MPEG, architecture.
  – standard : 31 : AUDIO, MPEG.
  – compression : 29 : apparatus, AUDIO, high-fidelity, Images, technique, TDAC, high-fidelity audio, signal, arithmetic coding.
• Wrong cluster:
  – 89 : 6 terms, 0.17 (satellite:0.71, communicate:0.54, system:0.13)
  – satellite : 8 : nucleotides, express, RNAs, vector, communication system, phase, plant, communism, foreign gene.
  – RNAs : 5 : cDNA, Amy8, nucleotides, alpha-amylase gene, Satellite RNA, sBaMV, analysis, quinoa, genomic, PAT1, satellite, Lane, BaMV, transcription.
  – foreign gene : 4 : express, vector, Satellite RNA, plant, satellite, ORF.
  – electrical power : 4 : satellite communication system.
  – satellite communication system : 2 : electrical power, microwave.
  – communication system : 23 : satellite.

Topic Map for NSC Patents
• Third-stage document clustering result
[Figure: topic map with six clusters: 1. Chemistry, 2. Electronics and Semi-conductors, 3. Generality, 4. Communication and computers, 5. Material, 6. Biomedicine]

Topic Tree for NSC Patents
1: 122 docs. : 0.201343 (acid:174.2, polymer:166.8, catalyst:155.5, ether:142.0, formula:135.9)
  * 108 docs. : 0.420259 (polymer:226.9, acid:135.7, alkyl:125.2, ether:115.2, formula:110.7)
    o 69 docs. : 0.511594 (resin:221.0, polymer:177.0, epoxy:175.3, epoxy resin:162.9, acid: 96.7)
      + ID=131 : 26 docs. : 0.221130 (polymer: 86.1, polyimide: 81.1, aromatic: 45.9, bis: 45.1, ether: 44.8)
      + ID=240 : 43 docs. : 0.189561 (resin:329.8, acid: 69.9, group: 57.5, polymer: 55.8, monomer: 44.0)
    o ID=495 : 39 docs. : 0.138487 (compound: 38.1, alkyl: 37.5, agent: 36.9, derivative: 33.6, formula: 24.6)
  * ID=650 : 14 docs. : 0.123005 (catalyst: 88.3, sulfide: 53.6, iron: 21.2, magnesium: 13.7, selective: 13.1)
2: 140 docs. : 0.406841 (silicon:521.4, layer:452.1, transistor:301.2, gate:250.1, substrate:248.5)
  * 123 docs. : 0.597062 (silicon:402.8, layer:343.4, transistor:224.6, gate:194.8, schottky:186.0)
    o ID=412 : 77 docs. : 0.150265 (layer:327.6, silicon:271.5, substrate:178.8, oxide:164.5, gate:153.1)
    o ID=90 : 46 docs. : 0.2556 (layer:147.1, schottky:125.7, barrier: 89.6, heterojunction: 89.0, transistor: …
  * ID=883 : 17 docs. : 0.103526 (film: 73.1, ferroelectric: 69.3, thin film: 48.5, sensor: 27.0, capacitor: 26.1)
3: 66 docs. : 0.220373 (plastic:107.1, mechanism: 83.5, plate: 79.4, rotate: 74.9, force: 73.0)
  * 54 docs. : 0.308607 (plastic:142.0, rotate:104.7, rod: 91.0, screw: 85.0, roller: 80.8)
    o ID=631 : 19 docs. : 0.125293 (electromagnetic: 32.0, inclin: 20.0, fuel: 17.0, molten: 14.8, side: 14.8)
    o ID=603 : 35 docs. : 0.127451 (rotate:100.0, gear: 95.1, bear: 80.0, member: 77.4, shaft: 75.4)
  * ID=727 : 12 docs. : 0.115536 (plasma: 26.6, wave: 22.3, measur: 13.3, pid: 13.0, frequency: 11.8)
4: 126 docs. : 0.457206 (output:438.7, signal:415.5, circuit:357.9, input:336.0, frequency:277.0)
  * 113 docs. : 0.488623 (signal:314.0, output:286.8, circuit:259.7, input:225.5, frequency:187.9)
    o ID=853 : 92 docs. : 0.105213 (signal:386.8, output:290.8, circuit:249.8, input:224.7, light:209.7)
    o ID=219 : 21 docs. : 0.193448 (finite: 41.3, data: 40.7, architecture: 38.8, computation: 37.9, algorithm: …
  * ID=388 : 13 docs. : 0.153112 (register: 38.9, output: 37.1, logic: 32.2, addres: 28.4, input: 26.2)
5: 64 docs. : 0.313064 (powder:152.3, nickel: 78.7, electrolyte: 74.7, steel: 68.6, composite: 64.7)
  * ID=355 : 12 docs. : 0.1586 (polymeric electrolyte: 41.5, electroconductive: 36.5, battery: 36.1, electrode: ...
  * ID=492 : 52 docs. : 0.138822 (powder:233.3, ceramic:137.8, sinter: 98.8, aluminum: 88.7, alloy: 63.2)
6: 40 docs. : 0.250131 (gene:134.9, protein: 77.0, cell: 70.3, acid: 65.1, expression: 60.9)
  * ID=12 : 11 docs. : 0.391875 (vessel: 30.0, blood: 25.8, platelet: 25.4, dicentrine: 17.6, inhibit: 16.1)
  * ID=712 : 29 docs. : 0.116279 (gene:148.3, dna: 66.5, cell: 65.5, sequence: 65.1, acid: 62.5)
Total: 558 docs.

Major IPC Categories for NSC Patents
A: 87 docs. : Human Necessities
  + A61: 71 docs. : Medical Or Veterinary Science; Hygiene
  + A*: 16 docs. : A01(7), A21(2), A23(2), A42(2), A03(1), A62(1), A63(1)
B: 120 docs. : Performing Operations; Transporting
  + B01: 25 docs. : Physical Or Chemical Processes Or Apparatus In General
  + B05: 28 docs. : Spraying Or Atomising In General; Applying Liquids Or Other Fluent Materials To Surfaces
  + B22: 17 docs. : Casting; Powder Metallurgy
  + B*: 50 docs. : B32(12), B29(11), B62(6), B23(4), B24(4), B60(4), B02(2), B21(2), B06(1), B25(1), …
C: 314 docs. : Chemistry; Metallurgy
  + C07: 62 docs. : Organic Chemistry
  + C08: 78 docs. : Organic Macromolecular Compounds; Their Preparation Or Chemical Working-Up; …
  + C12: 76 docs. : Biochemistry; Beer; Wine; Vinegar; Microbiology; Mutation Or Genetic Engineering; …
  + C*: 98 docs. : C23(22), C25(20), C01(19), C04(10), C09(10), C22(8), C03(5), C30(3), C21(1)
D: 6 docs. : Textiles; Paper
E: 8 docs. : Fixed Constructions
F: 30 docs. : Mechanical Engineering; Lighting; Heating; …
G: 134 docs. : Physics
  + G01: 49 docs. : Measuring; Testing
  + G02: 28 docs. : Optics
  + G06: 29 docs. : Computing; Calculating; Counting
  + G*: 28 docs. : G10(7), G11(7), G05(6), G03(5), G08(2), G09(1)
H: 305 docs. : Electricity
  + H01: 216 docs. : Basic Electric Elements
    o H01L021: 92 docs. : Processes or apparatus adapted for the manufacture or treatment of semiconductor …
    o H01L029: 35 docs. : Semiconductor devices adapted for rectifying, amplifying, oscillating, or switching; …
    o H01L*: 89 docs. : others
    o H01*: 53 docs. : H03K(23), H03M(11), H04B(10), H04L(7), H04N(7), H01B(5), H03H(4), H04J(4), …
  + H03: 51 docs. : Basic Electronic Circuitry
  + H04: 30 docs. : Electric Communication Technique
  + H*: 8 docs. : H05(5), H02(3)

Division Distributions of NSC Patents
Abbrev. | Division | Percentage
Ele | Electrical Engineering | 28.63%
Che | Chemical Engineering | 14.70%
Mat | Material Engineering | 14.12%
Opt | Opto-Electronics | 13.15%
Med | Medical Engineering | 10.44%
Mec | Mechanical Engineering | 6.58%
Bio | Biotechnology Engineering | 5.03%
Com | Communication Engineering | 2.90%
Inf | Information Engineering | 2.90%
Civ | Civil Engineering | 1.16%
Others | | 0.39%
Total | | 100.00%

Distribution of Major IPC Categories in Each Cluster
[Charts: one pie chart per cluster. Cluster 1: C07, C08, C*, A61, B01, B05, B*, H*, F, others; Cluster 2: H01L021, H01L029, G01, C*, H, others; Cluster 3: B*, B22, A*, E, H*; Cluster 4: G01, G02, G06, G*, H03, H04, H*, F, others; Cluster 5: B01, B22, C07, C*, H*, others; Cluster 6: A61, C12]

Distribution of Academic Divisions in Each Cluster
[Charts: one pie chart per cluster (Clusters 1–6) showing the shares of the NSC divisions Ele, Che, Mat, Opt, Med, Mec, Bio, Com, Inf, and Civ]

Comparison among the Three Methods
• The three classification systems provide different facets for understanding the topic distribution of the patent set.
• Each may reveal some insights if we can interpret it.
• The IPC system results in divergent and skewed distributions, which make further analysis (such as trend analysis) hard.
• The division classification is the most familiar one to NSC analysts, but it lacks inter-disciplinary information.
• The text mining approach, in contrast, dynamically groups related IPC categories together according to the patent contents, which reduces the ambiguity among them.
• This makes further analysis possible even when division information is absent, as may be the case for later published patents to which NSC no longer claims rights.

Other Methods: SOM
[Figure: The 16x16 SOM for the NSC patents, obtained with the tool by Peter Kleiweg]

Other Methods: Citation Analysis
• Among 612 NSC patents:
  – Only 123 patents are co-cited by others, resulting in 99 co-cited pairs.
  – Only 175 patents co-cite others, resulting in 143 co-citing pairs.
• Such sparseness may lead to biased analysis.
• Citation analysis is therefore not suitable in this case.

Conclusions
• Text mining techniques used:
  – text segmentation
  – summary extraction
  – keyword identification
  – topic detection (taxonomy generation, term clustering)
• Achievements:
  – better classification than IPC
  – As research becomes increasingly interdisciplinary, inter-disciplinary relations are worth monitoring.
  – provides this information, which the NSC divisions lack
• “Problems to be solved” can likely be extracted from the “Background of the Invention” section.
• However, “Solutions” are hard to extract.

Types of Patent Maps
• Trend maps: two modes for showing trends:
  – Growth mode: accumulate patents over time
  – Evolution mode: divide patents over time
  – Both are made by fixing the clusters obtained from clustering all patents, then dividing the patents in each cluster by time period and recalculating the similarities among these clusters.
• Query maps:
  – Show only those patents in each cluster that satisfy some conditions
• Aggregation maps:
  – Show, in each cluster, results aggregated on some specified attributes
• Zooming maps:
  – Selected clusters can be zoomed in or out to show details or overviews
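The two trend-map modes can be illustrated with a short Python sketch. This is a minimal sketch under stated assumptions, not the authors' implementation. It assumes that each patent already carries a fixed cluster label (obtained once by clustering all patents), a year, and a term-weight vector. It also assumes that the "similarity among clusters" is the cosine similarity between the clusters' mean term vectors; the slides do not specify the measure. The names `Patent`, `centroid`, `cosine`, and `trend_map` are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from itertools import combinations
from math import sqrt


@dataclass
class Patent:
    pid: str                 # hypothetical patent identifier
    year: int                # assumed time attribute (e.g. issue year)
    cluster: int             # fixed label from clustering ALL patents once
    terms: dict[str, float]  # hypothetical term-weight vector


def centroid(vectors):
    """Mean term-weight vector of a list of sparse vectors ({} if empty)."""
    total = defaultdict(float)
    for vec in vectors:
        for term, weight in vec.items():
            total[term] += weight
    n = len(vectors)
    return {term: w / n for term, w in total.items()} if n else {}


def cosine(a, b):
    """Cosine similarity of two sparse vectors; 0.0 if either is empty."""
    dot = sum(w * b.get(term, 0.0) for term, w in a.items())
    norm_a = sqrt(sum(w * w for w in a.values()))
    norm_b = sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def trend_map(patents, periods, mode="growth"):
    """For each (start, end) period (inclusive years), keep the clusters fixed,
    select patents by time, and recompute cluster sizes and pairwise similarities.

    growth:    patents with year <= end          (accumulated over time)
    evolution: patents with start <= year <= end (divided over time)
    """
    if mode not in ("growth", "evolution"):
        raise ValueError(f"unknown mode: {mode!r}")
    clusters = sorted({p.cluster for p in patents})  # fixed cluster set
    snapshots = []
    for start, end in periods:
        if mode == "growth":
            selected = [p for p in patents if p.year <= end]
        else:
            selected = [p for p in patents if start <= p.year <= end]
        members = {c: [p.terms for p in selected if p.cluster == c] for c in clusters}
        centroids = {c: centroid(vecs) for c, vecs in members.items()}
        snapshots.append({
            "period": (start, end),
            "sizes": {c: len(vecs) for c, vecs in members.items()},
            "similarities": {
                (a, b): cosine(centroids[a], centroids[b])
                for a, b in combinations(clusters, 2)
            },
        })
    return snapshots


# Usage with three toy patents (made-up data, for illustration only)
toy = [
    Patent("P1", 2000, 0, {"silicon": 1.0}),
    Patent("P2", 2001, 1, {"silicon": 1.0}),
    Patent("P3", 2002, 1, {"gene": 1.0}),
]
for snap in trend_map(toy, [(2000, 2001), (2002, 2002)], mode="evolution"):
    print(snap["period"], snap["sizes"], snap["similarities"])
```

In growth mode, each period selects every patent up to the end of that period, so cluster sizes never shrink. In evolution mode, each period selects only the patents inside it, so a cluster may be empty in some period. In that case the sketch reports a similarity of 0.0 rather than failing.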