Chap 5 Slides

Section 5.1

Eigenvectors and Eigenvalues

•Useful throughout pure and applied mathematics.

•Used to study difference equations and continuous dynamical systems.

•Provide critical information in engineering design.

•Arise naturally in such fields as physics and chemistry.

•Used in statistics to analyze multicollinearity.

Example:

•Let $A = \begin{bmatrix} 3 & 3 \\ 1 & 5 \end{bmatrix}$

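•Consider $Av$ for each of the vectors

$v_1 = \begin{bmatrix} -3 \\ 1 \end{bmatrix}, \quad v_2 = \begin{bmatrix} 1 \\ 1 \end{bmatrix}, \quad v_3 = \begin{bmatrix} -1 \\ 1 \end{bmatrix}$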

Definition:

•An eigenvector of an n×n matrix A is a nonzero vector x such that Ax = λx for some scalar λ.

•λ is called an eigenvalue of A, and x is an eigenvector corresponding to λ.
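The definition can be checked directly by multiplying and comparing. The sketch below is only an illustration: the helper names `mat_vec` and `is_eigenpair` are made up for this example, the matrix is the 2×2 matrix with rows (3, 3) and (1, 5), and the test vectors are chosen here rather than taken from the slides.

```python
def mat_vec(a, v):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(a_ij * v_j for a_ij, v_j in zip(row, v)) for row in a]


def is_eigenpair(a, v, lam):
    """Return True if v is nonzero and A v equals lam * v."""
    if all(x == 0 for x in v):
        return False  # the zero vector is excluded by the definition
    return mat_vec(a, v) == [lam * x for x in v]


A = [[3, 3], [1, 5]]  # assumed example matrix

print(is_eigenpair(A, [1, 1], 6))    # A(1, 1) = (6, 6) = 6 * (1, 1)
print(is_eigenpair(A, [-3, 1], 2))   # A(-3, 1) = (-6, 2) = 2 * (-3, 1)
print(is_eigenpair(A, [-1, 1], 2))   # A(-1, 1) = (0, 4), which is not 2 * (-1, 1)
```

Exact integer arithmetic is used so that the equality test is safe; with floating-point entries a tolerance would be needed instead.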

Statistics (multicollinearity)

$y_i = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_i x_i + \varepsilon_i$

•Where y is the dependent response vector and the x’s are the independent explanatory vectors

•The β’s are least squares regression coefficients

•εi are errors

•We desire linear independence between x vectors

•Can use eigen analysis to determine whether the x vectors are linearly independent

From Definition:

•Ax = λx = λIx

•Ax – λIx = 0

•(A – λI)x = 0

•Observations:

1. λ is an eigenvalue of A iff (A – λI)x = 0 has non-trivial solutions. Equivalently, A – λI is not invertible, so every statement of the Invertible Matrix Theorem (IMT) is false for A – λI.

2. The set {x ∈ Rn : (A – λI)x = 0} is the null space of A – λI, a subspace of Rn.

3. The set of all solutions is called the eigenspace of A corresponding to λ.

Example

Show that 2 is an eigenvalue of

$A = \begin{bmatrix} 3 & 3 \\ 1 & 5 \end{bmatrix}$

and find the corresponding eigenvectors.
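One way to work this example, assuming A has rows (3, 3) and (1, 5), is to row reduce A – 2I and read off the free variable:

```latex
\begin{aligned}
A - 2I &= \begin{bmatrix} 3-2 & 3 \\ 1 & 5-2 \end{bmatrix}
        = \begin{bmatrix} 1 & 3 \\ 1 & 3 \end{bmatrix}
        \sim \begin{bmatrix} 1 & 3 \\ 0 & 0 \end{bmatrix} \\
x_1 &= -3x_2, \qquad x_2 \text{ free} \\
x &= x_2 \begin{bmatrix} -3 \\ 1 \end{bmatrix}, \qquad x_2 \neq 0
\end{aligned}
```

Since a free variable exists, (A – 2I)x = 0 has non-trivial solutions, so 2 is an eigenvalue, and every nonzero multiple of (-3, 1) is a corresponding eigenvector.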

Comments:

Warning: The method just used (row reduction) to

find eigenvectors cannot be used to find

eigenvalues.

Note: The set of all solutions to (A-λI)x =0 is called

the eigenspace of A corresponding to λ.

Example

Let

$A = \begin{bmatrix} 1 & 2 & 2 \\ 3 & -2 & 1 \\ 0 & 1 & 1 \end{bmatrix}$

An eigenvalue of A is λ=3. Find a basis for the

corresponding eigenspace.
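A basis for an eigenspace is a basis for the null space of A – λI, so it can be found by row reduction. The sketch below is a minimal illustration, not a library routine: `null_space_basis` is a hypothetical helper written for this example, and the matrix is assumed to have rows (1, 2, 2), (3, -2, 1), (0, 1, 1), which is the sign pattern for which λ = 3 really is an eigenvalue.

```python
from fractions import Fraction


def null_space_basis(m):
    """Return a basis for {x : m x = 0} using Gauss-Jordan elimination."""
    rows = [[Fraction(x) for x in row] for row in m]
    n_cols = len(rows[0])
    pivot_cols = []
    r = 0  # index of the next pivot row
    for c in range(n_cols):
        # Look for a row at or below r with a nonzero entry in column c.
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue  # no pivot in this column, so it is a free variable
        rows[r], rows[pivot] = rows[pivot], rows[r]
        rows[r] = [x / rows[r][c] for x in rows[r]]
        for i in range(len(rows)):
            if i != r and rows[i][c] != 0:
                factor = rows[i][c]
                rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        pivot_cols.append(c)
        r += 1
        if r == len(rows):
            break
    basis = []
    for free in (c for c in range(n_cols) if c not in pivot_cols):
        # Set one free variable to 1, the others to 0, and solve for the pivots.
        vec = [Fraction(0)] * n_cols
        vec[free] = Fraction(1)
        for row_idx, pc in enumerate(pivot_cols):
            vec[pc] = -rows[row_idx][free]
        basis.append(vec)
    return basis


A = [[1, 2, 2], [3, -2, 1], [0, 1, 1]]  # assumed entries, see the note above
lam = 3
shifted = [[A[i][j] - (lam if i == j else 0) for j in range(3)] for i in range(3)]
basis = null_space_basis(shifted)
print([[int(x) for x in v] for v in basis])  # [[3, 2, 1]]
```

Under these assumptions the eigenspace for λ = 3 is one-dimensional, spanned by (3, 2, 1).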

Theorem

The eigenvalues of a triangular matrix

are the entries on its main diagonal.

Proof of 3x3 case

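Let $A = \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ 0 & a_{22} & a_{23} \\ 0 & 0 & a_{33} \end{bmatrix}$

So $A - \lambda I = \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ 0 & a_{22} & a_{23} \\ 0 & 0 & a_{33} \end{bmatrix} - \begin{bmatrix} \lambda & 0 & 0 \\ 0 & \lambda & 0 \\ 0 & 0 & \lambda \end{bmatrix} = \begin{bmatrix} a_{11}-\lambda & a_{12} & a_{13} \\ 0 & a_{22}-\lambda & a_{23} \\ 0 & 0 & a_{33}-\lambda \end{bmatrix}$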

Proof of 3x3 case

By definition λ is an eigenvalue iff (A-λI)x=0

has non-trivial solutions so a free variable must

exist.

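$A - \lambda I = \begin{bmatrix} a_{11}-\lambda & a_{12} & a_{13} \\ 0 & a_{22}-\lambda & a_{23} \\ 0 & 0 & a_{33}-\lambda \end{bmatrix}$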

This occurs when a11=λ or a22=λ or a33=λ

Example

Consider the lower triangular matrix below.

$A = \begin{bmatrix} 4 & 0 & 0 \\ 0 & 0 & 0 \\ 1 & 0 & -3 \end{bmatrix}$

λ= 4 or 0 or -3
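The theorem can be spot-checked numerically: for a triangular matrix, det(A – λI) should vanish exactly when λ is a diagonal entry. The sketch below assumes the lower triangular matrix with rows (4, 0, 0), (0, 0, 0), (1, 0, -3); the helpers `det3` and `shift` are names invented for this illustration.

```python
def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def shift(m, lam):
    """Return m - lam * I."""
    return [[x - (lam if r == c else 0) for c, x in enumerate(row)]
            for r, row in enumerate(m)]


A = [[4, 0, 0], [0, 0, 0], [1, 0, -3]]  # assumed entries
diagonal = [A[k][k] for k in range(3)]

for lam in diagonal:
    print(lam, det3(shift(A, lam)))  # the determinant is 0 for 4, 0 and -3

print(det3(shift(A, 1)))  # 12, so 1 is not an eigenvalue
```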

Addition to IMT

An n×n matrix A is invertible iff

s. The number 0 is not an eigenvalue of A

t. det A ≠ 0 (not sure why the author waits until now to add this)

Theorem

If eigenvectors of a matrix A correspond to

distinct eigenvalues, then the eigenvectors

are linearly independent.

This can be proven by the IMT
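As a small illustration of the theorem (not a proof), take the 2×2 matrix with rows (3, 3) and (1, 5), whose eigenvalues 2 and 6 are distinct. The eigenvectors used below are computed here for the illustration:

```latex
\begin{aligned}
v_1 &= \begin{bmatrix} -3 \\ 1 \end{bmatrix} \ (\lambda_1 = 2), \qquad
v_2 = \begin{bmatrix} 1 \\ 1 \end{bmatrix} \ (\lambda_2 = 6) \\
\det \begin{bmatrix} v_1 & v_2 \end{bmatrix}
    &= \det \begin{bmatrix} -3 & 1 \\ 1 & 1 \end{bmatrix}
     = (-3)(1) - (1)(1) = -4 \neq 0
\end{aligned}
```

The determinant is nonzero, so the matrix with columns v1 and v2 is invertible and the two eigenvectors are linearly independent.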

Section 5.2

The Characteristic Equation

Finding Eigenvalues

1. We know (A-λI)x=0 must have nontrivial solutions, since x is nonzero. That is, free variables exist.

2. So (A-λI) is not invertible by the IMT.

3. Therefore det(A-λI)=0 by the IMT.

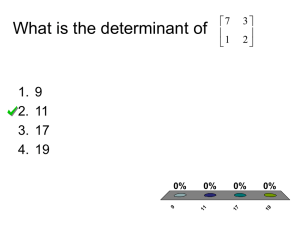

Characteristic Equation

det(A-λI)=0

Solve to find eigenvalues

Note: det(A-λI) is the characteristic polynomial.

Previous Example:

•Let $A = \begin{bmatrix} 3 & 3 \\ 1 & 5 \end{bmatrix}$; find the eigenvalues.
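A worked version of this example, assuming A has rows (3, 3) and (1, 5), expands the characteristic equation and factors it:

```latex
\begin{aligned}
\det(A - \lambda I)
  &= \det \begin{bmatrix} 3-\lambda & 3 \\ 1 & 5-\lambda \end{bmatrix}
   = (3-\lambda)(5-\lambda) - (3)(1) \\
  &= \lambda^2 - 8\lambda + 12
   = (\lambda - 2)(\lambda - 6) = 0
\end{aligned}
```

So the eigenvalues are λ = 2 and λ = 6, which agrees with the earlier example where 2 was shown to be an eigenvalue.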

Example

Find the eigenvalues of

$A = \begin{bmatrix} 1 & 2 & 1 \\ 0 & 5 & 0 \\ 1 & 8 & 1 \end{bmatrix}$

Example

Find the characteristic polynomial and eigenvalues of

$A = \begin{bmatrix} 3 & 2 & 3 \\ 0 & 6 & 10 \\ 0 & 0 & 2 \end{bmatrix}$

Example

a. Find the characteristic polynomial

b. Find all eigenvalues

c. Find multiplicity of each eigenvalue

$A = \begin{bmatrix} 2 & 0 & 0 & 0 \\ 5 & 3 & 0 & 0 \\ 9 & 1 & 3 & 0 \\ 1 & 2 & 5 & 1 \end{bmatrix}$
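For a triangular matrix the characteristic polynomial is the product of the factors (a_ii – λ), so the multiplicity of an eigenvalue is the number of times it appears on the diagonal. The sketch below assumes the 4×4 matrix is lower triangular with entries exactly as printed (all non-negative); if any entry is actually negative, the values change but the counting idea does not. The helper `is_lower_triangular` is a name made up for this example.

```python
from collections import Counter

# Assumed entries, read column by column from the example.
A = [
    [2, 0, 0, 0],
    [5, 3, 0, 0],
    [9, 1, 3, 0],
    [1, 2, 5, 1],
]


def is_lower_triangular(m):
    """True if every entry above the main diagonal is zero."""
    n = len(m)
    return all(m[r][c] == 0 for r in range(n) for c in range(r + 1, n))


assert is_lower_triangular(A)

# Each diagonal entry contributes one factor (a_ii - lam) to det(A - lam*I).
multiplicity = Counter(A[k][k] for k in range(len(A)))
print(dict(multiplicity))  # {2: 1, 3: 2, 1: 1}
```

Under these assumptions the characteristic polynomial is (2 – λ)(3 – λ)²(1 – λ), and the eigenvalue 3 has multiplicity 2.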

Recap

a. λ is an eigenvalue of A if (A-λI)x=0 has non-trivial solutions (free variables exist).

b. Eigenvectors (the eigenspace) are found by row reducing (A-λI)x=0.

c. Eigenvalues are found by solving det(A-λI)=0.
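The recap can be sketched end to end for the 2×2 case. This is a minimal illustration that only handles real eigenvalues: the function names are invented here, and for a 2×2 matrix the row reduction step is replaced by reading a solution off one nonzero row of A – λI.

```python
import math


def eigenvalues_2x2(a):
    """Real roots of det(A - lam*I) = lam^2 - tr(A)*lam + det(A), ascending."""
    (p, q), (r, s) = a
    trace = p + s
    det = p * s - q * r
    disc = trace * trace - 4 * det
    if disc < 0:
        raise ValueError("complex eigenvalues are not handled in this sketch")
    root = math.sqrt(disc)
    return ((trace - root) / 2, (trace + root) / 2)


def eigenvector_2x2(a, lam):
    """One nonzero solution of (A - lam*I)x = 0, assuming lam is an eigenvalue."""
    (p, q), (r, s) = a
    # Row 1 of A - lam*I is (p - lam, q); the vector (q, lam - p) solves it.
    if (q, lam - p) != (0, 0):
        return (q, lam - p)
    # Row 1 is zero, so use row 2, which is (r, s - lam).
    if (lam - s, r) != (0, 0):
        return (lam - s, r)
    return (1, 0)  # A - lam*I is the zero matrix, so any nonzero vector works


A = [[3, 3], [1, 5]]  # assumed example matrix
for lam in eigenvalues_2x2(A):
    print(lam, eigenvector_2x2(A, lam))
```

For larger matrices the characteristic polynomial has higher degree, so this quadratic-formula shortcut no longer applies.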

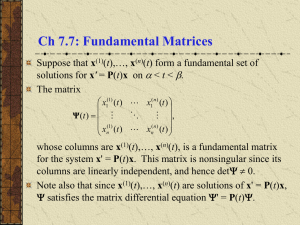

Section 5.3

Diagonalization

•The goal here is to develop a useful factorization $A = PDP^{-1}$, when A is n×n.

•We can use this to compute $A^k$ quickly for large k.

•The matrix D is a diagonal matrix (i.e. entries off the main diagonal are all zeros).

Example

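Find a formula for $A^k$ given $A = PDP^{-1}$ and

$A = \begin{bmatrix} 6 & -1 \\ 2 & 3 \end{bmatrix}, \quad P = \begin{bmatrix} 1 & 1 \\ 1 & 2 \end{bmatrix}, \quad D = \begin{bmatrix} 5 & 0 \\ 0 & 4 \end{bmatrix}, \quad P^{-1} = \begin{bmatrix} 2 & -1 \\ -1 & 1 \end{bmatrix}$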
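Because the inner factors cancel in (PDP⁻¹)(PDP⁻¹)…(PDP⁻¹), the power reduces to Aᵏ = PDᵏP⁻¹, and Dᵏ only requires raising the diagonal entries to the k-th power. The sketch below assumes A has rows (6, -1) and (2, 3) and P⁻¹ has rows (2, -1) and (-1, 1), which are the signs for which A = PDP⁻¹ actually holds with the given P and D. All function names are made up for this illustration.

```python
def mat_mul(x, y):
    """Product of two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


# Assumed entries, see the note above.
A = [[6, -1], [2, 3]]
P = [[1, 1], [1, 2]]
D = [[5, 0], [0, 4]]
P_INV = [[2, -1], [-1, 1]]


def power_by_diagonalization(k):
    """A^k = P D^k P^{-1}; D^k raises each diagonal entry to the k-th power."""
    d_k = [[5 ** k, 0], [0, 4 ** k]]
    return mat_mul(mat_mul(P, d_k), P_INV)


def power_closed_form(k):
    """The same product multiplied out by hand."""
    return [[2 * 5 ** k - 4 ** k, 4 ** k - 5 ** k],
            [2 * 5 ** k - 2 * 4 ** k, 2 * 4 ** k - 5 ** k]]


def power_by_repeated_multiplication(k):
    """Reference answer: multiply A by itself k times."""
    result = [[1, 0], [0, 1]]
    for _ in range(k):
        result = mat_mul(result, A)
    return result


print(mat_mul(mat_mul(P, D), P_INV) == A)  # True: the factorization is consistent
print(power_by_diagonalization(3) == power_by_repeated_multiplication(3))  # True
```

The closed form shows the payoff: each entry of Aᵏ is a fixed combination of 5ᵏ and 4ᵏ, so no repeated matrix multiplication is needed for large k.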

Diagonalizable

A square matrix A is diagonalizable if

$A = PDP^{-1}$, where P is invertible

and D is a diagonal matrix.

Note: $AP = PD$

When is a matrix diagonalizable?

Let’s examine the eigenvalues and eigenvectors of

$A = \begin{bmatrix} 6 & -1 \\ 2 & 3 \end{bmatrix}$

The Diagonalization Theorem

If A is n×n and has n linearly independent eigenvectors, then

1. $A = PDP^{-1}$

2. The columns of P are the eigenvectors

3. The diagonal entries of D are the corresponding eigenvalues, in the same order as the columns of P.
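The reason the theorem works can be seen by computing AP column by column. In the sketch below, v1, …, vn denote the n linearly independent eigenvectors and λ1, …, λn their eigenvalues; this notation is introduced here for the derivation.

```latex
\begin{aligned}
AP &= A \begin{bmatrix} v_1 & v_2 & \cdots & v_n \end{bmatrix}
    = \begin{bmatrix} Av_1 & Av_2 & \cdots & Av_n \end{bmatrix} \\
   &= \begin{bmatrix} \lambda_1 v_1 & \lambda_2 v_2 & \cdots & \lambda_n v_n \end{bmatrix}
    = \begin{bmatrix} v_1 & v_2 & \cdots & v_n \end{bmatrix}
      \begin{bmatrix} \lambda_1 & & \\ & \ddots & \\ & & \lambda_n \end{bmatrix}
    = PD
\end{aligned}
```

Since the columns of P are linearly independent, P is invertible by the IMT, and multiplying AP = PD on the right by P⁻¹ gives A = PDP⁻¹.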

Example

Diagonalize

$A = \begin{bmatrix} 2 & 0 & 0 \\ 1 & 2 & 1 \\ 1 & 0 & 1 \end{bmatrix}$

We need to find P & D

Theorem

If an n×n matrix A has n distinct eigenvalues, then A is

diagonalizable.

Example

Diagonalize

$A = \begin{bmatrix} 2 & 4 & 6 \\ 0 & 2 & 2 \\ 0 & 0 & 4 \end{bmatrix}$

Example

Diagonalize

$A = \begin{bmatrix} 2 & 0 & 0 \\ 2 & 6 & 0 \\ 3 & 2 & 1 \end{bmatrix}$