PowerPoint - Ivan Titov


Learning for Structured Prediction

Linear Methods For Sequence Labeling:

Hidden Markov Models vs Structured Perceptron

Ivan Titov

Last Time: Structured Prediction

x is an input (e.g., a sentence), y is an output (e.g., a syntactic tree)

1. Selecting a feature representation $\varphi(x, y)$

It should be sufficient to discriminate the correct structure from incorrect ones

It should be possible to decode with it (see (3))

2. Learning

Which error function to optimize on the training set, for example

$w \cdot \varphi(x, y^\star) - \max_{y' \in Y(x),\, y' \neq y^\star} w \cdot \varphi(x, y') > \gamma$

How to make it efficient (see (3))

3. Decoding: $y = \arg\max_{y' \in Y(x)} w \cdot \varphi(x, y')$

Dynamic programming for simpler representations $\varphi$

Approximate search for more powerful ones?

We illustrated all these challenges on the example of dependency parsing

Outline

Sequence labeling / segmentation problems: settings and example problems:

Part-of-speech tagging, named entity recognition, gesture recognition

Hidden Markov Model

Standard definition + maximum likelihood estimation

General views: as a representative of linear models

Perceptron and Structured Perceptron

algorithms / motivations

Decoding with the Linear Model

Discussion: Discriminative vs. Generative


Sequence Labeling Problems

Definition:

Input: sequences of variable length

Output: every position is labeled

$x = (x_1, x_2, \ldots, x_{|x|}), \quad x_i \in X$

$y = (y_1, y_2, \ldots, y_{|x|}), \quad y_i \in Y$

Examples:

Part-of-speech tagging

x = John  carried  a   tin  can  .

y = NP    VBD      DT  NN   NN   .

Named-entity recognition, shallow parsing (“chunking”), gesture recognition from video-streams, …

Part-of-speech tagging

x = John  carried  a   tin  can        .

y = NNP   VBD      DT  NN   NN or MD?  .

If you just predict the most frequent tag for each word, you will make a mistake here

In fact, even knowing that the previous word is a noun is not enough. Consider:

x = Tin  can  cause  poisoning  …

y = NN   MD   VB     NN         …

Labels:

NNP – proper singular noun

NN – singular noun

VBD – verb, past tense

MD – modal

DT – determiner

. – final punctuation

One needs to model interactions between labels to successfully resolve ambiguities, so this should be tackled as a structured prediction problem

Named Entity Recognition

[ORG Chelsea], despite their name, are not based in [LOC Chelsea], but in neighbouring [LOC Fulham].

Not as trivial as it may seem, consider:

[PERS Bill Clinton] will not embarrass [PERS Chelsea] at her wedding

Chelsea can be a person too!

Tiger failed to make a birdie in the South Course …

Is it an animal or a person?

Encoding example (BIO-encoding)

x = Bill    Clinton  embarrassed  Chelsea  at  her  wedding  at  Astor  Courts

y = B-PERS  I-PERS   O            B-PERS   O   O    O        O   B-LOC  I-LOC
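The BIO encoding turns segmentation into per-token labeling, and the entity spans can be read back from the tags. A minimal sketch of that read-back step follows; the function name `bio_to_spans` and the handling of a stray I- tag (treated like O) are assumptions made for illustration, not part of the slides.

```python
def bio_to_spans(tokens, tags):
    """Collect (entity_type, text) spans from a BIO-tagged token sequence."""
    spans, current_type, current_tokens = [], None, []
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            # A B- tag always opens a new span; close any open one first.
            if current_tokens:
                spans.append((current_type, " ".join(current_tokens)))
            current_type, current_tokens = tag[2:], [token]
        elif tag.startswith("I-") and current_type == tag[2:]:
            # An I- tag of the same type continues the open span.
            current_tokens.append(token)
        else:
            # "O", or an I- tag that does not continue the open span.
            if current_tokens:
                spans.append((current_type, " ".join(current_tokens)))
            current_type, current_tokens = None, []
    if current_tokens:
        spans.append((current_type, " ".join(current_tokens)))
    return spans


tokens = "Bill Clinton embarrassed Chelsea at her wedding at Astor Courts".split()
tags = ["B-PERS", "I-PERS", "O", "B-PERS", "O", "O", "O", "O", "B-LOC", "I-LOC"]
spans = bio_to_spans(tokens, tags)
print(spans)
```

Because B- marks the start of every entity, two adjacent entities of the same type remain distinguishable, which a plain inside/outside labeling could not express.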

Vision: Gesture Recognition

Given a sequence of frames in a video, annotate each frame with a gesture type

Types of gestures: flip back, shrink vertically, expand vertically, double back, point and back, expand horizontally

[Figures: example frames for each gesture type. Figures from (Wang et al., CVPR 06)]

It is hard to predict gestures from each frame in isolation; you need to exploit relations between frames and gesture types


Hidden Markov Models

We will consider the part-of-speech (POS) tagging example

x = John  carried  a   tin  can  .

y = NP    VBD      DT  NN   NN   .

A “generative” model, i.e.:

Model: introduce a parameterized model of how both words and tags are generated, $P(x, y \mid \theta)$

Learning: use a labeled training set to estimate the most likely parameters of the model, $\hat{\theta}$

Decoding: $y = \arg\max_{y'} P(x, y' \mid \hat{\theta})$

Hidden Markov Models

N – tags, M – vocabulary size

A simplistic state diagram for noun phrases:

[Figure: state diagram over the states $, Det, Adj, Noun with transition probabilities on the arcs. Emissions: Det [0.5 : a, 0.5 : the], Adj [0.01 : red, 0.01 : hungry, …], Noun [0.01 : dog, 0.01 : herring, …]]

Example: a hungry dog

States correspond to POS tags

Words are emitted independently from each POS tag

Parameters (to be estimated from the training set):

Transition probabilities $P(y^{(t)} \mid y^{(t-1)})$: [N x N] matrix

Emission probabilities $P(x^{(t)} \mid y^{(t)})$: [N x M] matrix

Stationarity assumption: these probabilities do not depend on the position t in the sequence

Hidden Markov Models

Representation as an instantiation of a graphical model:

y(1) = Det    y(2) = Adj     y(3) = Noun   y(4)   …

x(1) = a      x(2) = hungry  x(3) = dog    x(4)   …

An arrow means that in the generative story x(4) is generated from some P(x(4) | y(4))

States, parameters and the stationarity assumption are as on the previous slide: transition probabilities $P(y^{(t)} \mid y^{(t-1)})$ form an [N x N] matrix and emission probabilities $P(x^{(t)} \mid y^{(t)})$ form an [N x M] matrix, where N is the number of tags and M is the vocabulary size

Hidden Markov Models: Estimation

N – the number of tags, M – vocabulary size

Parameters (to be estimated from the training set):

Transition probabilities $a_{j,i} = P(y^{(t)} = i \mid y^{(t-1)} = j)$, A – [N x N] matrix

Emission probabilities $b_{i,k} = P(x^{(t)} = k \mid y^{(t)} = i)$, B – [N x M] matrix

Training corpus:

x(1) = (In, an, Oct., 19, review, of, …. ), y(1) = (IN, DT, NNP, CD, NN, IN, …. )

x(2) = (Ms., Haag, plays, Elianti, .), y(2) = (NNP, NNP, VBZ, NNP, .)

…

x(L) = (The, company, said, …), y(L) = (DT, NN, VBD, NNP, .)

How to estimate the parameters using maximum likelihood estimation?

You can probably guess what these estimates should be

Hidden Markov Models: Estimation

Parameters (to be estimated from the training set):

Transition probabilities $a_{j,i} = P(y^{(t)} = i \mid y^{(t-1)} = j)$, A – [N x N] matrix

Emission probabilities $b_{i,k} = P(x^{(t)} = k \mid y^{(t)} = i)$, B – [N x M] matrix

Training corpus: $(x^{(l)}, y^{(l)})$, l = 1, …, L

Write down the probability of the corpus according to the HMM:

$P(\{x^{(l)}, y^{(l)}\}_{l=1}^{L}) = \prod_{l=1}^{L} P(x^{(l)}, y^{(l)})$

$= \prod_{l=1}^{L} a_{\$, y_1^{(l)}} \left( \prod_{t=1}^{|x^{l}|-1} b_{y_t^{(l)}, x_t^{(l)}} \, a_{y_t^{(l)}, y_{t+1}^{(l)}} \right) b_{y_{|x^{l}|}^{(l)}, x_{|x^{l}|}^{(l)}} \, a_{y_{|x^{l}|}^{(l)}, \$}$

The factors, from left to right: select the tag for the first word; draw a word from this state; select the next state; draw the last word; transit into the $ state

$= \prod_{i,j=1}^{N} a_{i,j}^{C_T(i,j)} \prod_{i=1}^{N} \prod_{k=1}^{M} b_{i,k}^{C_E(i,k)}$

$C_T(i,j)$ is the number of times tag i is followed by tag j. Here we assume that $ is a special tag which precedes and succeeds every sentence

$C_E(i,k)$ is the number of times word k is emitted by tag i

Hidden Markov Models: Estimation

Maximize: $P(\{x^{(l)}, y^{(l)}\}_{l=1}^{L}) = \prod_{i,j=1}^{N} a_{i,j}^{C_T(i,j)} \prod_{i=1}^{N} \prod_{k=1}^{M} b_{i,k}^{C_E(i,k)}$

$C_T(i,j)$ is the number of times tag i is followed by tag j

$C_E(i,k)$ is the number of times word k is emitted by tag i

Equivalently, maximize the logarithm of this:

$\log P(\{x^{(l)}, y^{(l)}\}_{l=1}^{L}) = \sum_{i=1}^{N} \left( \sum_{j=1}^{N} C_T(i,j) \log a_{i,j} + \sum_{k=1}^{M} C_E(i,k) \log b_{i,k} \right)$

subject to probabilistic constraints:

$\sum_{j=1}^{N} a_{i,j} = 1, \quad \sum_{k=1}^{M} b_{i,k} = 1, \quad i = 1, \ldots, N$

Or, we can decompose it into 2N optimization tasks:

For transitions, $i = 1, \ldots, N$:

$\max_{a_{i,1}, \ldots, a_{i,N}} \sum_{j=1}^{N} C_T(i,j) \log a_{i,j} \quad \text{s.t.} \quad \sum_{j=1}^{N} a_{i,j} = 1$

For emissions, $i = 1, \ldots, N$:

$\max_{b_{i,1}, \ldots, b_{i,M}} \sum_{k=1}^{M} C_E(i,k) \log b_{i,k} \quad \text{s.t.} \quad \sum_{k=1}^{M} b_{i,k} = 1$

Hidden Markov Models: Estimation

For transitions (some i):

$\max_{a_{i,1}, \ldots, a_{i,N}} \sum_{j=1}^{N} C_T(i,j) \log a_{i,j} \quad \text{s.t.} \quad 1 - \sum_{j=1}^{N} a_{i,j} = 0$

Constrained optimization task, Lagrangian:

$L(a_{i,1}, \ldots, a_{i,N}, \lambda) = \sum_{j=1}^{N} C_T(i,j) \log a_{i,j} + \lambda \times \left(1 - \sum_{j=1}^{N} a_{i,j}\right)$

Find critical points of the Lagrangian by solving the system of equations:

$\frac{\partial L}{\partial \lambda} = 1 - \sum_{j=1}^{N} a_{i,j} = 0$

$\frac{\partial L}{\partial a_{i,j}} = \frac{C_T(i,j)}{a_{i,j}} - \lambda = 0 \;\Longrightarrow\; a_{i,j} = \frac{C_T(i,j)}{\lambda}$

Substituting into the constraint gives $\lambda = \sum_{j'} C_T(i,j')$, so

$P(y_t = j \mid y_{t-1} = i) = a_{i,j} = \frac{C_T(i,j)}{\sum_{j'} C_T(i,j')}$

Similarly, for emissions:

$P(x_t = k \mid y_t = i) = b_{i,k} = \frac{C_E(i,k)}{\sum_{k'} C_E(i,k')}$

The maximum likelihood solution is just normalized counts of events. It is always like this for generative models if all the labels are visible in training

I ignore “smoothing” to process rare or unseen word-tag combinations… Outside the scope of the seminar
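The normalized-count solution can be sketched in a few lines. This is an illustrative sketch, not the seminar's code: the function names `estimate_hmm` and `normalize`, the nested-dict representation of A and B, and the two-sentence toy corpus are all assumptions, and no smoothing is applied.

```python
from collections import Counter, defaultdict

BOUNDARY = "$"  # special tag that precedes and succeeds every sentence


def normalize(counts):
    """Turn each row of counts into a probability distribution."""
    return {
        i: {j: c / sum(row.values()) for j, c in row.items()}
        for i, row in counts.items()
    }


def estimate_hmm(corpus):
    """corpus: list of (words, tags) pairs. Returns (a, b) as nested dicts."""
    trans_counts = defaultdict(Counter)  # C_T(i, j): tag i followed by tag j
    emit_counts = defaultdict(Counter)   # C_E(i, k): word k emitted by tag i
    for words, tags in corpus:
        padded = [BOUNDARY] + list(tags) + [BOUNDARY]
        for prev_tag, tag in zip(padded, padded[1:]):
            trans_counts[prev_tag][tag] += 1
        for word, tag in zip(words, tags):
            emit_counts[tag][word] += 1
    return normalize(trans_counts), normalize(emit_counts)


# Hypothetical toy corpus, for illustration only.
corpus = [
    (["a", "hungry", "dog"], ["Det", "Adj", "Noun"]),
    (["the", "dog"], ["Det", "Noun"]),
]
a, b = estimate_hmm(corpus)
print(a["Det"])  # transition distribution out of Det
print(b["Det"])  # emission distribution of Det
```

Unseen transitions and emissions simply have no entry here, which corresponds to probability zero; a practical tagger would add smoothing.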

HMMs as linear models

x = John  carried  a  tin  can  .

y = ?     ?        ?  ?    ?    .

Decoding: $y = \arg\max_{y'} P(x, y' \mid A, B) = \arg\max_{y'} \log P(x, y' \mid A, B)$

We will talk about the decoding algorithm slightly later; let us first generalize the Hidden Markov Model:

$\log P(x, y' \mid A, B) = \sum_{t=1}^{|x|} \log b_{y'_t, x_t} + \sum_{t=0}^{|x|} \log a_{y'_t, y'_{t+1}}, \quad y'_0 = y'_{|x|+1} = \$$

$= \sum_{i=1}^{N} \sum_{j=1}^{N} C_T(y', i, j) \times \log a_{i,j} + \sum_{i=1}^{N} \sum_{k=1}^{M} C_E(x, y', i, k) \times \log b_{i,k}$

$C_T(y', i, j)$: the number of times tag i is followed by tag j in the candidate y’

$C_E(x, y', i, k)$: the number of times tag i corresponds to word k in (x, y’)

But this is just a linear model!!

Scoring: example

(x, y') =

x  = John  carried  a   tin  can  .

y' = NP    VBD      DT  NN   NN   .

Unary features $C_E(x, y', i, k)$ and edge features $C_T(y', i, j)$, with the matching maximum likelihood weights:

feature    $\varphi(x, y')$    $w_{ML}$

NP:John    1    $\log b_{NP,John}$

NP:Mary    0    $\log b_{NP,Mary}$

…

NP-VBD     1    $\log a_{NP,VBD}$

NN-.       1    $\log a_{NN,.}$

MD-.       0    $\log a_{MD,.}$

…

Their inner product is exactly $\log P(x, y' \mid A, B)$:

$w_{ML} \cdot \varphi(x, y') = \sum_{i=1}^{N} \sum_{j=1}^{N} C_T(y', i, j) \times \log a_{i,j} + \sum_{i=1}^{N} \sum_{k=1}^{M} C_E(x, y', i, k) \times \log b_{i,k}$

But maybe there are other (and better?) ways to estimate w, especially when we know that the HMM is not a faithful model of reality?

It is not only a theoretical question! (we’ll talk about that in a moment)
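The identity between the HMM log-probability and the linear score can be checked numerically. The sketch below assumes a feature map keyed by ("edge", i, j) and ("unary", i, k) tuples, and the toy probabilities are hypothetical (the emission rows are deliberately incomplete); none of the names come from the slides.

```python
import math
from collections import Counter


def features(words, tags):
    """phi(x, y'): counts of edge (tag-tag) and unary (tag:word) events."""
    phi = Counter()
    padded = ["$"] + list(tags) + ["$"]
    for prev_tag, tag in zip(padded, padded[1:]):
        phi[("edge", prev_tag, tag)] += 1
    for word, tag in zip(words, tags):
        phi[("unary", tag, word)] += 1
    return phi


def log_joint(words, tags, a, b):
    """log P(x, y' | A, B) computed position by position, as in the HMM."""
    padded = ["$"] + list(tags) + ["$"]
    total = sum(math.log(a[p][t]) for p, t in zip(padded, padded[1:]))
    total += sum(math.log(b[t][w]) for w, t in zip(words, tags))
    return total


# Hypothetical toy parameters, for illustration only.
a = {"$": {"Det": 0.8, "Noun": 0.2}, "Det": {"Adj": 0.5, "Noun": 0.5},
     "Adj": {"Noun": 0.9, "Adj": 0.1}, "Noun": {"$": 0.7, "Noun": 0.3}}
b = {"Det": {"a": 0.5, "the": 0.5}, "Adj": {"hungry": 0.01, "red": 0.01},
     "Noun": {"dog": 0.01, "herring": 0.01}}

# w_ML: one weight per feature, equal to the log of the matching parameter.
w_ml = {("edge", i, j): math.log(p) for i, row in a.items() for j, p in row.items()}
w_ml.update({("unary", i, k): math.log(p) for i, row in b.items() for k, p in row.items()})

words, tags = ["a", "hungry", "dog"], ["Det", "Adj", "Noun"]
linear_score = sum(w_ml[f] * count for f, count in features(words, tags).items())
print(linear_score, log_joint(words, tags, a, b))
```

Once the score is written this way, nothing forces w to be log-probabilities, which is what opens the door to other estimation methods.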

Feature view

Basically, we define features which correspond to edges in the graph:

[Figure: chain y(1) – y(2) – y(3) – y(4) – …, with each y(t) connected to x(t). The x nodes are shaded because they are visible (both in training and testing)]

Generative modeling

For a very large dataset (asymptotic analysis):

If data is generated from some “true” HMM, then (if the training set is sufficiently large) we are guaranteed to have an optimal tagger

Otherwise, (generally) the HMM will not correspond to an optimal linear classifier. This is the real case: the HMM is a coarse approximation of reality

Discriminative methods which minimize the error more directly are guaranteed (under some fairly general conditions) to converge to an optimal linear classifier

For smaller training sets:

Generative classifiers converge faster to their optimal error [Ng & Jordan, NIPS 01]

[Figure: errors on a regression dataset (predict housing prices in Boston area) against # train examples, one curve for a discriminative classifier and one for a generative model]


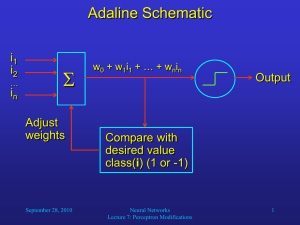

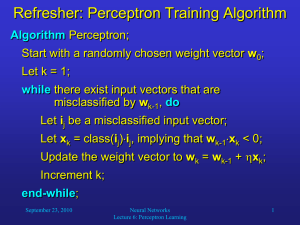

Perceptron

Let us start with a binary classification problem: $y \in \{+1, -1\}$

For binary classification the prediction rule is: $y = \mathrm{sign}(w \cdot \varphi(x))$ (break ties (0) in some deterministic way)

Perceptron algorithm, given a training set $\{x^{(l)}, y^{(l)}\}_{l=1}^{L}$

    w = 0                                  // initialize
    do
      err = 0
      for l = 1 .. L                       // over the training examples
        if ( y(l) (w · φ(x(l))) < 0 )      // if mistake
          w += η y(l) φ(x(l))              // update, η > 0
          err++                            // # errors
        endif
      endfor
    while ( err > 0 )                      // repeat until no errors
    return w
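A runnable version of the binary perceptron might look as follows. It is a sketch under two stated assumptions: a score of exactly zero is treated as a mistake (one deterministic way to break ties, and needed so that training can leave w = 0), and `max_epochs` caps the loop so that it also stops on non-separable data. The toy data is hypothetical.

```python
def dot(u, v):
    """Inner product of two equal-length lists."""
    return sum(ui * vi for ui, vi in zip(u, v))


def train_perceptron(examples, eta=1.0, max_epochs=100):
    """examples: list of (phi, y), phi a list of floats, y in {+1, -1}."""
    w = [0.0] * len(examples[0][0])
    for _ in range(max_epochs):
        errors = 0
        for phi, y in examples:
            if y * dot(w, phi) <= 0:  # mistake (assumption: zero counts as one)
                w = [wi + eta * y * fi for wi, fi in zip(w, phi)]
                errors += 1
        if errors == 0:  # a full pass without mistakes: converged
            break
    return w


# Hypothetical linearly separable data; the first feature is the constant 1.
examples = [
    ([1.0, 2.0, 1.0], +1),
    ([1.0, 1.0, 3.0], +1),
    ([1.0, -1.0, -2.0], -1),
    ([1.0, -2.0, -1.0], -1),
]
w = train_perceptron(examples)
print(w)
```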

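Linear classification

Linearly separable case, “a perfect” classifier:

[Figure: two classes of points in the ($\varphi(x)_1$, $\varphi(x)_2$) plane, separated by the hyperplane $w \cdot \varphi(x) + b = 0$ with normal vector w. Figure adapted from Dan Roth’s class at UIUC]

Linear functions are often written as $y = \mathrm{sign}(w \cdot \varphi(x) + b)$, but we can assume that $\varphi(x)_0 = 1$ for any x, so that the bias b is absorbed into w as the weight $w_0$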

Perceptron: geometric interpretation

    if ( y(l) (w · φ(x(l))) < 0 )      // if mistake
      w += η y(l) φ(x(l))              // update
    endif

[Figure: the hyperplane with normal w before and after an update on a misclassified point]


Perceptron: algebraic interpretation

    if ( y(l) (w · φ(x(l))) < 0 )      // if mistake
      w += η y(l) φ(x(l))              // update
    endif

We want the update to increase $y^{(l)} \left( w \cdot \varphi(x^{(l)}) \right)$

If the increase is large enough, then there will be no misclassification

Let’s see that this is what happens after the update:

$y^{(l)} \left( (w + \eta\, y^{(l)} \varphi(x^{(l)})) \cdot \varphi(x^{(l)}) \right) = y^{(l)} \left( w \cdot \varphi(x^{(l)}) \right) + \eta\, (y^{(l)})^2 \left( \varphi(x^{(l)}) \cdot \varphi(x^{(l)}) \right)$

Here $(y^{(l)})^2 = 1$ and $\varphi(x^{(l)}) \cdot \varphi(x^{(l)})$ is a squared norm > 0

So, the perceptron update moves the decision hyperplane towards the misclassified $\varphi(x^{(l)})$

Perceptron

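The perceptron algorithm can only converge if the training set is linearly separable

In that case it is guaranteed to converge after a finite number of updates, which depends on how well the two classes are separated (Novikoff, 1962): at most $(R/\gamma)^2$ mistakes, where $R = \max_l \|\varphi(x^{(l)})\|$ and $\gamma$ is the margin achieved by some unit-norm separating w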

Averaged Perceptron

A small modification

    w = 0, w_Σ = 0                         // initialize
    for k = 1 .. K                         // for a number of iterations (do not run until convergence)
      for l = 1 .. L                       // over the training examples
        if ( y(l) (w · φ(x(l))) < 0 )      // if mistake
          w += η y(l) φ(x(l))              // update, η > 0
        endif
        w_Σ += w                           // sum of w over the course of training (note: it is after endif)
      endfor
    endfor
    return (1/(KL)) w_Σ

More stable in training: a vector w which survived more iterations without updates is more similar to the resulting vector $\frac{1}{KL} w_\Sigma$, as it was added a larger number of times
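The averaged variant differs from the plain perceptron only in the running sum and the final division. A small sketch follows, with the same assumption as is common in practice that a zero score counts as a mistake; the two-example dataset and the name `train_averaged_perceptron` are hypothetical.

```python
def dot(u, v):
    """Inner product of two equal-length lists."""
    return sum(ui * vi for ui, vi in zip(u, v))


def train_averaged_perceptron(examples, epochs=2, eta=1.0):
    """Run K = epochs passes and return the average of w over all K*L steps."""
    dim = len(examples[0][0])
    w = [0.0] * dim
    w_sum = [0.0] * dim
    for _ in range(epochs):
        for phi, y in examples:
            if y * dot(w, phi) <= 0:  # mistake (assumption: zero counts as one)
                w = [wi + eta * y * fi for wi, fi in zip(w, phi)]
            # Accumulate after the mistake check, for every example,
            # so long-lived weight vectors contribute more to the average.
            w_sum = [si + wi for si, wi in zip(w_sum, w)]
    steps = epochs * len(examples)
    return [si / steps for si in w_sum]


# Hypothetical toy data; the first feature is the constant 1.
examples = [([1.0, 1.0, 0.0], +1), ([1.0, 0.0, 1.0], -1)]
w_avg = train_averaged_perceptron(examples, epochs=2)
print(w_avg)
```

On this data the weight vector goes through [1, 1, 0] once and [0, 1, -1] three times, so the average leans towards the vector that survived longer.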

Structured Perceptron

Let us start with a structured problem: $y = \arg\max_{y' \in Y(x)} w \cdot \varphi(x, y')$

Perceptron algorithm, given a training set $\{x^{(l)}, y^{(l)}\}_{l=1}^{L}$

    w = 0                                              // initialize
    do
      err = 0
      for l = 1 .. L                                   // over the training examples
        ŷ = argmax_{y' ∈ Y(x(l))} w · φ(x(l), y')      // model prediction
        if ( w · φ(x(l), ŷ) > w · φ(x(l), y(l)) )      // if mistake
          w += η ( φ(x(l), y(l)) − φ(x(l), ŷ) )        // update
          err++                                        // # errors
        endif
      endfor
    while ( err > 0 )                                  // repeat until no errors
    return w

The update pushes the correct sequence up and the incorrectly predicted one down

Str. perceptron: algebraic interpretation

    if ( w · φ(x(l), ŷ) > w · φ(x(l), y(l)) )      // if mistake
      w += η ( φ(x(l), y(l)) − φ(x(l), ŷ) )        // update
    endif

We want the update to increase $w \cdot \left( \varphi(x^{(l)}, y^{(l)}) - \varphi(x^{(l)}, \hat{y}) \right)$

If the increase is large enough, then $y^{(l)}$ will be scored above $\hat{y}$

Clearly, this is achieved, as this product will be increased by $\eta\, \|\varphi(x^{(l)}, y^{(l)}) - \varphi(x^{(l)}, \hat{y})\|^2$

There might be other $y' \in Y(x^{(l)})$ scored above $y^{(l)}$, but we will deal with them on the next iterations
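To make the structured update concrete, here is a small sketch that trains on a two-sentence toy corpus. Several choices are assumptions for illustration: decoding is a brute-force argmax over all tag sequences (exponential, so toy inputs only; a real tagger would use dynamic programming), an update is made whenever the prediction differs from the gold sequence (so ties also trigger updates), and the tag set, corpus and function names are hypothetical.

```python
from collections import Counter
from itertools import product

TAGS = ["NN", "MD"]  # hypothetical tag set for the toy example


def features(words, tags):
    """phi(x, y): counts of edge (tag-tag) and unary (tag:word) events."""
    phi = Counter()
    padded = ["$"] + list(tags) + ["$"]
    for prev_tag, tag in zip(padded, padded[1:]):
        phi[("edge", prev_tag, tag)] += 1
    for word, tag in zip(words, tags):
        phi[("unary", tag, word)] += 1
    return phi


def score(w, phi):
    return sum(w.get(f, 0.0) * count for f, count in phi.items())


def decode(w, words):
    """Brute-force argmax over all tag sequences (toy inputs only)."""
    candidates = product(TAGS, repeat=len(words))
    return max(candidates, key=lambda tags: score(w, features(words, tags)))


def train(corpus, eta=1.0, max_epochs=20):
    w = {}
    for _ in range(max_epochs):
        errors = 0
        for words, gold in corpus:
            predicted = decode(w, words)
            if predicted != tuple(gold):
                # Push the correct sequence up and the predicted one down.
                for f, count in features(words, gold).items():
                    w[f] = w.get(f, 0.0) + eta * count
                for f, count in features(words, predicted).items():
                    w[f] = w.get(f, 0.0) - eta * count
                errors += 1
        if errors == 0:
            break
    return w


corpus = [(["tin", "can"], ["NN", "NN"]), (["can"], ["MD"])]
w = train(corpus)
print(decode(w, ["tin", "can"]), decode(w, ["can"]))
```

The word "can" receives different tags in the two sentences, so the learned weights have to rely on edge features rather than on the unary feature alone.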

Structured Perceptron

Positive:

Very easy to implement

Often achieves respectable results

Like other discriminative techniques, does not make assumptions about the generative process

Additional features can be easily integrated, as long as decoding is tractable

Drawbacks:

“Good” discriminative algorithms should optimize some measure which is closely related to the expected testing results: what the perceptron is doing on non-linearly separable data is not clear

However, for the averaged (voted) version there is a generalization bound which characterizes the generalization properties of the perceptron (Freund & Schapire 98)

Later, we will consider more advanced learning algorithms


Decoding with the Linear model

Decoding: $y = \arg\max_{y' \in Y(x)} w \cdot \varphi(x, y')$

Again a linear model with the following edge features (a generalization of an HMM)

[Figure: chain y(1) – y(2) – y(3) – y(4) – …, with each y(t) connected to x(t)]

In fact, the algorithm does not depend on the features of the input (they do not need to be local)

[Figure: the same chain y(1) – y(2) – y(3) – y(4) – …, with every y(t) connected to the whole input x]

Decoding with the Linear model

Decoding: $y = \arg\max_{y' \in Y(x)} w \cdot \varphi(x, y')$

[Figure: chain y(1) – y(2) – y(3) – y(4) – …, with every y(t) connected to the whole input x]

Let’s change notation:

Edge scores $f_t(y_{t-1}, y_t, x)$: roughly corresponds to $\log a_{y_{t-1}, y_t} + \log b_{y_t, x_t}$

Defined for t = 0 too (“start” feature: $y_0 = \$$)

Start/Stop symbol information ($) can be encoded with them too

Decode: $y = \arg\max_{y' \in Y(x)} \sum_{t=1}^{|x|} f_t(y'_{t-1}, y'_t, x)$

Decoding: a dynamic programming algorithm - Viterbi algorithm

Viterbi algorithm

Decoding: $y = \arg\max_{y' \in Y(x)} \sum_{t=1}^{|x|} f_t(y'_{t-1}, y'_t, x)$

[Figure: chain y(1) – y(2) – y(3) – y(4) – …, with every y(t) connected to the whole input x]

Loop invariant ($t = 1, \ldots, |x|$):

$\mathrm{score}_t[y]$ - score of the highest scoring sequence up to position t with tag y at position t

$\mathrm{prev}_t[y]$ - previous tag on this sequence

Init: $\mathrm{score}_0[\$] = 0$, $\mathrm{score}_0[y] = -\infty$ for other y

Recomputation ($t = 1, \ldots, |x|$):

$\mathrm{prev}_t[y] = \arg\max_{y'} \; \mathrm{score}_{t-1}[y'] + f_t(y', y, x)$

$\mathrm{score}_t[y] = \mathrm{score}_{t-1}[\mathrm{prev}_t[y]] + f_t(\mathrm{prev}_t[y], y, x)$

Return: retrace prev pointers starting from $\arg\max_y \mathrm{score}_{|x|}[y]$

Time complexity? $O(N^2 |x|)$
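The recurrences translate almost line by line into code. The sketch below is one possible rendering, not the seminar's implementation: the edge score is passed in as a function `f(t, prev_tag, tag, x)`, the start symbol is the only entry of score_0 (equivalent to giving every other tag a score of minus infinity), no stop transition is scored, and the hand-set weights are hypothetical.

```python
def viterbi(x, tags, f):
    """Return argmax over y of sum_{t=1..|x|} f(t, y_{t-1}, y_t, x), y_0 = "$"."""
    n = len(x)
    if n == 0:
        return []
    score = [{"$": 0.0}]  # score[0]: only the start symbol is reachable
    prev = [{}]
    for t in range(1, n + 1):
        score.append({})
        prev.append({})
        for y in tags:
            # Best previous tag for ending in y at position t.
            best = max(score[t - 1], key=lambda yp: score[t - 1][yp] + f(t, yp, y, x))
            prev[t][y] = best
            score[t][y] = score[t - 1][best] + f(t, best, y, x)
    # Retrace the prev pointers from the best final tag.
    y = max(score[n], key=score[n].get)
    path = [y]
    for t in range(n, 1, -1):
        y = prev[t][y]
        path.append(y)
    return path[::-1]


# Hypothetical hand-set weights standing in for a learned w.
EDGE = {("$", "MD"): -1.0, ("NN", "MD"): 1.0, ("NN", "NN"): 0.5,
        ("MD", "VB"): 1.0, ("VB", "NN"): 0.5}
UNARY = {("NN", "tin"): 1.0, ("NN", "can"): 0.5, ("MD", "can"): 0.5,
         ("VB", "cause"): 1.0, ("NN", "cause"): 0.5, ("NN", "poisoning"): 1.0}


def edge_score(t, prev_tag, tag, x):
    """f_t(y_{t-1}, y_t, x) as an edge weight plus a unary weight for word t."""
    return EDGE.get((prev_tag, tag), 0.0) + UNARY.get((tag, x[t - 1]), 0.0)


x = ["tin", "can", "cause", "poisoning"]
best_path = viterbi(x, ["NN", "MD", "VB"], edge_score)
print(best_path)
```

The two nested loops over tags at each of the |x| positions give the O(N² |x|) running time, and because `f` receives the whole input x, the features of the input do not need to be local.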


Recap: Sequence Labeling

Hidden Markov Models: how to estimate

Discriminative models: how to learn with the structured perceptron

Both learning algorithms result in a linear model

How to label with the linear models

Discriminative vs Generative

Generative models:

Cheap to estimate: simply normalized counts (not necessarily the case for generative models with latent variables)

Hard to integrate complex features: need to come up with a generative story, and this story may be wrong

Do not result in an optimal classifier when model assumptions are wrong (i.e., always)

Discriminative models:

More expensive to learn: need to run decoding (here, Viterbi) during training, and usually multiple times per example

Easy to integrate features, though some features may make decoding intractable

Usually less accurate on small datasets

Reminders

Speakers: slides about a week before the talk; meetings with me before/after this point will normally be needed

Reviewers: reviews are accepted only before the day we consider the topic

Everyone: references to the papers to read are at GoogleDocs

These slides (and previous ones) will be online today

Speakers: send me the last version of your slides too

Next time: Lea about Models of Parsing, PCFGs vs general WCFGs (Michael Collins’ book chapter)