Parallel Programming

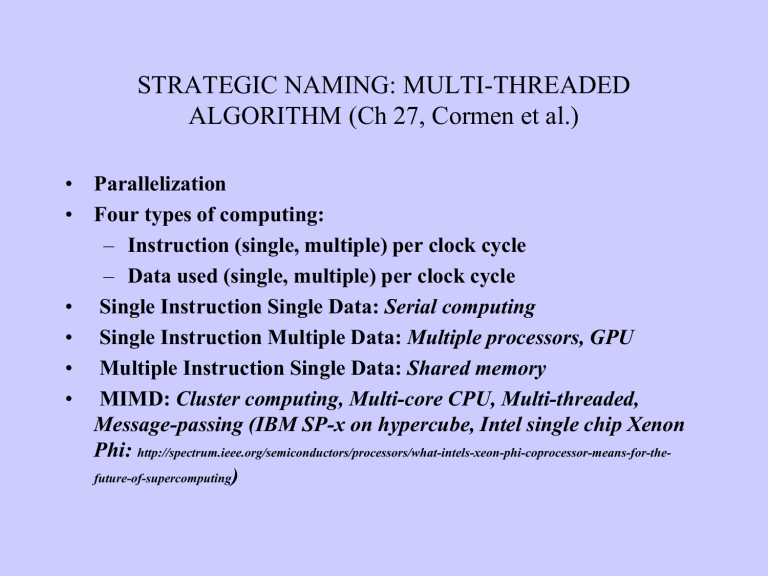

STRATEGIC NAMING: MULTI-THREADED ALGORITHM (Ch 27, Cormen et al.)

• Parallelization

• Four types of computing (Flynn's taxonomy), classified by:

– Instructions (single or multiple) per clock cycle

– Data (single or multiple) per clock cycle

• Single Instruction Single Data: Serial computing

• Single Instruction Multiple Data: vector/array processors, GPU

• Multiple Instruction Single Data: rare in practice (e.g., redundant fault-tolerant systems that run different programs on the same data)

• MIMD (Multiple Instruction Multiple Data): cluster computing, multi-core CPUs, multi-threading, message passing (IBM SP-x on hypercube; Intel's single-chip Xeon Phi: http://spectrum.ieee.org/semiconductors/processors/what-intels-xeon-phi-coprocessor-means-for-thefuture-of-supercomputing)

Grid Computing & Cloud

• Not necessarily parallel

• Primary focus is the utilization of CPU cycles across networked machines

• Just networked CPUs, but a middle-layer software makes the use of individual nodes transparent to the user

• A major focus: avoid data transfer by running code where the data reside

• Another focus: load balancing

• Message passing parallelization is possible: MPI, PVM, etc.

• Community-specific grids: CERN, Bio-grid, cardiovascular grid, etc.

• Cloud: focused on data archiving, but really a commercial version of the Grid; selling CPU utilization is still under-sold but growing, so expect the service-oriented software business model to pick up

Memory (RAM) Utilization

• Two types are feasible: shared memory and distributed local memory

• Shared memory:

– Fast, possibly on-chip; no message-passing time, and no dependency on a ‘pipe’ and its possible failure

– But consistency must be controlled explicitly (e.g., with locks), which may cause deadlock; that in turn needs a deadlock detection-and-breaking mechanism, which adds overhead

• Distributed local memory:

– Communication overhead

– ‘Pipe’ failure is a practical problem

– Good model when threads are independent of each other

– Most general model for parallelization

– Easy to code, with a well-established library (MPI)

– Scaling up is easy, from on-chip to across the globe
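A minimal Python sketch contrasting the two memory models by summing a list in chunks. The function names are hypothetical. The message-passing version only simulates distributed memory inside one process using `queue.Queue`; a real cluster would use a library such as MPI. CPython's global interpreter lock (GIL) keeps these threads from executing Python code truly in parallel, so the sketch shows the coordination pattern, not a speedup.

```python
import queue
import threading


def shared_memory_sum(chunks):
    """Shared memory: every thread updates one shared total; a lock keeps it consistent."""
    total = 0
    lock = threading.Lock()

    def worker(chunk):
        nonlocal total
        partial = sum(chunk)      # private work, nothing shared yet
        with lock:                # explicit consistency control on the shared variable
            total += partial

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


def message_passing_sum(chunks):
    """Distributed-memory style (simulated): each worker keeps its data private
    and 'sends' its partial sum as a message; no variable is shared."""
    mailbox = queue.Queue()

    def worker(chunk):
        mailbox.put(sum(chunk))   # send one message

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # receive exactly one message per worker
    return sum(mailbox.get() for _ in chunks)


if __name__ == "__main__":
    data = list(range(1, 101))
    chunks = [data[i:i + 25] for i in range(0, len(data), 25)]
    print(shared_memory_sum(chunks), message_passing_sum(chunks))  # 5050 5050
```

The lock is the "explicit consistency control" from the shared-memory bullet; the queue plays the role of the ‘pipe’, which in a distributed setting is where communication overhead and link failure come in.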

Threading Types

• Two types are feasible:

– Static threading: the OS controls the threads; typical for single-core CPUs (why would one do it there? The OS itself needs it to interleave tasks), but multi-core CPUs also use it when the compiler guarantees safe execution

– Dynamic threading: the program controls threads explicitly, creating and destroying them as needed; this is the parallel computing model
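A rough illustration of the two styles as characterized above, using ordinary Python threads and assuming the tasks are independent zero-argument callables. `run_static` (a hypothetical name) creates a fixed set of workers up front that live for the whole run; `run_dynamic` creates one thread per task when needed and joins it when the task is done. It sketches the distinction only; it is not how an OS or a concurrency platform actually schedules threads.

```python
import queue
import threading


def run_static(tasks, num_threads=4):
    """Static style: a fixed pool of threads, created once, pulls tasks from a queue."""
    work = queue.Queue()
    for i, task in enumerate(tasks):
        work.put((i, task))
    results = [None] * len(tasks)

    def worker():
        while True:
            try:
                i, task = work.get_nowait()
            except queue.Empty:
                return            # no work left: this long-lived thread exits
            results[i] = task()

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


def run_dynamic(tasks):
    """Dynamic style: the program creates a thread per task and joins (destroys) it after."""
    results = [None] * len(tasks)

    def run_one(i, task):
        results[i] = task()

    threads = []
    for i, task in enumerate(tasks):
        t = threading.Thread(target=run_one, args=(i, task))
        t.start()                 # created only when this task needs it
        threads.append(t)
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    squares = [lambda k=k: k * k for k in range(8)]
    print(run_static(squares), run_dynamic(squares))
```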

Multi-threaded Fibonacci Recursive

    Fib(n)
        if n <= 1 then return n
        else
            x = Fib(n-1)
            y = Fib(n-2)
            return x + y

Complexity: $O(G^n)$, where $G \approx 1.618$ is the golden ratio
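The serial Fib(n) transcribed into Python as a minimal sketch. The helper `count_calls` is an illustrative addition that counts recursive calls; the count equals 2·Fib(n+1) − 1, so it grows by a factor of about G ≈ 1.618 each time n increases by one, which is where the $O(G^n)$ bound comes from.

```python
def fib(n):
    """Serial recursive Fibonacci, following Fib(n) step by step."""
    if n <= 1:
        return n
    x = fib(n - 1)
    y = fib(n - 2)
    return x + y


def count_calls(n):
    """Number of calls made by fib(n), including the top-level call."""
    if n <= 1:
        return 1
    return 1 + count_calls(n - 1) + count_calls(n - 2)


if __name__ == "__main__":
    for n in (10, 15, 20):
        # the ratio of successive call counts approaches G ~ 1.618
        print(n, fib(n), count_calls(n), count_calls(n) / count_calls(n - 1))
```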

Fibonacci Recursive

    Fib(n)
        if n <= 1 then return n
        else
            x = spawn Fib(n-1)
            y = Fib(n-2)
            sync
            return x + y

Parallel execution of a spawned thread is optional: the scheduler decides (programmer, script translator, compiler, or OS)
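A minimal sketch of the spawn/sync version in Python, assuming one `threading.Thread` per spawn is an acceptable stand-in for a real concurrency platform such as Cilk. `p_fib`, `fib_serial`, and the `cutoff` parameter are illustrative names, not part of the pseudocode. Whether the child actually runs in parallel is left to the OS scheduler, and in CPython the GIL serializes the arithmetic anyway, so this shows the control structure rather than a speedup.

```python
import threading


def fib_serial(n):
    """Plain recursive Fib(n), used for small subproblems."""
    if n <= 1:
        return n
    return fib_serial(n - 1) + fib_serial(n - 2)


def p_fib(n, cutoff=10):
    """Fib(n) with 'spawn' and 'sync' mapped onto threading.Thread.

    Subproblems below `cutoff` (an assumed tuning knob) run serially,
    because a thread per tiny call costs more than the work it does.
    """
    if n <= 1:
        return n
    if n < cutoff:
        return fib_serial(n)

    result = {}

    def child():
        result["x"] = p_fib(n - 1, cutoff)   # x = spawn Fib(n-1)

    t = threading.Thread(target=child)
    t.start()                    # spawn: the child MAY run in parallel
    y = p_fib(n - 2, cutoff)     # parent continues with y = Fib(n-2)
    t.join()                     # sync: wait for the spawned child
    return result["x"] + y


if __name__ == "__main__":
    print(p_fib(20))             # 6765, same as the serial version
```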

Each spawn or data-collection node counts as 1 time unit

This is message passing

Note: GPU/SIMD uses a different model: each thread does the same work (the kernel), and data goes to shared memory

• The ideal time of GPU-type parallelization is roughly the critical-path length

• The more balanced the tree, the shorter the critical path
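To make the critical-path point concrete, a small sketch (function names are illustrative) that adds n numbers two ways and reports the depth of the resulting tree. Both versions perform the same n − 1 additions, i.e. the same total work, but the left-deep chain has a critical path of n − 1 steps, while the balanced tree needs only about log2 n levels, assuming one processor per addition within a level.

```python
def chain_sum(values):
    """Left-deep tree ((v0+v1)+v2)+...: each addition waits for the previous one."""
    total, depth = values[0], 0
    for v in values[1:]:
        total += v
        depth += 1                  # one more step on the critical path
    return total, depth


def tree_sum(values):
    """Balanced tree: additions within one level are independent,
    so each level costs one time unit given enough processors."""
    level, depth = list(values), 0
    while len(level) > 1:
        pairs = [level[i] + level[i + 1] for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:          # an odd element passes through unchanged
            pairs.append(level[-1])
        level, depth = pairs, depth + 1
    return level[0], depth


if __name__ == "__main__":
    data = list(range(1, 1001))
    print(chain_sum(data))          # (500500, 999)
    print(tree_sum(data))           # (500500, 10)
```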

Terminology/Concepts

• For P available processors: $T_\infty$, $T_P$, $T_1$: running times ranging from no limit on processors down to a single serial processor

• Ideal parallelization: $T_P = T_1 / P$

• Real situation: $T_P \ge T_1 / P$

• $T_\infty$ is the theoretical minimum feasible time, so $T_P \ge T_\infty$

• Speedup factor $= T_1 / T_P$

• $T_1 / T_P \le P$

• Linear speedup: $T_1 / T_P = \Theta(P)$ [e.g., $P/2$]

• Perfect linear speedup: $T_1 / T_P = P$

• My preferred factor would be $T_P / T_1$ (inverse speedup: slowdown factor?)

– linear $O(P)$; quadratic $O(P^2)$, …, exponential $O(k^P)$, $k > 1$
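The two lower bounds above, $T_P \ge T_1/P$ and $T_P \ge T_\infty$, combine into a quick calculator. This is a sketch with hypothetical function names and made-up example numbers; it bounds what any schedule could achieve and does not predict a measured time.

```python
def tp_lower_bound(t1, t_inf, p):
    """Best possible T_P: at least T_1/P (work) and at least T_inf (critical path)."""
    return max(t1 / p, t_inf)


def max_speedup(t1, t_inf, p):
    """Upper bound on the speedup T_1/T_P, i.e. min(P, T_1/T_inf)."""
    return t1 / tp_lower_bound(t1, t_inf, p)


if __name__ == "__main__":
    # Example: T_1 = 1000 units of work, T_inf = 50
    for p in (4, 20, 100):
        print(p, tp_lower_bound(1000, 50, p), max_speedup(1000, 50, p))
```

With 4 processors perfect linear speedup (4) is still possible; from 20 processors on, $T_\infty$ dominates and the speedup cannot exceed 20 no matter how many processors are added.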


• Parallelism factor: $T_1 / T_\infty$

– serial time divided by ideal parallelized time

– note: this is a property of your algorithm, not optimized for the actual configuration available to you

• $T_1 / T_\infty < P$ implies perfect linear speedup is NOT achievable (since $T_P \ge T_\infty$ gives $T_1/T_P \le T_1/T_\infty < P$)

• $T_1 / T_\infty \ll P$ implies processors are underutilized

• We want to be close to P: $T_1 / T_\infty \to P$, in the limit

• Slackness factor: $(T_1 / T_\infty) / P$, or equivalently $T_1 / (P \, T_\infty)$

• We want slackness $\to 1$, the minimum feasible

– i.e., we want no slack
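Applying these definitions to the multi-threaded Fib(n), as a sketch under a simplifying assumption: every call node costs one time unit, as in the computation tree for Fib. `work` counts all nodes ($T_1$), `span` follows the longest chain of dependent calls ($T_\infty$), and parallelism and slackness follow from the formulas above. All function names are illustrative.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def work(n):
    """T_1 for Fib(n): total number of call nodes, one unit each."""
    if n <= 1:
        return 1
    return work(n - 1) + work(n - 2) + 1


@lru_cache(maxsize=None)
def span(n):
    """T_inf for Fib(n): the spawned Fib(n-1) runs alongside Fib(n-2),
    so only the longer of the two lies on the critical path."""
    if n <= 1:
        return 1
    return max(span(n - 1), span(n - 2)) + 1


def parallelism(n):
    """T_1 / T_inf."""
    return work(n) / span(n)


def slackness(n, p):
    """(T_1 / T_inf) / P."""
    return parallelism(n) / p


if __name__ == "__main__":
    for n in (10, 20, 30):
        print(n, work(n), span(n), round(parallelism(n), 1), round(slackness(n, 8), 1))
```

The span grows only linearly in n while the work grows like $G^n$, so the parallelism of Fib(n) quickly exceeds any realistic P.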