Lecture7

advertisement

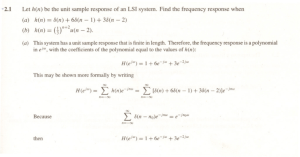

Decimation-in-frequency FFT algorithm

The decimation-in-time FFT algorithms are all based on

structuring the DFT computation by forming smaller and smaller

subsequences of the input sequence x[n]. Alternatively, we can

consider dividing the output sequence X[k] into smaller and

smaller subsequences in the same manner.

$$X[k] = \sum_{n=0}^{N-1} x[n]\, W_N^{nk}, \qquad k = 0, 1, \dots, N-1$$

The even-numbered frequency samples are

$$\begin{aligned}
X[2r] &= \sum_{n=0}^{N-1} x[n]\, W_N^{n(2r)} \\
&= \sum_{n=0}^{(N/2)-1} x[n]\, W_N^{n(2r)} + \sum_{n=N/2}^{N-1} x[n]\, W_N^{n(2r)} \\
&= \sum_{n=0}^{(N/2)-1} x[n]\, W_N^{2nr} + \sum_{n=0}^{(N/2)-1} x[n+(N/2)]\, W_N^{2r(n+(N/2))}
\end{aligned}$$

Since

$$W_N^{2r[n+(N/2)]} = W_N^{2rn}\, W_N^{rN} = W_N^{2rn} \qquad \text{and} \qquad W_N^{2} = W_{N/2}$$

we obtain

$$X[2r] = \sum_{n=0}^{(N/2)-1} \big(x[n] + x[n+(N/2)]\big)\, W_{N/2}^{rn}, \qquad r = 0, 1, \dots, (N/2)-1$$

The above equation is the (N/2)-point DFT of the (N/2)-point

sequence obtained by adding the first and the last half of the

input sequence.

Adding the two halves of the input sequence represents time

aliasing, consistent with the fact that in computing only the even-numbered frequency samples, we are sub-sampling the Fourier

transform of x[n].

We now consider obtaining the odd-numbered frequency points:

$$X[2r+1] = \sum_{n=0}^{N-1} x[n]\, W_N^{n(2r+1)} = \sum_{n=0}^{(N/2)-1} x[n]\, W_N^{n(2r+1)} + \sum_{n=N/2}^{N-1} x[n]\, W_N^{n(2r+1)}$$

Since

$$\begin{aligned}
\sum_{n=N/2}^{N-1} x[n]\, W_N^{n(2r+1)} &= \sum_{n=0}^{(N/2)-1} x[n+(N/2)]\, W_N^{(n+N/2)(2r+1)} \\
&= W_N^{(N/2)(2r+1)} \sum_{n=0}^{(N/2)-1} x[n+(N/2)]\, W_N^{n(2r+1)} \\
&= -\sum_{n=0}^{(N/2)-1} x[n+(N/2)]\, W_N^{n(2r+1)}
\end{aligned}$$

where the last step uses $W_N^{(N/2)(2r+1)} = W_N^{rN}\, W_N^{N/2} = -1$.

We obtain

$$\begin{aligned}
X[2r+1] &= \sum_{n=0}^{(N/2)-1} \big(x[n] - x[n+N/2]\big)\, W_N^{n(2r+1)} \\
&= \sum_{n=0}^{(N/2)-1} \big(x[n] - x[n+N/2]\big)\, W_N^{n}\, W_{N/2}^{nr}, \qquad r = 0, 1, \dots, (N/2)-1
\end{aligned}$$

The above equation is the (N/2)-point DFT of the sequence

obtained by subtracting the second half of the input sequence

from the first half and multiplying the resulting sequence by

$W_N^n$.

Let g[n] = x[n] + x[n+N/2] and h[n] = x[n] − x[n+N/2]. The DFT can be computed by forming the sequences g[n] and h[n], then computing $h[n]\,W_N^n$, and finally computing the (N/2)-point DFTs of g[n] and $h[n]\,W_N^n$.
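
The decomposition above can be sketched as a recursive routine. This is a minimal illustration, assuming N is a power of 2; the function name `fft_dif` is hypothetical and the recursion is written for clarity rather than speed.

```python
import numpy as np

def fft_dif(x):
    """Radix-2 decimation-in-frequency FFT sketch (length must be a power of 2)."""
    x = np.asarray(x, dtype=complex)
    N = x.size
    if N == 1:
        return x.copy()
    if N % 2:
        raise ValueError("length must be a power of 2")
    half = N // 2
    n = np.arange(half)
    # g[n] = x[n] + x[n + N/2]  feeds the even-numbered outputs X[2r]
    g = x[:half] + x[half:]
    # (x[n] - x[n + N/2]) * W_N^n  feeds the odd-numbered outputs X[2r+1]
    h = (x[:half] - x[half:]) * np.exp(-2j * np.pi * n / N)
    X = np.empty(N, dtype=complex)
    X[0::2] = fft_dif(g)
    X[1::2] = fft_dif(h)
    return X
```

Comparing the result with `np.fft.fft` on a random length-16 input is a quick sanity check.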

Flow graph of decimation-in-frequency decomposition of an N-point DFT (N=8).

Recursively, we can further decompose the (N/2)-point DFT

into smaller substructures:

Finally, we have

Butterfly structure for decimation-in-frequency FFT algorithm:

The decimation-in-frequency FFT algorithm also has a computational complexity of $O(N \log_2 N)$.

Chirp Transform Algorithm (CTA)

This algorithm is not optimal in minimizing any measure of

computational complexity, but it has been used to compute any

set of equally spaced samples of the DTFT on the unit circle.

To derive the CTA, we let x[n] denote an N-point sequence and $X(e^{j\omega})$ its DTFT. We consider the evaluation of M samples of $X(e^{j\omega})$ that are equally spaced in angle on the unit circle, at frequencies

$$\omega_k = \omega_0 + k\,\Delta\omega, \qquad k = 0, 1, \dots, M-1, \quad \Delta\omega = 2\pi/M$$

When $\omega_0 = 0$ and $M = N$, we obtain the DFT as a special case.

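The DTFT values evaluated at $\omega_k$ are

$$X(e^{j\omega_k}) = \sum_{n=0}^{N-1} x[n]\, e^{-j\omega_k n}, \qquad k = 0, 1, \dots, M-1$$

With W defined as

$$W = e^{-j\Delta\omega}$$

we have

$$X(e^{j\omega_k}) = \sum_{n=0}^{N-1} x[n]\, e^{-j\omega_0 n}\, W^{nk}, \qquad k = 0, 1, \dots, M-1$$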

The chirp transform represents $X(e^{j\omega_k})$ as a convolution. To achieve this, we represent nk as

$$nk = \tfrac{1}{2}\left[n^2 + k^2 - (k-n)^2\right]$$
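
The identity for nk follows from expanding the square; the short derivation below is added only as a check and introduces no new symbols.

```latex
\begin{aligned}
(k-n)^2 &= k^2 - 2nk + n^2 \\
n^2 + k^2 - (k-n)^2 &= 2nk \\
\Rightarrow\quad nk &= \tfrac{1}{2}\left[n^2 + k^2 - (k-n)^2\right]
\end{aligned}
```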

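Then, the DTFT value evaluated at $\omega_k$ is

$$X(e^{j\omega_k}) = \sum_{n=0}^{N-1} x[n]\, e^{-j\omega_0 n}\, W^{n^2/2}\, W^{k^2/2}\, W^{-(k-n)^2/2}$$

Letting

$$g[n] = x[n]\, e^{-j\omega_0 n}\, W^{n^2/2}$$

we can then write

$$X(e^{j\omega_k}) = W^{k^2/2} \sum_{n=0}^{N-1} g[n]\, W^{-(k-n)^2/2}, \qquad k = 0, 1, \dots, M-1$$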

To interpret the above equation, we obtain more familiar notation

by replacing k by n and n by k:

$$X(e^{j\omega_n}) = W^{n^2/2} \sum_{k=0}^{N-1} g[k]\, W^{-(n-k)^2/2}, \qquad n = 0, 1, \dots, M-1$$

$X(e^{j\omega_n})$ corresponds to the convolution of the sequence g[n] with the sequence $W^{-n^2/2}$, followed by multiplication by $W^{n^2/2}$.

The block diagram of the chirp transform algorithm is

Since only the outputs for n = 0, 1, …, M−1 are required, let h[n] be the following impulse response of finite length (FIR filter):

$$h[n] = \begin{cases} W^{-n^2/2}, & -(N-1) \le n \le M-1 \\ 0, & \text{otherwise} \end{cases}$$

Then

$$X(e^{j\omega_n}) = W^{n^2/2}\,\big(g[n] * h[n]\big), \qquad n = 0, 1, \dots, M-1$$

The block diagram of the chirp transform algorithm for FIR is

Then the output y[n] satisfies

$$X(e^{j\omega_n}) = y[n], \qquad n = 0, 1, \dots, M-1$$

Evaluating frequency responses using the procedure of chirp

transform has a number of potential advantages:

We do not require N = M as in the FFT algorithms, and neither N nor M need be composite numbers. Hence the frequency values can be evaluated in a more flexible manner.

The convolution involved in the chirp transform can still be implemented efficiently using an FFT algorithm. The FFT size must be no smaller than (M+N−1). It can be chosen, for example, to be an appropriate power of 2.
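
The procedure can be sketched in NumPy as follows. This is an illustrative sketch, not a reference implementation: the function name `chirp_transform` and its parameters are assumptions, the frequency step `dw` is passed in explicitly rather than fixed, and the filter h[n] is stored delayed by N−1 samples so that array indices start at 0.

```python
import numpy as np

def chirp_transform(x, M, w0, dw):
    """Samples X(e^{j w_k}), w_k = w0 + k*dw, k = 0..M-1, via FFT-based convolution."""
    x = np.asarray(x, dtype=complex)
    N = x.size
    n = np.arange(N)
    k = np.arange(M)
    # g[n] = x[n] e^{-j w0 n} W^{n^2/2}, with W = e^{-j dw}
    g = x * np.exp(-1j * w0 * n) * np.exp(-1j * dw * n**2 / 2)
    # h[m] = W^{-m^2/2} for -(N-1) <= m <= M-1 (stored with a delay of N-1)
    m = np.arange(-(N - 1), M)
    h = np.exp(1j * dw * m**2 / 2)
    # FFT size: smallest power of 2 that is at least M + N - 1
    L = 1
    while L < M + N - 1:
        L *= 2
    y = np.fft.ifft(np.fft.fft(g, L) * np.fft.fft(h, L))
    # Because of the stored delay, the wanted outputs sit at indices N-1 .. N+M-2
    conv = y[N - 1 : N - 1 + M]
    return np.exp(-1j * dw * k**2 / 2) * conv
```

With `w0 = 0`, `M = N`, and `dw = 2*np.pi/N`, the output should agree with `np.fft.fft(x)`; other choices can be checked against a direct evaluation of the DTFT sum.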

In the above, the FIR filter h[n] is non-causal. For certain real-time implementations it must be modified to obtain a causal system. Since h[n] is of finite duration, this modification is easily accomplished by delaying h[n] by (N−1) to obtain a causal impulse response:

$$h_1[n] = \begin{cases} W^{-(n-N+1)^2/2}, & n = 0, 1, \dots, M+N-2 \\ 0, & \text{otherwise} \end{cases}$$

and the DTFT values are

$$X(e^{j\omega_n}) = y_1[n+N-1], \qquad n = 0, 1, \dots, M-1$$

In hardware implementations, a fixed and pre-specified causal FIR filter can be realized by certain technologies, such as charge-coupled devices (CCD) and surface acoustic wave (SAW) devices.

Two-dimensional Transform Revisited

(c.f. Fundamentals of Digital Image Processing, A. K. Jain, Prentice

Hall, 1989)

One-dimensional orthogonal (unitary) transforms

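$$v = Au \quad\Longleftrightarrow\quad v[k] = \sum_{n=0}^{N-1} a_{k,n}\, u[n], \qquad 0 \le k \le N-1$$

$$u = A^{*T} v = A^{H} v \quad\Longleftrightarrow\quad u[n] = \sum_{k=0}^{N-1} a^{*}_{k,n}\, v[k], \qquad 0 \le n \le N-1$$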

where $A^{*T} = A^{-1}$, i.e., $AA^H = A^HA = I$. That is, the columns of $A^H$ form an orthonormal basis, and so do the columns of A.

The vectors $a_k^* \triangleq \{a_{k,n}^*,\ 0 \le n \le N-1\}$ are called the basis vectors of A. The series coefficients v[k] give a representation of the original sequence u[n], and are useful in filtering, data compression, feature extraction, and other analysis.
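
As a small illustration of a 1-D unitary transform pair, the sketch below uses the unitary DFT matrix as an example choice of A; that choice and the variable names are assumptions made only for this example.

```python
import numpy as np

N = 8
k = np.arange(N).reshape(-1, 1)
n = np.arange(N).reshape(1, -1)
# Example unitary matrix: a_{k,n} = exp(-j 2 pi k n / N) / sqrt(N)
A = np.exp(-2j * np.pi * k * n / N) / np.sqrt(N)

u = np.arange(N, dtype=float)   # an arbitrary input sequence
v = A @ u                       # forward transform  v = A u
u_rec = A.conj().T @ v          # inverse transform  u = A^H v

unitary_ok = np.allclose(A @ A.conj().T, np.eye(N))
energy_ok = np.isclose(np.sum(np.abs(u) ** 2), np.sum(np.abs(v) ** 2))
```

Because A is unitary, the inverse needs no matrix inversion and the transform preserves signal energy.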

Two-dimensional orthogonal (unitary) transforms

Let {u[m,n]} be an N×N image.

$$v[k,l] = \sum_{m=0}^{N-1}\sum_{n=0}^{N-1} u[m,n]\, a_{k,l}[m,n], \qquad 0 \le k, l \le N-1$$

$$u[m,n] = \sum_{k=0}^{N-1}\sum_{l=0}^{N-1} v[k,l]\, a^{*}_{k,l}[m,n], \qquad 0 \le m, n \le N-1$$

where $\{a_{k,l}[m,n]\}$, called an image transform, is a set of complete orthonormal discrete basis functions satisfying the properties:

Orthonormality:

$$\sum_{m=0}^{N-1}\sum_{n=0}^{N-1} a_{k,l}[m,n]\, a^{*}_{k',l'}[m,n] = \delta[k-k',\, l-l']$$

Completeness:

$$\sum_{k=0}^{N-1}\sum_{l=0}^{N-1} a_{k,l}[m,n]\, a^{*}_{k,l}[m',n'] = \delta[m-m',\, n-n']$$

where $\delta[a,b]$ is the 2D delta function, which is one only when a = b = 0, and is zero otherwise.

V = {v[k,l]} is called the transformed image.

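The orthonormality property assures that, for any truncated series expansion of the form

$$u_{P,Q}[m,n] = \sum_{k=0}^{P-1}\sum_{l=0}^{Q-1} v'[k,l]\, a^{*}_{k,l}[m,n], \qquad P \le N,\ Q \le N$$

the sum-of-squares error between $u[m,n]$ and $u_{P,Q}[m,n]$ is minimized when the coefficients are chosen as

$$v'[k,l] = v[k,l]$$

When P = Q = N, the minimized error is zero.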

Separable Unitary Transforms

The number of multiplications and additions required to compute the transform coefficients v[k,l] is $O(N^4)$, which is quite excessive.

The complexity can be reduced to $O(N^3)$ when the transform is restricted to be separable.

A transform $\{a_{k,l}[m,n]\}$ is separable iff for all $0 \le k, l, m, n \le N-1$ it can be decomposed as follows:

$$a_{k,l}[m,n] = a_k[m]\, b_l[n]$$

where $A \triangleq \{a[k,m]\}$ and $B \triangleq \{b[l,n]\}$ should be unitary matrices themselves, i.e., $AA^H = A^HA = I$ and $BB^H = B^HB = I$.

Often one chooses B to be the same as A, so that

$$v[k,l] = \sum_{m=0}^{N-1}\sum_{n=0}^{N-1} a_k[m]\, u[m,n]\, a_l[n]$$

$$u[m,n] = \sum_{k=0}^{N-1}\sum_{l=0}^{N-1} a^{*}_k[m]\, v[k,l]\, a^{*}_l[n]$$

Hence, we can write the transform as

$$V = AUA^{T}, \qquad U = A^{*T} V A^{*}$$

where V = {v[k,l]} and U = {u[m,n]}.

A more general form: for an M×N rectangular image, the transform pair is

$$V = A_M U A_N^{T}, \qquad U = A_M^{*T} V A_N^{*}$$

where $A_M$ and $A_N$ are M×M and N×N unitary matrices, respectively.

These are called two-dimensional separable transforms. The complexity of computing the coefficient image is $O(N^3)$.

The computation can be decomposed as computing $T = UA^T$ first, and then computing $V = AT$ (for an N×N image).

Computing $T = UA^T$ requires $N^2$ inner products (of N-point vectors). Each inner product requires N operations, for a total of $O(N^3)$.

Similarly, $V = AT$ also requires $O(N^3)$ operations, so overall we need $O(N^3)$ to compute V.

A closer look at $T = UA^T$:

Let the rows of U be $\{U_1, U_2, \dots, U_N\}$. Then

$$T = UA^T = [U_1^T, U_2^T, \dots, U_N^T]^T A^T = [(U_1A^T)^T, (U_2A^T)^T, \dots, (U_NA^T)^T]^T$$

Note that each $U_iA^T$ (i = 1, …, N) is a one-dimensional unitary transform. That is, this step performs N one-dimensional transforms on the rows of the image U, obtaining a temporary image T.

Then, the step V = AT performs N 1-D unitary transforms on the columns of T.

In total, 2N 1-D transforms are performed. Each 1-D transform costs $O(N^2)$.
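
The row-then-column procedure can be sketched as below. The unitary matrix A is drawn at random through a QR factorization purely for illustration; this choice, the image size, and the variable names are assumptions of the example.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 6
# An arbitrary unitary matrix A (Q factor of a random complex matrix)
A, _ = np.linalg.qr(rng.standard_normal((N, N)) + 1j * rng.standard_normal((N, N)))
U = rng.standard_normal((N, N))            # the "image"

# Step 1: N one-dimensional transforms on the rows of U, giving T = U A^T
T = np.stack([A @ U[i, :] for i in range(N)])
# Step 2: N one-dimensional transforms on the columns of T, giving V = A T
V = np.stack([A @ T[:, j] for j in range(N)], axis=1)

# Inverse: U = A^{*T} V A^{*}
U_rec = A.conj().T @ V @ A.conj()
```

The two loops make the 2N one-dimensional transforms explicit; in practice the same result is obtained with the matrix product `A @ U @ A.T`.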

Remember that the two-dimensional DFT is

$$v[k,l] = \frac{1}{N}\sum_{m=0}^{N-1}\sum_{n=0}^{N-1} u[m,n]\, W_N^{km}\, W_N^{ln}$$

$$u[m,n] = \frac{1}{N}\sum_{k=0}^{N-1}\sum_{l=0}^{N-1} v[k,l]\, W_N^{-mk}\, W_N^{-nl}$$

where $W_N = e^{-j(2\pi/N)}$.

The 2D DFT is separable, and so it can be represented as

$$V = FUF$$

where F is the N×N matrix whose element in the k-th row and n-th column is

$$F = \left\{ \frac{1}{\sqrt{N}}\, W_N^{kn} \right\}, \qquad 0 \le k, n \le N-1$$

Fast computation of two-dimensional DFT:

According to V = FUF, it can be decomposed as the computation of 2N 1-D DFTs.

Each 1-D DFT requires $N \log_2 N$ computations.

So, the 2-D DFT can be efficiently implemented with a time complexity of $O(N^2 \log_2 N)$.
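
A brief numerical check of this decomposition is sketched below, assuming the unitary scaling in which F carries a factor of $1/\sqrt{N}$ (so the 2-D transform carries 1/N); the variable names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 8
idx = np.arange(N)
# Unitary DFT matrix: F[k, n] = W_N^{kn} / sqrt(N), with W_N = exp(-j 2 pi / N)
F = np.exp(-2j * np.pi * np.outer(idx, idx) / N) / np.sqrt(N)
U = rng.standard_normal((N, N))

# Matrix form: V = F U F
V_matrix = F @ U @ F
# Fast form: 1-D FFTs along the rows, then along the columns (2N FFTs in total)
V_fast = np.fft.fft(np.fft.fft(U, axis=1), axis=0) / N
```

Both results should also agree with `np.fft.fft2(U) / N`, since NumPy's forward FFT is unscaled.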

The 2-D DFT inherits many properties of the 1-D DFT (e.g., conjugate symmetry, shifting, scaling, convolution, etc.). A property that does not come from the 1-D DFT is the rotation property.

Rotation property: if we represent (m,n) and (k,l) in polar coordinates,

$$(m,n) = (r\cos\theta,\ r\sin\theta) \quad \text{and} \quad (k,l) = (\omega\cos\phi,\ \omega\sin\phi)$$

then

$$u[r,\ \theta + \theta_0] \ \overset{\text{DFT}}{\longleftrightarrow}\ v[\omega,\ \phi + \theta_0]$$

That is, the rotation of an image implies the rotation of its DFT by the same angle.

Discrete-time Random Signals

(c.f. Oppenheim, et al., 1999)

• Until now, we have assumed that the signals are

deterministic, i.e., each value of a sequence is

uniquely determined.

• In many situations, the processes that generate

signals are so complex as to make precise

description of a signal extremely difficult or

undesirable.

• A random or stochastic signal is considered to be

characterized by a set of probability density

functions.

Stochastic Processes

• Random (or stochastic) process (or signal)

– A random process is an indexed family of random variables characterized by a set of probability distribution functions.

– Consider a sequence x[n], $-\infty < n < \infty$. Each individual sample x[n] is assumed to be an outcome of some underlying random variable $X_n$.

– The difference between a single random variable and a

random process is that for a random variable the

outcome of a random-sampling experiment is mapped

into a number, whereas for a random process the

outcome is mapped into a sequence.

Stochastic Processes (continued)

• Probability density function of x[n]: $p_{x_n}(x_n, n)$

• Joint distribution of x[n] and x[m]: $p_{x_n, x_m}(x_n, n, x_m, m)$

• E.g., $x_1[n] = A_n \cos(\omega n + \phi_n)$, where $A_n$ and $\phi_n$ are random variables for all $-\infty < n < \infty$; then $x_1[n]$ is a random process.

Independence and Stationarity

• x[n] and x[m] are independent iff

$$p_{x_n, x_m}(x_n, n, x_m, m) = p_{x_n}(x_n, n)\; p_{x_m}(x_m, m)$$

• x is a stationary process iff

$$p_{x_{n+k}, x_{m+k}}(x_{n+k}, n+k, x_{m+k}, m+k) = p_{x_n, x_m}(x_n, n, x_m, m)$$

for all k.

• That is, the joint distribution of x[n] and x[m] depends only on the time difference m − n.

Stationarity (continued)

• In particular, when m = n for a stationary process:

$$p_{x_{n+k}}(x_{n+k}, n+k) = p_{x_n}(x_n, n)$$

This implies that the statistics of x[n] are shift invariant.

Stochastic Processes vs.

Deterministic Signal

• In many of the applications of discrete-time signal

processing, random processes serve as models

for signals, in the sense that a particular signal

can be considered a sample sequence of a

random process.

• Although such signals are unpredictable, which makes a deterministic approach to signal representation inappropriate, certain average properties of the ensemble can be determined, given the probability law of the process.

Expectation

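• Mean (or average)

$$m_{x_n} = \mathcal{E}\{x_n\} = \int_{-\infty}^{\infty} x_n\, p_{x_n}(x_n, n)\, dx_n$$

• $\mathcal{E}\{\cdot\}$ denotes the expectation operator

$$\mathcal{E}\{g(x_n)\} = \int_{-\infty}^{\infty} g(x_n)\, p_{x_n}(x_n, n)\, dx_n$$

• For independent random variables

$$\mathcal{E}\{x_n y_m\} = \mathcal{E}\{x_n\}\, \mathcal{E}\{y_m\}$$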

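Mean Square Value and Variance

• Mean squared value

$$\mathcal{E}\{|x_n|^2\} = \int_{-\infty}^{\infty} |x_n|^2\, p_{x_n}(x_n, n)\, dx_n$$

• Variance

$$\mathrm{var}\{x_n\} = \mathcal{E}\{|x_n - m_{x_n}|^2\}$$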

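Autocorrelation and Autocovariance

• Autocorrelation

$$\phi_{xx}[n,m] = \mathcal{E}\{x_n x_m^*\} = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} x_n x_m^*\, p_{x_n, x_m}(x_n, n, x_m, m)\, dx_n\, dx_m$$

• Autocovariance

$$\gamma_{xx}[n,m] = \mathcal{E}\{(x_n - m_{x_n})(x_m - m_{x_m})^*\} = \phi_{xx}[n,m] - m_{x_n} m_{x_m}^*$$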

Stationary Process

• For a stationary process, the autocorrelation depends only on the time difference m − n.

• Thus, for a stationary process, we can write

$$m_x = m_{x_n} = \mathcal{E}\{x_n\}$$

$$\sigma_x^2 = \mathcal{E}\{|x_n - m_x|^2\}$$

• If we denote the time difference by k, we have

$$\phi_{xx}[k] = \phi_{xx}[n+k, n] = \mathcal{E}\{x_{n+k}\, x_n^*\}$$

Wide-sense Stationary

• In many instances, we encounter random

processes that are not stationary in the strict

sense.

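• If the following equations hold, we call the process wide-sense stationary (w.s.s.).

$$m_x = m_{x_n} = \mathcal{E}\{x_n\}$$

$$\sigma_x^2 = \mathcal{E}\{|x_n - m_x|^2\}$$

$$\phi_{xx}[k] = \phi_{xx}[n+k, n] = \mathcal{E}\{x_{n+k}\, x_n^*\}$$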

Time Averages

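• For any single sample sequence x[n], define its time average to be

$$\langle x[n] \rangle = \lim_{L\to\infty} \frac{1}{2L+1} \sum_{n=-L}^{L} x[n]$$

• Similarly, the time-average autocorrelation is

$$\langle x[n+m]\, x^*[n] \rangle = \lim_{L\to\infty} \frac{1}{2L+1} \sum_{n=-L}^{L} x[n+m]\, x^*[n]$$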

Ergodic Process

• A stationary random process for which time averages equal ensemble averages is called an ergodic process:

$$\langle x[n] \rangle = m_x$$

$$\langle x[n+m]\, x^*[n] \rangle = \phi_{xx}[m]$$

Ergodic Process (continued)

• It is common to assume that a given sequence is a sample sequence of an ergodic random process, so that averages can be computed from a single sequence.

In practice, we cannot compute with the limits, but instead compute the quantities

$$\hat{m}_x = \frac{1}{L} \sum_{n=0}^{L-1} x[n]$$

$$\hat{\sigma}_x^2 = \frac{1}{L} \sum_{n=0}^{L-1} |x[n] - \hat{m}_x|^2$$

$$\langle x[n+m]\, x^*[n] \rangle_L = \frac{1}{L} \sum_{n=0}^{L-1} x[n+m]\, x^*[n]$$

Similar quantities are often computed as estimates of the mean, variance, and autocorrelation.
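
These finite-length estimates can be sketched as follows. The function name `estimate_stats` is hypothetical, and one assumption is made explicit: samples of x[n+m] that fall outside the observed record are treated as zero, so the autocorrelation sum runs over the available overlap only while still dividing by L.

```python
import numpy as np

def estimate_stats(x, max_lag):
    """Sample mean, sample variance, and autocorrelation estimates for lags 0..max_lag."""
    x = np.asarray(x)
    L = x.size
    m_hat = x.mean()                                   # (1/L) sum x[n]
    var_hat = np.mean(np.abs(x - m_hat) ** 2)          # (1/L) sum |x[n] - m_hat|^2
    # (1/L) sum x[n+m] x*[n], using only the samples inside the record
    phi_hat = np.array([
        np.sum(x[m:] * np.conj(x[:L - m])) / L
        for m in range(max_lag + 1)
    ])
    return m_hat, var_hat, phi_hat
```

For a long record of independent samples with mean 2 and unit variance, one would expect estimates near 2 for the mean, 1 for the variance, 5 for the lag-0 autocorrelation, and 4 for the other lags.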

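Properties of correlation and covariance sequences

$$\phi_{xx}[m] = \mathcal{E}\{x_{n+m}\, x_n^*\}$$

$$\gamma_{xx}[m] = \mathcal{E}\{(x_{n+m} - m_x)(x_n - m_x)^*\}$$

$$\phi_{xy}[m] = \mathcal{E}\{x_{n+m}\, y_n^*\}$$

$$\gamma_{xy}[m] = \mathcal{E}\{(x_{n+m} - m_x)(y_n - m_y)^*\}$$

• Property 1:

$$\gamma_{xx}[m] = \phi_{xx}[m] - |m_x|^2$$

$$\gamma_{xy}[m] = \phi_{xy}[m] - m_x m_y^*$$

• Property 2:

$$\phi_{xx}[0] = \mathcal{E}\{|x_n|^2\} = \text{mean squared value}$$

$$\gamma_{xx}[0] = \sigma_x^2 = \text{variance}$$

• Property 3:

$$\phi_{xx}[-m] = \phi_{xx}^*[m], \qquad \gamma_{xx}[-m] = \gamma_{xx}^*[m]$$

$$\phi_{xy}[-m] = \phi_{yx}^*[m], \qquad \gamma_{xy}[-m] = \gamma_{yx}^*[m]$$

• Property 4:

$$|\phi_{xy}[m]|^2 \le \phi_{xx}[0]\, \phi_{yy}[0]$$

$$|\gamma_{xy}[m]|^2 \le \gamma_{xx}[0]\, \gamma_{yy}[0]$$

$$|\phi_{xx}[m]| \le \phi_{xx}[0]$$

$$|\gamma_{xx}[m]| \le \gamma_{xx}[0]$$

• Property 5:

– If $y_n = x_{n-n_0}$, then

$$\phi_{yy}[m] = \phi_{xx}[m]$$

$$\gamma_{yy}[m] = \gamma_{xx}[m]$$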

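Fourier Transform Representation of Random Signals

• Since autocorrelation and autocovariance sequences are all (aperiodic) one-dimensional sequences, their Fourier transforms exist and are bounded for $|\omega| \le \pi$.

• Let the Fourier transforms (DTFT) of the autocorrelation and autocovariance sequences be

$$\phi_{xx}[m] \longleftrightarrow \Phi_{xx}(e^{j\omega}), \qquad \phi_{xy}[m] \longleftrightarrow \Phi_{xy}(e^{j\omega})$$

$$\gamma_{xx}[m] \longleftrightarrow \Gamma_{xx}(e^{j\omega}), \qquad \gamma_{xy}[m] \longleftrightarrow \Gamma_{xy}(e^{j\omega})$$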

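Fourier Transform Representation of Random Signals (continued)

• Consider the inverse Fourier transforms:

$$\gamma_{xx}[n] = \frac{1}{2\pi} \int_{-\pi}^{\pi} \Gamma_{xx}(e^{j\omega})\, e^{j\omega n}\, d\omega$$

$$\phi_{xx}[n] = \frac{1}{2\pi} \int_{-\pi}^{\pi} \Phi_{xx}(e^{j\omega})\, e^{j\omega n}\, d\omega$$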

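Fourier Transform Representation of Random Signals (continued)

• Consequently,

$$\mathcal{E}\{|x[n]|^2\} = \phi_{xx}[0] = \frac{1}{2\pi} \int_{-\pi}^{\pi} \Phi_{xx}(e^{j\omega})\, d\omega$$

$$\sigma_x^2 = \gamma_{xx}[0] = \frac{1}{2\pi} \int_{-\pi}^{\pi} \Gamma_{xx}(e^{j\omega})\, d\omega$$

• Denote $P_{xx}(\omega) = \Phi_{xx}(e^{j\omega})$ to be the power density spectrum (or power spectrum) of the random process x.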

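Power Density Spectrum
(using Fourier transform to represent a random signal)

$$\phi_{xx}[0] = \mathcal{E}\{x[n]\, x^*[n]\} = \mathcal{E}\{|x[n]|^2\} = \frac{1}{2\pi} \int_{-\pi}^{\pi} P_{xx}(\omega)\, d\omega$$

• The total area under the power density in $[-\pi, \pi]$ is proportional to the total average power of the signal.

• $P_{xx}(\omega)$ is always real-valued since $\phi_{xx}[n]$ is conjugate symmetric.

• For real-valued random processes, $P_{xx}(\omega) = \Phi_{xx}(e^{j\omega})$ is both real and even.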

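Mean and Linear System

• Consider a linear time-invariant system with impulse response h[n]. If x[n] is a stationary random signal with mean $m_x$, then the output y[n] is also a stationary random signal, with mean $m_y$ given by

$$m_y[n] = \mathcal{E}\{y[n]\} = \sum_{k=-\infty}^{\infty} h[k]\, \mathcal{E}\{x[n-k]\} = \sum_{k=-\infty}^{\infty} h[k]\, m_x[n-k]$$

• Since the input is stationary, $m_x[n-k] = m_x$, and consequently,

$$m_y = m_x \sum_{k=-\infty}^{\infty} h[k] = H(e^{j0})\, m_x$$

where $H(e^{j0})$ is the DC response of the system.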
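
The relation between input and output means can be illustrated numerically. The filter coefficients, input mean, and record length below are arbitrary values chosen for this sketch, and the time average of one long record is used in place of the ensemble mean (an ergodicity assumption).

```python
import numpy as np

rng = np.random.default_rng(3)
m_x = 3.0
h = np.array([0.5, 0.3, 0.2, -0.1])          # example FIR impulse response
x = m_x + rng.standard_normal(100_000)       # stationary input with mean m_x

# Keep only outputs computed from fully overlapping input samples
y = np.convolve(x, h, mode="valid")

m_y_pred = h.sum() * m_x                     # H(e^{j0}) m_x, with H(e^{j0}) = sum_k h[k]
m_y_est = y.mean()                           # time-average estimate of the output mean
```

Here the DC gain is 0.9, so the predicted output mean is 2.7, and the estimate should be close to that value.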

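Stationary and Linear System

• If x[n] is a real and stationary random signal, the autocorrelation function of the output process is

$$\begin{aligned}
\phi_{yy}[n, n+m] &= \mathcal{E}\{y[n]\, y[n+m]\} \\
&= \mathcal{E}\left\{ \sum_{k=-\infty}^{\infty} \sum_{r=-\infty}^{\infty} h[k]\, h[r]\, x[n-k]\, x[n+m-r] \right\} \\
&= \sum_{k=-\infty}^{\infty} h[k] \sum_{r=-\infty}^{\infty} h[r]\, \mathcal{E}\{x[n-k]\, x[n+m-r]\}
\end{aligned}$$

• Since x[n] is stationary, $\mathcal{E}\{x[n-k]\, x[n+m-r]\}$ depends only on the time difference m + k − r.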

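Stationary and Linear System (continued)

• Therefore,

$$\phi_{yy}[n, n+m] = \sum_{k=-\infty}^{\infty} h[k] \sum_{r=-\infty}^{\infty} h[r]\, \phi_{xx}[m+k-r] = \phi_{yy}[m]$$

That is, the output autocorrelation also depends only on the time difference m.

• Generally, for an LTI system having a wide-sense stationary input, the output is also wide-sense stationary.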

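Power Density Spectrum and Linear System

• By substituting l = r − k,

$$\phi_{yy}[m] = \sum_{l=-\infty}^{\infty} \phi_{xx}[m-l] \sum_{k=-\infty}^{\infty} h[k]\, h[l+k] = \sum_{l=-\infty}^{\infty} \phi_{xx}[m-l]\, c_{hh}[l]$$

where

$$c_{hh}[l] = \sum_{k=-\infty}^{\infty} h[k]\, h[l+k]$$

• A sequence of the form of $c_{hh}[l]$ is called a deterministic autocorrelation sequence.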

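Power Density Spectrum and Linear System (continued)

• Taking the Fourier transform of both sides of $\phi_{yy}[m] = \sum_l \phi_{xx}[m-l]\, c_{hh}[l]$ gives

$$\Phi_{yy}(e^{j\omega}) = C_{hh}(e^{j\omega})\, \Phi_{xx}(e^{j\omega})$$

where $C_{hh}(e^{j\omega})$ is the Fourier transform of $c_{hh}[l]$.

• For real h,

$$c_{hh}[l] = h[l] * h[-l], \qquad C_{hh}(e^{j\omega}) = H(e^{j\omega})\, H^*(e^{j\omega})$$

• Thus

$$C_{hh}(e^{j\omega}) = |H(e^{j\omega})|^2$$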
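
The relation between the deterministic autocorrelation and the squared magnitude response can be checked numerically. The sketch below uses an arbitrary real FIR filter and evaluates both DTFTs directly on a frequency grid; all names and values are illustrative assumptions.

```python
import numpy as np

h = np.array([1.0, -0.5, 0.25, 0.1])     # example real impulse response
P = h.size

# c_hh[l] = sum_k h[k] h[l+k], for lags l = -(P-1) .. (P-1)
c_hh = np.correlate(h, h, mode="full")
lags = np.arange(-(P - 1), P)

w = np.linspace(-np.pi, np.pi, 257)      # frequency grid
# DTFT of c_hh and of h, evaluated directly from their definitions
C_hh = np.exp(-1j * np.outer(w, lags)) @ c_hh
H = np.exp(-1j * np.outer(w, np.arange(P))) @ h
```

On this grid `C_hh` should be real (up to rounding) and equal to `np.abs(H)**2`, and the zero-lag value `c_hh[P-1]` equals the filter energy `np.sum(h**2)`.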

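Power Density Spectrum and Linear System (continued)

• The input and output power spectra are related as follows:

$$\Phi_{yy}(e^{j\omega}) = |H(e^{j\omega})|^2\, \Phi_{xx}(e^{j\omega})$$

$$\mathcal{E}\{|x[n]|^2\} = \phi_{xx}[0] = \frac{1}{2\pi} \int_{-\pi}^{\pi} \Phi_{xx}(e^{j\omega})\, d\omega = \text{total average power of the input}$$

$$\mathcal{E}\{|y[n]|^2\} = \phi_{yy}[0] = \frac{1}{2\pi} \int_{-\pi}^{\pi} |H(e^{j\omega})|^2\, \Phi_{xx}(e^{j\omega})\, d\omega = \text{total average power of the output}$$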

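Power Density Property

• Key property: The area over a band of frequencies, $\omega_a < |\omega| < \omega_b$, is proportional to the power in the signal in that band.

• To show this, consider an ideal band-pass filter. Let $H(e^{j\omega})$ be the frequency response of the ideal band-pass filter for the band $\omega_a < |\omega| < \omega_b$.

• Note that $|H(e^{j\omega})|^2$ and $\Phi_{xx}(e^{j\omega})$ are both even functions. Hence, after ideal band-pass filtering,

$$\begin{aligned}
\phi_{yy}[0] &= \text{average power in output} \\
&= \frac{1}{2\pi} \int_{-\omega_b}^{-\omega_a} |H(e^{j\omega})|^2\, \Phi_{xx}(e^{j\omega})\, d\omega + \frac{1}{2\pi} \int_{\omega_a}^{\omega_b} |H(e^{j\omega})|^2\, \Phi_{xx}(e^{j\omega})\, d\omega
\end{aligned}$$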

White Noise (or White Gaussian Noise)

• A white noise signal is a signal for which

$$\phi_{xx}[m] = \sigma_x^2\, \delta[m]$$

– Hence, its samples at different instants of time are uncorrelated.

• The power spectrum of a white noise signal is a constant

$$\Phi_{xx}(e^{j\omega}) = \sigma_x^2$$

• The concept of white noise is very useful in quantization error analysis.

White Noise (continued)

• The average power of a white noise signal is therefore

$$\phi_{xx}[0] = \frac{1}{2\pi} \int_{-\pi}^{\pi} \Phi_{xx}(e^{j\omega})\, d\omega = \frac{1}{2\pi} \int_{-\pi}^{\pi} \sigma_x^2\, d\omega = \sigma_x^2$$

• White noise is also useful in the representation of random signals whose power spectra are not constant with frequency.

– A random signal y[n] with power spectrum $\Phi_{yy}(e^{j\omega})$ can be assumed to be the output of a linear time-invariant system with a white-noise input.

$$\Phi_{yy}(e^{j\omega}) = |H(e^{j\omega})|^2\, \sigma_x^2$$
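
As an illustration of shaping white noise with a filter, the sketch below compares three values of the output average power: the frequency-domain average of $|H(e^{j\omega})|^2 \sigma_x^2$ on a uniform grid, the time-domain value $\sigma_x^2 \sum_k h^2[k]$, and a measurement from simulated data. The filter, variance, grid size, and record length are arbitrary choices for this example, and Gaussian samples are used only for convenience.

```python
import numpy as np

rng = np.random.default_rng(1)
sigma2 = 2.0                                  # white-noise variance
h = np.array([0.5, 0.3, 0.2, -0.1])           # example FIR impulse response

x = rng.normal(scale=np.sqrt(sigma2), size=200_000)
y = np.convolve(x, h, mode="valid")           # filtered noise (fully overlapped part)

K = 64                                        # number of frequency samples
H = np.fft.fft(h, K)                          # H(e^{jw}) on a uniform grid
# (1/2pi) * integral of |H|^2 sigma^2, approximated by the grid average
power_freq = np.mean(np.abs(H) ** 2 * sigma2)
power_time = sigma2 * np.sum(h ** 2)          # sigma^2 * c_hh[0]
power_measured = np.mean(y ** 2)              # time-average estimate of phi_yy[0]
```

For this filter $\sum_k h^2[k] = 0.39$, so the first two values are 0.78 and the measured power should be close to it.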

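Cross-correlation

• The cross-correlation between the input and output of an LTI system:

$$\begin{aligned}
\phi_{xy}[m] &= \mathcal{E}\{x[n]\, y[n+m]\} \\
&= \mathcal{E}\left\{ x[n] \sum_{k=-\infty}^{\infty} h[k]\, x[n+m-k] \right\} \\
&= \sum_{k=-\infty}^{\infty} h[k]\, \phi_{xx}[m-k]
\end{aligned}$$

• That is, the cross-correlation between the input and the output is the convolution of the impulse response with the input autocorrelation sequence.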

Cross-correlation (continued)

• By further taking the Fourier transform on both sides of the above equation, we have

$$\Phi_{xy}(e^{j\omega}) = H(e^{j\omega})\, \Phi_{xx}(e^{j\omega})$$

• This result has a useful application when the input is white noise with variance $\sigma_x^2$.

$$\phi_{xy}[m] = \sigma_x^2\, h[m], \qquad \Phi_{xy}(e^{j\omega}) = \sigma_x^2\, H(e^{j\omega})$$

– These equations serve as the basis for estimating the impulse or frequency response of an LTI system if it is possible to observe the output of the system in response to a white-noise input.
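
This estimation idea can be sketched as follows, assuming a real white-noise input and a causal FIR system. The "unknown" response `h_true`, the record length, and the number of lags are illustrative values, and the expectations are replaced by time averages over one long record.

```python
import numpy as np

rng = np.random.default_rng(2)
sigma2 = 1.5
h_true = np.array([1.0, 0.6, -0.3, 0.1])      # system to be identified (example)
L = 200_000

x = rng.normal(scale=np.sqrt(sigma2), size=L) # white-noise input
y = np.convolve(x, h_true)[:L]                # y[n] = sum_k h[k] x[n-k]

max_lag = 6
# phi_xy[m] = E{x[n] y[n+m]}, estimated by a time average for m = 0..max_lag-1
phi_xy = np.array([np.mean(x[:L - m] * y[m:]) for m in range(max_lag)])
# Divide by the estimated input power to recover h[m] = phi_xy[m] / sigma_x^2
h_est = phi_xy / np.mean(x ** 2)
```

The first four entries of `h_est` should approximate `h_true`, and the remaining entries should be near zero.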