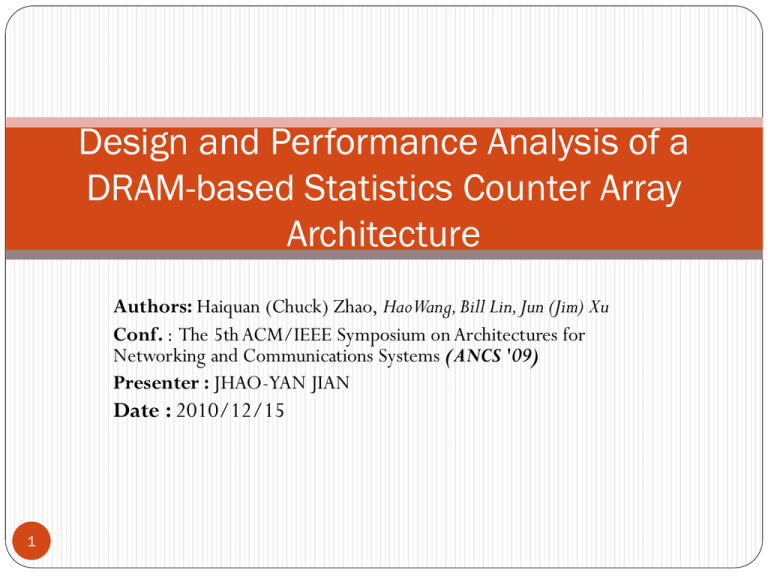

Design and Performance Analysis of a DRAM


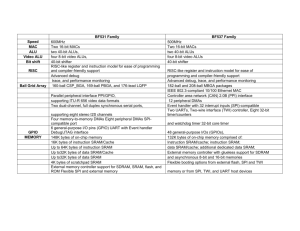

Design and Performance Analysis of a DRAM-based Statistics Counter Array Architecture

Authors: Haiquan (Chuck) Zhao, Hao Wang, Bill Lin, Jun (Jim) Xu
Conf.: The 5th ACM/IEEE Symposium on Architectures for Networking and Communications Systems (ANCS '09)
Presenter: JHAO-YAN JIAN
Date: 2010/12/15

Outline

- Introduction
- Scheme
- Analysis
- Evaluation

Network Measurement

- Traffic statistics are widely used in router management, traffic engineering, network anomaly detection, etc.
- Fine-grained network measurement
  - Possibly tens of millions of flows (and counters)
- Wirespeed statistics counting
  - 8 ns update time at 40 Gb/s (OC-768)

Naive Implementations

- SRAM is fast, but too expensive
  - e.g., 10 million counters × 64 bits = 80 MB, prohibitively expensive (infeasible on-chip)
- DRAM is cheap, but a naive implementation is too slow
  - e.g., with the typically quoted 50 ns DRAM random access time, one update (read, increment, then write) takes 2 × 50 ns = 100 ns, well above the 8 ns required for wirespeed updates

Hybrid SRAM/DRAM Architectures (1/2)

- Based on the premise that DRAM is too slow, hybrid SRAM/DRAM architectures have been proposed
  - e.g., Shah '02 [25], Ramabhadran '03 [21], Roeder '04 [23], Zhao '06 [31]
- All are based on the following idea:
  - Store the full counters (64 bits) in DRAM
  - Keep, say, a 5-bit SRAM counter, one per flow
  - Perform wirespeed increments on the 5-bit SRAM counters
  - "Flush" SRAM counters to DRAM before they overflow
  - Once flushed, an SRAM counter won't overflow again for at least another 2^5 = 32 cycles (2^b in general)

Hybrid SRAM/DRAM Architectures (2/2)

- The 10 to 57 MB of SRAM needed far exceeds the available on-chip SRAM
  - On-chip SRAM is also needed for other network processing
- The amount of SRAM depends on how often SRAM counters have to be flushed
  - If arbitrary increments are allowed (e.g., byte counting), more SRAM is needed
- Integer-specific; no decrements

Basic Architecture: Randomized Scheme

- Proposed by Lin & Xu at HotMetrics '08.
- Counters are randomly distributed across B memory banks.
- B > 1/µ, where µ is the SRAM-to-DRAM access latency ratio.
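As a rough illustration of the randomized scheme, the sketch below spreads counter indices across B banks using a secret key. It is a minimal sketch under stated assumptions, not the authors' design: the slides describe a pseudorandom permutation, whereas this example substitutes a keyed BLAKE2b hash from Python's standard library, and the names `bank_of` and `bank_loads` are hypothetical.

```python
import hashlib
from collections import Counter


def bank_of(counter_index: int, key: bytes, num_banks: int) -> int:
    """Map a counter index to a bank in [0, num_banks).

    Assumption: a keyed hash stands in for the secret pseudorandom
    permutation; without the key, an outsider cannot predict the bank.
    """
    digest = hashlib.blake2b(
        counter_index.to_bytes(8, "little"), digest_size=8, key=key
    ).digest()
    return int.from_bytes(digest, "little") % num_banks


def bank_loads(num_counters: int, key: bytes, num_banks: int) -> Counter:
    """Count how many of the counters 0..num_counters-1 land in each bank."""
    return Counter(bank_of(i, key, num_banks) for i in range(num_counters))


if __name__ == "__main__":
    key = b"example-secret-key"  # hypothetical key, for illustration only
    loads = bank_loads(100_000, key, num_banks=32)
    print("lightest bank:", min(loads.values()))
    print("heaviest bank:", max(loads.values()))
```

Running it prints the lightest and heaviest bank loads, which gives a quick feel for how evenly a keyed mapping spreads distinct counters. Repeated updates to one counter still hit a single bank, which is the case the adversarial analysis addresses.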
Adversarial Access Patterns

- Even though a random address permutation is applied, the memory loads on the DRAM banks may not be balanced.
- The counter index permutation in our scheme makes it difficult for an adversary to purposely trigger a large number of consecutive updates to the same memory bank using distinct counters, since the pseudorandom permutation function (or the key it uses) is not known to the outside world.
- An adversary can only try to trigger consecutive updates to the same counter, which result in consecutive accesses to the same memory bank.

Extended Architecture to Handle Adversaries

- A fixed pipelined delay module absorbs repeated updates to the same memory location.
- It is implemented as a fully associative cache with a FIFO replacement policy.

Worst Case Request Pattern

- q + r requests for distinct counters a_1, ..., a_{q+r}
- q requests repeat T times each
- r requests repeat T − 1 times each

A Few Definitions (1/2)

- E.g., (1, 1, 1) ≤_M (2, 1, 0) ≤_M (3, 0, 0).

A Few Definitions (2/2)

A Useful Theorem

- The following theorem relates majorization, exchangeable random variables, and convex order.

Chernoff Bound (1/2)

- We want to bound the probability that a request queue overflows within n cycles.
- X_{s,t} is the number of updates to the bank during cycles [s, t], τ = t − s, and K is the length of the request queue.
- For the total overflow probability bound, multiply by B.

Chernoff Bound (2/2)

- m_i, 1 ≤ i ≤ n: the number of appearances of the i-th address
- m*: the worst-case counter update sequence
- X_i, 1 ≤ i ≤ n: the indicator random variable for whether the i-th address is mapped to the DRAM bank

Overflow Probability

- Overflow probability for 16 million counters, µ = 1/16, B = 32.

Memory Usage Comparison
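To make the majorization order used in the definitions concrete, the sketch below checks whether one vector is majorized by another. It relies on the standard definition: x ≤_M y when both vectors have the same total and, after sorting each in decreasing order, every prefix sum of x is at most the matching prefix sum of y. The function name `majorized_by` is hypothetical and does not come from the slides.

```python
from itertools import accumulate


def majorized_by(x, y) -> bool:
    """Return True if x ≤_M y, i.e. y majorizes x."""
    if len(x) != len(y) or sum(x) != sum(y):
        return False
    # Compare prefix sums of the two vectors sorted in decreasing order.
    prefix_x = accumulate(sorted(x, reverse=True))
    prefix_y = accumulate(sorted(y, reverse=True))
    return all(a <= b for a, b in zip(prefix_x, prefix_y))


if __name__ == "__main__":
    # The chain from the definitions: (1, 1, 1) ≤_M (2, 1, 0) ≤_M (3, 0, 0)
    print(majorized_by((1, 1, 1), (2, 1, 0)))
    print(majorized_by((2, 1, 0), (3, 0, 0)))
    print(majorized_by((3, 0, 0), (1, 1, 1)))
```

Read with the repeat counts m_i in mind, a more concentrated count vector (fewer distinct addresses, each repeated more often) sits higher in this order, which is why the analysis can focus on a single worst-case request pattern.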