Week 5


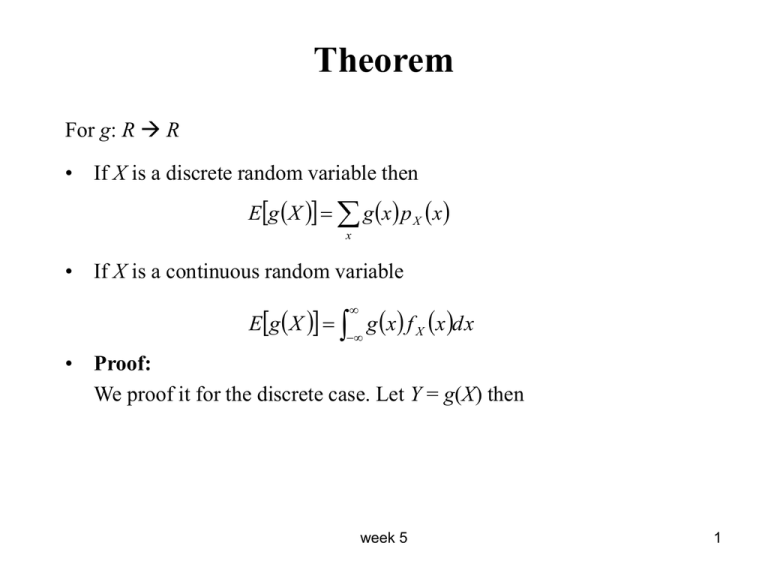

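Theorem

For $g: \mathbb{R} \to \mathbb{R}$,
• If $X$ is a discrete random variable, then $E[g(X)] = \sum_x g(x)\, p_X(x)$.
• If $X$ is a continuous random variable, then $E[g(X)] = \int_{-\infty}^{\infty} g(x)\, f_X(x)\, dx$.

Proof: We prove it for the discrete case. Let $Y = g(X)$; then
$$E(Y) = \sum_y y\, P(Y = y) = \sum_y y \sum_{x:\, g(x) = y} p_X(x) = \sum_y \sum_{x:\, g(x) = y} g(x)\, p_X(x) = \sum_x g(x)\, p_X(x).$$

Example to illustrate steps in proof

• Suppose $Y = X^2$, i.e. $g(x) = x^2$, and the possible values of $X$ are $1, 2, 3$, so the possible values of $Y$ are $1, 4, 9$; then
$$E(Y) = 1 \cdot P(Y = 1) + 4 \cdot P(Y = 4) + 9 \cdot P(Y = 9) = 1^2\, p_X(1) + 2^2\, p_X(2) + 3^2\, p_X(3) = \sum_x g(x)\, p_X(x).$$

Examples

1. Suppose $X \sim \text{Uniform}(0, 1)$. Let $Y = X^2$; then $E(Y) = \int_0^1 x^2\, dx = \frac{1}{3}$.
2. Suppose $X \sim \text{Poisson}(\lambda)$. Let $Y = e^{X}$; then $E(Y) = \sum_{x=0}^{\infty} e^{x}\, \frac{e^{-\lambda}\lambda^{x}}{x!} = e^{-\lambda} \sum_{x=0}^{\infty} \frac{(\lambda e)^{x}}{x!} = e^{\lambda(e - 1)}$.

Properties of Expectation

For random variables $X, Y$ and constants $a, b \in \mathbb{R}$:
• $E(aX + b) = aE(X) + b$.
  Proof (continuous case): $E(aX + b) = \int_{-\infty}^{\infty} (ax + b)\, f_X(x)\, dx = a \int_{-\infty}^{\infty} x\, f_X(x)\, dx + b \int_{-\infty}^{\infty} f_X(x)\, dx = aE(X) + b$.
• $E(aX + bY) = aE(X) + bE(Y)$. Proof to come…
• If $X$ is a non-negative random variable, then $E(X) = 0$ if and only if $X = 0$ with probability 1.
• If $X$ is a non-negative random variable, then $E(X) \ge 0$.
• $E(a) = a$.

Moments

• The $k$th moment of a distribution is $E(X^k)$. We are usually interested in the 1st and 2nd moments (sometimes in the 3rd and 4th).
• Some second moments:
  1. Suppose $X \sim \text{Uniform}(0, 1)$; then $E(X^2) = \int_0^1 x^2\, dx = \frac{1}{3}$.
  2. Suppose $X \sim \text{Geometric}(p)$ with $q = 1 - p$; then $E(X^2) = \sum_{x=1}^{\infty} x^2\, p\, q^{x-1}$.

Variance

• The expected value $E(X)$ of a random variable is a measure of the "center" of its distribution.
• The variance measures how closely the probability is concentrated around the center $\mu = E(X)$. It is also called the 2nd central moment.
• Definition: The variance of a random variable $X$ is $\text{Var}(X) = E\big[(X - E(X))^2\big]$.
• Claim: $\text{Var}(X) = E(X^2) - [E(X)]^2$.
  Proof: Write $\mu = E(X)$. By linearity of expectation, $E\big[(X - \mu)^2\big] = E(X^2) - 2\mu E(X) + \mu^2 = E(X^2) - \mu^2$.
• We can use the formula in the claim for convenience of calculation.
• The standard deviation of a random variable $X$, denoted $\sigma_X$, is the square root of the variance, i.e. $\sigma_X = \sqrt{\text{Var}(X)}$.

Properties of Variance

For random variables $X, Y$ and constants $a, b \in \mathbb{R}$:
• $\text{Var}(aX + b) = a^2\, \text{Var}(X)$.
  Proof: $\text{Var}(aX + b) = E\big[(aX + b - aE(X) - b)^2\big] = a^2\, E\big[(X - E(X))^2\big] = a^2\, \text{Var}(X)$.
• $\text{Var}(aX + bY) = a^2\, \text{Var}(X) + b^2\, \text{Var}(Y) + 2ab\, E\big[(X - E(X))(Y - E(Y))\big]$.
  Proof: $\text{Var}(aX + bY) = E\big[\big(a(X - E(X)) + b(Y - E(Y))\big)^2\big]$; expand the square and use linearity of expectation.
• $\text{Var}(X) \ge 0$.
• $\text{Var}(X) = 0$ if and only if $X = E(X)$ with probability 1.
• $\text{Var}(a) = 0$.

Examples

1. Suppose $X \sim \text{Uniform}(0, 1)$; then $E(X) = \frac{1}{2}$ and $E(X^2) = \frac{1}{3}$, therefore $\text{Var}(X) = \frac{1}{3} - \left(\frac{1}{2}\right)^2 = \frac{1}{12}$.
2. Suppose $X \sim \text{Geometric}(p)$; then $E(X) = \frac{1}{p}$ and $E(X^2) = \frac{1 + q}{p^2}$, therefore $\text{Var}(X) = \frac{1 + q}{p^2} - \frac{1}{p^2} = \frac{q}{p^2} = \frac{1 - p}{p^2}$.
3. Suppose $X \sim \text{Bernoulli}(p)$; then $E(X) = p$ and $E(X^2) = 1^2 \cdot p + 0^2 \cdot q = p$, therefore $\text{Var}(X) = p - p^2 = p(1 - p)$.

Example

• Suppose $X \sim \text{Uniform}(2, 4)$. Let $Y = X^2$. Find $P(Y \le 9)$.
• What if $X \sim \text{Uniform}(-4, 4)$?

Functions of Random Variables

• In some cases we would like to find the distribution of $Y = h(X)$ when the distribution of $X$ is known.
• Discrete case:
$$p_Y(y) = P(Y = y) = P(h(X) = y) = P\big(X \in h^{-1}(y)\big) = \sum_{x \in h^{-1}(y)} p_X(x), \quad \text{where } h^{-1}(y) = \{x : h(x) = y\}.$$
• Examples
  1. Let $Y = aX + b$, $a \neq 0$. Then $p_Y(y) = P(aX + b = y) = P\left(X = \frac{y - b}{a}\right)$.
  2. Let $Y = X^2$. Then
$$p_Y(y) = P(X^2 = y) = \begin{cases} P(X = \sqrt{y}) + P(X = -\sqrt{y}) & \text{if } y > 0, \\ P(X = 0) & \text{if } y = 0, \\ 0 & \text{if } y < 0. \end{cases}$$

Continuous case – Examples

1. Suppose $X \sim \text{Uniform}(0, 1)$. Let $Y = X^2$; then the cdf of $Y$ can be found as follows: for $0 \le y \le 1$ (using $X \ge 0$),
$$F_Y(y) = P(Y \le y) = P(X^2 \le y) = P(X \le \sqrt{y}) = F_X(\sqrt{y}).$$
The density of $Y$ is then given by $f_Y(y) = \frac{d}{dy} F_X(\sqrt{y}) = f_X(\sqrt{y}) \cdot \frac{1}{2\sqrt{y}} = \frac{1}{2\sqrt{y}}$ for $0 < y < 1$, and 0 elsewhere.
2. Let $X$ have the exponential distribution with parameter $\lambda$. Find the density of $Y = \frac{1}{X + 1}$.
3. Suppose $X$ is a random variable with density
$$f_X(x) = \begin{cases} \frac{x + 1}{2}, & -1 \le x \le 1, \\ 0, & \text{elsewhere.} \end{cases}$$
Check that this is a valid density and find the density of $Y = X^2$.

Question

• Can we formulate a general rule for densities so that we don't have to work through the cdf each time?
• Answer: sometimes… Suppose $Y = h(X)$; then (for increasing $h$)
$$F_Y(y) = P(h(X) \le y) = P\big(X \le h^{-1}(y)\big) \quad \text{and} \quad f_Y(y) = \frac{d}{dy} F_X\big(h^{-1}(y)\big),$$
but we need $h$ to be monotone on the region where the density of $X$ is non-zero.
• Check with previous examples:
  1. $X \sim \text{Uniform}(0, 1)$ and $Y = X^2$.
  2. $X \sim \text{Exponential}(\lambda)$ and $Y = h(X) = \frac{1}{X + 1}$.
  3. $X$ has density $f_X(x) = \frac{x + 1}{2}$ for $-1 \le x \le 1$ (0 elsewhere) and $Y = X^2$.

Theorem

If $X$ is a continuous random variable with density $f_X(x)$ and $h$ is a strictly increasing, differentiable function from $\mathbb{R}$ to $\mathbb{R}$, then $Y = h(X)$ has density
$$f_Y(y) = f_X\big(h^{-1}(y)\big)\, \frac{d}{dy} h^{-1}(y) \quad \text{for } y \in \mathbb{R}.$$

Proof: $F_Y(y) = P(h(X) \le y) = P\big(X \le h^{-1}(y)\big) = F_X\big(h^{-1}(y)\big)$; differentiating with the chain rule gives the result.

Theorem

If $X$ is a continuous random variable with density $f_X(x)$ and $h$ is a strictly decreasing, differentiable function from $\mathbb{R}$ to $\mathbb{R}$, then $Y = h(X)$ has density
$$f_Y(y) = -f_X\big(h^{-1}(y)\big)\, \frac{d}{dy} h^{-1}(y) \quad \text{for } y \in \mathbb{R}.$$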
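The increasing and decreasing theorems differ only in the sign of $\frac{d}{dy}h^{-1}(y)$, so a numerical check can cover both by taking its absolute value. The Python sketch below is an illustration, not part of the original notes: it builds $f_Y$ for two of the earlier examples ($Y = X^2$ with $X \sim \text{Uniform}(0, 1)$, and $Y = 1/(X + 1)$ with $X \sim \text{Exponential}(\lambda)$), integrates it with a midpoint rule, and compares the result with the exact probability and with a Monte Carlo simulation of $Y$. It assumes $\lambda$ is a rate (density $\lambda e^{-\lambda x}$) and picks $\lambda = 2$; the helper names `density_of_monotone_transform`, `integrate` and `simulate_prob` are hypothetical. The last line also checks that $E(X^2)$ computed from $g(x) f_X(x)$ agrees with $\int y\, f_Y(y)\, dy$.

```python
import math
import random


def density_of_monotone_transform(f_x, h_inv, dh_inv):
    """Density of Y = h(X) for strictly monotone, differentiable h.

    Uses f_Y(y) = f_X(h^{-1}(y)) * |d/dy h^{-1}(y)|; the absolute value
    covers both the increasing and the decreasing case.
    """
    return lambda y: f_x(h_inv(y)) * abs(dh_inv(y))


def integrate(f, a, b, n=100_000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    width = (b - a) / n
    return width * sum(f(a + (i + 0.5) * width) for i in range(n))


def simulate_prob(sample_y, a, b, n=200_000, seed=0):
    """Monte Carlo estimate of P(a < Y <= b) from n simulated values of Y."""
    rng = random.Random(seed)
    return sum(a < sample_y(rng) <= b for _ in range(n)) / n


# Example 1 (h increasing on (0, 1)): X ~ Uniform(0, 1), Y = X^2, h^{-1}(y) = sqrt(y).
def f_unif(x):
    return 1.0 if 0 < x < 1 else 0.0


f_y1 = density_of_monotone_transform(f_unif, math.sqrt, lambda y: 1 / (2 * math.sqrt(y)))

# Example 2 (h decreasing on (0, inf)): X ~ Exponential with rate lam (assumed
# parameterisation), Y = 1/(X + 1), h^{-1}(y) = 1/y - 1 for 0 < y < 1.
lam = 2.0


def f_exp(x):
    return lam * math.exp(-lam * x) if x > 0 else 0.0


f_y2 = density_of_monotone_transform(f_exp, lambda y: 1 / y - 1, lambda y: -1 / y**2)

if __name__ == "__main__":
    # P(0.25 < Y <= 0.81); exact value sqrt(0.81) - sqrt(0.25) = 0.4
    print(integrate(f_y1, 0.25, 0.81), simulate_prob(lambda r: r.random() ** 2, 0.25, 0.81))
    # P(0.2 < Y <= 0.5) = P(1 <= X < 4); exact value exp(-lam) - exp(-4 * lam)
    print(integrate(f_y2, 0.2, 0.5), math.exp(-lam) - math.exp(-4 * lam),
          simulate_prob(lambda r: 1 / (r.expovariate(lam) + 1), 0.2, 0.5))
    # E(Y) two ways: integral of g(x) f_X(x) versus integral of y f_Y(y); both are 1/3
    print(integrate(lambda x: x**2 * f_unif(x), 0, 1), integrate(lambda y: y * f_y1(y), 0, 1))
```

Working with probabilities of intervals rather than comparing densities point by point keeps the check robust: a wrong sign or a missing derivative factor shows up immediately as a mismatch with the exact value.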
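Proof: $F_Y(y) = P(h(X) \le y) = P\big(X \ge h^{-1}(y)\big) = 1 - F_X\big(h^{-1}(y)\big)$; differentiating with the chain rule gives the result. The minus sign keeps $f_Y(y) \ge 0$, because $h^{-1}$ is decreasing and so $\frac{d}{dy} h^{-1}(y) \le 0$.

Summary

• If $Y = h(X)$ and $h$ is monotone, then
$$f_Y(y) = f_X\big(h^{-1}(y)\big) \left| \frac{d}{dy} h^{-1}(y) \right|.$$
• Example: $X$ has density
$$f_X(x) = \begin{cases} \frac{x^3}{4}, & 0 < x < 2, \\ 0, & \text{otherwise.} \end{cases}$$
Let $Y = X^6$. Compute the density of $Y$.

Indicator Functions and Random Variables

• Definition: Let $A$ be a set of real numbers. The indicator function for $A$ is defined by
$$I_A(x) = I(x \in A) = \begin{cases} 1 & \text{if } x \in A, \\ 0 & \text{if } x \notin A. \end{cases}$$
• Some properties of indicator functions:
$$I_A(x)\, I_B(x) = I_{A \cap B}(x), \qquad g(x)\, I_A(x) = \begin{cases} g(x) & \text{for } x \in A, \\ 0 & \text{for } x \notin A. \end{cases}$$
• The support of a discrete random variable $X$ is the set of values $x$ for which $P(X = x) > 0$.
• The support of a continuous random variable $X$ with density $f_X(x)$ is the set of values $x$ for which $f_X(x) > 0$.

Examples

• A discrete random variable with pmf
$$p_X(x) = \begin{cases} \frac{x}{6}, & x = 1, 2, 3, \\ 0, & \text{otherwise,} \end{cases}$$
can be written as $p_X(x) = \frac{x}{6}\, I\big(x \in \{1, 2, 3\}\big) = \frac{x}{6}\, I_{\{1, 2, 3\}}(x)$.
• A continuous random variable with density function
$$f_X(x) = \begin{cases} e^{-x}, & x > 0, \\ 0, & \text{otherwise,} \end{cases}$$
can be written as $f_X(x) = e^{-x}\, I_{(0, \infty)}(x)$.

Important Indicator Random Variable

• If $A$ is an event, then $I_A$ is a random variable that is 0 if $A$ does not occur and 1 if it does. $I_A$ is an indicator random variable; it is also called a Bernoulli random variable.
• If we perform a random experiment repeatedly and each time measure the random variable $I_A$, we could get $1, 1, 0, 0, 0, 0, 1, 0, \dots$ In the long run the average of this list is $E(I_A)$; it is the proportion of trials in which $A$ occurs, which in the long run is $P(A)$. That is, $P(A) = E(I_A)$.
• Example: for a Bernoulli random variable $X = I_A$ with $P(A) = p$ we have $E(X) = E(I_A) = 1 \cdot P(A) + 0 \cdot P(A^c) = P(A) = p$.

Use of Indicator Random Variables

• Suppose $X \sim \text{Binomial}(n, p)$. Let $Y_1, \dots, Y_n$ be independent Bernoulli random variables with probability of success $p$. Then $X$ can be thought of as $X = \sum_{i=1}^{n} Y_i$, so
$$E(X) = E\left(\sum_{i=1}^{n} Y_i\right) = \sum_{i=1}^{n} E(Y_i) = \sum_{i=1}^{n} p = np.$$
• A similar trick works for the Negative Binomial. Suppose $X \sim \text{Negative Binomial}(r, p)$. Let
  $X_1$ be the number of trials until the 1st success,
  $X_2$ be the number of trials between the 1st and 2nd success,
  ⋮
  $X_r$ be the number of trials between the $(r - 1)$th and $r$th success.
Then $X = \sum_{i=1}^{r} X_i$, and since each $X_i \sim \text{Geometric}(p)$ has mean $\frac{1}{p}$,
$$E(X) = E\left(\sum_{i=1}^{r} X_i\right) = \sum_{i=1}^{r} E(X_i) = \sum_{i=1}^{r} \frac{1}{p} = \frac{r}{p}.$$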
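The indicator and waiting-time decompositions above can be checked by simulation. The Python sketch below is illustrative only (the function names are hypothetical, not from the notes): it builds a Binomial(n, p) variable as a sum of independent Bernoulli indicators and a Negative Binomial(r, p) variable as a sum of r Geometric(p) trial counts, then compares long-run averages with $P(A) = p$, $np$ and $r/p$. It uses only the standard library's `random.Random`; the values $n = 10$, $r = 3$, $p = 0.25$ are arbitrary choices.

```python
import random


def indicator(rng, p):
    """I_A for an event A with P(A) = p: returns 1 if A occurs, else 0."""
    return 1 if rng.random() < p else 0


def binomial_as_indicator_sum(rng, n, p):
    """X = Y_1 + ... + Y_n, with Y_i independent Bernoulli(p) indicators."""
    return sum(indicator(rng, p) for _ in range(n))


def geometric_trials(rng, p):
    """Number of Bernoulli(p) trials up to and including the first success."""
    trials = 1
    while not indicator(rng, p):
        trials += 1
    return trials


def negative_binomial_as_sum(rng, r, p):
    """X = X_1 + ... + X_r: total trials until the r-th success, built as a
    sum of r independent Geometric(p) trial counts (one per success)."""
    return sum(geometric_trials(rng, p) for _ in range(r))


def long_run_average(draw, reps=50_000, seed=1):
    """Average of `reps` independent draws; approximates the expectation."""
    rng = random.Random(seed)
    return sum(draw(rng) for _ in range(reps)) / reps


if __name__ == "__main__":
    n, r, p = 10, 3, 0.25
    print(long_run_average(lambda rng: indicator(rng, p)), p)                         # P(A) = E(I_A)
    print(long_run_average(lambda rng: binomial_as_indicator_sum(rng, n, p)), n * p)  # E(X) = np
    print(long_run_average(lambda rng: negative_binomial_as_sum(rng, r, p)), r / p)   # E(X) = r/p
```

Linearity, $E\left(\sum Y_i\right) = \sum E(Y_i)$, does not require independence; the sketch uses independent draws only so that the sums actually have the Binomial and Negative Binomial distributions.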