Boilerplate Detection using Shallow Text Features

Christian Kohlschütter, Peter Fankhauser, Wolfgang Nejdl

Classification

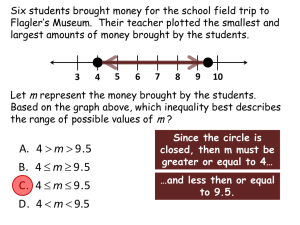

• What is classification?

• Goal: previously unseen records should be assigned a class as accurately as possible.

• Training Set

• Each record contains a set of attributes; one of the attributes is the class.

• A test set is used to determine the accuracy of the model. Usually, the given data set is divided into training and test sets, with the training set used to build the model and the test set used to validate it.
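The split described above can be sketched in a few lines of Python. This is a minimal illustration, not part of the original slides: the function name `train_test_split`, the 30% test fraction, the fixed seed, and the toy records are all assumptions.

```python
import random

def train_test_split(records, test_fraction=0.3, seed=0):
    """Shuffle a copy of the records and split it into (train, test)."""
    rng = random.Random(seed)          # fixed seed so the split is repeatable
    shuffled = list(records)           # copy, so the caller's list is untouched
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

# Toy records (made up): one attribute plus the class label.
records = [({"num_words": n}, "content" if n > 10 else "boilerplate")
           for n in range(5, 25, 2)]
train, test = train_test_split(records)
```

The model is built from `train` only; `test` is held back to estimate accuracy on unseen records.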

Applications Of Classification

• Fraud Detection

• Customer Attrition/Churn

• Spam Mail

• Direct marketing

• Many more…

Text Classification

• Text Classification is the task of assigning documents expressed in natural language to one or more classes belonging to a predefined set.

• The classifier:

– Input: a document x

– Output: a predicted class y from some fixed set of labels y1,...,yK

Application: Text Classification

• Classify news stories as World, US, Business, SciTech, Sports, Entertainment, Health, Other

• Classify student essays as A, B, C, D, or F.

• Classify PDF files as ResearchPaper, Other

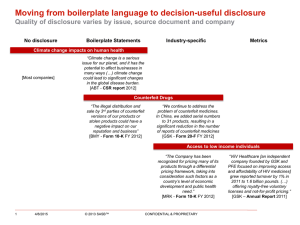

• Boilerplate Detection in web pages

• Text not related to main content

Web Page

Boilerplate Content

Boilerplate Content Removed

• Web Page Features Used for Classification

– Structural Features

» HTML Tags

– Shallow Text Features

» Average word/sentence length

– Densitometric Features

» Text Density

Shallow Text Features

• Examine Document at Text Block Level

– Numbers: Words, Tokens contained in block

– Average Lengths: Tokens, Sentences

– Ratios: Uppercased words

– Block-level HTML tags <P>, <Hn>, <DIV>

– Densities: Link Density (Anchor Text Percentage)

Link Density = (No. of tokens within A tags) / (No. of tokens in the block)

• Token Density

ρ(b) = (No. of tokens in block) / (No. of wrapped lines in block)

• Wrap text at a fixed line width (e.g. 80 chars)
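Both densities can be computed directly from the definitions above. The sketch below is an illustration only: the function names are hypothetical, tokens are approximated by whitespace splitting, and the wrapping uses Python's `textwrap` with the 80-character width mentioned on the slide.

```python
import textwrap

def link_density(num_anchor_tokens, num_block_tokens):
    """Fraction of a block's tokens that sit inside <A> tags."""
    if num_block_tokens == 0:
        return 0.0                      # empty block: define density as 0
    return num_anchor_tokens / num_block_tokens

def token_density(block_text, width=80):
    """Tokens per wrapped line, following rho(b) as written on the slide."""
    tokens = block_text.split()         # assumption: whitespace tokenization
    lines = textwrap.wrap(block_text, width=width)
    if not lines:
        return 0.0
    return len(tokens) / len(lines)

short_block = "Contact us"
long_block = " ".join(["word"] * 40)    # 40 tokens, wraps into 3 lines
```

A short navigation label such as "Contact us" fits on one line and yields a low density, while a long paragraph fills each wrapped line with many tokens.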

Classification Method Used

• 2-class (boilerplate vs. main content) problem

• 4-class (boilerplate, main content, headline, supplemental) problem

• Weka is used to examine the per-feature information gain and evaluate machine-learning classifiers based on decision trees (1R and C4.8)

– Weka is a collection of machine learning algorithms for data mining tasks

Learn One Rule - 1R

• The objective of this function is to extract the best rule that covers the current set of training instances

– What strategy is used for rule growing?

– What evaluation criteria are used for rule growing?

– What stopping criterion is used for rule growing?

Learn One Rule: Rule Growing Strategy

• General-to-specific approach

– It is initially assumed that the best rule is the empty rule, r : { } → y, where y is the majority class of the instances

– Iteratively add new conjuncts to the LHS of the rule until the stopping criterion is met

• Specific-to-general approach

– A positive instance is chosen as the initial seed for a rule

– The function keeps refining this rule by generalizing the conjuncts until the stopping criterion is met
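The general-to-specific strategy can be sketched as a greedy loop. This is a simplified illustration, not the procedure used by any particular tool: it assumes categorical attributes, scores a rule by the fraction of covered records that carry the target class, and stops as soon as no added conjunct improves that score. The function names and the toy records are made up.

```python
def rule_accuracy(rule, records, target):
    """Return (fraction of covered records labelled target, number covered)."""
    covered = [r for r in records
               if all(r[attr] == val for attr, val in rule.items())]
    if not covered:
        return 0.0, 0
    correct = sum(1 for r in covered if r["label"] == target)
    return correct / len(covered), len(covered)

def grow_rule(records, target, attributes):
    """Start from the empty rule and greedily add the best conjunct."""
    rule = {}                                   # empty LHS covers everything
    best_acc, _ = rule_accuracy(rule, records, target)
    while True:
        best_candidate = None
        for attr in attributes:
            if attr in rule:
                continue                        # each attribute used once
            for value in sorted({r[attr] for r in records}):
                candidate = {**rule, attr: value}
                acc, covered = rule_accuracy(candidate, records, target)
                if covered > 0 and acc > best_acc:
                    best_acc, best_candidate = acc, candidate
        if best_candidate is None:              # stopping criterion: no gain
            return rule, best_acc
        rule = best_candidate

# Toy training records (made up for illustration).
records = [
    {"has_link": "yes", "short": "yes", "label": "boilerplate"},
    {"has_link": "yes", "short": "no",  "label": "content"},
    {"has_link": "no",  "short": "yes", "label": "content"},
    {"has_link": "no",  "short": "no",  "label": "content"},
    {"has_link": "yes", "short": "yes", "label": "boilerplate"},
]
rule, acc = grow_rule(records, "boilerplate", ["has_link", "short"])
```

On this toy data the rule grows from `{}` to `has_link = yes` and then to `has_link = yes AND short = yes`, at which point every covered record is boilerplate.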

Rule Evaluation and Stopping Criteria

• Evaluate rules using a rule evaluation metric

– Accuracy = (TP + TN) / (TP + FP + FN + TN)

– Coverage

– F-measure: a measure of test accuracy that considers both precision and recall

• A typical stopping test compares the evaluation metric of the previous candidate rule with that of the newly grown rule; growing stops when the new rule is no better.

• 1R used:

– A block with a text density less than 10.5 is regarded as boilerplate
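A single-threshold rule of this kind can be learned by trying every cut point on one numeric feature and keeping the one with the fewest training errors. The sketch below is a simplified stand-in for 1R, which in practice also discretizes numeric attributes and chooses among several attributes. The function name and the toy densities are assumptions, so the learned threshold is not the 10.5 reported above.

```python
def learn_threshold(values, labels, low_label="boilerplate",
                    high_label="content"):
    """Find the cut t minimizing errors of: value < t -> low_label."""
    distinct = sorted(set(values))
    # Candidate cuts are midpoints between neighbouring distinct values.
    candidates = [(a + b) / 2 for a, b in zip(distinct, distinct[1:])]
    best_t, best_errors = None, len(values) + 1
    for t in candidates:
        errors = sum(
            1 for v, y in zip(values, labels)
            if (low_label if v < t else high_label) != y
        )
        if errors < best_errors:
            best_t, best_errors = t, errors
    return best_t, best_errors

# Toy text densities and labels (made up for illustration).
densities = [2.0, 3.5, 5.0, 12.0, 15.0, 20.0]
labels = ["boilerplate"] * 3 + ["content"] * 3
threshold, errors = learn_threshold(densities, labels)
```

Here the best cut falls between 5.0 and 12.0, separating the two classes without training errors.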

Data Set

• GoogleNews Dataset

– 621 news articles from 408 web sites, randomly sampled from a crawl of 254,000 pages of English Google News over 4 months

– manually assessed by the L3S research group

Cost-Sensitive Measures

• Precision

P = TP / (TP + FP)

• Recall

R = TP / (TP + FN)

• F-measure

F = 2RP / (R + P)
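The three measures follow directly from the confusion-matrix counts. A minimal sketch, with a hypothetical function name and made-up counts; zero denominators are mapped to 0.0 as a convention chosen here:

```python
def precision_recall_f(tp, fp, fn):
    """Return (P, R, F) from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f_measure = 2 * recall * precision / (recall + precision)
    return precision, recall, f_measure

# Made-up counts: 8 blocks correctly flagged, 2 false alarms, 2 misses.
p, r, f = precision_recall_f(tp=8, fp=2, fn=2)
```

Because F is the harmonic mean of P and R, it stays low unless both are high.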

Linguistic Interpretation

• Descriptive nature of long text

• Short text – Grammatically incomplete

• E.g. “Contact us”, “Read more”

Inference from Experiments

• Combination of just two features (number of words and link density) leads to a simple classification model

• Very high classification/extraction accuracy (92-98%)
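A two-feature model of this shape could look like the sketch below. It only illustrates the idea: the function name is hypothetical, and the thresholds are required parameters because the slides do not state the learned values. The numbers in the usage lines are arbitrary placeholders, not results from the experiments.

```python
def classify_block(num_words, link_density, min_words, max_link_density):
    """Label a text block using only word count and link density."""
    if link_density > max_link_density:
        return "boilerplate"            # mostly anchor text, e.g. navigation
    if num_words < min_words:
        return "boilerplate"            # very short block, e.g. "Read more"
    return "content"

# Placeholder thresholds chosen arbitrarily for the example.
MIN_WORDS = 10
MAX_LINK_DENSITY = 0.5

nav = classify_block(2, 1.0, MIN_WORDS, MAX_LINK_DENSITY)
article = classify_block(120, 0.02, MIN_WORDS, MAX_LINK_DENSITY)
```

In practice the thresholds would be learned from labelled blocks rather than set by hand.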

Impact of Boilerplate Detection on Search

• Keywords that are not relevant to the actual main content can be avoided

• Increase of Retrieval Precision

– Evaluated on the BLOGS06 web research collection

– For 50 top-k searches

QUESTIONS

???