LSS-MPC

Mojtaba Hajihasani

Mentor: Dr. Twohidkhah

Contents

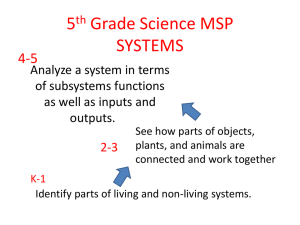

Introduction

Large-Scale Systems Modeling

Aggregation Methods

Perturbation Methods

Structural Properties of Large-Scale Systems

Hierarchical Control of Large-Scale Systems

Coordination of Hierarchical Structures

Hierarchical Control of Linear Systems

Decentralized Control of Large-Scale Systems

Distributed Control of Large-Scale Systems

MPC of Large-Scale Systems

Introduction

Many technological, societal, and environmental processes are highly complex, "large" in dimension, and uncertain by nature.

How large is large?

A system is considered large-scale if it can be decoupled or partitioned into a number of interconnected subsystems, or "small-scale" systems, for either computational or practical reasons, or if its dimensions are so large that conventional techniques of modeling, analysis, control, design, and computation fail to give reasonable solutions with reasonable computational efforts.

Introduction

Since the early 1950s, when classical control theory was being established, engineers have devised several procedures for analyzing and designing a given system.

These procedures can be summarized as follows:

Modeling procedures

Behavioral procedures of systems

Control procedures

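The underlying assumption of these procedures is "centrality": all system information, and all computations based on it, are available at a single center.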

A notable characteristic of most large-scale systems is that centrality fails to hold due to either the lack of centralized computing capability or the lack of centralized information, e.g., in society, business, management, the economy, the environment, energy, data networks, aeronautical systems, power networks, space structures, transportation, aerospace, water resources, ecology, robotic systems, and flexible manufacturing systems.

Aggregation Methods

Perturbation Methods

Introduction

In any modeling task, two often conflicting factors prevail:

"simplicity"

"accuracy"

The key to a valid modeling philosophy is:

The purpose of the model

The system's boundary

A structural relationship

A set of system variables

Elemental equations

Physical compatibility

Elemental, continuity, and compatibility equations should be manipulated to obtain the final model

The last step to a successful modeling

Introduction

The common practice has been to work with simple and less accurate models. There are two different motivations for this practice:

(i) the reduction of computational burden for system simulation, analysis, and design;

(ii) the simplification of control structures resulting from a simplified model.

Until recently there have been only two schemes for modeling large-scale systems:

Aggregation methods, originating in economics

Perturbation methods, originating in mathematics

Aggregation Method

An aggregated model uses a "coarser" set of state variables.

For example, behind an aggregated variable such as the consumer price index, numerous economic variables and parameters may be involved.

The underlying reason: retain the key qualitative properties of the system, such as stability.

In other words, the stability of a system described by several state variables is entirely represented by a single variable: the Lyapunov function.
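The idea that one scalar can summarize stability can be sketched in Python for a hypothetical 2×2 system $\dot{x} = Ax$: solve the Lyapunov equation $A^T P + P A = -Q$ for a symmetric $P$, then watch $V(x) = x^T P x$ decrease along a simulated trajectory. The matrix `A`, the choice `Q = I`, the forward-Euler step and all helper names are assumptions made for illustration.

```python
# Sketch: a single scalar V(x) = x^T P x summarizing the stability of a
# hypothetical 2-state system x' = A x (A, Q and the step size are assumptions).


def det3(M):
    """Determinant of a 3x3 matrix (cofactor expansion along row 0)."""
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))


def lyap2(A, Q):
    """Solve A^T P + P A = -Q for a symmetric 2x2 P via Cramer's rule."""
    (a, b), (c, d) = A
    # Unknowns (p11, p12, p22); rows are the (1,1), (1,2), (2,2) entries.
    M = [[2 * a, 2 * c, 0.0],
         [b, a + d, c],
         [0.0, 2 * b, 2 * d]]
    rhs = [-Q[0][0], -Q[0][1], -Q[1][1]]
    D = det3(M)
    sol = []
    for k in range(3):
        Mk = [row[:] for row in M]
        for i in range(3):
            Mk[i][k] = rhs[i]          # replace column k by the right-hand side
        sol.append(det3(Mk) / D)
    p11, p12, p22 = sol
    return [[p11, p12], [p12, p22]]


def V(P, x):
    """Quadratic Lyapunov function x^T P x."""
    return P[0][0] * x[0] ** 2 + 2 * P[0][1] * x[0] * x[1] + P[1][1] * x[1] ** 2


A = [[-1.0, 1.0], [0.0, -2.0]]   # hypothetical stable system
Q = [[1.0, 0.0], [0.0, 1.0]]
P = lyap2(A, Q)

# Forward-Euler simulation; record V at every step.
x, h, values = [1.0, 1.0], 0.01, []
for _ in range(300):
    values.append(V(P, x))
    x = [x[0] + h * (A[0][0] * x[0] + A[0][1] * x[1]),
         x[1] + h * (A[1][0] * x[0] + A[1][1] * x[1])]

print(P)
print(all(v_next < v for v, v_next in zip(values, values[1:])))
```

For this `A` the solution is $P = \begin{bmatrix} 1/2 & 1/6 \\ 1/6 & 1/3 \end{bmatrix}$, which is positive definite, so the single number $V$ certifies stability of both states at once. Cramer's rule keeps the sketch dependency-free; for larger systems a library solver such as SciPy's `scipy.linalg.solve_continuous_lyapunov` would be the usual choice.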

General Aggregation

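Consider the unaggregated system $\dot{x}(t) = A x(t) + B u(t)$ and its aggregation

$$z(t) = C x(t)$$

where $C$ is an $l \times n$ ($l < n$) constant aggregation matrix and the $l \times 1$ vector $z$ is called the aggregation of $x$. The aggregated system of order $l$ is

$$\dot{z}(t) = F z(t) + G u(t)$$

where the pair $(F, G)$ satisfies the following so-called dynamic exactness (perfect aggregation) conditions:

$$F C = C A, \qquad G = C B$$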

General Aggregation

The error vector is defined as $e(t) = z(t) - C x(t)$. Its dynamic behavior is given by

$$\dot{e}(t) = F e(t) + (FC - CA)\, x(t) + (G - CB)\, u(t),$$

which reduces to $\dot{e}(t) = F e(t)$ if the dynamic exactness conditions hold.
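The dynamic exactness conditions $FC = CA$ and $G = CB$ can be checked numerically. The pure-Python sketch below takes hypothetical matrices `A`, `B`, `C`, sets $G = CB$, computes the least-squares $F$ solving $FC \approx CA$, namely $F = CAC^T(CC^T)^{-1}$ (this assumes $C$ has full row rank), and reports the largest entry of $FC - CA$. A zero residual means the aggregation is dynamically exact; a nonzero residual means $e(t)$ is driven by $x(t)$. All matrices and helper names are made up for illustration.

```python
# Sketch: check the dynamic exactness conditions FC = CA, G = CB for a
# hypothetical aggregation z = C x (pure Python, small dense matrices).


def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def transpose(X):
    return [list(row) for row in zip(*X)]


def inverse(M):
    """Gauss-Jordan inversion with partial pivoting."""
    n = len(M)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(M)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[piv][col]) < 1e-12:
            raise ValueError("C C^T is singular; C must have full row rank")
        aug[col], aug[piv] = aug[piv], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def aggregate(A, B, C):
    """Return (F, G, residual) with F least-squares and residual = max |FC - CA|."""
    Ct = transpose(C)
    G = matmul(C, B)                                        # G = C B
    F = matmul(matmul(matmul(C, A), Ct), inverse(matmul(C, Ct)))
    FC, CA = matmul(F, C), matmul(C, A)
    residual = max(abs(p - q) for r1, r2 in zip(FC, CA) for p, q in zip(r1, r2))
    return F, G, residual


B = [[1.0], [0.0], [1.0]]
C = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]          # keep states 1 and 2

A_decoupled = [[-1.0, 0.0, 0.0], [0.0, -2.0, 0.0], [0.0, 0.0, -3.0]]
A_coupled = [[-1.0, 0.0, 1.0], [0.0, -2.0, 0.0], [0.0, 0.0, -3.0]]

F1, G1, res1 = aggregate(A_decoupled, B, C)    # state 3 does not affect z
F2, G2, res2 = aggregate(A_coupled, B, C)      # state 3 drives state 1
print(res1, res2)  # → 0.0 1.0
```

In the second case the neglected third state feeds the first, so no choice of $F$ makes $FC = CA$ and the aggregation is inexact.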

Example: Consider a third-order unaggregated system described by

It is desired to find a second-order aggregated model for this system.

General Aggregation

SOLUTION: The eigenvalues of $A$ are $\lambda(A) = \{-0.70862, -6.6482, -4.1604\}$; the first mode, $\lambda_1 = -0.70862$, is the slowest of the three.

Aggregation matrix C can be

The aggregated model becomes

The resulting error vector e(t) satisfies

An alternative choice of C

This choice of C also leads to a dynamically inexact aggregation.

Modal Aggregation

Balanced Aggregation

Perturbation Methods

The basic concept behind perturbation methods is to approximate a system's structure by neglecting certain interactions within the model, which leads to a lower-order model.

There are two basic classes:

weakly coupled models

strongly coupled models

Examples of weakly coupled systems arise in chemical process control and space guidance, where different subsystems are designed for flow, pressure, and temperature control.

weakly coupled models

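Consider the following large-scale system split into $k$ linear subsystems:

$$\dot{x}_i = A_{ii} x_i + \varepsilon \sum_{j \ne i} A_{ij} x_j + B_{ii} u_i + \varepsilon \sum_{j \ne i} B_{ij} u_j, \qquad i = 1, \dots, k$$

where $\varepsilon$ is a small positive coupling parameter, and $x_i$ and $u_i$ are the $i$th subsystem state and control vectors. This system, when $k = 2$, has been called the ε-coupled system. When $\varepsilon = 0$, the ε-coupled system decouples into two subsystems,

$$\dot{x}_1 = A_{11} x_1 + B_{11} u_1, \qquad \dot{x}_2 = A_{22} x_2 + B_{22} u_2$$

which correspond to two approximate aggregated models, one for each subsystem.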
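The effect of neglecting the ε-terms can be illustrated with a small simulation. The sketch below assumes two scalar subsystems with made-up coefficients, drops the input terms for brevity, integrates with forward Euler, and compares the ε-coupled trajectory with the decoupled ($\varepsilon = 0$) one. Because this particular example has nonnegative couplings and a positive initial state, the deviation grows with ε, so the decoupled models are good approximations only when ε is small.

```python
# Sketch: epsilon-coupled pair of scalar subsystems (inputs omitted),
#   x1' = a1*x1 + eps*a12*x2
#   x2' = eps*a21*x1 + a2*x2
# integrated with forward Euler; all coefficients are hypothetical.


def simulate(eps, a1=-1.0, a2=-2.0, a12=1.0, a21=1.0,
             x0=(1.0, 1.0), h=0.01, steps=500):
    x1, x2 = x0
    trajectory = [(x1, x2)]
    for _ in range(steps):
        dx1 = a1 * x1 + eps * a12 * x2
        dx2 = eps * a21 * x1 + a2 * x2
        x1, x2 = x1 + h * dx1, x2 + h * dx2
        trajectory.append((x1, x2))
    return trajectory


def max_deviation(eps, **params):
    """Largest state difference between the coupled and decoupled models."""
    coupled = simulate(eps, **params)
    decoupled = simulate(0.0, **params)
    return max(abs(c - d)
               for pc, pd in zip(coupled, decoupled)
               for c, d in zip(pc, pd))


for eps in (0.0, 0.01, 0.1, 0.5):
    print(eps, max_deviation(eps))
```

The printed deviations shrink roughly in proportion to ε, which is the practical justification for designing each subsystem on its own when the coupling is weak.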

Perturbation Method & Decentralized Control

In view of the decentralized structure of large-scale systems, these two subsystems can be designed separately in a decentralized fashion, as shown in the figure.

There is no hard evidence that these two model-reduction methods (aggregation and perturbation) are the most desirable for large-scale systems.

Structural Properties of Large-Scale Systems

Stability

Controllability

Observability

When the stability of a large-scale system is of concern, one basic approach, the "composite system method", has prevailed. It consists of three steps:

Decompose the given large-scale system into a number of small-scale subsystems.

Analyze each subsystem using classical stability theories and methods.

Combine the results with certain restrictive conditions on the interconnections, reducing them to the stability of the whole system.

One of the earliest approaches to the stability of composite systems uses the theory of vector Lyapunov functions.
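A sketch of the composite system method for the simplest case, scalar subsystems $\dot{x}_i = a_{ii} x_i + \sum_{j \ne i} a_{ij} x_j$ (an assumption made to keep the example short). Taking $v_i = |x_i|$ as the components of a vector Lyapunov function gives $D^+ v_i \le a_{ii} v_i + \sum_{j \ne i} |a_{ij}| v_j$, so the whole system is stable if the comparison matrix $W$ (with $w_{ii} = a_{ii}$, $w_{ij} = |a_{ij}|$) is such that $-W$ is a nonsingular M-matrix, checked here through the leading principal minors of $-W$. This is one sufficient test among several, and it can reject systems that are in fact stable.

```python
# Sketch: composite-system stability test with a vector Lyapunov function
# v_i = |x_i| for scalar subsystems x_i' = a_ii x_i + sum_{j != i} a_ij x_j.


def det(M):
    """Determinant by Gaussian elimination with partial pivoting."""
    M = [row[:] for row in M]
    n, sign, result = len(M), 1.0, 1.0
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if M[piv][col] == 0.0:
            return 0.0
        if piv != col:
            M[col], M[piv] = M[piv], M[col]
            sign = -sign
        result *= M[col][col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            M[r] = [a - f * b for a, b in zip(M[r], M[col])]
    return sign * result


def composite_stable(A):
    n = len(A)
    # Steps 1-2: every isolated (scalar) subsystem must be stable.
    if any(A[i][i] >= 0 for i in range(n)):
        return False
    # Step 3: comparison matrix W; require -W to be an M-matrix,
    # i.e. all leading principal minors of -W positive.
    neg_W = [[-A[i][j] if i == j else -abs(A[i][j]) for j in range(n)]
             for i in range(n)]
    return all(det([row[:k] for row in neg_W[:k]]) > 0 for k in range(1, n + 1))


print(composite_stable([[-3.0, 1.0], [1.0, -2.0]]))    # → True (weak interconnection)
print(composite_stable([[-1.0, 2.0], [2.0, -1.0]]))    # → False (system is unstable)
print(composite_stable([[-1.0, 2.0], [-2.0, -1.0]]))   # → False (stable, but test is conservative)
```

The last case has eigenvalues $-1 \pm 2i$, so it is stable even though the test fails: taking absolute values of the interconnections discards their sign, which is the price paid for reducing the analysis to subsystem-level bounds.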

The bulk of research on the controllability and observability of large-scale systems falls into four main problems:

controllability and observability of composite systems

controllability (and observability) of decentralized systems

structural controllability

controllability of singularly perturbed systems

Coordination of Hierarchical Structures

Hierarchical Control of Linear Systems

Hierarchical Structures

The idea of "decomposition" was first treated theoretically in mathematical programming by Dantzig and Wolfe.

Large linear programs of this kind often have sparse coefficient matrices.

The "decoupled" approach divides the original system into a number of subsystems, each depending on certain parameter values.

Each subsystem is solved independently for a fixed value of the so-called "decoupling" parameter, whose value is subsequently adjusted by a coordinator in an appropriate fashion so that the subsystems resolve their problems and the solution to the original system is obtained.

This approach is sometimes termed the "multilevel" or "hierarchical" approach.

Hierarchical Structures

There is no unique or universally accepted set of properties associated with hierarchical systems.

However, the following are some of the key properties:

The system consists of decision-making components.

The system has an overall goal.

The components at different levels exchange information (usually vertically).

As the level of hierarchy goes up, the time horizon increases.

Coordination of Hierarchical Structures

Most hierarchical control schemes are essentially a combination of two distinct approaches:

the model-coordination method (or "feasible" method)

the goal-coordination method (or "dual-feasible" method)

These methods are described for a two-subsystem static optimization (nonlinear programming) problem.

Model Coordination Method

static optimization problem

where x is a vector of system (state) variables, u is a vector of manipulated (control) variables, and y is a vector of interaction variables between subsystems.

Let the objective function be decomposed into two terms, one per subsystem.

By fixing the interaction variables y, the problem may be divided into the following two sequential problems:

First-level problem (for each subsystem)

Second-level problem

Model Coordination Method

The minimizations are to be done, respectively, over the following feasible sets:

Because every intermediate value of the interaction variables is feasible, the system can operate with these intermediate values with near-optimal performance.
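A minimal sketch of the two levels on a hypothetical two-subsystem problem: subsystem 1 chooses $u_1$ with cost $(u_1 - 1)^2$ and sends the interaction $y = u_1$; subsystem 2 receives $y$ and chooses $u_2$ to minimize $(u_2 - y)^2 + (u_2 - 3)^2$. With $y$ fixed by the coordinator, each first-level problem is solved in closed form; the second level then searches over $y$, here with golden-section search (one possible choice). Every trial value of $y$ yields a feasible operating point, which is what makes this the "feasible" method. The problem data and function names are assumptions.

```python
# Sketch of model coordination (feasible method) on a hypothetical problem:
#   subsystem 1: control u1, cost (u1 - 1)^2, outgoing interaction y = u1
#   subsystem 2: control u2, incoming y, cost (u2 - y)^2 + (u2 - 3)^2


def subsystem1(y):
    """First level, subsystem 1: with y fixed, u1 = y is the only feasible choice."""
    u1 = y
    return u1, (u1 - 1.0) ** 2


def subsystem2(y):
    """First level, subsystem 2: minimize (u2 - y)^2 + (u2 - 3)^2 for fixed y."""
    u2 = (y + 3.0) / 2.0
    return u2, (u2 - y) ** 2 + (u2 - 3.0) ** 2


def total_cost(y):
    return subsystem1(y)[1] + subsystem2(y)[1]


def coordinator(lo=-10.0, hi=10.0, tol=1e-9):
    """Second level: golden-section search over the interaction value y."""
    g = (5 ** 0.5 - 1) / 2
    a, b = lo, hi
    c, d = b - g * (b - a), a + g * (b - a)
    fc, fd = total_cost(c), total_cost(d)
    while b - a > tol:
        if fc < fd:          # minimum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - g * (b - a)
            fc = total_cost(c)
        else:                # minimum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + g * (b - a)
            fd = total_cost(d)
    return (a + b) / 2


y_opt = coordinator()
u1, _ = subsystem1(y_opt)
u2, _ = subsystem2(y_opt)
print(y_opt, u1, u2, total_cost(y_opt))
```

For this toy problem the analytic optimum is $y = u_1 = 5/3$, $u_2 = 7/3$, with total cost $4/3$, which the search reproduces to within its tolerance.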

Goal Coordination Method

In the goal coordination method, the interactions are literally removed by cutting all the links among the subsystems.

Let $y_i$ be the outgoing variable from the $i$th subsystem, and let $z_i$ denote its incoming variable. Because all links between subsystems are removed, in general $y_i \ne z_i$.

To ensure that the individual subproblems yield a solution to the original problem, the interaction-balance principle must be satisfied, i.e., the independently selected $y_i$ and $z_i$ must actually become equal.

By introducing the z variables, the system's equations are given by

Goal Coordination Method

The set of allowable system variables is defined by

Objective function:

Expanding the penalty term:

First-level problem

Second-level problem

Goal Coordination Method

It will be seen later that the coordinating variable α can be interpreted as a vector of Lagrange multipliers, and the second-level problem can be solved through well-known iterative search methods, such as the gradient, Newton's, or conjugate gradient methods.
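A minimal sketch of goal coordination on a hypothetical two-subsystem problem with the link cut: subsystem 1 picks $u_1$ (outgoing $y_1 = u_1$, cost $(u_1 - 1)^2$); subsystem 2 treats its incoming $z_2$ as a free variable and minimizes $(u_2 - z_2)^2 + (u_2 - 3)^2$. The coordinator prices the interaction with a Lagrange multiplier α by adding $\alpha(z_2 - y_1)$ to the total cost, so each subsystem solves its own problem for fixed α (in closed form here), and the second level updates α by a gradient step on the interaction error $z_2 - y_1$. The cost functions, the sign convention of the α-term, and the step size are assumptions; a Newton or conjugate gradient update could replace the plain gradient step.

```python
# Sketch of goal coordination (dual-feasible method), hypothetical problem:
#   subsystem 1: control u1, cost (u1 - 1)^2, outgoing y1 = u1
#   subsystem 2: control u2, incoming z2, cost (u2 - z2)^2 + (u2 - 3)^2
#   interaction balance required at the optimum: z2 = y1
# The coordinator adds alpha * (z2 - y1) to the total cost.


def subsystem1(alpha):
    """Minimize (u1 - 1)^2 - alpha * u1; returns y1 = u1."""
    return 1.0 + alpha / 2.0


def subsystem2(alpha):
    """Minimize (u2 - z2)^2 + (u2 - 3)^2 + alpha * z2 jointly over (u2, z2)."""
    u2 = 3.0 - alpha / 2.0
    z2 = 3.0 - alpha
    return u2, z2


def coordinator(alpha=0.0, step=0.5, tol=1e-10, max_iter=100):
    """Second level: gradient ascent on the dual, driven by the interaction error."""
    for iteration in range(1, max_iter + 1):
        y1 = subsystem1(alpha)
        u2, z2 = subsystem2(alpha)
        error = z2 - y1               # interaction error (gradient of the dual)
        if abs(error) < tol:
            break
        alpha += step * error         # alpha^{l+1} = alpha^l + eps * d^l
    return alpha, y1, u2, z2, iteration


alpha, y1, u2, z2, iterations = coordinator()
print(alpha, y1, u2, z2, iterations)
```

The multiplier converges to $\alpha = 4/3$, where $y_1 = z_2 = 5/3$ and $u_2 = 7/3$: the interaction balance is restored, and the result matches minimizing the total cost directly with $z_2 = y_1$ substituted.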

Hierarchical Control of Linear Systems

The goal coordination formulation of multilevel systems is applied to large-scale linear continuous-time systems.

A large-scale dynamic interconnected system

It is assumed that the system can be decomposed into $N$ interconnected subsystems $S_i$, $i = 1, \dots, N$.

Hierarchical Control of Linear Systems

The objective, in an optimal control sense

Through the assumed decomposition of system into N interconnected subsystems

The above problem is known as a hierarchical (multilevel) control problem.

Linear System Two-Level Coordination

Consider a large-scale linear time-invariant system:

decompose into

interaction vector

The original system's optimal control problem

Linear System Two-Level Coordination

The "goal coordination" or "interaction balance" approach, as applied to the "linear-quadratic" problem, is now presented.

The global problem $S_G$ is replaced by a family of $N$ subproblems coupled together through a parameter vector $\alpha = (\alpha_1, \alpha_2, \dots, \alpha_N)$ and denoted by $S_i(\alpha)$.

The coordinator, in turn, evaluates the next updated value of α:

$$\alpha^{l+1} = \alpha^{l} + \varepsilon^{l} d^{l}$$

where $\varepsilon^{l}$ is the $l$th iteration step size, and the update term $d^{l}$ is commonly taken as a function of the "interaction error", i.e., the amount by which the interaction-balance condition is violated at iteration $l$.

Reference

M. Jamshidi, "Large-Scale Systems: Modeling, Control and Fuzzy Logic", Prentice Hall PTR, New Jersey, 1997.

Thanks for your attention!