
(1+ε)-Approximate Sparse Recovery

Eric Price

MIT

David Woodruff

IBM Almaden

Compressed Sensing

• Choose an $r \times n$ matrix A

• Given $x \in \mathbb{R}^n$

• Compute Ax

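• Output a vector y so that, with constant probability over the choice of A,

$\|x-y\|_p \le (1+\varepsilon)\,\|x-x_{\text{top }k}\|_p$

• $x_{\text{top }k}$ is the k-sparse vector consisting of the k largest-magnitude coefficients of x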

• p = 1 or p = 2

• Minimize number r = r(n, k, ε) of “measurements”
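As a minimal sketch (not part of the talk), the guarantee $\|x-y\|_p \le (1+\varepsilon)\|x-x_{\text{top }k}\|_p$ can be checked numerically for a given output y. `top_k` and `satisfies_guarantee` are hypothetical helper names, and NumPy is assumed:

```python
import numpy as np


def top_k(x: np.ndarray, k: int) -> np.ndarray:
    """Return x_top_k: keep the k largest-magnitude entries of x and zero the rest.

    Assumes 1 <= k <= len(x); ties are broken arbitrarily by argsort.
    """
    out = np.zeros_like(x)
    idx = np.argsort(np.abs(x))[-k:]  # indices of the k largest |x_i|
    out[idx] = x[idx]
    return out


def satisfies_guarantee(x: np.ndarray, y: np.ndarray, k: int, eps: float, p: int) -> bool:
    """Check ||x - y||_p <= (1 + eps) * ||x - x_top_k||_p (p = 1 or p = 2)."""
    err = np.linalg.norm(x - y, ord=p)
    best = np.linalg.norm(x - top_k(x, k), ord=p)
    return bool(err <= (1 + eps) * best)


x = np.array([5.0, -0.1, 0.2, 4.0, 0.05])
print(top_k(x, 2))                                      # keeps only 5.0 and 4.0
print(satisfies_guarantee(x, np.zeros(5), 2, 0.1, 1))   # False: y = 0 is far from optimal
```

Outputting $x_{\text{top }k}$ itself always passes the check; the difficulty is producing such a y from the r measurements Ax alone.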

Previous Work

• p = 1

[IR, …] r = O(k log(n/k) / ε) (deterministic A)

• p = 2

[GLPS] r = O(k log(n/k) / ε)

In both cases, $r = \Omega(k\log(n/k))$ [DIPW]

What is the dependence on ε?

Why 1+ε is Important

• Suppose $x = e_i + u$

– $e_i = (0, 0, \ldots, 0, 1, 0, \ldots, 0)$

• Consider $y = 0^n$

– $\|x-y\|_2 = \|x\|_2 \le \sqrt{2}\,\|x-e_i\|_2$

– It’s a trivial solution!

• (1+ε)-approximate recovery fixes this

• E.g., we may have 1/ε = 100 while log n = 32
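A small numeric illustration, assuming (this is not specified on the slide) that the tail u spreads unit $\ell_2$ mass evenly over the n − 1 coordinates other than i, so that u is orthogonal to $e_i$:

```python
import numpy as np

n, i, eps = 1000, 0, 0.01
e_i = np.zeros(n)
e_i[i] = 1.0
# Hypothetical tail: unit l2 norm, spread evenly, supported off coordinate i.
u = np.full(n, 1.0 / np.sqrt(n - 1))
u[i] = 0.0
x = e_i + u

y = np.zeros(n)                                            # the trivial output 0^n
ratio = np.linalg.norm(x - y) / np.linalg.norm(x - e_i)
print(ratio)               # about 1.414, i.e. sqrt(2)
print(ratio <= 1 + eps)    # False: 0^n is not a (1+eps)-approximation
```

Any guarantee with approximation factor √2 or larger accepts $y = 0^n$ here, whereas the (1+ε) guarantee with ε = 0.01 rejects it.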

Our Results Vs. Previous Work

• p = 1:

[IR, …] r = O(k log(n/k) / ε)

Ours: $r = O(k\log(n/k)\cdot\log^2(1/\varepsilon)/\varepsilon^{1/2})$ (randomized) and $r = \Omega(k\log(1/\varepsilon)/\varepsilon^{1/2})$

• p = 2:

[GLPS] r = O(k log(n/k) / ε)

Ours: $r = \Omega(k\log(n/k)/\varepsilon)$

Previous lower bounds: $\Omega(k\log(n/k))$

Our lower bounds hold for randomized schemes with constant success probability

Comparison to Deterministic Schemes

• We get a randomized upper bound of $r = \tilde{O}(k/\varepsilon^{1/2})$ for p = 1

• We show $r = \Omega(k\log(n/k)/\varepsilon)$ for p = 1 for deterministic schemes

• So the randomized problem is easier than the deterministic one

Our Sparse-Output Results

• Output a vector y from Ax so that $\|x-y\|_p \le (1+\varepsilon)\,\|x-x_{\text{top }k}\|_p$

• Sometimes we want y to be k-sparse: then $r = \tilde{\Theta}(k/\varepsilon^p)$

• Both results are tight up to logarithmic factors

• Recall that for non-sparse output, $r = \tilde{\Theta}(k/\varepsilon^{p/2})$

Talk Outline

1. $\tilde{O}(k/\varepsilon^{1/2})$ upper bound for p = 1

2. Lower bounds

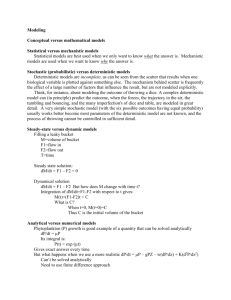

Simplifications

• Want $\tilde{O}(k/\varepsilon^{1/2})$ for p = 1

• Replace k with 1

– Sample 1/k fraction of coordinates

– Solve the problem for k = 1 on the sample

– Repeat O~(k) times independently

– Combine the solutions found

(Figure: a vector $(\varepsilon/k, \ldots, \varepsilon/k, 1/n, \ldots, 1/n)$ is subsampled down to $(\varepsilon/k, 1/n, \ldots, 1/n)$)
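The "replace k with 1" reduction can be simulated as follows. As a stand-in for actually running the k = 1 algorithm, the hypothetical helper `isolated_heavy` just reports the heavy index when exactly one heavy coordinate survives the 1/k sample; the repetition count $8ek\ln k$ is an illustrative choice of $\tilde{O}(k)$, not the paper's constant:

```python
import math
import random
from typing import Optional, Set


def isolated_heavy(heavy: Set[int], n: int, k: int, rng: random.Random) -> Optional[int]:
    """Keep each coordinate with probability 1/k; if exactly one heavy coordinate
    survives, the sample is a k = 1 instance and its heavy index is returned."""
    sample = [i for i in range(n) if rng.random() < 1.0 / k]
    hits = [i for i in sample if i in heavy]
    return hits[0] if len(hits) == 1 else None


def cover_heavy(heavy: Set[int], n: int, k: int, reps: int, seed: int = 0) -> Set[int]:
    """Solve `reps` independent k = 1 subproblems and combine the heavy indices found."""
    rng = random.Random(seed)
    found: Set[int] = set()
    for _ in range(reps):
        j = isolated_heavy(heavy, n, k, rng)
        if j is not None:
            found.add(j)
    return found


k, n = 10, 1000
heavy = set(range(k))                            # hypothetical: the first k coordinates are heavy
reps = math.ceil(8 * math.e * k * math.log(k))   # O~(k) repetitions (illustrative constant)
print(sorted(cover_heavy(heavy, n, k, reps)))    # with high probability all of 0..9
```

Each repetition isolates a given heavy coordinate with probability $(1/k)(1-1/k)^{k-1} \ge 1/(ek)$, which is why $\tilde{O}(k)$ repetitions find all of them with high probability.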

k = 1

• Assume $\|x-x_{\text{top}}\|_1 = 1$ and $x_{\text{top}} = \varepsilon$

• First attempt:

– Use CountMin [CM]

– Randomly partition the coordinates into B buckets and maintain the sum in each bucket, e.g., $\sum_{i:\,h(i)=2} x_i$ for bucket 2

• The expected $\ell_1$ mass of “noise” in a bucket is 1/B

• If $B = \Theta(1/\varepsilon)$, most buckets have count < ε/2, but the bucket that contains $x_{\text{top}}$ has count > ε/2

• Repeat O(log n) times
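A minimal CountMin sketch for this k = 1 setting, assuming non-negative entries (so bucket sums never underestimate) and simulating the random hash with a seeded NumPy generator; the function name and the constant in B = 4/ε are illustrative assumptions:

```python
import numpy as np


def countmin_find_top(x: np.ndarray, B: int, reps: int, seed: int = 0) -> int:
    """CountMin with B buckets and `reps` independent hash functions.

    Each coordinate's estimate is the minimum of its bucket sums over the repetitions
    (never an underestimate when x >= 0); the index with the largest estimate is returned.
    """
    rng = np.random.default_rng(seed)
    n = len(x)
    estimates = np.full(n, np.inf)
    for _ in range(reps):
        h = rng.integers(0, B, size=n)                   # random bucket h(i) for each i
        counts = np.bincount(h, weights=x, minlength=B)  # sum of x_i in each bucket
        estimates = np.minimum(estimates, counts[h])
    return int(np.argmax(estimates))


n, eps = 10_000, 0.1
x = np.full(n, 1.0 / n)          # non-negative tail with l1 mass about 1
x[1234] = eps                    # the top coordinate, x_top = eps
B = round(4 / eps)               # B = Theta(1/eps) buckets (the constant 4 is arbitrary)
reps = int(np.ceil(np.log2(n)))  # O(log n) repetitions
print(countmin_find_top(x, B, reps))  # 1234
```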

Second Attempt

• But we wanted $\tilde{O}(1/\varepsilon^{1/2})$ measurements

• The error in a bucket is 1/B, so we need $B \approx 1/\varepsilon$

• What about CountSketch? [CCF-C]

– Give each coordinate i a random sign $\sigma(i) \in \{-1, 1\}$

– Randomly partition the coordinates into B buckets, and maintain $\sum_{i:\,h(i)=j} \sigma(i)\cdot x_i$ in the j-th bucket (e.g., $\sum_{i:\,h(i)=2} \sigma(i)\cdot x_i$ for bucket 2)

– The bucket error is $\left(\sum_{i \ne \text{top}} x_i^2 / B\right)^{1/2}$

– Is this better?
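A CountSketch counterpart under the same illustrative assumptions (seeded random hash h and signs σ, hypothetical function name). Taking the median over independent repetitions is the usual way to boost the success probability of each per-coordinate estimate:

```python
import numpy as np


def countsketch_estimates(x: np.ndarray, B: int, reps: int, seed: int = 0) -> np.ndarray:
    """Median-of-`reps` CountSketch estimates of every coordinate of x.

    Bucket j stores sum_{i: h(i) = j} sigma(i) * x_i; sigma(i) times the bucket of i
    is an unbiased estimate of x_i whose error is about (sum_{l != i} x_l^2 / B)^(1/2).
    """
    rng = np.random.default_rng(seed)
    n = len(x)
    rows = np.empty((reps, n))
    for r in range(reps):
        h = rng.integers(0, B, size=n)           # random bucket h(i)
        sigma = rng.choice([-1.0, 1.0], size=n)  # random sign sigma(i)
        buckets = np.bincount(h, weights=sigma * x, minlength=B)
        rows[r] = sigma * buckets[h]
    return np.median(rows, axis=0)


rng = np.random.default_rng(42)
x = rng.normal(0.0, 0.002, size=5000)  # small signed tail (illustrative)
x[17] = 1.0                            # one heavy coordinate
est = countsketch_estimates(x, B=100, reps=9, seed=7)
print(round(float(est[17]), 3))        # close to 1.0
```

Here the tail's $\ell_2^2$ mass is about 0.02, so with B = 100 the per-repetition error is on the order of $(0.02/100)^{1/2} \approx 0.014$.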

CountSketch

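• Bucket error $\mathrm{Err} = \left(\sum_{i \ne \text{top}} x_i^2 / B\right)^{1/2}$

• All $|x_i| \le \varepsilon$ and $\|x-x_{\text{top}}\|_1 = 1$

• $\sum_{i \ne \text{top}} x_i^2 \le (1/\varepsilon)\cdot\varepsilon^2 = \varepsilon$, since $\sum_i x_i^2 \le \max_i |x_i| \cdot \sum_i |x_i| \le \varepsilon \cdot 1$ (the worst case is 1/ε coordinates of value ε)

• So $\mathrm{Err} \le (\varepsilon/B)^{1/2}$, which needs to be at most ε

• Solving, $B \ge 1/\varepsilon$

• CountSketch isn’t better than CountMin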

Main Idea

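• We insist on using CountSketch with $B = 1/\varepsilon^{1/2}$

• Suppose $\mathrm{Err} = \left(\sum_{i \ne \text{top}} x_i^2 / B\right)^{1/2} = \varepsilon$

• This means $\sum_{i \ne \text{top}} x_i^2 = \varepsilon^{3/2}$

• Forget about $x_{\text{top}}$!

• Let’s make up the mass another way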

Main Idea

• We have: $\sum_{i \ne \text{top}} x_i^2 = \varepsilon^{3/2}$

• Intuition: suppose all $x_i$, $i \ne \text{top}$, are either the same value or 0

• Then: $(\#\text{non-zero}) \cdot \text{value} = 1$ and $(\#\text{non-zero}) \cdot \text{value}^2 = \varepsilon^{3/2}$

• Hence, value = $\varepsilon^{3/2}$ and # non-zero = $1/\varepsilon^{3/2}$

• Sample an ε-fraction of the coordinates uniformly at random!

– value = $\varepsilon^{3/2}$ and # non-zero sampled = $1/\varepsilon^{1/2}$, so the $\ell_1$-contribution is ε

– Find all non-zeros with $\tilde{O}(1/\varepsilon^{1/2})$ measurements
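The arithmetic in the intuition can be checked exactly for one concrete value; ε = 1/100 is an arbitrary choice that makes $\varepsilon^{1/2}$ and $\varepsilon^{3/2}$ rational:

```python
from fractions import Fraction

eps = Fraction(1, 100)
sqrt_eps = Fraction(1, 10)                 # eps^(1/2)
eps_3_2 = eps * sqrt_eps                   # eps^(3/2) = 1/1000

value = eps_3_2                            # common value of the non-zero tail entries
num_nonzero = 1 / eps_3_2                  # 1/eps^(3/2) = 1000 non-zeros

assert num_nonzero * value == 1            # l1 mass of the tail
assert num_nonzero * value**2 == eps_3_2   # squared l2 mass of the tail

sampled = eps * num_nonzero                # expected non-zeros kept at sampling rate eps
print(sampled, sampled * value)            # 10 1/100, i.e. 1/eps^(1/2) non-zeros with l1 mass eps
```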

General Setting

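• $S_j = \{\, i \mid 1/4^j < x_i^2 \le 1/4^{j-1} \,\}$

• $\sum_{i \ne \text{top}} x_i^2 = \varepsilon^{3/2}$ implies there is a j for which $|S_j|/4^j = \tilde{\Omega}(\varepsilon^{3/2})$

(Figure: tail entries grouped into levels with values $\varepsilon^{3/2}, \ldots$; $4\varepsilon^{3/2}, \ldots$; $16\varepsilon^{3/2}, \ldots$; up to $\varepsilon^{3/4}$)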
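The level sets $S_j$ can be computed straight from their definition. This sketch (hypothetical function name) uses exact rationals so that entries sitting on a boundary $x_i^2 = 1/4^{j-1}$ land in the right level, and it assumes all $|x_i| \le 1$:

```python
from fractions import Fraction
from typing import Dict, List


def level_sets(x: List[Fraction]) -> Dict[int, List[int]]:
    """Group the indices of x into S_j = {i : 1/4^j < x_i^2 <= 1/4^(j-1)}.

    Zero entries belong to no level; assumes every |x_i| <= 1, so that j >= 1.
    """
    levels: Dict[int, List[int]] = {}
    for i, xi in enumerate(x):
        sq = xi * xi
        if sq == 0:
            continue
        j = 1
        while sq <= Fraction(1, 4**j):  # x_i^2 is still too small for level j
            j += 1
        levels.setdefault(j, []).append(i)
    return levels


x = [Fraction(1, 2), Fraction(1, 3), Fraction(1, 8), Fraction(0), Fraction(-1, 8)]
S = level_sets(x)
for j in sorted(S):
    print(j, S[j], Fraction(len(S[j]), 4**j))  # prints "2 [0, 1] 1/8", then "4 [2, 4] 1/128"
```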

General Setting

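• If $|S_j| < 1/\varepsilon^{1/2}$, then $1/4^j > \varepsilon^2$, so $1/2^j > \varepsilon$; this can’t happen, since elements of $S_j$ have magnitude > $1/2^j$ while all $|x_i| \le \varepsilon$

• Else, sample at rate $1/(|S_j|\,\varepsilon^{1/2})$ to get $1/\varepsilon^{1/2}$ elements of $S_j$

• The $\ell_1$-mass of $S_j$ in the sample is > ε

• Can we find the sampled elements of $S_j$? Use $\sum_{i \ne \text{top}} x_i^2 = \varepsilon^{3/2}$

• The $\ell_2^2$ mass of the sample is about $\varepsilon^{3/2} \cdot 1/(|S_j|\,\varepsilon^{1/2}) = \varepsilon/|S_j|$

• Using CountSketch with $1/\varepsilon^{1/2}$ buckets:

Bucket error $= \sqrt{\varepsilon^{1/2} \cdot \varepsilon^{3/2} \cdot 1/(|S_j|\,\varepsilon^{1/2})} = \sqrt{\varepsilon^{3/2}/|S_j|} < 1/2^j$, since $|S_j|/4^j > \varepsilon^{3/2}$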

Algorithm Wrapup

• Sub-sample $O(\log(1/\varepsilon))$ times in powers of 2

• In each level of sub-sampling, maintain a CountSketch with $\tilde{O}(1/\varepsilon^{1/2})$ buckets

• Find as many heavy coordinates as you can!

• Intuition: if CountSketch fails, there are many heavy elements that can be found by sub-sampling

• This wouldn’t work for CountMin, as the bucket error could be ε because of n−1 items each of value ε/(n−1)
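A compact sketch of the wrap-up, under illustrative assumptions: level ℓ keeps each coordinate independently with probability $2^{-\ell}$ (levels are drawn independently here, not nested), each level keeps a median-of-`reps` CountSketch with $B = \lceil 4/\varepsilon^{1/2} \rceil$ buckets, and a sampled coordinate is reported when its estimate exceeds a threshold `tau` at some level. The function name, the constants, and the single fixed threshold are simplifications chosen for illustration; the sketch simulates the sketches on an explicit vector and omits combining the found coordinates into the output y:

```python
import math
from typing import Set

import numpy as np


def multilevel_heavy(x: np.ndarray, eps: float, tau: float, reps: int = 9, seed: int = 0) -> Set[int]:
    """Sub-sample x at rates 2^-level for O(log 1/eps) levels, keep a median-of-`reps`
    CountSketch with O(1/eps^(1/2)) buckets per level, and report every sampled index
    whose estimate exceeds the (hypothetical, fixed) threshold tau at some level."""
    rng = np.random.default_rng(seed)
    n = len(x)
    B = math.ceil(4 / math.sqrt(eps))            # buckets per level (constant 4 is arbitrary)
    num_levels = math.ceil(math.log2(1 / eps)) + 1
    found: Set[int] = set()
    for level in range(num_levels):
        keep = rng.random(n) < 2.0 ** (-level)   # independent sub-sample at rate 2^-level
        xs = np.where(keep, x, 0.0)              # the part of x this level's sketch sees
        est = np.empty((reps, n))
        for r in range(reps):
            h = rng.integers(0, B, size=n)
            sigma = rng.choice([-1.0, 1.0], size=n)
            buckets = np.bincount(h, weights=sigma * xs, minlength=B)
            est[r] = sigma * buckets[h]
        med = np.median(est, axis=0)
        found.update(int(i) for i in np.flatnonzero(keep & (np.abs(med) > tau)))
    return found


rng = np.random.default_rng(1)
x = rng.choice([-0.001, 0.001], size=3000)  # hypothetical small tail
x[5], x[10] = 0.5, -0.4                     # two heavy coordinates
print(sorted(multilevel_heavy(x, eps=0.01, tau=0.2)))  # should include 5 and 10
```

Deeper levels see a smaller sub-sample, so their buckets carry less noise; a coordinate hidden by noise at level 0 can surface at a deeper level if it survives the sub-sampling.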

Talk Outline

1. $\tilde{O}(k/\varepsilon^{1/2})$ upper bound for p = 1

2. Lower bounds

Our Results

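• General results:

– $\tilde{\Omega}(k/\varepsilon^{1/2})$ for p = 1

– $\Omega(k\log(n/k)/\varepsilon)$ for p = 2

• Sparse output:

– $\tilde{\Omega}(k/\varepsilon)$ for p = 1

– $\tilde{\Omega}(k/\varepsilon^2)$ for p = 2

• Deterministic:

– $\Omega(k\log(n/k)/\varepsilon)$ for p = 1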

Simultaneous Communication Complexity

(Figure: Alice holds x and sends $M_A(x)$; Bob holds y and sends $M_B(y)$; the referee must answer “What is f(x,y)?”)

• Alice and Bob each send a single message to the referee, who outputs f(x,y) with constant probability

• The communication cost CC(f) is the maximum message length, over the randomness of the protocol and all possible inputs

• The parties share randomness

Reduction to Compressed Sensing

• Shared randomness decides matrix A

• Alice sends Ax to referee

• Bob sends Ay to referee

• The referee computes A(x+y) = Ax + Ay and runs the compressed sensing recovery algorithm

• If the output of the algorithm solves f(x,y), then

# rows of A * # bits per measurement > CC(f)
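The referee's step uses only linearity, A(x+y) = Ax + Ay. A minimal sketch, assuming a shared seed plays the role of the shared randomness that fixes A; the function names and the Gaussian choice of A are illustrative:

```python
import numpy as np


def shared_matrix(seed: int, r: int, n: int) -> np.ndarray:
    """Both parties derive the same r x n matrix A from the shared random seed."""
    return np.random.default_rng(seed).standard_normal((r, n))


def alice_message(x: np.ndarray, seed: int, r: int) -> np.ndarray:
    return shared_matrix(seed, r, len(x)) @ x    # Alice sends Ax


def bob_message(y: np.ndarray, seed: int, r: int) -> np.ndarray:
    return shared_matrix(seed, r, len(y)) @ y    # Bob sends Ay


def referee_sketch(msg_a: np.ndarray, msg_b: np.ndarray) -> np.ndarray:
    """A(x + y) = Ax + Ay, so the referee can feed the sum to any recovery algorithm."""
    return msg_a + msg_b


rng = np.random.default_rng(0)
x, y = rng.standard_normal(50), rng.standard_normal(50)
seed, r = 123, 8                                 # shared randomness and number of rows
z = referee_sketch(alice_message(x, seed, r), bob_message(y, seed, r))
print(np.allclose(z, shared_matrix(seed, r, 50) @ (x + y)))  # True
```

Each message consists of r numbers, so once every measurement is rounded to a fixed number of bits, the message length is # rows of A times # bits per measurement.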

A Unified View

• General results: Direct-Sum Gap-$\ell_1$

– $\tilde{\Omega}(k/\varepsilon^{1/2})$ for p = 1

– $\tilde{\Omega}(k/\varepsilon)$ for p = 2

• Sparse output: Indexing

– $\tilde{\Omega}(k/\varepsilon)$ for p = 1

– $\tilde{\Omega}(k/\varepsilon^2)$ for p = 2

• Deterministic: Equality

– $\Omega(k\log(n/k)/\varepsilon)$ for p = 1

Tighter log factors are achievable by looking at Gaussian channels

General Results: k = 1, p = 1

• Alice and Bob have x, y, respectively, in $\mathbb{R}^m$

• There is a unique $i^*$ for which $(x+y)_{i^*} = d$

• For all $j \ne i^*$, $(x+y)_j \in \{0, c, -c\}$, where $|c| < |d|$

• Finding $i^*$ requires $\Omega(m/(d/c)^2)$ communication [SS, BJKS]

• $m = 1/\varepsilon^{3/2}$, $c = \varepsilon^{3/2}$, $d = \varepsilon$

• Need $\Omega(1/\varepsilon^{1/2})$ communication
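The parameter choice can be checked exactly for ε = 1/100 (an arbitrary value that keeps $\varepsilon^{1/2}$ rational):

```python
from fractions import Fraction

eps = Fraction(1, 100)
sqrt_eps = Fraction(1, 10)      # eps^(1/2)
m = 1 / (eps * sqrt_eps)        # m = 1/eps^(3/2) = 1000 coordinates
c = eps * sqrt_eps              # c = eps^(3/2)
d = eps                         # d = eps

bound = m / (d / c) ** 2        # the Omega(m / (d/c)^2) bound of [SS, BJKS]
tail_l1 = (m - 1) * c           # l1 mass if all m - 1 other entries equal +-c
print(bound, tail_l1)           # 10 999/1000: 1/eps^(1/2) bits, and a tail of l1 mass at most 1
```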

General Results: k = 1, p = 1

• But the compressed sensing algorithm doesn’t need to find $i^*$

• If it doesn’t, then it needs to transmit a lot of information about the tail

– Tail: a random low-weight vector in $\{0, \varepsilon^{3/2}, -\varepsilon^{3/2}\}^{1/\varepsilon^3}$

– Uses a distributional lower bound and RS codes

• Send a vector y within 1−ε of the tail in $\ell_1$-norm

• Needs $1/\varepsilon^{1/2}$ communication

General Results: k = 1, p = 2

• Same argument, different parameters

• $\Omega(1/\varepsilon)$ communication

• What about general k?

Handling General k

• Bounded Round Direct Sum Theorem [BR] (with slight modification): given k copies of a function f, with input pairs independently drawn from μ, solving a 2/3 fraction needs communication $\Omega(k \cdot \mathrm{CC}_\mu(f))$

(Figure: instance for p = 1, made of k blocks, each of the form $\varepsilon^{1/2}, \varepsilon^{3/2}, \ldots, \varepsilon^{3/2}$)

Handling General k

• CC = $\Omega(k/\varepsilon^{1/2})$ for p = 1

• CC = $\Omega(k/\varepsilon)$ for p = 2

• What is implied about compressed sensing?

Rounding Matrices [DIPW]

• A is a matrix of real numbers

• Can assume orthonormal rows

• Round the entries of A to O(log n) bits, obtaining matrix A’

• Careful:

– A’x = A(x+s) for “small” s

– But s depends on A, so there is no guarantee that recovery works on x+s

– Can be fixed by looking at A(x+s+u) for random u
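When A has orthonormal rows, one valid choice is $s = A^\top (A' - A)x$: then $As = (A'-A)x$ because $AA^\top = I$, so $A'x = A(x+s)$. A minimal NumPy sketch; rounding to 16 fractional bits stands in for O(log n) bits, the helper names are hypothetical, and the random-u fix is not shown:

```python
import numpy as np


def orthonormal_rows(r: int, n: int, seed: int = 0) -> np.ndarray:
    """Random r x n matrix with orthonormal rows (r <= n), from a QR factorization."""
    g = np.random.default_rng(seed).standard_normal((n, r))
    q, _ = np.linalg.qr(g)                  # q is n x r with orthonormal columns
    return q.T


def round_entries(A: np.ndarray, bits: int) -> np.ndarray:
    """Round every entry of A to the nearest multiple of 2^-bits."""
    scale = 2.0 ** bits
    return np.round(A * scale) / scale


A = orthonormal_rows(20, 200)
A_prime = round_entries(A, bits=16)
x = np.random.default_rng(1).standard_normal(200)
s = A.T @ ((A_prime - A) @ x)               # the "small" s; note that it depends on A
print(np.allclose(A_prime @ x, A @ (x + s)), np.linalg.norm(s) / np.linalg.norm(x))
```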

Lower Bounds for Compressed Sensing

• # rows of A * # bits per measurement > CC(f)

• By rounding, # bits per measurement = O(log n)

• In our hard instances, universe size = poly(k/ε)

• So # rows of A * O(log (k/ε)) > CC(f)

• # rows of A = $\tilde{\Omega}(k/\varepsilon^{1/2})$ for p = 1

• # rows of A = $\tilde{\Omega}(k/\varepsilon)$ for p = 2

Sparse-Output Results

Sparse output: Indexing

– $\tilde{\Omega}(k/\varepsilon)$ for p = 1

– $\tilde{\Omega}(k/\varepsilon^2)$ for p = 2

Sparse Output Results - Indexing

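• Alice has $x \in \{0,1\}^n$, Bob has $i \in \{1, 2, \ldots, n\}$: what is $x_i$?

• CC(Indexing) = $\Omega(n)$

$\Omega(1/\varepsilon)$ bound for k = 1, p = 1:

• $x \in \{-\varepsilon, \varepsilon\}^{1/\varepsilon}$ and $y = e_i$

• Consider x+y

• If the output is required to be 1-sparse, it must place its mass on the i-th coordinate

• The mass must be close to 1+ε if $x_i = \varepsilon$, and close to 1−ε otherwise

• Generalizes to k > 1 to give $\tilde{\Omega}(k/\varepsilon)$

• Generalizes to p = 2 to give $\tilde{\Omega}(k/\varepsilon^2)$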
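The decoding step can be checked numerically, assuming the $\ell_1$ guarantee and a 1-sparse output $z = v\,e_i$. Here $\|(x+y)-z\|_1 = (1-\varepsilon) + |v-(1+x_i)|$ while the best 1-sparse error is $1-\varepsilon$, so the (1+ε) guarantee forces $|v-(1+x_i)| \le \varepsilon - \varepsilon^2$, and comparing v with 1 recovers the bit. The encoding (bit 1 ↦ +ε, bit 0 ↦ −ε) and the helper names are illustrative assumptions:

```python
from typing import List

import numpy as np


def encode(bits: List[int], i: int, eps: float) -> np.ndarray:
    """x + y, where x_j = +eps if bits[j] == 1 and -eps otherwise, and y = e_i."""
    x = np.where(np.array(bits) == 1, eps, -eps)
    y = np.zeros(len(bits))
    y[i] = 1.0
    return x + y


def is_valid_output(w: np.ndarray, i: int, v: float, eps: float) -> bool:
    """Does z = v * e_i satisfy ||w - z||_1 <= (1 + eps) * ||w - w_top1||_1 ?"""
    z = np.zeros_like(w)
    z[i] = v
    best = np.abs(w).sum() - np.abs(w).max()  # l1 error of the best 1-sparse approximation
    return bool(np.abs(w - z).sum() <= (1 + eps) * best)


def decode(v: float) -> int:
    """Read the bit x_i off the mass v that the 1-sparse output puts on coordinate i."""
    return 1 if v > 1.0 else 0


eps = 0.1
bits = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]       # hypothetical input, 1/eps bits
for i in range(len(bits)):
    w = encode(bits, i, eps)
    valid = [v for v in np.linspace(0.5, 1.5, 1001) if is_valid_output(w, i, v, eps)]
    assert valid and all(decode(v) == bits[i] for v in valid)
print("every valid 1-sparse output decodes the bit correctly")
```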

Deterministic Results

Deterministic: Equality

– $\Omega(k\log(n/k)/\varepsilon)$ for p = 1

Deterministic Results - Equality

• Alice has $x \in \{0,1\}^n$, Bob has $y \in \{0,1\}^n$: is x = y?

• Deterministic CC(Equality) = $\Omega(n)$

$\Omega(k\log(n/k)/\varepsilon)$ bound for p = 1:

• Choose log n signals $x_1, \ldots, x_{\log n}$, each with k/ε values equal to ε/k

• Choose log n signals $y_1, \ldots, y_{\log n}$, each with k/ε values equal to ε/k

• Let $x = \sum_{i=1}^{\log n} 10^i x_i$ and $y = \sum_{i=1}^{\log n} 10^i y_i$

• Consider x − y

• The compressed sensing output is $0^n$ iff x = y

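General Results – Gaussian Channels (k = 1, p = 2)

• Alice has a signal $x = \varepsilon^{1/2} e_i$ for random $i \in [n]$

• Alice transmits x over a noisy channel with independent $N(0, 1/n)$ noise y on each coordinate

• Consider any row vector a of A

• Channel output $= \langle a,x\rangle + \langle a,y\rangle$, where $\langle a,y\rangle \sim N(0, \|a\|_2^2/n)$

• $\mathbb{E}_i[\langle a,x\rangle^2] = \varepsilon\|a\|_2^2/n$, so the signal-to-noise ratio is ε

• Shannon-Hartley Theorem:

$I(i; \langle a,x\rangle+\langle a,y\rangle) = I(\langle a,x\rangle; \langle a,x\rangle+\langle a,y\rangle) \le \tfrac{1}{2}\log(1+\varepsilon) = O(\varepsilon)$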

Summary of Results

• General results

– $\tilde{\Theta}(k/\varepsilon^{p/2})$

• Sparse output

– $\tilde{\Theta}(k/\varepsilon^p)$

• Deterministic

– $\Theta(k\log(n/k)/\varepsilon)$ for p = 1