Chapter 3

advertisement

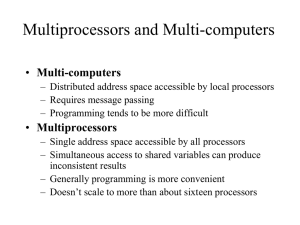

Chapter 3 Parallel Programming Models Abstraction • Machine Level – Looks at hardware, OS, buffers • Architectural models – Looks at interconnection network, memory organization, synchronous & asynchronous • Computational Model – Cost models, algorithm complexity, RAM vs. PRAM • Programming Model – Uses programming language description of process Control Flows • Process – Address spaces differ - Distributed • Thread – Shares address spaces – Shared Memory • Created statically (like MPI-1) or dynamically during run time (MPI-2 allows this as well as pthreads). Parallelization of a Program • Decomposition of the computations – Can be done at many levels (ex. Pipelining). – Divide into tasks and identify dependencies between tasks. – Can be done statically (at compile time) or dynamically (at run time) – Number of tasks places an upper bound on the parallelism that can be used – Granularity: the computation time of a task Assignment of Tasks • The number of processes or threads does not need to be the same as the number of processors • Load Balancing: each process/thread having the same amount of work (computation, memory access, communication) • Have a tasks that use the same memory execute on the same thread (good cache use) • Scheduling: assignment of tasks to threads/processes Assignment to Processors • 1-1: map a process/thread to a unique processor • many to 1: map several processes to a single processor. (Load balancing issues) • OS or programmer done Scheduling • Precedence constraints – Dependencies between tasks • Capacity constraints – A fixed number of processors • Want to meet constraints and finish in minimum time Levels of Parallelism • • • • Instruction Level Data Level Loop Level Functional level Instruction Level • Executing multiple instructions in parallel. May have problems with dependencies – Flow dependency – if next instruction needs a value computed by previous instruction – Anti-Dependency – if an instruction uses a value from register or memory when the next instruction stores a value into that place (cannot reverse the order of instructions – Output dependency – 2 instructions store into same location Data Level • Same process applied to different elements of a large data structure • If these are independent, the can distribute the data among the processors • One single control flow • SIMD Loop Level • If there are no dependencies between the iterations of a loop, then each iteration can be done independently, in parallel • Similar to data parallelism Functional Level • Look at the parts of a program and determine which parts can be done independently. • Use a dependency graph to find the dependencies/independencies • Static or Dynamic assignment of tasks to processors – Dynamic would use a task pool Explicit/Implicit Parallelism Expression • Language dependent • Some languages hide the parallelism in the language • For some languages, you must explicitly state the parallelism Parallelizing Compilers • Takes a program in a sequential language and generates parallel code – Must analyze the dependencies and not violate them – Should provide good load balancing (difficult) – Minimize communication • Functional Programming Languages – Express computations as evaluation of a function with no side effects – Allows for parallel evaluation More explicit/implicit • Explicit parallelism/implicit distribution – The language explicitly states the parallelism in the algorithm, but allows the system to assign the tasks to processors. • Explicit assignment to processors – do not have to worry about communications • Explicit Communication and Synchronization – MPI – additionally must explicitly state communications and synchronization points Parallel Programming Patterns • • • • • • • • • Process/Thread Creation Fork-Join Parbegin-Parend SPMD, SIMD Master-Slave (worker) Client-Server Pipelining Task Pools Producer-Consumer Process/Thread Creation • Static or Dynamic • Threads, traditionally dynamic • Processes, traditionally static, but dynamic has become recently available Fork-Join • An existing thread can create a number of child processes with a fork. • The child threads work in parallel. • Join waits for all the forked processes to terminate. • Spawn/exit is similar Parbegin-Parend • Also called cobegin-coend • Each statement (blocks/function calls) in the cobegin-coend block are to be executed in parallel. • Statements after coend are not executed until all the parallel statement are complete. SPMD – SIMD • Single Program, Multiple Data vs. Single Instruction, Multiple Data • Both use a number of threads/processes which apply the same program to different data • SIMD executes the statements synchronously on different data • SPMD executes the statements asynchronously Master-Slave • One thread/process that controls all the others • If dynamic thread/process creation, the master is the one that usually does it. • Master would “assign” the work to the workers and the workers would send the results to the master Client-Server • Multiple clients connected to a server that responds to requests • Server could be satisfying requests in parallel (multiple requests being done in parallel or if the request is involved, a parallel solution to the request) • The client would also do some work with response from server. • Very good model for heterogeneous systems Pipelining • Output of one thread is the input to another thread • A special type of functional decomposition • Another case where heterogeneous systems would be useful Task Pools • Keep a collection of tasks to be done and the data to do it upon • Thread/process can generate tasks to be added to the pool as well as obtaining a task when it is done with the current task Producer Consumer • Producer threads create data used as input by the consumer threads • Data is stored in a common buffer that is accessed by producers and consumers • Producer cannot add if buffer is full • Consumer cannot remove if buffer is empty Array Data Distributions • 1-D – Blockwise • Each process gets ceil(n/p) elements of A, except for the last process which gets n-(p-1)*ceil(n/p) elements • Alternatively, the first n%p processes get ceil(n/p) elements while the rest get floor(n/p) elements. – Cyclic • Process p gets data k*p+i (k=0..ceil(n/)) – Block cyclic • Distribute blocks of size b to processes in a cyclic manner 2-D Array distribution • Blockwise distribution rows or columns • Cyclic distribution of rows or columns • Blockwise-cyclic distribution of rows or columns Checkerboard • Take an array of size n x m • Overlay a grid of size g x f – g<=n – f<=m – More easily seen if n is a multiple of g and m is a multiple of f • Blockwise Checkerboard – Assign each n/g x m/f submatrix to a processor Cyclic Checkerboard • Take each item in a n/g x m/f submatrix and assign it in a cyclic manner. • Block-Cyclic checkerboard – Take each n/g x m/f submatrix and assign all the data in the submatrix to a processor in a cyclic fashion Information Exchange • Shared Variables – Used in shared memory – When thread T1 wants to share information with thread T2, then T1 writes the information into a variable that is shared with T2 – Must avoid 2 or more processes reading or writing to the same variable at the same time (race condition) – Leads to non-Deterministic behavior. Critical Sections • Sections of code where there may be concurrent accesses to shared variables • Must make these sections mutually exclusive – Only one process can be executing this section at any one time • Lock mechanism is used to keep sections mutually exclusive – Process checks to see if section is “open” – If it is, then “lock” it and execute (unlock when done) – If not, wait until unlocked Communication Operations • Single Transfer – Pi sends a message to Pj • Single Broadcast – one process sends the same data to all other processes • Single accumulation – Many values operated on to make a single value that is placed in root • Gather – Each process provides a block of data to a common single process • Scatter – root process sends a separate block of a large data structure to every other process More Communications • Multi-Broadcast – Every process sends data to every other process so every process has all the data that was spread out among the processes • Multi-Accumulate – accumulate, but every process gets the result • Total Exchange-Each process provides p-data blocks. The ith data block is sent to pi. Each processor receives the blocks and builds the structure with the data in i order. Applications • Parallel Matrix-Vector Product – Ab=c where A is n x m and b, c are m – Want A to be in contiguous memory • A single array, not an array of arrays – Have blocks of rows with allof b calculate a block of c • Used if A is stored row-wise – Have blocks of columns with a block of b compute columns that need to be summed. • Used if A is stored column-wise Processes and Threads • Process – a program in execution – Includes code, program data on stack or heap, values of registers, PC. – Assigned to processor or core for execution – If there are more processes than resources (processors or memory) for all, execute in a round-robin time-shared method – Context switch – changing from one process to another executing on processor. Fork • The Unix fork command – Creates a new process – Makes a copy of the program – Copy starts at statement after the fork – NOT shared memory model – Distributed memory model – Can take a while to execute Threads • Share a single address space • Best with physically shared memory • Easier to get started than a process – no copy of code space • Two types – Kernel threads – managed by the OS – User threads – managed by a thread library Thread Execution • If user threads are executed by a thread library/scheduler, (no OS support for threads) then all the threads are part of one process that is scheduled by the OS – Only one thread executed at a time even if there are multiple processors • If OS has thread management, then threads can be scheduled by OS and multiple threads can execute concurrently • Or, Thread scheduler can map user threads to kernel threads (several user threads may map to one kernel thread) Thread States • • • • • • Newly generated Executable Running Waiting Finished Threads transition from state to state based on events (start, interrupt, end, block, unblock, assign-to-processor) Synchronization • Locks – A process “locks” a shared variable at the beginning of a critical section • Lock allows process to proceed if shared variable is unlocked • Process blocked if variable is locked until variable is unlocked • Locking is an “atomic” process. Semaphore • Usually a binary type but can be integer • wait(s) – Waits until the value of s is 1 (or greater) – When it is, decreases s by 1 and continues • signal(s) – Increments s by 1 Barrier Synchronization • A way to have every process wait until every process is at a certain point • Assures the state of every process before certain code is executed Condition Synchronization • A thread is blocked until a given condition is established – If condition is not true, then put into blocked state – When condition true, moved from blocked to ready (not necessarily directly to a processor) – Since other processes may be executing, by the time this process gets to a processor, the condition may no longer be true • So, must check condition after condition satisfied Efficient Thread Programs • Proper number of threads – Consider degree of parallelism in application – Number of processors – Size of shared cache • Avoid synchronization as much as possible – Make critical section as small as possible • Watch for deadlock conditions Memory Access • Must consider writing values to shared memory that is held in local caches • False sharing – Consider 2 processes writing to different memory locations – SHOULD not be an issue since not shared by two cache memories – HOWEVER, if the memory locations are close to each other, they may be in the same cache line and actually have the different locations both be in the different caches