Modeling Pixel Process with Scale Invariant Local Patterns for

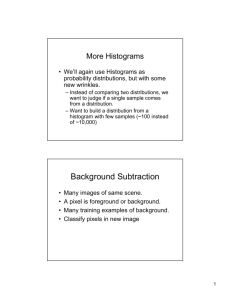

Modeling Pixel Process with Scale Invariant Local Patterns for Background Subtraction in Complex Scenes (CVPR’10)
Shengcai Liao, Guoying Zhao, Vili Kellokumpu, Matti Pietikainen, Stan Z. Li

Outline
• Introduction
• Scale Invariant Local Ternary Pattern (SILTP)
• Background modeling
• Multiscale Block-based SILTP
• Experimental results
• Conclusion

Introduction
• Moving object detection in video sequences is one of the main tasks in many computer vision applications.
• Its output serves as input to a higher-level process, such as object categorization, tracking, or action recognition.
• Background subtraction is a common approach for this task.

Introduction (cont.)
• The popular idea is to model the temporal samples of each pixel as a multimodal distribution, in either a parametric or a nonparametric way
  • Parametric: GMM; nonparametric: KDE
• GMM is the most popular technique. Each pixel is modeled independently using a mixture of Gaussians and updated by an online approximation.
  • It does not handle cast shadows and dynamic scenes well
• A number of existing works handle illumination effects such as moving shadows in special color spaces, but the learned parameters are not adaptable.

GMM Background Modeling
• Initial background model
  • The first N frames of the input sequence
  • K-means clustering
• The history of each pixel, $X_1, \ldots, X_N$, is modeled by three independent Gaussian distributions, where X is the color value, i.e. $X = (X^R, X^G, X^B)$.
• For computational reasons, we assume the red, green, and blue pixel values are independent.

GMM Background Modeling
• The probability of observing the pixel value $X_t$:
  $P(X_t) = \prod_{i \in \{R,G,B\}} \eta(X_t^i, \mu_t^i, \Sigma_t^i)$
• $\mu_t^i$ and $\Sigma_t^i$: the mean value and the covariance matrix of the i-th Gaussian at time t, where $i \in \{R, G, B\}$.
• η: the Gaussian probability density function
• Update of $\mu_t$ and $\sigma_t^2$:
  $\mu_t = (1 - \rho)\,\mu_{t-1} + \rho\,X_t$
  $\sigma_t^2 = (1 - \rho)\,\sigma_{t-1}^2 + \rho\,(X_t - \mu_t)^T (X_t - \mu_t)$
• ρ: the update rate; its value is between 0 and 1.

Introduction (cont.)
• Besides color-based background modeling, the LBP-based method models each pixel by a group of LBP histograms
  • Moving shadows cannot be handled well
  • Sensitive to noise
• In this paper, they extend LBP to a scale invariant local ternary pattern (SILTP) operator to handle illumination variations.
• They propose a Pattern KDE technique to effectively model the probability distribution for handling complex dynamic backgrounds
• In addition, they develop a multiscale fusion scheme to take spatial scale information into account

Scale Invariant Local Ternary Pattern
• LBP has proved to be a powerful local image descriptor.
  • Not robust to local image noise when neighboring pixels are similar
• Tan and Triggs proposed the LTP (Local Ternary Pattern) operator for face recognition.
  • It extends LBP by simply adding a small offset value to the comparison
  • It is not invariant under a scale transform of intensity values

Scale Invariant Local Ternary Pattern
• The intensity scale invariance of a local comparison operator is very important
• Illumination variations, either global or local, often cause sudden changes in the gray scale intensities of neighboring pixels, which are approximately a scale transform
• Given any pixel location $(X_c, Y_c)$, SILTP encodes it as
  $SILTP_N^{\tau}(X_c, Y_c) = \bigoplus_{k=0}^{N-1} s_\tau(I_c, I_k)$   (1)
  with $s_\tau(I_c, I_k) = 01$ if $I_k > (1+\tau) I_c$, $10$ if $I_k < (1-\tau) I_c$, and $00$ otherwise
• $I_c$ is the gray intensity value of the center pixel, $I_k$ are those of its N neighborhood pixels, $\bigoplus$ denotes the concatenation operator of binary strings, and τ is a scale factor

The advantage of SILTP operator
• It is computationally efficient
• By introducing a tolerance range like LTP, the SILTP operator is robust to local image noise within that range.
  • Especially in shadowed areas, where the region is darker and contains more noise, SILTP is tolerant while the local comparison result of LBP is affected more
• The scale invariance property makes SILTP robust to illumination changes
  • For example, when the illumination suddenly changes from darker to brighter

Comparison of LBP, LTP and SILTP
(Figure: comparison on a black block and a white block)

KDE of Local Patterns
• Given a gray scale video sequence, the pixel process at a pixel location $(X_0, Y_0)$ is the series of local pattern observations over time 1, 2, …, t, taken from the texture image sequence F.
• All background patterns within a pixel process, either unitary or dynamic, are distributed over just a few possible bins

Pattern KDE
• Traditional methods based on numerical values cannot be used directly for modeling local patterns as background
• They develop a pattern KDE technique with a particular local pattern kernel that is suitable for descriptors like LBP, LTP, and SILTP
• First they define the distance function $d(p, q)$ as the number of differing bits between two local patterns p and q
• Then they derive the local pattern kernel as $\kappa(p, q) = g(d(p, q))$, where g is a weighting function that can typically be a Gaussian

Pattern KDE (cont.)
• The probability density function can be estimated smoothly as $\hat{f}(q) = \sum_i c_i\, \kappa(p_i, q)$, where $c_i$ are weighting coefficients
• For example, the distribution of an LTP pixel process with unitary background is: 20 (00010100) / 432 hits, 21 (00010101) / 4 hits, 84 (01010100) / 6 hits, and 148 (10010100) / 58 hits.
• If no kernel technique is used, the patterns 21 and 84 might be regarded as foreground and the pattern 148 might be considered another mode
• With their pattern kernel, all of these patterns are considered part of the same distribution

Modeling background with local patterns
• Given an estimated density function $\hat{f}_{t-1}$ at time t−1 and a newly arriving background pattern $p_t$, the density function is updated as $\hat{f}_t(q) = (1 - \alpha)\,\hat{f}_{t-1}(q) + \alpha\, g(d(p_t, q))$ (α is a learning rate)
• To handle the multimodality of local patterns, they estimate K density functions for each pixel process via the PKDE method, with $\omega_{k,t}$, k = 1, 2, …, K being the corresponding weights, normalized to sum to 1
• Afterwards, the probability of a new pattern $p_t$ being background is estimated from these weighted density functions

Multiscale Block-based SILTP (MB-SILTP)
• Fuse multiscale spatial information to achieve better performance
• MB-SILTP encodes in a way similar to (1), where $I_c$ and $I_k$ are replaced with the mean values of the corresponding blocks, whose size ω × ω indicates the scale.
• To implement MB-SILTP based background subtraction efficiently, the original video frame is first downsampled by ω
• Afterwards, MB-SILTP can be calculated, and the proposed background model can be applied in the reduced space

Multiscale Block-based SILTP (MB-SILTP)
• Finally, the resulting probabilities are bilinearly upsampled to the original space to generate the foreground/background segmentation result
• In this way, the speed is much faster at a larger scale, whereas the precision is generally lower.
• The background probabilities at each scale can be fused in the original space
  • The geometric average of the probabilities at each scale is adopted as the fusion score

Experimental Results
• Nine datasets containing complex backgrounds, such as busy human flows, moving cast shadows, etc.
• The proposed approach was compared with existing state-of-the-art online background subtraction algorithms
  • Mixture of Gaussian (MoG)
  • ACMMM03
  • Blockwise LBP histogram based (LBP-B)
  • Pixelwise LBP histogram based (LBP-P)
  • PKDE based background subtraction with LTP ($PKDE_{ltp}$)
  • PKDE based background subtraction with SILTP ($PKDE_{siltp}$)
  • MB-SILTP with ω = 3 ($PKDE^{3}_{mb\text{-}siltp}$)
  • MB-SILTP with ω = 1, 2, 3 ($PKDE^{1,2,3}_{mb\text{-}siltp}$)

Experimental Results
• All tested algorithms were implemented in C++ and run on a standard PC with a 2.4 GHz CPU and 2 GB of memory
• For all algorithms, a standard OpenCV postprocessing step was used, which eliminates small pieces of fewer than 15 pixels.

Experimental Results
• Quantitative evaluation

Conclusion
• They proposed an improved local image descriptor called SILTP and demonstrated its power for background subtraction
• They also proposed a multiscale block-based SILTP operator to take spatial scale information into account.
• A Pattern Kernel Density Estimation technique was proposed, and based on it a multimodal background modeling framework was developed
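
Sketch: SILTP encoding and scale invariance
The SILTP slides describe a per-neighbor comparison against a tolerance range that scales with the center intensity. The following is a minimal Python sketch of that idea, not the authors' implementation. The function names, the value τ = 0.05, the LTP offset of 5, and the two-bit coding used for the LTP comparison are all assumptions made for illustration.

```python
def siltp_symbol(center, neighbor, tau):
    """Two-bit comparison of one neighbor against a range scaled by the center."""
    if neighbor > (1 + tau) * center:
        return "01"
    if neighbor < (1 - tau) * center:
        return "10"
    return "00"


def siltp_code(center, neighbors, tau=0.05):
    """Concatenate the two-bit symbols of all neighbors into one bit string."""
    return "".join(siltp_symbol(center, n, tau) for n in neighbors)


def ltp_code(center, neighbors, offset=5):
    """LTP-style comparison with a fixed absolute offset (same two-bit coding
    as above, chosen here only to make the two operators comparable)."""
    symbols = []
    for n in neighbors:
        if n > center + offset:
            symbols.append("01")
        elif n < center - offset:
            symbols.append("10")
        else:
            symbols.append("00")
    return "".join(symbols)


center, neighbors = 100, [90, 100, 104, 120]
doubled = [2 * n for n in neighbors]

# A scale transform (every intensity doubled) leaves the SILTP code unchanged,
# while the fixed-offset LTP code changes for the neighbor at 104 -> 208.
print(siltp_code(center, neighbors), siltp_code(2 * center, doubled))
print(ltp_code(center, neighbors), ltp_code(2 * center, doubled))
```

In this toy neighborhood the tolerance range grows with the center value, which is why the SILTP code survives the brightness change and the fixed-offset code does not.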
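
Sketch: pattern kernel density on the LTP example
The Pattern KDE slides give a bit-count distance, a kernel built from a weighting function g, and an example histogram (patterns 20, 21, 84, 148). The sketch below reproduces that example under stated assumptions: g is a Gaussian with σ = 1, the coefficients c_i are the relative hit frequencies, the score is left unnormalized, and α = 0.01. None of these values come from the slides, and the function names are hypothetical.

```python
import math


def hamming(p, q):
    """Number of differing bits between two integer-coded patterns."""
    return bin(p ^ q).count("1")


def gaussian_weight(d, sigma=1.0):
    """Weighting function g applied to a pattern distance (assumed Gaussian)."""
    return math.exp(-(d * d) / (2.0 * sigma * sigma))


def pkde_score(coeffs, q, sigma=1.0):
    """Kernel-smoothed score of pattern q: sum_i c_i * g(d(p_i, q))."""
    return sum(c * gaussian_weight(hamming(p, q), sigma) for p, c in coeffs.items())


def pkde_update(coeffs, p_new, alpha=0.01):
    """Online update f_t = (1 - alpha) * f_{t-1} + alpha * g(d(p_new, .)),
    expressed on the coefficients so that they still sum to 1."""
    updated = {p: (1.0 - alpha) * c for p, c in coeffs.items()}
    updated[p_new] = updated.get(p_new, 0.0) + alpha
    return updated


hits = {20: 432, 21: 4, 84: 6, 148: 58}  # example histogram from the slides
total = sum(hits.values())
coeffs = {p: n / total for p, n in hits.items()}  # assumption: c_i = relative frequency

# Pattern 235 (11101011) is the bitwise complement of 20 and stands in for foreground.
for q in (20, 21, 84, 148, 235):
    raw = hits.get(q, 0) / total
    print(q, format(q, "08b"), raw, round(pkde_score(coeffs, q), 4))

coeffs = pkde_update(coeffs, 20)  # a new background observation of pattern 20
```

Patterns 21, 84 and 148 each differ from 20 by a single bit, so the kernel lifts their scores well above their raw frequencies, while a pattern far from all four stays near zero.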
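
Sketch: geometric-average fusion across scales
The MB-SILTP slides state that the background probabilities at each scale are fused on the original space by their geometric average. A minimal Python sketch of that fusion step follows. It assumes the per-scale probability maps have already been upsampled to the same size and are stored as nested lists; the function name and the sample values are hypothetical.

```python
import math


def fuse_geometric(prob_maps):
    """Per-pixel geometric mean of equally sized probability maps."""
    n = len(prob_maps)
    rows, cols = len(prob_maps[0]), len(prob_maps[0][0])
    return [
        [math.prod(m[r][c] for m in prob_maps) ** (1.0 / n) for c in range(cols)]
        for r in range(rows)
    ]


# Two toy 2x2 background-probability maps, one per scale.
scale_a = [[0.9, 0.2], [0.8, 0.0]]
scale_b = [[0.4, 0.2], [0.5, 0.9]]

fused = fuse_geometric([scale_a, scale_b])
print(fused)
```

One property of the geometric average worth noting: a pixel that any single scale rates as certainly not background (probability 0) gets a fused score of 0, whatever the other scales report.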