Margin - University of Nottingham

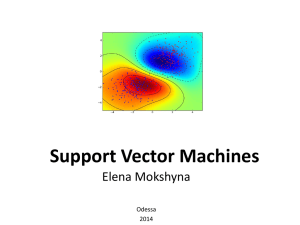

An Introduction to Support Vector Machines

Presenter: Celina Xia
University of Nottingham
G54DMT - Data Mining Techniques and Applications

Outline

- Maximizing the Margin
- Linear SVM and Linear Separable Case
- Primal Optimization Problem
- Dual Optimization Problem
- Non-Separable Case
- Non-Linear Case
- Kernel Functions
- Applications

Margin

Any of these separating lines would be fine, but which is best?

Margin

Margin: the width by which the boundary could be increased before hitting a data point.

(Figure: one decision boundary with a wide margin and one with a narrow margin.)

SVMs reckon…

The decision boundary with the maximal margin delivers the best generalization ability.

(Figure: the decision boundary, its margin, and the vector $w$, which gives the orientation of the decision boundary.)

SVM: Linear Separable

Objective: maximize the margin.

$$
\text{maximize } \frac{2}{\|w\|}
\quad\Longleftrightarrow\quad
\text{minimize } \frac{\|w\|^2}{2}
$$

The decision boundary is the hyperplane $w^T x + b = 0$, and the margin is bounded by the hyperplanes $w^T x + b = 1$ and $w^T x + b = -1$. The data points lying on these two hyperplanes are the support vectors.

SVM: Linear Separable

$$
\min_{w,b}\ \frac{1}{2} w^T w
\quad \text{subject to} \quad
y_i (w^T x_i + b) \ge 1, \quad i = 1, 2, \dots, l
\tag{P1}
$$

The Lagrangian trick

$$
\min_x\ x^2 \quad \text{s.t.} \quad x \ge b
$$

Moving the constraint into the objective function gives the Lagrangian:

$$
\min_x\ L_P = x^2 - \alpha (x - b)
$$

Optimality conditions:

$$
\frac{\partial L_P}{\partial x} = 2x - \alpha = 0, \qquad
\alpha \ge 0, \qquad
x \ge b, \qquad
\alpha (x - b) = 0
$$

Replace $x$ with $\alpha / 2$. Solving:

$$
\max_\alpha\ L_D = -\frac{\alpha^2}{4} + b\,\alpha
\quad \text{s.t.} \quad \alpha \ge 0
$$

SVM: Linear Separable

Lagrangian of (P1):

$$
L_P = \frac{1}{2} w^T w - \sum_{i=1}^{l} \alpha_i \left[ y_i (w^T x_i + b) - 1 \right]
$$

Optimality conditions:

$$
\frac{\partial L_P}{\partial w} = w - \sum_{i=1}^{l} \alpha_i y_i x_i = 0
\quad\Longrightarrow\quad
w = \sum_{i=1}^{l} \alpha_i y_i x_i
$$

$$
\frac{\partial L_P}{\partial b} = -\sum_{i=1}^{l} \alpha_i y_i = 0
\quad\Longrightarrow\quad
\sum_{i=1}^{l} \alpha_i y_i = 0
$$

Dual Optimization Problem

$$
\max_{\alpha}\ L_D = \sum_{i=1}^{l} \alpha_i - \frac{1}{2} \sum_{i=1}^{l} \sum_{j=1}^{l} \alpha_i \alpha_j y_i y_j \, (x_i \cdot x_j)
\tag{P2}
$$

$$
\text{subject to} \quad \sum_{i=1}^{l} \alpha_i y_i = 0, \qquad \alpha_i \ge 0, \quad i = 1, 2, \dots, l
$$

Linearly Non-separable Case (Soft Margin Optimal Hyperplane)

$$
\min_{w,b,\xi}\ \frac{1}{2} w^T w + C \sum_{i=1}^{l} \xi_i
\tag{P3}
$$

$$
\text{subject to} \quad y_i (w^T x_i + b) \ge 1 - \xi_i, \qquad \xi_i \ge 0, \quad i = 1, 2, \dots, l
$$

Lagrangian

$$
L_P = \frac{1}{2} w^T w + C \sum_{i=1}^{l} \xi_i
- \sum_{i=1}^{l} \alpha_i \left[ y_i (w^T x_i + b) - 1 + \xi_i \right]
- \sum_{i=1}^{l} \mu_i \xi_i
$$

Optimality conditions:

$$
\frac{\partial L_P}{\partial w} = w - \sum_{i=1}^{l} \alpha_i y_i x_i = 0
\quad\Longrightarrow\quad
w = \sum_{i=1}^{l} \alpha_i y_i x_i
$$

$$
\frac{\partial L_P}{\partial b} = -\sum_{i=1}^{l} \alpha_i y_i = 0
\quad\Longrightarrow\quad
\sum_{i=1}^{l} \alpha_i y_i = 0
$$

$$
\frac{\partial L_P}{\partial \xi_i} = C - \alpha_i - \mu_i = 0, \quad i = 1, \dots, l
$$

Dual Optimization Problem

$$
\max_{\alpha}\ L_D = \sum_{i=1}^{l} \alpha_i - \frac{1}{2} \sum_{i=1}^{l} \sum_{j=1}^{l} \alpha_i \alpha_j y_i y_j \, (x_i \cdot x_j)
$$

$$
\text{subject to} \quad \sum_{i=1}^{l} \alpha_i y_i = 0, \qquad 0 \le \alpha_i \le C, \quad i = 1, 2, \dots, l
$$

Problems with linear SVM

What if the decision function is not linear?
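Before turning to the non-linear case, it may help to see the linear soft-margin dual (maximize $L_D$ subject to $\sum_i \alpha_i y_i = 0$ and $0 \le \alpha_i \le C$) solved on a tiny example. The sketch below is not from the slides: it uses pairwise coordinate ascent in the style of SMO, and the names `dot` and `train_dual_svm`, the four toy points, and the choice `C = 10` are all assumptions made for illustration.

```python
def dot(u, v):
    """Plain dot product; plays the role of x_i . x_j in the dual."""
    return sum(a * b for a, b in zip(u, v))


def train_dual_svm(X, y, C=10.0, kernel=dot, sweeps=100, eps=1e-12):
    """Maximize the soft-margin dual one pair (alpha_i, alpha_j) at a time.

    Updating pairs keeps sum_i alpha_i * y_i = 0 satisfied, and clipping
    keeps 0 <= alpha_i <= C. Hypothetical helper for tiny data sets only.
    """
    n = len(X)
    K = [[kernel(X[i], X[j]) for j in range(n)] for i in range(n)]
    alpha = [0.0] * n

    def f(i):
        # Decision value at x_i without the bias b (b cancels in e_i - e_j).
        return sum(alpha[k] * y[k] * K[k][i] for k in range(n))

    for _ in range(sweeps):
        for i in range(n):
            for j in range(i + 1, n):
                eta = K[i][i] + K[j][j] - 2.0 * K[i][j]
                if eta <= eps:
                    continue  # no curvature along this pair; skip it
                # Box bounds for alpha_j that keep both multipliers in [0, C].
                if y[i] != y[j]:
                    lo = max(0.0, alpha[j] - alpha[i])
                    hi = min(C, C + alpha[j] - alpha[i])
                else:
                    lo = max(0.0, alpha[i] + alpha[j] - C)
                    hi = min(C, alpha[i] + alpha[j])
                e_i, e_j = f(i) - y[i], f(j) - y[j]
                new_j = min(hi, max(lo, alpha[j] + y[j] * (e_i - e_j) / eta))
                alpha[i] += y[i] * y[j] * (alpha[j] - new_j)
                alpha[j] = new_j

    # w = sum_i alpha_i y_i x_i (only meaningful for the linear kernel).
    dim = len(X[0])
    w = [sum(alpha[i] * y[i] * X[i][d] for i in range(n)) for d in range(dim)]
    # b from support vectors strictly inside the box, where y_i (f(x_i) + b) = 1.
    free = [i for i in range(n) if eps < alpha[i] < C - eps]
    b = sum(y[i] - f(i) for i in free) / len(free) if free else 0.0
    return alpha, w, b


# Toy data (assumed): two points per class, linearly separable.
X = [(2.0, 2.0), (3.0, 3.0), (0.0, 0.0), (-1.0, 0.0)]
y = [1, 1, -1, -1]
alpha, w, b = train_dual_svm(X, y)
margins = [y[i] * (dot(w, X[i]) + b) for i in range(len(X))]
print("alpha =", alpha)
print("w =", w, "b =", b)
print("y_i (w . x_i + b) =", margins)
```

On this toy set the closest pair of opposite-class points, (2, 2) and (0, 0), should come out as the only support vectors, giving w = (0.5, 0.5) and b = -1, so every constraint $y_i (w^T x_i + b) \ge 1$ holds. Because the solver touches the inputs only through `kernel`, the same routine could in principle be reused with a non-linear kernel function.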
Dual Optimization Problem

In the dual of the linear SVM, the inputs appear only through dot products:

$$
\max_{\alpha}\ L_D = \sum_{i=1}^{l} \alpha_i - \frac{1}{2} \sum_{i=1}^{l} \sum_{j=1}^{l} \alpha_i \alpha_j y_i y_j \, (x_i^T x_j)
\tag{P2}
$$

$$
\text{subject to} \quad \sum_{i=1}^{l} \alpha_i y_i = 0, \qquad \alpha_i \ge 0, \quad i = 1, 2, \dots, l
$$

Dual Optimization Problem

Mapping the inputs through a function $\Phi$ changes only the dot product:

$$
\max_{\alpha}\ L_D = \sum_{i=1}^{l} \alpha_i - \frac{1}{2} \sum_{i=1}^{l} \sum_{j=1}^{l} \alpha_i \alpha_j y_i y_j \, \left( \Phi(x_i)^T \Phi(x_j) \right)
$$

$$
\text{subject to} \quad \sum_{i=1}^{l} \alpha_i y_i = 0, \qquad \alpha_i \ge 0, \quad i = 1, 2, \dots, l
$$

Kernel Functions

A kernel function $K$ makes it possible to work with the mapped input data without exact knowledge of the mapping $\Phi$:

$$
K(x, y) = \Phi(x)^T \Phi(y)
$$

The Gaussian radial basis function (RBF) is one of the most widely used kernel functions:

$$
K(x, y) = e^{-\frac{\|x - y\|^2}{2\sigma^2}}
$$

Dual Optimization Problem

Replace the dot product of the inputs with the kernel function:

$$
\max_{\alpha}\ L_D = \sum_{i=1}^{l} \alpha_i - \frac{1}{2} \sum_{i=1}^{l} \sum_{j=1}^{l} \alpha_i \alpha_j y_i y_j \, K(x_i, x_j)
$$

$$
\text{subject to} \quad \sum_{i=1}^{l} \alpha_i y_i = 0, \qquad \alpha_i \ge 0, \quad i = 1, 2, \dots, l
$$

Some kernel functions

- Polynomial type: $K(u, v) = (u^T v)^d, \quad d = 1, 2, \dots$
- Polynomial type: $K(u, v) = \left[ (u^T v) + 1 \right]^d, \quad d = 1, 2, \dots$
- Gaussian radial basis function (RBF): $K(u, v) = \exp\left( -\frac{\|u - v\|^2}{2\sigma^2} \right)$
- Multi-Layer Perceptron: $K(u, v) = \tanh(\kappa\, u^T v - \delta)$

Two-Spiral Pattern

Given 194 training data points on the X-Y plane: 97 of class "red circle" and another 97 of class "blue cross".

Question: how to distinguish between these two spirals?

What's the challenge?

- A proper learning of these 194 training data points. A piece of cake for a variety of methods. After all, it is just a limited number of 194 points.
- Correct assignment of an arbitrary data point on the X-Y plane to the right "spiral stripe". Very challenging, since there are an infinite number of points on the X-Y plane, which makes this the touchstone of the power of a classification algorithm.

This is exactly what we want!
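The kernel identity $K(x, y) = \Phi(x)^T \Phi(y)$ used in the kernelized dual can be checked numerically for a case where $\Phi$ is known. The sketch below assumes two-dimensional inputs and the polynomial kernel with $d = 2$, for which one valid feature map is $\Phi(u) = (u_1^2, \sqrt{2}\,u_1 u_2, u_2^2)$. The function names, the sample points, and the default $\sigma = 1$ are illustrative choices, not part of the slides.

```python
import math


def dot(u, v):
    """Plain dot product u^T v."""
    return sum(a * b for a, b in zip(u, v))


def poly_kernel(u, v, d=2):
    """Homogeneous polynomial kernel K(u, v) = (u^T v)^d."""
    return dot(u, v) ** d


def rbf_kernel(u, v, sigma=1.0):
    """Gaussian RBF kernel K(u, v) = exp(-||u - v||^2 / (2 sigma^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def phi_quadratic(u):
    """Explicit feature map matching poly_kernel with d = 2 on 2-D inputs."""
    u1, u2 = u
    return (u1 * u1, math.sqrt(2.0) * u1 * u2, u2 * u2)


# Sample points (assumed for illustration).
u, v = (1.0, 2.0), (3.0, -1.0)

# Kernel evaluated directly in input space ...
k_implicit = poly_kernel(u, v, d=2)
# ... versus the dot product after mapping both points explicitly.
k_explicit = dot(phi_quadratic(u), phi_quadratic(v))

print("K(u, v) =", k_implicit)
print("Phi(u) . Phi(v) =", k_explicit)
print("RBF K(u, u) =", rbf_kernel(u, u))
print("RBF K(u, v) =", rbf_kernel(u, v))
```

Both polynomial values should agree up to floating-point rounding, while the kernel version never builds the three-dimensional feature vectors. For the RBF kernel no finite-dimensional feature map exists, so evaluating $K$ directly is the only practical route.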
References

- http://www.kernel-machines.org/
- http://www.support-vector.net/
- N. Cristianini and J. Shawe-Taylor: An Introduction to Support Vector Machines (and other kernel-based learning methods). Cambridge University Press, 2000. ISBN: 0 521 78019 5
- Papers by Vapnik
- C.J.C. Burges: A tutorial on Support Vector Machines. Data Mining and Knowledge Discovery 2:121-167, 1998.