Devavrat Shah

advertisement

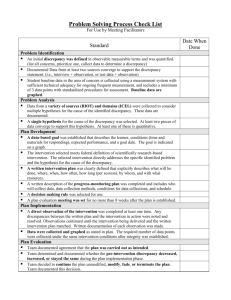

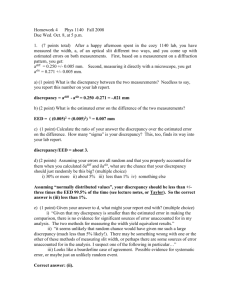

RANDOM GEOMETRIC GRAPHS Discussion of Markov Lecture of Francois Baccelli Devavrat Shah Laboratory for Information & Decision System Massachusetts Institute of Technology Random geometric graph G(n,r) Place n node uniformly at random in unit square Connect two nodes that are within distance r r Unit length Unit length Random geometric graph Quantity of interest: discrepancy How “far” is G(n,r) from “expected” node placement At what r does G(n,r) become connected? near 1/ n ? 1/ n Random geometric graph Quantity of interest: discrepancy How “far” is G(n,r) from “expected” node placement At what r does G(n,r) become connected? near 1/ n ? Connectivity threshold (Penrose ‘97, Gupta-Kumar ‘00) Let r 2 log n n cn n , then P[G(n, r) connected] 0 if c n 1 if c n “Connectivity discrepancy” Additional log n n Random geometric graph Quantity of interest: discrepancy How “far” is G(n,r) from “expected” node placement Minimum of total edge-lengths over all perfect matchings L1 grid-matching threshold: (Ajtai-Komlos-Tusnady ‘80) With high probability, the minimal total length of matching is n log n Similar to (and implies) connectivity threshold Additional discrepancy log n Random geometric graph Quantity of interest: discrepancy How “far” is G(n,r) from “expected” node placement Minimum of maximum edge-length over all perfect matchings L grid-matching threshold: (Leighton-Shor ‘86) With high probability, minimal max length over all max’l length matchings is Ln ~ log 3 4 n n ~ rc log 1 4 n Further, additional discrepancy of log 1 4 n Why worry about discrepancy ? For r scaling as L grid-matching threshold (say L) G(n,r) contains “expected” grid as it’s sub-graph w.h.p Implications: (L) “edge conductance” of G(n,r) 2L + 1/nfor r = (L) scales as (1/n) (ignoring log n term) L L Hence 1/n Capacity scales as (1/n) (Gupta-Kumar ’00) Hierarchical schemes for info. th. scaling (Ozgur et al ‘06, Niesen et al ‘08) Monotone graph properties have sharp threshold (Goel-Rai-Krish ‘06) Mixing time of RW scales (n) (Boyd-Ghosh-Prabhakar-Shah ‘06) Information diffuses in time (n) (MoskAoyama-Shah ‘08) Why worry about discrepancy ? For r scaling as L grid-matching threshold (say L) G(n,r) contains “expected” grid as it’s sub-graph w.h.p Implication: n RED, n BLUE points thrown at random in unit square Match a RED point to a BLUE point that is UP-RIGHT Number of unmatched points scale as (n L) ~ (n) Online bin-packing analysis (Talagrand-Rhee ‘88) Mean Glivanko-Cantelli convergence (Shor-Yukich ‘91) Bin-packing with queues (Shah-Tsitsiklis ‘08) The ultimate matching conjecture Talagrand ‘01 proposed the following conjecture Unifies L1 and L threshold results (and more) Throw n RED, n BLUE points at random in unit square (X1i,Y1i) : position of ith RED pt (X2i,Y2i) : position of ith BLUE pt For any 1/1+ 1/2=2, and some constant C, there exists a matching such that for j =1, 2: i exp C n log n j X i -Y j αj (i) 2 Point process view Poisson process and stochastic geometry Useful, for example understanding Structure of radial spanning trees (cf. Baccelli-Bordenave ‘07) Behavior of wireless protocols (cf. Baccelli-Blaszczyszyn ‘10) Hope: resolution of The ultimate matching conjecture (or a variant of it)