Linear programming, quadratic programming, sequential quadratic programming

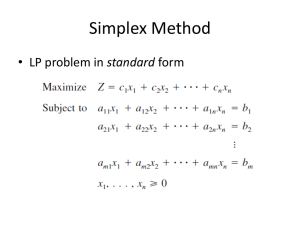

CS B553: ALGORITHMS FOR OPTIMIZATION AND LEARNING
Linear programming, quadratic programming, sequential quadratic programming

KEY IDEAS
- Linear programming
  - Simplex method
  - Mixed-integer linear programming
- Quadratic programming
- Applications

RADIOSURGERY
[Figures: CyberKnife (Accuray); treatment region with normal tissue, tumor, and radiologically sensitive tissue labeled]

OPTIMIZATION FORMULATION
- Dose cells $(x_i, y_j, z_k)$ in a voxel grid
- Cell class: normal, tumor, or sensitive
- Beam "images": $B_1,\dots,B_n$ describing dose absorbed at each cell with maximum power
- Optimization variables: beam powers $x_1,\dots,x_n$
- Constraints:
  - Normal cells: $D_{ijk} \le D_{normal}$
  - Sensitive cells: $D_{ijk} \le D_{sensitive}$
  - Tumor cells: $D_{min} \le D_{ijk} \le D_{max}$
  - $0 \le x_b \le 1$
- Dose calculation: $D_{ijk} = \sum_{b=1}^{n} x_b B^b_{ijk}$, where $B^b_{ijk}$ is the entry of beam image $B_b$ at cell $(i,j,k)$
- Objective: minimize total dose $\sum_{ijk} D_{ijk}$

LINEAR PROGRAM
- General form: $\min_x f^T x + g$ s.t. $Ax \le b$, $Cx = d$
- [Figures: a convex polytope; a slice through the polytope]

THREE CASES
- Infeasible
- Feasible, bounded
- Feasible, unbounded
[Figure: objective direction $f$ and optimum $x^*$ for each case]

SIMPLEX ALGORITHM (DANTZIG)
- Start from a vertex of the feasible polytope
- "Walk" along polytope edges while decreasing objective on each step
- Stop when the edge is unbounded or no improvement can be made
- Implementation details:
  - How to pick an edge (exiting and entering)
  - Solving for vertices in large systems
  - Degeneracy: no progress made due to objective vector being perpendicular to edges

COMPUTATIONAL COMPLEXITY
- Worst case exponential
- Average case polynomial (perturbed analysis)
- In practice, usually tractable
- Commercial software (e.g., CPLEX) can handle millions of variables/constraints!

SOFT CONSTRAINTS
[Figure: penalty as a function of dose for normal, sensitive, and tumor cells]
- Auxiliary variable $z_{ijk}$: penalty at each cell
- $z_{ijk} \ge 0$
- $z_{ijk} \ge c\,(D_{ijk} - D_{normal})$
- Introduce term in objective to minimize $z_{ijk}$

MINIMIZING AN ABSOLUTE VALUE
- Absolute value objective: $\min_x |x_1|$ s.t. $Ax \le b$, $Cx = d$
- Equivalent form with constraints: $\min_{v,x} v$ s.t. $Ax \le b$, $Cx = d$, $x_1 \le v$, $-x_1 \le v$
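The absolute-value reformulation above can be checked on a tiny problem. The sketch below is not the simplex method: it is a brute-force stand-in that relies on the same fact simplex uses, namely that a feasible, bounded LP whose polytope has a vertex attains its optimum at one. The helper name `solve_lp_by_vertex_enumeration` and the toy constraints are made up for illustration, and equality constraints $Cx = d$ are left out to keep it short.

```python
import itertools
import numpy as np


def solve_lp_by_vertex_enumeration(c, A, b, tol=1e-9):
    """Minimize c^T x subject to A x <= b by testing every vertex.

    Hypothetical helper for illustration only. Assumes the LP is feasible,
    bounded, and has at least one vertex. Cost grows combinatorially.
    """
    c, A, b = (np.asarray(v, dtype=float) for v in (c, A, b))
    m, n = A.shape
    best_x, best_val = None, np.inf
    for rows in itertools.combinations(range(m), n):
        idx = list(rows)
        if abs(np.linalg.det(A[idx])) < tol:
            continue  # these n constraints do not meet in a single point
        x = np.linalg.solve(A[idx], b[idx])  # candidate vertex
        if np.all(A @ x <= b + tol) and c @ x < best_val:
            best_x, best_val = x, float(c @ x)
    return best_x, best_val


# Toy problem (made up): minimize |x1| subject to x1 + x2 >= 2 and x2 <= 1.
# Reformulated with an extra variable v, unknowns ordered (x1, x2, v):
#   minimize v  s.t.  x1 - v <= 0,  -x1 - v <= 0,  plus the original rows.
c = [0.0, 0.0, 1.0]
A = [
    [-1.0, -1.0, 0.0],  # -(x1 + x2) <= -2
    [0.0, 1.0, 0.0],    # x2 <= 1
    [1.0, 0.0, -1.0],   # x1 <= v
    [-1.0, 0.0, -1.0],  # -x1 <= v
]
b = [-2.0, 1.0, 0.0, 0.0]

x_opt, v_opt = solve_lp_by_vertex_enumeration(c, A, b)
print(x_opt, v_opt)  # optimum at (x1, x2, v) = (1, 1, 1), so min |x1| = 1
```

For anything beyond a handful of variables, an LP solver would replace the enumeration; the reformulated `c`, `A`, `b` stay the same.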
MINIMIZING AN L-1 OR L-INF NORM
- $L_1$ norm: $\min_x \|Fx - g\|_1$ s.t. $Ax \le b$, $Cx = d$
- $L_\infty$ norm: $\min_x \|Fx - g\|_\infty$ s.t. $Ax \le b$, $Cx = d$
- [Figures: feasible polytope projected through $F$, with target $g$ and optimum $Fx^*$ for each norm]

MINIMIZING AN L-1 OR L-INF NORM
- $L_1$ norm: $\min_x \|Fx - g\|_1$ s.t. $Ax \le b$, $Cx = d$
- Equivalent form: $\min_{e,x} 1^T e$ s.t. $Fx + Ie \ge g$, $Fx - Ie \le g$, $Ax \le b$, $Cx = d$
- [Figure: feasible polytope projected through $F$, with $g$, $Fx^*$, and error $e$]

MINIMIZING AN L-2 NORM
- $L_2$ norm: $\min_x \|Fx - g\|_2$ s.t. $Ax \le b$, $Cx = d$
- Not a linear program!
- [Figure: feasible polytope projected through $F$, with $g$ and $Fx^*$]

QUADRATIC PROGRAMMING
- General form: $\min_x \tfrac{1}{2} x^T H x + g^T x + h$ s.t. $Ax \le b$, $Cx = d$
- Objective: quadratic form
- Constraints: linear

QUADRATIC PROGRAMS
- $H$ positive definite [Figure: feasible polytope and the unconstrained minimum $-H^{-1}g$]
  - Optimum can lie off of a vertex!
- $H$ negative definite [Figure: feasible polytope]
- $H$ positive semidefinite [Figure: feasible polytope]

SIMPLEX ALGORITHM FOR QPS
- Start from a vertex of the feasible polytope
- "Walk" along polytope facets while decreasing objective on each step
- Stop when the facet is unbounded or no improvement can be made
- Facet: defined by $m \le n$ constraints
  - $m = n$: vertex
  - $m = n-1$: line
  - $m = 1$: hyperplane
  - $m = 0$: entire space

ACTIVE SET METHOD
- Active inequalities $S = (i_1,\dots,i_m)$
- Constraints $a_{i_1}^T x = b_{i_1}, \dots, a_{i_m}^T x = b_{i_m}$, written as $A_S x - b_S = 0$
- Objective $\tfrac{1}{2} x^T H x + g^T x + f$
- Lagrange multipliers $\lambda = (\lambda_1,\dots,\lambda_m)$
- Optimality conditions: $Hx + g + A_S^T \lambda = 0$, $A_S x - b_S = 0$
- Solve linear system: $\begin{bmatrix} H & A_S^T \\ A_S & 0 \end{bmatrix} \begin{bmatrix} x \\ \lambda \end{bmatrix} = \begin{bmatrix} -g \\ b_S \end{bmatrix}$
- If $x$ violates a different constraint not in $S$, add it
- If $\lambda_k < 0$, then drop $i_k$ from $S$

PROPERTIES OF ACTIVE SET METHODS FOR QPS
- Inherits properties of simplex algorithm
- Worst case: exponential number of facets
- Positive definite $H$: polynomial time in typical case
- Indefinite or negative definite $H$: can be exponential time!
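The active set rules can be sketched with NumPy as follows. This is a simplified reading of the add/drop rules, not a production solver: it jumps straight to the solution of each working-set system with no step-length control, so it has no convergence guarantee and can fail if the working-set system becomes singular. The names `solve_kkt` and `active_set_qp` and the toy problem are assumptions made for illustration; only inequality constraints $Ax \le b$ are handled.

```python
import numpy as np


def solve_kkt(H, g, A_S, b_S):
    """Solve [[H, A_S^T], [A_S, 0]] [x; lam] = [-g; b_S]."""
    n, m = H.shape[0], A_S.shape[0]
    K = np.zeros((n + m, n + m))
    K[:n, :n] = H
    K[:n, n:] = A_S.T
    K[n:, :n] = A_S
    sol = np.linalg.solve(K, np.concatenate([-g, b_S]))
    return sol[:n], sol[n:]


def active_set_qp(H, g, A, b, S=(), max_iter=50, tol=1e-9):
    """Minimize 1/2 x^T H x + g^T x s.t. A x <= b (simplified add/drop loop)."""
    S = list(S)
    for _ in range(max_iter):
        idx = np.array(S, dtype=int)
        x, lam = solve_kkt(H, g, A[idx], b[idx])
        slack = A @ x - b  # positive entries are violated constraints
        violated = [i for i in range(len(b)) if i not in S and slack[i] > tol]
        if violated:
            # x violates a constraint outside S: add the worst one
            S.append(max(violated, key=lambda i: slack[i]))
        elif lam.size and lam.min() < -tol:
            # a negative multiplier means that constraint should be dropped
            S.pop(int(np.argmin(lam)))
        else:
            return x, lam, S
    raise RuntimeError("working set did not settle")


# Toy problem (made up): H = I, so the unconstrained minimum is -H^{-1} g = (2, 2).
H = np.eye(2)
g = np.array([-2.0, -2.0])
A = np.array([[1.0, 1.0],   # x1 + x2 <= 2
              [1.0, 0.0]])  # x1 <= 3
b = np.array([2.0, 3.0])

# Start at the vertex where both constraints are active, x = (3, -1).
x_opt, lam_opt, S_opt = active_set_qp(H, g, A, b, S=[0, 1])
print(x_opt, lam_opt, S_opt)  # x = (1, 1), lam = (1,), S = [0]
```

Starting from the vertex $(3, -1)$, the multiplier of $x_1 \le 3$ comes out negative, so that constraint is dropped and the method lands at $(1, 1)$ on the edge $x_1 + x_2 = 2$: an optimum that is not a vertex.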
Note: QPs with indefinite or negative definite $H$ include NP-complete problems.

APPLYING QPS TO NONLINEAR PROGRAMS
- Recall: we could convert an equality constrained optimization to an unconstrained one, and use Newton's method
- Each Newton step:
  - Fits a quadratic form to the objective
  - Fits hyperplanes to each equality
  - Solves for a search direction $(\Delta x, \Delta\lambda)$ using the linear equality-constrained optimization
- How about inequalities?

SEQUENTIAL QUADRATIC PROGRAMMING
- Idea: fit half-space constraints to each inequality
- $g(x) \le 0$ becomes $g(x_t) + \nabla g(x_t)^T (x - x_t) \le 0$
- [Figure: region $g(x) \le 0$ and its half-space approximation at $x_t$]

SEQUENTIAL QUADRATIC PROGRAMMING
- Given nonlinear minimization: $\min_x f(x)$ s.t. $g_i(x) \le 0$ for $i = 1,\dots,m$ and $h_j(x) = 0$ for $j = 1,\dots,p$
- At each step $x_t$, solve the QP
  $\min_{\Delta x} \tfrac{1}{2} \Delta x^T \nabla_x^2 L(x_t,\lambda_t,\mu_t)\, \Delta x + \nabla_x L(x_t,\lambda_t,\mu_t)^T \Delta x$
  s.t. $g_i(x_t) + \nabla g_i(x_t)^T \Delta x \le 0$ for $i = 1,\dots,m$
  and $h_j(x_t) + \nabla h_j(x_t)^T \Delta x = 0$ for $j = 1,\dots,p$
  to derive the search direction $\Delta x$
- Directions $\Delta\lambda$ and $\Delta\mu$ are taken from QP multipliers

ILLUSTRATION
[Figure sequence: at each iterate $x_t$, $x_{t+1}$, $x_{t+2}$, the constraint $g(x) \le 0$ is replaced by its linearization at that iterate, e.g. $g(x_{t+1}) + \nabla g(x_{t+1})^T (x - x_{t+1}) \le 0$]

SQP PROPERTIES
- Equivalent to Newton's method without constraints
- Equivalent to Lagrange root finding with only equality constraints
- Subtle implementation details:
  - Does the endpoint need to be strictly feasible, or just up to a tolerance?
  - How to perform a line search in the presence of inequalities?
- Implementation available in Matlab. FORTRAN packages too =(
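For the special case with only equality constraints, where SQP reduces to root finding on the Lagrangian conditions, each QP subproblem is a single linear solve. The sketch below covers only that case, with one equality constraint and full Newton steps (no line search, no inequalities). The function name `sqp_equality`, the toy problem, and the starting point are assumptions chosen for illustration.

```python
import numpy as np

# Toy problem (made up): minimize f(x) = x1 + x2  s.t.  h(x) = x1^2 + x2^2 - 2 = 0.
# The minimizer is x = (-1, -1) with multiplier mu = 0.5.


def grad_f(x):
    return np.array([1.0, 1.0])


def hess_f(x):
    return np.zeros((2, 2))


def h(x):
    return x[0] ** 2 + x[1] ** 2 - 2.0


def grad_h(x):
    return 2.0 * x


def hess_h(x):
    return 2.0 * np.eye(2)


def sqp_equality(x, mu, max_iter=50, tol=1e-10):
    """SQP with one equality constraint; each QP is solved via its KKT system."""
    for _ in range(max_iter):
        grad_L = grad_f(x) + mu * grad_h(x)  # gradient of the Lagrangian in x
        if np.linalg.norm(grad_L) < tol and abs(h(x)) < tol:
            break
        W = hess_f(x) + mu * hess_h(x)       # Hessian of the Lagrangian in x
        a = grad_h(x)
        # QP: min 1/2 dx^T W dx + grad_L^T dx  s.t.  h(x) + a^T dx = 0.
        # Its KKT conditions give one linear system in (dx, dmu).
        K = np.block([[W, a[:, None]], [a[None, :], np.zeros((1, 1))]])
        rhs = -np.concatenate([grad_L, [h(x)]])
        step = np.linalg.solve(K, rhs)
        x = x + step[:-1]    # search direction dx, full step
        mu = mu + step[-1]   # multiplier update taken from the QP multiplier
    return x, mu


x_star, mu_star = sqp_equality(np.array([-1.5, -0.5]), mu=1.0)
print(x_star, mu_star)  # converges to x = (-1, -1), mu = 0.5
```

With inequalities present, the linear solve would be replaced by a QP solver on the linearized constraints, and a line search would usually be added; both are left out here.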