Scheduling Applications


IE 514 Production

Scheduling

Introduction

Spring 2002

IE 514

1

Contact Information

Siggi Olafsson

3018 Black Engineering

294-8908

olafsson@iastate.edu

http://www.public.iastate.edu/~olafsson

OH: MW 10:30-12:00


Administration (Syllabus)

Text

Prerequisites

Assignments

Homework

Project

Final Exam

35%

40%

35%

Computing


What is Scheduling About?

Applied operations research

Models

Algorithms

Solution using computers

Implement algorithms

Draw on common databases

Integration with other systems


Application Areas

Procurement and production

Transportation and distribution

Information processing and

communications


Manufacturing Scheduling

Short product life-cycles

Quick-response manufacturing

Manufacture-to-order

More complex operations must be scheduled in a shorter amount of time with less room for error!


Scope of Course

Levels of planning and scheduling

Long-range planning (several years),

middle-range planning (1-2 years),

short-range planning (few months),

scheduling (few weeks), and

reactive scheduling (now)

These functions are now often integrated


Scheduling Systems

Enterprise Resource Planning (ERP)

Common for larger businesses

Material Requirements Planning (MRP)

Very common for manufacturing companies

Advanced Planning and Scheduling (APS)

Most recent trend

Considered “advanced feature” of ERP


Scheduling Problem

Allocate scarce resources to tasks

Combinatorial optimization problem

Maximize profit

Subject to constraints

Mathematical techniques and heuristics


Our Approach

Scheduling Problem

Problem Formulation

Model

Solve with Computer Algorithms

Conclusions


Scheduling Models

Project scheduling

Job shop scheduling

Flexible assembly systems

Lot sizing and scheduling

Interval scheduling, reservation,

timetabling

Workforce scheduling


General Solution

Techniques

Mathematical programming

Linear, non-linear, and integer programming

Enumerative methods

Branch-and-bound

Beam search

Local search

Simulated annealing/genetic algorithms/tabu

search/neural networks.


Scheduling System Design

[Diagram: components of a scheduling system]

Databases: order master file, shop floor data collection, database management

Schedule generation: automatic schedule generator, schedule editor, performance evaluation

User interfaces: graphical interface to the user

LEKIN

On disk with book

Generic job shop scheduling system

User friendly windows environment

C++ object oriented design

Can add own routines


Advanced Topics

Uncertainty, robustness, and reactive

scheduling

Multiple objectives

Internet scheduling


Topic 1

Setting up the Scheduling

Problem


Modeling

Three components to any model:

Decision variables

This is what we can change to affect the system,

that is, the variables we can decide upon

Objective function

E.g., cost to be minimized, quality measure to be maximized

Constraints

Which values the decision variables can be set to


Decision “Variables”

Three basic types of solutions:

A sequence: a permutation of the jobs

A schedule: allocation of the jobs in a more

complicated setting of the environment

A scheduling policy: determines the next

job given the current state of the system


Model Characteristics

Multiple factors:

Number of machines and resources,

configuration and layout,

level of automation, etc.

Our terminology:

Resource = machine (m)

Entity requiring the resource = job (n)


Notation

Static data:

Processing time ($p_{ij}$)

Release date ($r_j$)

Due date ($d_j$)

Weight ($w_j$)

Dynamic data:

Completion time ($C_{ij}$)

Machine Configuration

Standard machine configurations:

Single machine models

Parallel machine models

Flow shop models

Job shop models

Real world always more complicated.


Constraints

Precedence constraints

Routing constraints

Material-handling constraints

Storage/waiting constraints

Machine eligibility

Tooling/resource constraints

Personnel scheduling constraints


Other Characteristics

Sequence dependent setup

Preemptions

preemptive resume

preemptive repeat

Make-to-stock versus make-to-order


Objectives and

Performance Measures

Throughput (TP) and makespan (Cmax)

Due date related objectives

Work-in-process (WIP), lead time

(response time), finished inventory

Others


Throughput and Makespan

Throughput

Defined by bottleneck machines

Makespan

$C_{\max} = \max\{C_1, C_2, \ldots, C_n\}$

$C_i = \max\{C_{i1}, C_{i2}, \ldots, C_{im}\}, \quad i = 1, \ldots, n$

Minimizing makespan tends to maximize

throughput and balance load


Due Date Related

Objectives

Lateness: $L_j = C_j - d_j$

Minimize maximum lateness ($L_{\max}$)

Tardiness: $T_j = \max\{C_j - d_j, 0\}$

Minimize the weighted tardiness: $\sum_{j=1}^{n} w_j T_j$
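The due-date measures above can be checked numerically. The following is a minimal Python sketch, not part of the original slides: the function name `evaluate` and the dictionary layout are illustrative assumptions, and the data are the four-job single-machine instance used in the examples later in this deck. It assumes no release dates and no idle time.

```python
def evaluate(sequence, p, d, w):
    """Return (Lmax, total weighted tardiness, number of late jobs)
    for a single-machine sequence processed without idle time."""
    t = 0
    lateness = []
    weighted_tardiness = 0
    late_jobs = 0
    for j in sequence:
        t += p[j]                 # completion time C_j
        L = t - d[j]              # lateness L_j = C_j - d_j
        T = max(L, 0)             # tardiness T_j = max(C_j - d_j, 0)
        lateness.append(L)
        weighted_tardiness += w[j] * T
        if L > 0:                 # U_j = 1 when the job is late
            late_jobs += 1
    return max(lateness), weighted_tardiness, late_jobs


# Four-job instance from the later slides (processing time, due date, weight).
p = {1: 10, 2: 10, 3: 13, 4: 4}
d = {1: 4, 2: 2, 3: 1, 4: 12}
w = {1: 14, 2: 12, 3: 1, 4: 12}

l_max, total_wt, n_late = evaluate((2, 1, 4, 3), p, d, w)
print(l_max, total_wt, n_late)
```

For the sequence (2,1,4,3) the weighted tardiness agrees with the value 500 computed by hand on the tabu search initialization slide.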

Due Date Penalties

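[Figure: four penalty functions plotted against the completion time $C_j$ relative to the due date $d_j$: lateness $L_j$, tardiness $T_j$, the late-or-not indicator $U_j$ (which takes the value 1 for a late job), and a typical penalty in practice.]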

WIP and Lead Time

Work-in-Process (WIP) inventory cost

Minimizing WIP also minimizes average

lead time (throughput time)

Minimizing lead time tends to minimize

the average number of jobs in system

Equivalently, we can minimize the sum of the completion times, or its weighted version:

$\sum_{j=1}^{n} C_j$

$\sum_{j=1}^{n} w_j C_j$

Other Costs

Setup cost

Personnel cost

Robustness

Finished goods inventory cost


Topic 2

Solving Scheduling

Problems


Classic Scheduling Theory

Look at a specific machine environment

with a specific objective

Analyze to prove an optimal policy or to

show that no simple optimal policy exists

Thousands of problems have been studied

in detail with mathematical proofs!


Example: single machine

Let's say we have

A single machine (1), where

the total weighted completion time should be minimized ($\sum w_j C_j$)

We denote this problem as

$1 \,||\, \sum w_j C_j$

Optimal Solution

Theorem: Weighted Shortest Processing Time first, called the WSPT rule, is optimal for $1 \,||\, \sum w_j C_j$

Note: The SPT rule starts with the job that has the shortest processing time, moves on to the job with the second shortest processing time, etc. WSPT is the weighted version: it orders the jobs by decreasing ratio $w_j / p_j$.
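As an illustration of the theorem, the sketch below sorts jobs by decreasing $w_j / p_j$ and compares the result with a brute-force search over all sequences. It is a hedged example, not taken from the slides: the function names are assumptions, and the processing times and weights are borrowed from the four-job instance used later in the deck.

```python
from itertools import permutations


def total_weighted_completion(sequence, p, w):
    """Sum of w_j * C_j for a single-machine sequence without idle time."""
    t = 0
    total = 0
    for j in sequence:
        t += p[j]
        total += w[j] * t
    return total


def wspt(p, w):
    """WSPT rule: order jobs by decreasing ratio w_j / p_j."""
    return sorted(p, key=lambda j: w[j] / p[j], reverse=True)


p = {1: 10, 2: 10, 3: 13, 4: 4}
w = {1: 14, 2: 12, 3: 1, 4: 12}

wspt_sequence = wspt(p, w)
wspt_value = total_weighted_completion(wspt_sequence, p, w)

# Brute force is only viable for tiny instances (n! sequences).
best_value = min(total_weighted_completion(s, p, w) for s in permutations(p))
print(wspt_sequence, wspt_value, best_value)
```

On this instance the WSPT sequence attains the brute-force minimum, as the theorem predicts.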

Proof (by contradiction)

Suppose it is not true and schedule S is optimal

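Then there are two adjacent jobs, say job j followed by job k, such that

$\frac{w_j}{p_j} < \frac{w_k}{p_k}$

Do a pairwise interchange to get schedule S'

[Figure: under S, job j starts at time t and is followed by job k, which completes at $t + p_j + p_k$; under S', job k starts at time t and is followed by job j, which completes at $t + p_j + p_k$.]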

Proof (continued)

The weighted completion time of the two jobs under S is

$(t + p_j) w_j + (t + p_j + p_k) w_k$

The weighted completion time of the two jobs under S' is

$(t + p_k) w_k + (t + p_j + p_k) w_j$

Now:

$(t + p_j) w_j + (t + p_j + p_k) w_k = (t + p_j) w_j + p_j w_k + (t + p_k) w_k$

$> (t + p_j) w_j + p_k w_j + (t + p_k) w_k$

$= (t + p_k) w_k + (t + p_k + p_j) w_j$

Contradicting that S is optimal.


Complexity Theory

Classic scheduling theory draws heavily on

complexity theory

The complexity of an algorithm is its

running time in terms of the input

parameters (e.g., number of jobs and

number of machines)

Big-Oh notation, e.g., $O(n^2 m)$

Polynomial versus NP-Hard

[Figure: running time versus problem size n for $O(\log n)$, $O(n)$, $O(n^2)$, and $O(2^n)$.]
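To give a feel for the growth rates in the figure, the short sketch below prints a polynomial count, an exponential count, and the number of job sequences that a full enumeration on a single machine would have to consider. The helper name `count_sequences` and the chosen problem sizes are illustrative assumptions.

```python
import math


def count_sequences(n):
    """Number of distinct sequences (permutations) of n jobs."""
    return math.factorial(n)


for n in (5, 10, 20, 30):
    # polynomial n^2, exponential 2^n, and n! sequences
    print(n, n ** 2, 2 ** n, count_sequences(n))
```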

Scheduling in Practice

Practical scheduling problems cannot be

solved this easily!

Need:

Heuristic algorithms

Knowledge-based systems

Integration with other enterprise functions

However, classic scheduling results are

useful as a building block


General Purpose

Scheduling Procedures

Some scheduling problems are easy

Simple priority rules

Complexity: polynomial time

However, most scheduling problems are

hard

Complexity: NP-hard, strongly NP-hard

Finding an optimal solution is infeasible in practice, so heuristic methods are needed

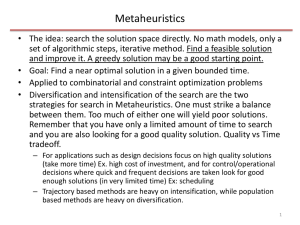

Types of Heuristics

Construction methods:

Simple Dispatching Rules

Composite Dispatching Rules

Branch and Bound

Beam Search

Improvement methods:

Simulated Annealing

Tabu Search

Genetic Algorithms

Topic 3

Dispatching Rules


Dispatching Rules

Prioritize all waiting jobs

job attributes

machine attributes

current time

Whenever a machine becomes free: select

the job with the highest priority

Static or dynamic

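The dispatching idea on this slide can be sketched as a loop that, each time the machine becomes free, picks the waiting job with the highest priority. The code below is a minimal single-machine illustration under stated assumptions: all jobs are waiting at time 0 (no release dates), ties go to the lowest job number, and the function names and data layout are hypothetical. The data are the four-job instance used later in the deck.

```python
def dispatch(jobs, priority):
    """Sequence jobs on one machine: whenever the machine is free,
    select the waiting job with the highest priority value."""
    t = 0
    waiting = set(jobs)
    sequence = []
    while waiting:
        # sorted() makes tie-breaking deterministic (lowest job id wins)
        j = max(sorted(waiting), key=lambda k: priority(jobs[k], t))
        sequence.append(j)
        waiting.remove(j)
        t += jobs[j]["p"]
    return sequence


# Priority functions: a larger value means more urgent.
def edd(job, t):      # static: earliest due date first
    return -job["d"]


def wspt(job, t):     # static: largest w/p first
    return job["w"] / job["p"]


def ms(job, t):       # dynamic: minimum slack first, depends on current time t
    return -max(job["d"] - job["p"] - t, 0)


jobs = {
    1: {"p": 10, "d": 4, "w": 14},
    2: {"p": 10, "d": 2, "w": 12},
    3: {"p": 13, "d": 1, "w": 1},
    4: {"p": 4, "d": 12, "w": 12},
}
print(dispatch(jobs, edd), dispatch(jobs, wspt), dispatch(jobs, ms))
```

Static rules such as EDD ignore the argument `t`; dynamic rules such as MS are re-evaluated at every decision point.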

Release/Due Date Related

Earliest release date first (ERD) rule

variance in throughput times

Earliest due date first (EDD) rule

maximum lateness

Minimum slack first (MS) rule

$\max(d_j - p_j - t, 0)$, where $d_j$ is the deadline, $p_j$ the processing time, and t the current time

maximum lateness

Processing Time Related

Longest Processing Time first (LPT) rule

balance load on parallel machines

makespan

Shortest Processing Time first (SPT) rule

sum of completion times

WIP

Weighted Shortest Processing Time first

(WSPT) rule


Processing Time Related

Critical Path (CP) rule

precedence constraints

makespan

Largest Number of Successors (LNS) rule

precedence constraints

makespan


Other Dispatching Rules

Service in Random Order (SIRO) rule

Shortest Setup Time first (SST) rule

makespan and throughput

Least Flexible Job first (LFJ) rule

makespan and throughput

Shortest Queue at the Next Operation

(SQNO) rule

machine idleness


Discussion

Very simple to implement

Optimal for special cases

Only focus on one objective

Limited use in practice

Combine several dispatching rules

Composite Dispatching Rules


Example

Single Machine with

Weighted Total Tardiness


Setup

Problem: $1 \,||\, \sum w_j T_j$

No efficient algorithm (NP-Hard)

Branch and bound can only solve very

small problems (<30 jobs)

Are there any special cases we can solve?


Case 1: Tight Deadlines

Assume $d_j = 0$

Then

$T_j = \max\{0, C_j - d_j\} = \max\{0, C_j\} = C_j$

$\sum w_j T_j = \sum w_j C_j$

We know that WSPT is optimal for this

problem!


Conclusion

The WSPT is optimal in the extreme case

and should be a good heuristic whenever

due dates are tight

Now let's look at the opposite

Case 2: “Easy” Deadlines

Theorem: If the deadlines are sufficiently

spread out then the MS rule

$j_{select} = \arg\min_j \max\{d_j - p_j - t, 0\}$

is optimal (proof a bit harder)

Conclusion: The MS rule should be a good

heuristic whenever deadlines are widely

spread out


Composite Rule

Two good heuristics

Weighted Shortest Processing Time (WSPT)

optimal with due dates zero

Minimum Slack (MS)

Optimal when due dates are “spread out”

Any real problem is somewhere in between

Combine the characteristics of these rules

into one composite dispatching rule


Apparent Tardiness Cost

(ATC) Dispatching Rule

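New ranking index

$I_j(t) = \frac{w_j}{p_j} \exp\left( -\frac{\max(d_j - p_j - t, 0)}{K \bar{p}} \right)$

where K is the scaling constant and $\bar{p}$ is the average processing time of the remaining jobs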

When machine becomes free:

Compute index for all remaining jobs

Select job with highest value

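A minimal sketch of the ATC rule on a single machine follows. It is an illustration rather than the course's implementation: the function name `atc_sequence`, the optional `prefix` argument, and the choice K = 2 (the rule of thumb quoted for a single machine) are assumptions, and $\bar{p}$ is taken as the average processing time of the jobs still unscheduled. The data are the four-job instance used later in the deck.

```python
import math


def atc_sequence(p, d, w, K=2.0, prefix=()):
    """Extend `prefix` to a full sequence using the ATC index
    I_j(t) = (w_j / p_j) * exp(-max(d_j - p_j - t, 0) / (K * p_bar))."""
    sequence = list(prefix)
    t = sum(p[j] for j in sequence)          # machine is free at time t
    remaining = [j for j in p if j not in sequence]
    while remaining:
        p_bar = sum(p[j] for j in remaining) / len(remaining)

        def index(j):
            slack = max(d[j] - p[j] - t, 0)
            return (w[j] / p[j]) * math.exp(-slack / (K * p_bar))

        j = max(remaining, key=index)        # job with the highest index
        sequence.append(j)
        remaining.remove(j)
        t += p[j]
    return sequence


p = {1: 10, 2: 10, 3: 13, 4: 4}
d = {1: 4, 2: 2, 3: 1, 4: 12}
w = {1: 14, 2: 12, 3: 1, 4: 12}

print(atc_sequence(p, d, w))
print(atc_sequence(p, d, w, prefix=(1,)))
```

The `prefix` argument lets the same routine complete a partial sequence, which is how the ATC rule is used to evaluate nodes in the beam search example later in the slides.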

Special Cases (Check)

If K is very large:

ATC reduces to WSPT

If K is very small and no overdue jobs:

ATC reduces to MS

If K is very small and overdue jobs:

ATC reduces to WSPT applied to overdue

jobs


Choosing K

Value of K determined empirically

Related to the due date tightness factor

$\tau = 1 - \frac{\bar{d}}{C_{\max}}$

and the due date range factor

$R = \frac{d_{\max} - d_{\min}}{C_{\max}}$
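Both factors are easy to compute for a given instance. The sketch below is illustrative only: the function name is an assumption, and it assumes a single machine without idle time so that $C_{\max}$ equals the sum of the processing times. The data are the four-job instance used later in the deck.

```python
def due_date_factors(p, d):
    """Return (tightness factor tau, range factor R) for one machine."""
    c_max = sum(p.values())                  # makespan without idle time
    d_bar = sum(d.values()) / len(d)         # average due date
    tau = 1 - d_bar / c_max
    R = (max(d.values()) - min(d.values())) / c_max
    return tau, R


p = {1: 10, 2: 10, 3: 13, 4: 4}
d = {1: 4, 2: 2, 3: 1, 4: 12}
tau, R = due_date_factors(p, d)
print(round(tau, 3), round(R, 3))
```

A tightness factor close to 1, as here, indicates very tight due dates.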

Choosing K

Usually $1.5 \le K \le 4.5$

Rules of thumb:

Fix K=2 for single machine or flow shop.

Fix K=3 for dynamic job shops.

Adjusted to reduce weighted tardiness

cost in extremely slack or congested job

shops

Statistical analysis/empirical experience


Topic 4

Branch-and-Bound

& Beam Search


Branch and Bound

Enumerative method

Guarantees finding the best schedule

Basic idea:

Look at a set of schedules

Develop a bound on the performance

Discard (fathom) if bound worse than best

schedule found before


Example

Single Machine with

Maximum Lateness

Objective


Classic Results

The EDD rule is optimal for $1 \,||\, L_{\max}$

If jobs have different release dates, which we denote

$1 \mid r_j \mid L_{\max}$

then the problem is NP-Hard

What makes it so much more difficult?


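$1 \mid r_j \mid L_{\max}$

Jobs    1    2    3
p_j     4    6    5
r_j     0    3    5
d_j     8   14   10

[Figure: Gantt chart of the EDD schedule on a time axis from 0 to 15: job 1 on [0, 4], job 2 on [4, 10], job 3 on [10, 15].]

$L_{\max} = \max\{L_1, L_2, L_3\} = \max\{C_1 - d_1, C_2 - d_2, C_3 - d_3\}$

$= \max\{4 - 8, 10 - 14, 15 - 10\} = \max\{-4, -4, 5\} = 5$

Can we improve?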

Delay Schedule

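Add a delay

[Figure: Gantt chart of the delayed schedule on a time axis from 0 to 15: job 1 on [0, 4], idle until $r_3 = 5$, job 3 on [5, 10], job 2 on [10, 16].]

$L_{\max} = \max\{L_1, L_2, L_3\} = \max\{C_1 - d_1, C_2 - d_2, C_3 - d_3\}$

$= \max\{4 - 8, 16 - 14, 10 - 10\} = \max\{-4, 2, 0\} = 2$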

What makes this problem hard is that

the optimal schedule is not necessarily

a non-delay schedule

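The comparison between the non-delay EDD schedule and the delayed schedule can be reproduced with a few lines of Python. This is a sketch under assumptions: the helper names are hypothetical, and the processing times (4, 6, 5) are inferred from the completion times 4, 10, 15 quoted for the EDD schedule rather than read directly from the slide.

```python
def run_sequence(sequence, p, r):
    """Start each job as early as possible in the given order and
    return the completion time of every job."""
    t = 0
    completion = {}
    for j in sequence:
        t = max(t, r[j]) + p[j]   # wait for the release date if needed
        completion[j] = t
    return completion


def max_lateness(completion, d):
    return max(completion[j] - d[j] for j in completion)


p = {1: 4, 2: 6, 3: 5}    # inferred from the completion times on the slide
r = {1: 0, 2: 3, 3: 5}
d = {1: 8, 2: 14, 3: 10}

non_delay = run_sequence((1, 2, 3), p, r)   # job 2 starts as soon as job 1 ends
delayed = run_sequence((1, 3, 2), p, r)     # machine idles from 4 to 5 for job 3
print(max_lateness(non_delay, d), max_lateness(delayed, d))
```

Keeping the machine idle for one time unit lowers the maximum lateness from 5 to 2, which is why a non-delay schedule need not be optimal.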

Final Classic Result

The preemptive EDD rule is optimal for

the preemptive (prmp) version of the

problem

$1 \mid r_j, prmp \mid L_{\max}$

Note that in the previous example, the preemptive EDD

rule gives us the optimal schedule


Branch and Bound

The problem

$1 \mid r_j \mid L_{\max}$

cannot be solved using a simple dispatching rule

so we will try to solve it using branch and bound

To develop a branch and bound procedure:

Determine how to branch

Determine how to bound


Data

Jobs    1    2    3    4
p_j     4    2    6    5
r_j     0    1    3    5
d_j     8   12   11   10

Branching

(•,•,•,•)

(1,•,•,•)   (2,•,•,•)   (3,•,•,•)   (4,•,•,•)

Discard (3,•,•,•) and (4,•,•,•) immediately because $r_3 = 3$ and $r_4 = 5$: job 2 can be completed by time 3, before either of these jobs is released

Need to develop lower bounds on the remaining nodes and do further branching.

Bounding (in general)

Typical way to develop bounds is to relax the

original problem to an easily solvable problem

Three cases:

If there is no solution to the relaxed problem there is

no solution to the original problem

If the optimal solution to the relaxed problem is

feasible for the original problem then it is also

optimal for the original problem

If the optimal solution to the relaxed problem is not

feasible for the original problem it provides a bound

on its performance


Relaxing the Problem

The problem

$1 \mid r_j, prmp \mid L_{\max}$

is a relaxation of the problem $1 \mid r_j \mid L_{\max}$

Not allowing preemption is a constraint in the

original problem but not the relaxed problem

We know how to solve the relaxed problem

(preemptive EDD rule)


Bounding

Preemptive EDD rule optimal for the

preemptive version of the problem

Thus, the solution obtained is a lower bound on the maximum lateness

If preemptive EDD results in a nonpreemptive schedule, all nodes with higher lower bounds can be discarded.

Lower Bounds

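Start with (1,•,•,•):

Job with EDD is Job 4 but $r_4 = 5$

Second earliest due date is for Job 3

$L_{\max} \ge 5$

[Figure: Gantt chart of the preemptive EDD schedule on a time axis from 0 to 20, with one row per job: Job 1 on [0, 4], Job 3 on [4, 5], Job 4 on [5, 10], Job 3 again on [10, 15], Job 2 on [15, 17].]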

Branching

(•,•,•,•)

(1,•,•,•): $L_{\max} \ge 5$

(1,2,•,•): $L_{\max} \ge 6$

(1,3,•,•): $L_{\max} \ge 5$, attained by the schedule (1,3,4,2)

(2,•,•,•): $L_{\max} \ge 7$

(3,•,•,•) and (4,•,•,•): discarded
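The lower bounds in the tree can be reproduced by fixing the jobs already sequenced and then applying preemptive EDD to the rest. The sketch below is one way to do this, offered as an illustration: the function name `lower_bound` and the event-stepping loop are assumptions, and the data are the four-job instance from the Data slide.

```python
def lower_bound(prefix, p, r, d):
    """Lower bound on Lmax over all schedules that start with `prefix`:
    run the prefix non-preemptively, then the other jobs by preemptive EDD."""
    t = 0
    worst = float("-inf")
    for j in prefix:
        t = max(t, r[j]) + p[j]
        worst = max(worst, t - d[j])
    remaining = {j: p[j] for j in p if j not in prefix}   # work left per job
    while remaining:
        available = [j for j in remaining if r[j] <= t]
        if not available:                  # idle until the next release
            t = min(r[j] for j in remaining)
            continue
        j = min(available, key=lambda k: d[k])            # earliest due date
        # run job j until it finishes or the next release allows a preemption
        future = [r[k] - t for k in remaining if r[k] > t]
        run = min([remaining[j]] + future)
        t += run
        remaining[j] -= run
        if remaining[j] == 0:
            worst = max(worst, t - d[j])
            del remaining[j]
    return worst


p = {1: 4, 2: 2, 3: 6, 4: 5}
r = {1: 0, 2: 1, 3: 3, 4: 5}
d = {1: 8, 2: 12, 3: 11, 4: 10}

for node in [(1,), (2,), (1, 2), (1, 3)]:
    print(node, lower_bound(node, p, r, d))
```

For node (1,3,•,•) the preemptive EDD schedule happens to be nonpreemptive, so its bound of 5 is attained by (1,3,4,2) and the nodes with bounds 6 and 7 can be discarded.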

Beam Search

Branch and Bound:

Considers every node

Guarantees optimum

Usually too slow

Beam search

Considers only most promising nodes

(beam width)

Does not guarantee convergence

Much faster


Single Machine Example

(Total Weighted Tardiness)

Jobs    1    2    3    4
p_j    10   10   13    4
d_j     4    2    1   12
w_j    14   12    1   12

Branching

(Beam width = 2)

(•,•,•,•)

Each first-level node is evaluated by completing the sequence with the ATC rule:

(1,•,•,•) → (1,4,2,3): 408

(2,•,•,•) → (2,4,1,3): 436

(3,•,•,•) → (3,4,1,2): 814

(4,•,•,•) → (4,1,2,3): 440

Beam Search

(•,•,•,•)

Level 1: (1,•,•,•), (2,•,•,•), (3,•,•,•), (4,•,•,•); the beam keeps (1,•,•,•) and (2,•,•,•)

Level 2: (1,2,•,•), (1,3,•,•), (1,4,•,•), (2,1,•,•), (2,3,•,•), (2,4,•,•); the beam keeps (1,4,•,•) and (2,4,•,•)

Level 3: (1,4,2,3), (1,4,3,2), (2,4,1,3), (2,4,3,1)
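The beam search above can be sketched in a few lines. This is a hedged illustration, not the course's code: nodes are partial sequences, each node is evaluated by completing it with the ATC rule (with the assumed value K = 2 and $\bar{p}$ taken over the unscheduled jobs), and only the `width` best nodes survive at each level. All function names are assumptions.

```python
import math

p = {1: 10, 2: 10, 3: 13, 4: 4}
d = {1: 4, 2: 2, 3: 1, 4: 12}
w = {1: 14, 2: 12, 3: 1, 4: 12}


def cost(sequence):
    """Total weighted tardiness of a complete sequence."""
    t = total = 0
    for j in sequence:
        t += p[j]
        total += w[j] * max(t - d[j], 0)
    return total


def atc_complete(prefix, K=2.0):
    """Complete a partial sequence with the ATC dispatching rule."""
    sequence = list(prefix)
    t = sum(p[j] for j in sequence)
    remaining = [j for j in p if j not in sequence]
    while remaining:
        p_bar = sum(p[j] for j in remaining) / len(remaining)
        j = max(remaining, key=lambda k: (w[k] / p[k]) *
                math.exp(-max(d[k] - p[k] - t, 0) / (K * p_bar)))
        sequence.append(j)
        remaining.remove(j)
        t += p[j]
    return tuple(sequence)


def beam_search(width=2):
    beam = [()]                                   # start from the empty sequence
    for _ in range(len(p)):
        children = [node + (j,) for node in beam for j in p if j not in node]
        children.sort(key=lambda node: cost(atc_complete(node)))
        beam = children[:width]                   # keep the most promising nodes
    return beam[0], cost(beam[0])


best_sequence, best_cost = beam_search(width=2)
print(best_sequence, best_cost)
```

With a beam width of 2 the search keeps (1,•,•,•) and (2,•,•,•) at the first level and ends at (1,4,2,3), in line with the tree on the slides.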

Discussion

Implementation tradeoff:

Careful evaluation of nodes (accuracy)

Crude evaluation of nodes (speed)

Two-stage procedure

Filtering

crude evaluation

filter width > beam width

Careful evaluation of remaining nodes


Topic 5

Random Search


Construction versus

Improvement Heuristics

Construction Heuristics

Start without a schedule

Add one job at a time

Dispatching rules and beam search

Improvement Heuristics

Start with a schedule

Try to find a better ‘similar’ schedule

Can be combined


Local Search

0. Start with a schedule $s_0$ and set k = 0.

1. Select a candidate schedule $s \in N(s_k)$ from the neighborhood of $s_k$.

2. If the acceptance criterion is met, let $s_{k+1} = s$. Otherwise let $s_{k+1} = s_k$.

3. Let k = k+1 and go back to Step 1.

Defining a Local Search

Procedure

Determine how to represent a schedule.

Define a neighborhood structure.

Determine a candidate selection

procedure.

Determine an acceptance/rejection

criterion.


Neighborhood Structure

Single machine

A pairwise interchange of adjacent jobs

(n-1 schedules in a neighborhood)

Inserting an arbitrary job in an arbitrary position

(n(n-1) possible moves in a neighborhood)

Allow more than one interchange

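The two single-machine neighborhoods can be generated as follows. This is a small sketch with assumed function names; sequences are represented as tuples of job numbers.

```python
def adjacent_interchange_neighbors(sequence):
    """All schedules obtained by swapping two adjacent jobs (n-1 of them)."""
    neighbors = []
    for i in range(len(sequence) - 1):
        s = list(sequence)
        s[i], s[i + 1] = s[i + 1], s[i]
        neighbors.append(tuple(s))
    return neighbors


def insertion_neighbors(sequence):
    """All distinct schedules obtained by removing one job and
    reinserting it at a different position."""
    result = set()
    for i in range(len(sequence)):
        rest = sequence[:i] + sequence[i + 1:]
        for k in range(len(sequence)):
            if k != i:                      # k == i would rebuild the original
                result.add(rest[:k] + (sequence[i],) + rest[k:])
    return result


print(adjacent_interchange_neighbors((2, 1, 4, 3)))
print(len(insertion_neighbors((1, 2, 3, 4))))
```

There are n(n-1) insertion moves, but moving a job one position forward gives the same schedule as moving its neighbor one position back, so the number of distinct neighboring schedules is smaller.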

Neighborhood Structure

Job shops with makespan objective

Neighborhood based on critical paths

Critical path: a set of operations that starts at t=0 and ends at t=$C_{\max}$

[Figure: Gantt chart with rows for Machine 1 through Machine 4.]

Neighborhood Structure

The critical path(s) define the

neighborhood

Interchange jobs on a critical path

Too few better schedules in the

neighborhood

Too simple to be useful


One-Step Look-Back

Interchange

[Figure: three Gantt charts for Machine h and Machine i: the original schedule with the jobs on the critical path marked, the schedule after interchanging jobs on the critical path, and the schedule after a one-step look-back interchange.]

Neighborhood Search

Find a candidate schedule from the

neighborhood:

Random

Most promising

(most improvement in objective)

Acceptance/Rejection

Major difference between most methods

Always accept a better schedule?

Sometimes accept an inferior schedule?

Two popular methods:

Simulated annealing

Tabu search

Same except the acceptance criterion!


Topic 6

Simulated Annealing


Simulated Annealing (SA)

Notation

$S_0$ is the best schedule found so far

$S_k$ is the current schedule

$G(S_k)$ is the performance of a schedule

Note that $G(S_k) \ge G(S_0)$

$G(S_0)$ is the aspiration criterion

Acceptance Criterion

Let Sc be the candidate schedule

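If $G(S_c) < G(S_k)$ accept $S_c$ and let $S_{k+1} = S_c$

If $G(S_c) \ge G(S_k)$ move to $S_c$ with probability

$P(S_k, S_c) = \exp\left( \frac{G(S_k) - G(S_c)}{\beta_k} \right)$

where the numerator $G(S_k) - G(S_c)$ is always $\le 0$ and the temperature $\beta_k > 0$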

Cooling Schedule

The temperature should satisfy

$\beta_1 \ge \beta_2 \ge \beta_3 \ge \ldots > 0$

Normally we let

$\beta_k \to 0$

but we sometimes stop when some

predetermined final temperature is

reached

If temperature is decreased slowly

convergence is guaranteed


SA Algorithm

Step 1:

Set k = 1 and select the initial temperature $\beta_1$.

Select an initial schedule $S_1$ and set $S_0 = S_1$.

Step 2:

Select a candidate schedule $S_c$ from $N(S_k)$.

If $G(S_0) < G(S_c) < G(S_k)$, set $S_{k+1} = S_c$ and go to Step 3.

If $G(S_c) < G(S_0)$, set $S_0 = S_{k+1} = S_c$ and go to Step 3.

If $G(S_c) \ge G(S_k)$, generate $U_k \sim$ Uniform(0,1);

If $U_k \le P(S_k, S_c)$, set $S_{k+1} = S_c$; otherwise set $S_{k+1} = S_k$; go to Step 3.

Step 3:

Select $\beta_{k+1} \le \beta_k$.

Let k = k+1. If k = N STOP; otherwise go to Step 2.
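The steps above can be sketched for the single-machine total weighted tardiness problem as follows. This is an illustrative implementation under assumptions that are not in the slides: the neighborhood is a random adjacent pairwise interchange, the cooling schedule is geometric ($\beta_{k+1} = 0.95 \beta_k$), and the initial temperature, iteration count, and random seed are arbitrary choices. The data are the four-job instance used in the examples.

```python
import math
import random

p = {1: 10, 2: 10, 3: 13, 4: 4}
d = {1: 4, 2: 2, 3: 1, 4: 12}
w = {1: 14, 2: 12, 3: 1, 4: 12}


def cost(sequence):
    """G(S): total weighted tardiness of a sequence."""
    t = total = 0
    for j in sequence:
        t += p[j]
        total += w[j] * max(t - d[j], 0)
    return total


def simulated_annealing(initial, beta=50.0, cooling=0.95, iterations=200, seed=0):
    rng = random.Random(seed)
    current = best = tuple(initial)              # S_k and S_0
    for _ in range(iterations):
        # candidate S_c: swap a random pair of adjacent jobs
        i = rng.randrange(len(current) - 1)
        candidate = list(current)
        candidate[i], candidate[i + 1] = candidate[i + 1], candidate[i]
        candidate = tuple(candidate)
        delta = cost(current) - cost(candidate)  # positive means improvement
        # always accept improvements; accept worse moves with prob exp(delta/beta)
        if delta > 0 or rng.random() <= math.exp(delta / beta):
            current = candidate
            if cost(current) < cost(best):
                best = current
        beta *= cooling                          # beta_{k+1} <= beta_k
    return best, cost(best)


best_sequence, best_cost = simulated_annealing((2, 1, 4, 3))
print(best_sequence, best_cost)
```

Because the best schedule found so far is stored separately from the current schedule, accepting inferior moves never loses the incumbent.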

Discussion

SA has been applied successfully to many

industry problems

Allows us to escape local optima

Performance depends on

Construction of neighborhood

Cooling schedule


Topic 7

Tabu Search


Tabu Search

Similar to SA but uses a deterministic

acceptance/rejection criterion

Maintain a tabu list of schedule changes

Each move made is entered at the top of the tabu list

Fixed length (5-9)

Neighbors restricted to schedules not

requiring a tabu move


Tabu List

Rationale

Avoid returning to a local optimum

Disadvantage

A tabu move could lead to a better schedule

Length of list

Too short: cycling (“stuck”)

Too long: search too constrained

Tabu Search Algorithm

Step 1:

Set k = 1. Select an initial schedule S1 and set S0 = S1.

Step 2:

Select a candidate schedule Sc from N(Sk).

If the move $S_k \to S_c$ is on the tabu list, set $S_{k+1} = S_k$ and go to Step 3.

Otherwise set $S_{k+1} = S_c$ and enter the reverse move $S_c \to S_k$ at the top of the tabu list.

Push all the other entries down (and delete the last one).

If $G(S_c) < G(S_0)$, set $S_0 = S_c$.

Go to Step 3.

Step 3:

Let k = k+1. If k = N STOP; otherwise go to Step 2.

Single Machine Total

Weighted Tardiness Example

Jobs    1    2    3    4
p_j    10   10   13    4
d_j     4    2    1   12
w_j    14   12    1   12

Initialization

Tabu list empty L={}

Initial schedule S1 = (2,1,4,3)

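[Figure: Gantt chart of $S_1$ on a time axis from 0 to 40 with the deadlines marked.]

Tardiness of each job, in sequence order:

Job 2: 10 - 2 = 8

Job 1: 20 - 4 = 16

Job 4: 24 - 12 = 12

Job 3: 37 - 1 = 36

$\sum w_j T_j = 14 \cdot 16 + 12 \cdot 8 + 1 \cdot 36 + 12 \cdot 12 = 500$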

Neighborhood

Define the neighborhood as all schedules obtained by adjacent pairwise interchanges

The neighbors of $S_1$ = (2,1,4,3) are

(1,2,4,3)

(2,4,1,3)

(2,1,3,4)

Objective values are: 480, 436, 652

Select the best non-tabu schedule

Second Iteration

Let S0 =S2 = (2,4,1,3). G(S0)=436.

Let L = {(1,4)}

New neighborhood

Sequence     $\sum w_j T_j$
(4,2,1,3)    460
(2,1,4,3)    500
(2,4,3,1)    608

Third Iteration

Let S3 = (4,2,1,3), S0 = (2,4,1,3).

Let L = {(2,4),(1,4)}

New neighborhood

Sequence     $\sum w_j T_j$
(2,4,1,3)    436    Tabu!
(4,1,2,3)    440
(4,2,3,1)    632

Fourth Iteration

Let S4 = (4,1,2,3), S0 = (2,4,1,3).

Let L = {(1,2),(2,4)}

New neighborhood

Sequence     $\sum w_j T_j$
(1,4,2,3)    408
(4,2,1,3)    460
(4,1,3,2)    586

Fifth Iteration

Let S5 = (1,4,2,3), S0 = (1,4,2,3).

Let L = {(1,4),(1,2)}

This turns out to be the optimal

schedule

Tabu search still continues

Notes:

Optimal schedule only found via tabu list

We never know if we have found the optimum!

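The iterations of this example can be traced with a short script. It is a sketch under stated assumptions: following the example (rather than the generic algorithm slide), each iteration moves to the best non-tabu neighbor; the tabu list stores the pair of jobs that was swapped and has length 2, as in the lists L shown above. Function and variable names are hypothetical.

```python
from collections import deque

p = {1: 10, 2: 10, 3: 13, 4: 4}
d = {1: 4, 2: 2, 3: 1, 4: 12}
w = {1: 14, 2: 12, 3: 1, 4: 12}


def cost(sequence):
    """Total weighted tardiness of a sequence."""
    t = total = 0
    for j in sequence:
        t += p[j]
        total += w[j] * max(t - d[j], 0)
    return total


def tabu_search(initial, iterations=4, tabu_length=2):
    current = best = tuple(initial)
    tabu = deque(maxlen=tabu_length)       # newest entry on the left
    history = [current]
    for _ in range(iterations):
        candidates = []
        for i in range(len(current) - 1):
            pair = frozenset((current[i], current[i + 1]))
            if pair in tabu:               # swapping this pair again is tabu
                continue
            s = list(current)
            s[i], s[i + 1] = s[i + 1], s[i]
            candidates.append((cost(s), tuple(s), pair))
        if not candidates:
            break
        value, current, pair = min(candidates, key=lambda c: c[0])
        tabu.appendleft(pair)              # oldest entry drops off the right
        if value < cost(best):
            best = current
        history.append(current)
    return best, cost(best), history


best_sequence, best_cost, history = tabu_search((2, 1, 4, 3))
print(history, best_cost)
```

The trace passes through (2,4,1,3), (4,2,1,3), and (4,1,2,3) before reaching (1,4,2,3), accepting two worsening moves on the way because the improving moves are tabu.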

Topic 8

Genetic Algorithm


Genetic Algorithms

Schedules are individuals that form

populations

Each individual has associated fitness

Fit individuals reproduce and have

children in the next generation

Very fit individuals survive into the next

generation

Some individuals mutate


Total Weighted Tardiness

Single Machine Example

First Generation

Initial population selected randomly

Individual (sequence)    Fitness $\sum w_j T_j$
(2,1,3,4)                652
(3,4,1,2)                814
(4,1,3,2)                586

Mutation Operator

Individual    Fitness
(2,1,3,4)     652
(3,4,1,2)     814
(4,1,3,2)     586    (fittest individual)

Reproduction via mutation: (4,1,3,2) → (4,3,1,2)

Cross-Over Operator

Individual    Fitness
(2,1,3,4)     652    (fittest)
(4,3,1,2)     758
(4,1,3,2)     586    (fittest)

Reproduction via cross-over: parents (4,1,3,2) and (2,1,3,4) produce children (2,1,3,2) and (4,1,3,4)

Infeasible!
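The infeasibility arises because a plain one-point cross-over can repeat one job and drop another. One common remedy, shown here as an assumption rather than as the method used in the course, is to keep the head of one parent and fill in the missing jobs in the order they appear in the other parent. The function names are hypothetical.

```python
def naive_crossover(a, b, cut):
    """One-point cross-over: head of a, tail of b (may repeat jobs)."""
    return a[:cut] + b[cut:]


def repaired_crossover(a, b, cut):
    """Head of a, then the jobs not yet used in the order they appear in b."""
    head = a[:cut]
    return head + tuple(j for j in b if j not in head)


def is_feasible(sequence, n):
    """A sequence is feasible if every job 1..n appears exactly once."""
    return sorted(sequence) == list(range(1, n + 1))


parent_a = (4, 1, 3, 2)
parent_b = (2, 1, 3, 4)

print(naive_crossover(parent_b, parent_a, 1), naive_crossover(parent_a, parent_b, 1))
print(repaired_crossover(parent_a, parent_b, 1), repaired_crossover(parent_b, parent_a, 1))
```

With the cut after the first position, the naive operator reproduces the two infeasible children from the slide, while the repaired operator always returns a permutation.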

Representing Schedules

When using GA we often represent

individuals using binary strings

(0 1 1 0 1 0 1 0 1 0 1 0 0 )

(1 0 0 1 1 0 1 0 1 0 1 1 1 )

(0 1 1 0 1 0 1 0 1 0 1 1 1 )


Discussion

Genetic algorithms have been used very

successfully

Advantages:

Very generic

Easily programmed

Disadvantages:

A method that exploits special structure is

usually faster (if it exists)


Topic 9

Nested Partitions Method


The Nested Partitions Method

(Shameless self-promotion!)

Partitioning of all schedules

Similar to branch-and-bound & beam search

Random sampling & local search used to

find which node is most promising

Similar to beam search

Retains only one node (beam width = 1)

Allows for possible backtracking


Single Machine Total

Weighted Tardiness Example

Jobs    1    2    3    4
p_j    10   10   13    4
d_j     4    2    1   12
w_j    14   12    1   12

First Iteration

Most promising region: (•,•,•,•)

Subregions: (1,•,•,•), (2,•,•,•), (3,•,•,•), (4,•,•,•)

Random samples (uniform sampling, ATC rule, genetic algorithm)

Sample       $\sum w_j T_j$
(1,4,2,3)    408
(1,2,4,3)    480
(1,4,3,2)    554

Promising index: I(1,•,•,•) = 408

Notation

We let

$\sigma(k)$ be the most promising region in the k-th iteration

$\sigma_j(k)$ be the subregions, j = 1, 2, …, M

$\sigma_{M+1}(k)$ be the surrounding region

$\Sigma$ denote all possible regions (fixed set)

$\Sigma_0$ all regions that contain a single schedule

$I(\sigma)$ be the promising index of $\sigma \in \Sigma$

Second Iteration

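Superregion $s(\sigma(2))$: (•,•,•,•)

Most promising region $\sigma(2)$: (1,•,•,•)

Subregions:

$\sigma_1(2)$: (1,2,•,•)

$\sigma_2(2)$: (1,3,•,•)

$\sigma_3(2)$: (1,4,•,•)

Surrounding region $\sigma_4(2)$: {(2,•,•,•), (3,•,•,•), (4,•,•,•)}

Random samples from $\sigma_4(2)$:

Sample       $\sum w_j T_j$
(4,2,1,3)    460
(2,4,1,3)    436
(4,1,3,2)    586

$I(\sigma_4(2)) = 436$

[Figure: partition tree for the second iteration, with one subregion marked as having the best promising index.]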

Third Iteration

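Root: (•,•,•,•)

Superregion $s(\sigma(3))$: (1,•,•,•)

Most promising region $\sigma(3)$: (1,3,•,•)

Subregions:

$\sigma_1(3)$: (1,3,2,4)

$\sigma_2(3)$: (1,3,4,2)

Surrounding region $\sigma_3(3)$: {(1,2,•,•), (1,4,•,•), (2,•,•,•), (3,•,•,•), (4,•,•,•)}

Random samples from $\sigma_3(3)$:

Sample       $\sum w_j T_j$
(4,2,3,1)    632
(2,4,1,3)    436
(1,4,2,3)    408

$I(\sigma_3(3)) = 408$ (best promising index)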

Fourth Iteration

Backtracking!

Superregion $s(\sigma(4))$: (•,•,•,•)

Most promising region $\sigma(4)$: (1,•,•,•)

Subregions:

$\sigma_1(4)$: (1,2,•,•)

$\sigma_2(4)$: (1,3,•,•)

$\sigma_3(4)$: (1,4,•,•)

Surrounding region $\sigma_4(4)$: {(2,•,•,•), (3,•,•,•), (4,•,•,•)}

Finding the Optimal Schedule

The sequence $\{\sigma(k)\}_{k=1}^{\infty}$ is a Markov chain

Eventually the singleton region $\sigma_{opt} \in \Sigma_0$ containing the best schedule is visited

Absorbing state (never leave $\sigma_{opt}$)

Finite state space, so it is visited after finitely many iterations.

Discussion

Finds the best schedule in finitely many iterations

Of the other methods, only branch-and-bound can also guarantee this

If we can calculate/estimate/guarantee the

probability of moving in right direction:

Expected number of iterations

Probability of having found optimal schedule

Stopping rules that guarantee performance

(unique)

Works best if it incorporates dispatching rules and local search (genetic algorithms, etc.)

Topic 10

Mathematical Programming


Review of Mathematical

Programming

Many scheduling problems can be

formulated as mathematical programs:

Linear Programming (IE 534)

Nonlinear Programming (IE 631)

Integer Programming (IE 632)

See Appendix A in book.


Linear Programs

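Minimize

$c_1 x_1 + c_2 x_2 + \ldots + c_n x_n$

subject to

$a_{11} x_1 + a_{12} x_2 + \ldots + a_{1n} x_n \le b_1$

$a_{21} x_1 + a_{22} x_2 + \ldots + a_{2n} x_n \le b_2$

$\vdots$

$a_{m1} x_1 + a_{m2} x_2 + \ldots + a_{mn} x_n \le b_m$

$x_j \ge 0$ for $j = 1, \ldots, n$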

Solving LPs

LPs can be solved efficiently

Simplex method (1940s)

Interior point methods (1980s)

Polynomial time

Has been used in practice to solve huge

problems

IE 534


Nonlinear Programs

Minimize

$f(x_1, x_2, \ldots, x_n)$

subject to

$g_1(x_1, x_2, \ldots, x_n) \le 0$

$g_2(x_1, x_2, \ldots, x_n) \le 0$

$\vdots$

$g_m(x_1, x_2, \ldots, x_n) \le 0$

Solving Nonlinear

Programs

Optimality conditions

Karush-Kuhn-Tucker

Convex objective, convex constraints

Solution methods

Gradient methods (steepest descent,

Newton’s method)

Penalty and barrier function methods

Lagrangian relaxation


Integer Programming

LP where all variables must be integer

Mixed-integer programming (MIP)

Much more difficult than LP

Most useful for scheduling


Example: Single Machine

One machine and n jobs

Minimize $\sum_{j=1}^{n} w_j C_j$

Define the decision variables

$x_{jt} = 1$ if job j starts at time t, and $x_{jt} = 0$ otherwise.

IP Formulation

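Minimize

$\sum_{j=1}^{n} \sum_{t=0}^{C_{\max}-1} w_j (t + p_j) x_{jt}$

subject to

$\sum_{t=0}^{C_{\max}-1} x_{jt} = 1 \quad \forall j$

$\sum_{j=1}^{n} \sum_{s=\max\{t - p_j, 0\}}^{t-1} x_{js} \le 1 \quad \forall t$

$x_{jt} \in \{0, 1\} \quad \forall j, t$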
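To see how the time-indexed variables encode a schedule, the sketch below builds $x_{jt}$ from a sequence, checks the two constraint sets, and evaluates the objective. It is an illustration under assumptions: no solver is involved, the function names are hypothetical, the machine-capacity constraint is checked in its "at most one job in process" form, and the data are the processing times and weights of the four-job instance used earlier.

```python
p = {1: 10, 2: 10, 3: 13, 4: 4}
w = {1: 14, 2: 12, 3: 1, 4: 12}
horizon = sum(p.values())        # C_max on one machine without idle time


def to_time_indexed(sequence):
    """x[j, t] = 1 if job j starts at time t, 0 otherwise."""
    x = {(j, t): 0 for j in p for t in range(horizon)}
    t = 0
    for j in sequence:
        x[j, t] = 1
        t += p[j]
    return x


def objective(x):
    """Sum over j and t of w_j * (t + p_j) * x_jt, i.e. sum of w_j * C_j."""
    return sum(w[j] * (t + p[j]) * value for (j, t), value in x.items())


def is_feasible(x):
    # every job starts exactly once
    starts_once = all(sum(x[j, t] for t in range(horizon)) == 1 for j in p)
    # at most one job is in process during each unit interval [t-1, t]
    one_at_a_time = all(
        sum(x[j, s] for j in p for s in range(max(t - p[j], 0), t)) <= 1
        for t in range(1, horizon + 1)
    )
    return starts_once and one_at_a_time


x = to_time_indexed((4, 1, 2, 3))
print(is_feasible(x), objective(x))

# Moving job 1 to start at time 0 makes it overlap with job 4.
x_bad = dict(x)
x_bad[1, 4] = 0
x_bad[1, 0] = 1
print(is_feasible(x_bad))
```

The number of variables grows with the planning horizon as well as the number of jobs, which is one reason such integer programs are hard to solve for large instances.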

Solving IPs

Solution Methods

Cutting plane methods

Linear programming relaxation

Additional constraints to ensure integers

Branch-and-bound methods

Branch on the decision variables

Linear programming relaxation provides bounds

Generally difficult


Disjunctive Programming

Conjunctive

All constraints must be satisfied

Disjunctive

At least one of the constraints must be

satisfied

Zero-one integer programs

Use for job-shop scheduling
