
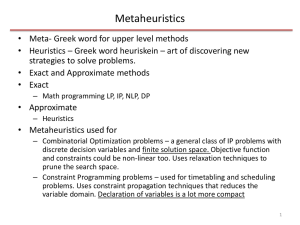

An Introduction to Metaheuristics

Chun-Wei Tsai

Electrical Engineering, National Cheng Kung University

Outline

Optimization Problem and Metaheuristics

Metaheuristic Algorithms

• Hill Climbing (HC)

• Simulated Annealing (SA)

• Tabu Search (TS)

• Genetic Algorithm (GA)

• Ant Colony Optimization (ACO)

• Particle Swarm Optimization (PSO)

Performance Consideration

Conclusion and Discussion


Optimization Problem

The optimization problems

– continuous

– discrete

The combinatorial optimization problem (COP) is a kind of discrete optimization problem.

Most COPs are NP-hard.

The problem definition of COP

The combinatorial optimization problem P = (S, f) can be defined as:

$$\operatorname{opt}_{s \in S} f(s)$$

where opt is either min or max, $x = \{x_1, x_2, \ldots, x_n\}$ is a set of variables, $D_1, D_2, \ldots, D_n$ are the variable domains, f is the objective function to be optimized, and $f : D_1 \times D_2 \times \cdots \times D_n \to \mathbb{R}^{+}$. In addition, $S = \{ s \mid s \in D_1 \times D_2 \times \cdots \times D_n \}$ is the search space. Then, to solve P, one has to find a solution $s \in S$ with the optimal objective function value.

[Figure: two candidate solutions, s1 and s2, in the search space D1 × D2 × D3 × D4, where each domain takes the values 1 to 4.]

Combinatorial Optimization Problem and Metaheuristics (1/3)

Complex Problems

– NP-complete problem (Time)

• The optimal solution generally cannot be found in a reasonable time with limited computing resources.

• E.g., Traveling Salesman Problem

– Large scale problem (Space)

• In general, this kind of problem cannot be handled efficiently with limited

memory space.

• E.g., Data Clustering Problem, astronomy, MRI


Combinatorial Optimization Problem and Metaheuristics (2/3)

Traveling Salesman Problem (n!)

– Shortest Routing Path

[Figure: two candidate routes, Path 1 and Path 2.]
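To make the n! growth concrete, here is a minimal brute-force sketch in Python. The distance matrix and the function names are illustrative assumptions, not part of the slides. Fixing the starting city leaves (n − 1)! orderings to check, which is still factorial:

```python
import itertools
import math

# Sketch under assumptions: dist is a symmetric distance matrix (hypothetical data).

def tour_length(tour, dist):
    """Length of a closed tour that returns to its starting city."""
    return sum(dist[tour[k]][tour[(k + 1) % len(tour)]] for k in range(len(tour)))

def brute_force_tsp(dist):
    """Check every tour that starts at city 0 and keep the shortest one."""
    n = len(dist)
    best_tour, best_len = None, math.inf
    for perm in itertools.permutations(range(1, n)):   # (n - 1)! orderings
        tour = (0,) + perm
        length = tour_length(tour, dist)
        if length < best_len:
            best_tour, best_len = tour, length
    return best_tour, best_len

# Four cities: adjacent cities are 1 apart, opposite cities 2 apart (hypothetical).
square = [[0, 1, 2, 1],
          [1, 0, 1, 2],
          [2, 1, 0, 1],
          [1, 2, 1, 0]]
print(brute_force_tsp(square))   # ((0, 1, 2, 3), 4)
print(math.factorial(20 - 1))    # orderings to check for only 20 cities
```

Already at 20 cities the loop would need about 1.2 × 10^17 iterations, which is why exhaustive search is abandoned in favor of metaheuristics.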

Combinatorial Optimization Problem and Metaheuristics (3/3)

Metaheuristics

– It works by guessing the right directions toward the optimal or a near-optimal solution of a complex problem, so that the space searched, and thus the time required, can be significantly reduced.

[Figure: candidate solutions s1 to s5 in a search space spanned by D1 and D2, with objective function f and the optimum opt.]

The Concept of Metaheuristic Algorithms

The word “meta” means higher level

while the word “heuristics” means to

find. (Glover, 1986)

The operators of metaheuristics

– Transition: plays the role of searching the solutions (exploration and exploitation).

– Evaluation: evaluates the objective function value of the problem in question.

– Determination: plays the role of deciding the search directions.

[Figure: the three operators in a D1 × D2 search space: Transition (solutions s1 = (1,1) and s2 = (2,2)), Evaluation (objective values o1 = 5 and o2 = 3), and Determination (search directions d1 and d2 leading to s'1 and s'2).]
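The three operators can be sketched as one generic loop. This is a minimal Python sketch under assumptions of our own: solutions are bit strings, the objective is the number of 1s (to be maximized), Transition flips one random bit of each solution, and Determination keeps whichever of a solution's old and new versions is better. All function names are hypothetical.

```python
import random

# Sketch under assumptions: toy bit-string problem, hypothetical operator names.

def evaluate(bits):
    """Evaluation: objective value = number of 1s (assumed toy objective)."""
    return sum(bits)

def transit(solutions, rng):
    """Transition: flip one random bit of each solution to create new candidates."""
    new = []
    for s in solutions:
        t = list(s)
        t[rng.randrange(len(t))] ^= 1
        new.append(t)
    return new

def determine(old, new):
    """Determination: for each solution, keep the better of its old and new version."""
    return [n if evaluate(n) > evaluate(o) else o for o, n in zip(old, new)]

def metaheuristic(n_solutions=4, n_bits=5, n_iters=100, seed=0):
    rng = random.Random(seed)
    s = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(n_solutions)]
    for _ in range(n_iters):          # while the termination criterion is not met
        s_new = transit(s, rng)       # Transition
        s = determine(s, s_new)       # Evaluation + Determination
    return max(s, key=evaluate)

best = metaheuristic()
print(best, evaluate(best))
```

Swapping in different `transit` and `determine` functions is what distinguishes the algorithms that follow.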

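An example: Bulls and cows

Check all candidate solutions: each round consists of a Guess (Transition), Feedback (Evaluation), and Deduction (Determination).

– Secret number: 9305

– Opponent's try: 1234

• Transition: guess 1234

• Evaluation: feedback 0A1B (no digit in the right position, one correct digit in the wrong position)

• Determination: exactly one of the digits 1, 2, 3, 4 is in the secret number

– Opponent's try: 5678

• Transition: guess 5678

• Evaluation: feedback 0A1B

• Determination: exactly one of the digits 5, 6, 7, 8 is in the secret number; since the four digits of the secret are distinct, the digits 0 and 9 must be in the secret number

(Example adapted from Wikipedia)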
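The "check all candidate solutions" idea can be sketched in Python: enumerate every 4-digit secret with distinct digits and keep only those consistent with all feedback received so far. Scoring by set intersection assumes distinct digits, as in the standard game; the function names are hypothetical.

```python
from itertools import permutations

# Sketch under assumptions: secrets and guesses use 4 distinct digits (hypothetical helpers).

def score(secret, guess):
    """Return (A, B): right digit in the right place, right digit in the wrong place."""
    bulls = sum(s == g for s, g in zip(secret, guess))
    common = len(set(secret) & set(guess))   # valid only because digits are distinct
    return bulls, common - bulls

def consistent_candidates(history):
    """All distinct-digit 4-digit codes that reproduce every (guess, feedback) pair."""
    codes = ("".join(p) for p in permutations("0123456789", 4))
    return [c for c in codes if all(score(c, g) == fb for g, fb in history)]

print(score("9305", "1234"), score("9305", "5678"))    # (0, 1) (0, 1)
history = [("1234", (0, 1)), ("5678", (0, 1))]
candidates = consistent_candidates(history)
print(all("0" in c and "9" in c for c in candidates))   # True
```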

Classification of Metaheuristics (1/2)

The most important way to classify metaheuristics

– population-based vs. single-solution-based (Blum and Roli, 2003)

The single-solution-based algorithms work on a single solution, thus the name

– Hill Climbing

– Simulated Annealing

– Tabu Search

The population-based algorithms work on a population of solutions, thus the name

– Genetic Algorithm

– Ant Colony Optimization

– Particle Swarm Optimization


Classification of Metaheuristics (2/2)

Single-solution-based

– Hill Climbing

– Simulated Annealing

– Tabu Search

Population-based

– Genetic Algorithm

Swarm Intelligence

– Ant Colony Optimization

– Particle Swarm Optimization


Hill Climbing (1/2)

Greedy algorithm

Uses the objective function as a heuristic and repeatedly moves to a better neighboring solution, i.e., toward a better part of the landscape

begin
    t ← 0
    Randomly create a string vc
    Repeat
        evaluate vc
        select m new strings from the neighborhood of vc
        Let vn be the best of the m new strings
        If f(vc) < f(vn) then vc ← vn
        t ← t + 1
    Until t = N
end
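A runnable version of the hill-climbing pseudocode, as a sketch under assumptions of our own: vc is a bit string, f counts its 1s (maximization, matching the test f(vc) < f(vn)), and each neighbor flips one random bit. The values m = 3 and N = 100 are arbitrary.

```python
import random

# Sketch under assumptions: toy "count the 1s" objective, one-bit-flip neighborhood.

def f(bits):
    """Objective to maximize: the number of 1s (assumed toy problem)."""
    return sum(bits)

def random_neighbors(vc, m, rng):
    """m neighbors of vc, each made by flipping one randomly chosen bit."""
    result = []
    for _ in range(m):
        nb = list(vc)
        nb[rng.randrange(len(vc))] ^= 1
        result.append(nb)
    return result

def hill_climbing(n_bits=5, m=3, N=100, seed=0):
    rng = random.Random(seed)
    vc = [rng.randint(0, 1) for _ in range(n_bits)]    # randomly create a string vc
    for t in range(N):                                  # repeat ... until t = N
        vn = max(random_neighbors(vc, m, rng), key=f)   # best of the m new strings
        if f(vc) < f(vn):                               # move only if it is better
            vc = vn
    return vc

result = hill_climbing()
print(result, f(result))
```

On this toy problem every local optimum is also global, so hill climbing cannot get stuck; on a multimodal landscape it can, which is what SA and TS address.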


Hill Climbing (2/2)

[Figure: a search space with a global optimum, a local optimum, and two starting points.]

Simulated Annealing (1/3)

Metropolis et al., 1953

Inspired by the annealing process in thermodynamics and metallurgy

To escape local optima, SA accepts worse moves with a probability controlled by a parameter called the temperature

The temperature is lowered gradually so that the search converges

Simulated Annealing (2/3)

begin
    t ← 0
    Randomly create a string vc
    Repeat
        evaluate vc
        select 3 new strings from the neighborhood of vc
        Let vn be the best of the 3 new strings
        If f(vc) < f(vn)
            then vc ← vn
        Else if (T > random()) then vc ← vn
        Update T according to the annealing schedule
        t ← t + 1
    Until t = N
end

Example (f counts the 1s in the string):

– vc = 01110, f(vc) = 3

– Neighbors: n1 = 00110, n2 = 11110, n3 = 01100

– vn = 11110; since f(vc) = 3 < f(vn) = 4, vc = vn = 11110
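A Python sketch of SA on the same toy problem (maximize the number of 1s). One deliberate substitution: instead of the slide's simplified test T > random(), it uses the standard Metropolis acceptance rule, accepting a worse vn with probability exp((f(vn) − f(vc)) / T). The geometric cooling schedule T ← αT and all parameter values are our assumptions.

```python
import math
import random

# Sketch under assumptions: toy objective, Metropolis acceptance, geometric cooling.

def f(bits):
    """Objective to maximize: the number of 1s (assumed toy problem)."""
    return sum(bits)

def simulated_annealing(n_bits=5, m=3, N=200, T0=1.0, alpha=0.95, seed=0):
    rng = random.Random(seed)
    vc = [rng.randint(0, 1) for _ in range(n_bits)]
    best, T = list(vc), T0
    for t in range(N):
        neighbors = []
        for _ in range(m):                      # m one-bit-flip neighbors
            nb = list(vc)
            nb[rng.randrange(n_bits)] ^= 1
            neighbors.append(nb)
        vn = max(neighbors, key=f)              # best of the m new strings
        delta = f(vn) - f(vc)
        # Metropolis rule: accept improvements and ties; accept worse moves
        # with probability exp(delta / T), which shrinks as T decreases
        if delta > 0 or rng.random() < math.exp(delta / T):
            vc = vn
        if f(vc) > f(best):
            best = list(vc)                     # remember the best solution seen
        T *= alpha                              # geometric annealing schedule
    return best

best = simulated_annealing()
print(best, f(best))
```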

Simulated Annealing (3/3)

[Figure: a search space with a global optimum, two local optima, and two starting points.]

Tabu Search (1/3)

Fred W. Glover, 1989

To avoid falling into local optima and revisiting the same solutions, TS saves the recently visited solutions in a list called the tabu list (a short-term memory whose size is a parameter).

Moreover, when a new solution is generated, it is inserted into the tabu list and stays there until it is replaced by a newer solution in a first-in-first-out manner.

http://spot.colorado.edu/~glover/

Tabu Search (2/3)

begin
    t ← 0
    Randomly create a string vc
    Repeat
        evaluate vc
        select 3 new strings from the neighborhood of vc that are not in the tabu list
        Let vn be the best of the 3 new strings
        If f(vc) < f(vn) then vc ← vn
        Update the tabu list TL
        t ← t + 1
    Until t = N
end
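A Python sketch of TS on the same toy problem, under our own assumptions: solutions are bit tuples, the 3 neighbors come from flipping 3 distinct random bits, and the tabu list is a fixed-size FIFO queue (`collections.deque` with `maxlen`, which drops the oldest entry automatically). One deliberate difference from the pseudocode: like most TS variants, this sketch always moves to the best non-tabu neighbor, even a worse one, and remembers the best solution found; that is what lets TS walk out of a local optimum.

```python
import random
from collections import deque

# Sketch under assumptions: toy objective, always move to best admissible neighbor.

def f(bits):
    """Objective to maximize: the number of 1s (assumed toy problem)."""
    return sum(bits)

def tabu_search(n_bits=5, m=3, N=100, tabu_size=4, seed=0):
    rng = random.Random(seed)
    vc = tuple(rng.randint(0, 1) for _ in range(n_bits))
    best = vc
    tabu = deque([vc], maxlen=tabu_size)        # FIFO short-term memory
    for t in range(N):
        candidates = []
        for i in rng.sample(range(n_bits), m):  # m distinct one-bit flips
            nb = list(vc)
            nb[i] ^= 1
            nb = tuple(nb)
            if nb not in tabu:                  # skip solutions in the tabu list
                candidates.append(nb)
        if not candidates:                      # every sampled neighbor is tabu
            continue
        vc = max(candidates, key=f)             # best admissible move, even if worse
        tabu.append(vc)                         # oldest entry drops out automatically
        if f(vc) > f(best):
            best = vc
    return best

best = tabu_search()
print(best, f(best))
```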

Tabu Search (3/3)

[Figure: a search space with a global optimum, two local optima, and a starting point.]

Genetic Algorithm (1/5)

John H. Holland, 1975

The genetic algorithm is one of the most important population-based algorithms.

Schema Theorem

– Short, low-order, above-average schemata receive exponentially increasing trials in subsequent generations of a genetic algorithm.

David E. Goldberg

– http://www.illigal.uiuc.edu/web/technical-reports/

Genetic Algorithm (2/5)


Genetic Algorithm (3/5)


Genetic Algorithm (4/5)

Initialization operators

Selection operators

– Evaluate the fitness function (or the objective function)

– Determine the search direction

Reproduction operators

Crossover operators

– Recombine the solutions to generate new candidate solutions

Mutation operators

– Help avoid local optima

Genetic Algorithm (5/5)

begin
    t ← 0
    initialize Pt
    evaluate Pt
    while (not terminated) do
    begin
        t ← t + 1
        select Pt from Pt-1
        crossover and mutation Pt
        evaluate Pt
    end
end

Example (the fitness is the number of 1s in the string):

– Initialization: p1 = 01110, p2 = 01110, p3 = 11100, p4 = 00010

– Evaluation: f1 = 3, f2 = 3, f3 = 3, f4 = 1

– Selection probabilities (fi / Σf): s1 = 0.3, s2 = 0.3, s3 = 0.3, s4 = 0.1; after selection, p4 is replaced by a copy of 11100

– Crossover (pairs p1/p3 and p2/p4; the space marks the crossover point): p1 = 011 10, p3 = 111 00 → c1 = 011 00, c3 = 111 10; p2 = 01 110, p4 = 11 100 → c2 = 01 100, c4 = 11 110; that is, c1 = 01100, c2 = 01100, c3 = 11110, c4 = 11110

– Mutation (one bit flipped in each child): c1 = 01101, c2 = 01110, c3 = 11010, c4 = 11111
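A compact Python sketch of the GA loop on the same bit-string problem. Assumptions of ours: roulette-wheel (fitness-proportional) selection, which reproduces the selection probabilities 0.3, 0.3, 0.3, 0.1 of the example; one-point crossover on consecutive pairs; and bit-flip mutation with a per-bit rate pm rather than exactly one flip per child. Parameter values are arbitrary.

```python
import random

# Sketch under assumptions: roulette selection, one-point crossover, per-bit mutation.

def fitness(bits: str) -> int:
    """Number of 1s in the string, as in the example above."""
    return bits.count("1")

def selection_probabilities(pop):
    """Roulette-wheel probabilities f_i / sum(f)."""
    fs = [fitness(p) for p in pop]
    total = sum(fs)
    return [fi / total for fi in fs]

def roulette_select(pop, rng):
    weights = [max(fitness(p), 1e-9) for p in pop]   # floor keeps choices() valid
    return rng.choices(pop, weights=weights, k=len(pop))

def one_point_crossover(a, b, cut):
    """Swap the tails of a and b after position cut."""
    return a[:cut] + b[cut:], b[:cut] + a[cut:]

def mutate(bits, pm, rng):
    """Flip each bit independently with probability pm."""
    return "".join(("1" if c == "0" else "0") if rng.random() < pm else c for c in bits)

def genetic_algorithm(pop, generations=30, pc=0.9, pm=0.1, seed=0):
    rng = random.Random(seed)
    best = max(pop, key=fitness)
    for _ in range(generations):
        pop = roulette_select(pop, rng)              # select Pt from Pt-1
        children = []
        for i in range(0, len(pop) - 1, 2):          # pair up consecutive parents
            a, b = pop[i], pop[i + 1]
            if rng.random() < pc:
                a, b = one_point_crossover(a, b, rng.randrange(1, len(a)))
            children += [mutate(a, pm, rng), mutate(b, pm, rng)]
        pop = children
        best = max([best] + pop, key=fitness)        # evaluate Pt, keep the best
    return best

P0 = ["01110", "01110", "11100", "00010"]
print(selection_probabilities(P0))                # [0.3, 0.3, 0.3, 0.1]
print(one_point_crossover("01110", "11100", 3))   # ('01100', '11110')
print(genetic_algorithm(P0))
```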

Ant Colony Optimization (1/5)

Marco Dorigo, 1992

Ant colony optimization (ACO) is another well-known population-based metaheuristic, which originated from Dorigo's observation of the behavior of ants

The ants are able to find the shortest path from a food source to the nest by exploiting pheromone information

http://iridia.ulb.ac.be/~mdorigo/HomePageDorigo/

Ant Colony Optimization (2/5)

[Figure: ants traveling between the nest and a food source.]

Ant Colony Optimization (3/5)

Create the initial weights of each path

While the termination criterion is not met

Create the ant population s = {s1, s2, . . . , sn}

Each ant si moves one step to the next city according to the pheromone rule

Update the pheromone

End

Ant Colony Optimization (4/5)

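Solution construction

– The probability $p_{ij}^{k}$ that ant k chooses sub-solution j as the next sub-solution after i is defined as follows:

$$p_{ij}^{k} = \frac{\tau_{ij}^{\alpha}\,\eta_{ij}^{\beta}}{\sum_{l \in N_i^k} \tau_{il}^{\alpha}\,\eta_{il}^{\beta}}, \qquad j \in N_i^k,$$

where $N_i^k$ is the set of feasible (or candidate) sub-solutions that can be the next sub-solution of i; $\tau_{ij}$ is the pheromone value between the sub-solutions i and j; $\eta_{ij}$ is a heuristic value, which is also called the heuristic information; and α and β are parameters that weight the relative influence of the pheromone and the heuristic information.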

Ant Colony Optimization (5/5)

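Pheromone update is employed for updating the pheromone value on each edge e(i, j), which is defined as follows:

$$\tau_{ij} \leftarrow (1 - \rho)\,\tau_{ij} + \sum_{k=1}^{m} \Delta\tau_{ij}^{k},
\qquad
\Delta\tau_{ij}^{k} =
\begin{cases}
1 / L_k & \text{if ant } k \text{ used edge } e(i, j) \text{ in its tour},\\
0 & \text{otherwise},
\end{cases}$$

where m is the number of ants, ρ ∈ (0, 1] is the pheromone evaporation rate, and $L_k$ represents the length of the tour created by ant k.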
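A minimal Ant System sketch in Python that follows the two formulas above for a symmetric TSP. Assumptions of ours: η_ij = 1/d_ij, every edge starts with pheromone 1, each ant starts at a random city, and α = 1, β = 2, ρ = 0.5. `random.choices` draws j with probability proportional to τ_ij^α η_ij^β, which equals p_ij^k after normalization over N_i^k.

```python
import random

# Sketch under assumptions: symmetric TSP, eta = 1/d, hypothetical parameter values.

def tour_length(tour, dist):
    return sum(dist[tour[a]][tour[(a + 1) % len(tour)]] for a in range(len(tour)))

def ant_system(dist, n_ants=10, n_iters=50, alpha=1.0, beta=2.0, rho=0.5, seed=0):
    rng = random.Random(seed)
    n = len(dist)
    tau = [[1.0] * n for _ in range(n)]                          # initial pheromone
    eta = [[0.0 if i == j else 1.0 / dist[i][j] for j in range(n)] for i in range(n)]
    best_tour, best_len = None, float("inf")
    for _ in range(n_iters):
        tours = []
        for _ in range(n_ants):                                  # solution construction
            tour = [rng.randrange(n)]
            while len(tour) < n:
                i = tour[-1]
                feasible = [j for j in range(n) if j not in tour]   # N_i^k
                weights = [tau[i][j] ** alpha * eta[i][j] ** beta for j in feasible]
                tour.append(rng.choices(feasible, weights=weights)[0])
            tours.append(tour)
        for i in range(n):                                       # evaporation (1 - rho)
            for j in range(n):
                tau[i][j] *= 1.0 - rho
        for tour in tours:                                       # deposit 1 / L_k
            L = tour_length(tour, dist)
            if L < best_len:
                best_tour, best_len = tour, L
            for a in range(n):
                i, j = tour[a], tour[(a + 1) % n]
                tau[i][j] += 1.0 / L
                tau[j][i] += 1.0 / L                             # symmetric TSP
    return best_tour, best_len

square = [[0, 1, 2, 1], [1, 0, 1, 2], [2, 1, 0, 1], [1, 2, 1, 0]]   # hypothetical
print(ant_system(square))
```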

Particle Swarm Optimization (1/4)

James Kennedy and Russ Eberhart, 1995

Particle swarm optimization (PSO) originated from Kennedy and Eberhart's observation of social behavior

Key concepts: global best, local best, and trajectory

http://clerc.maurice.free.fr/pso/

Particle Swarm Optimization (2/4)

IPSO, http://appshopper.com/education/pso

http://abelhas.luaforge.net/


Particle Swarm Optimization (3/4)

Create the initial population (particle positions) s = {s1, s2, . . . , sn} and particle velocities v = {v1, v2, . . . , vn}
While the termination criterion is not met
    Evaluate the fitness value fi of each particle si
    For each particle
        Update the particle position and velocity
        IF (fi < f'i) update the local best f'i = fi
        IF (fi < fg) update the global best fg = fi
End

[Figure: a particle's new motion combines its current motion (trajectory), its local (personal) best, and the global best.]

Particle Swarm Optimization (4/4)

The particle's velocity and position update equations:

$$v_i^{k+1} = w\,v_i^{k} + c_1 r_1 \left(pb_i - s_i^{k}\right) + c_2 r_2 \left(gb - s_i^{k}\right)$$

$$s_i^{k+1} = s_i^{k} + v_i^{k+1}$$

where $v_i^k$: velocity of particle i at iteration k,

w, c1, c2: weighting factors (w is the inertia weight),

r1, r2: uniformly distributed random numbers between 0 and 1,

$s_i^k$: current position of particle i at iteration k,

$pb_i$: pbest of particle i,

gb: gbest of the group.

Larger w → stronger global search ability

Smaller w → stronger local search ability
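A minimal Python sketch of these update equations, minimizing the sphere function f(s) = Σ s_d² (our choice of test function). Assumptions of ours: velocities start at zero, positions start uniformly in [−5, 5], w = 0.7 and c1 = c2 = 1.5, and pbest/gbest are updated right after each particle moves (an asynchronous variant).

```python
import random

# Sketch under assumptions: sphere test function, hypothetical parameter values.

def sphere(x):
    return sum(xi * xi for xi in x)

def pso(f, dim=2, n_particles=20, n_iters=100, w=0.7, c1=1.5, c2=1.5,
        bounds=(-5.0, 5.0), seed=0):
    rng = random.Random(seed)
    lo, hi = bounds
    s = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    v = [[0.0] * dim for _ in range(n_particles)]
    pb = [list(si) for si in s]                  # personal (local) best positions
    pb_f = [f(si) for si in s]
    g = min(range(n_particles), key=lambda i: pb_f[i])
    gb, gb_f = list(pb[g]), pb_f[g]              # global best
    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v[i][d] = (w * v[i][d]
                           + c1 * r1 * (pb[i][d] - s[i][d])
                           + c2 * r2 * (gb[d] - s[i][d]))
                s[i][d] += v[i][d]               # s^{k+1} = s^k + v^{k+1}
            fi = f(s[i])
            if fi < pb_f[i]:                     # update the local best
                pb[i], pb_f[i] = list(s[i]), fi
                if fi < gb_f:                    # update the global best
                    gb, gb_f = list(s[i]), fi
    return gb, gb_f

gb, gb_f = pso(sphere)
print(gb, gb_f)
```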

Summary


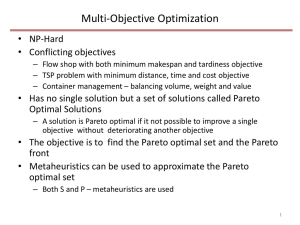

Performance Consideration

Enhancing the Quality of the End Result

– How to balance intensification and diversification

– Initialization Method

– Hybrid Method

– Operator Enhancement

Reducing the Running Time

– Parallel Computing

– Hybrid Metaheuristics

– Redesigning the procedure of Metaheuristics


Large Scale Problem

Methods for solving large scale problems (Xu and Wunsch, 2008)

– random sampling

– data condensation

– density-based approaches

– grid-based approaches

– divide and conquer

– Incremental learning


How to balance intensification and diversification

Intensification

– Local search, 2-opt, n-opt

Diversification

– Keeping diversity

– Fitness sharing

– Increasing the number of individuals

• Requires more computing resources

– Re-creating solutions

Too much intensification → local optimum

Too much diversification → random search

Reducing the Running Time

Parallel computing

– This method generally does not reduce the total amount of computation; it spreads the computation over multiple processors.

– Master-slave model, fine-grained model (cellular model), and coarse-grained model (island model) [Cantú-Paz, 1998; Cantú-Paz and Goldberg, 2000]

[Figure: island model. The population is divided into four sub-populations (Island 1 to Island 4) that exchange individuals through a migration procedure.]
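The migration procedure of the island model can be sketched as follows. This is a hypothetical Python sketch with a ring topology: island i sends its k best individuals to island i + 1, where they replace the k worst. The topology, k, and the function name are our assumptions, since the slide does not specify them.

```python
# Hypothetical sketch: ring-topology migration between islands (assumed policy).

def migrate_ring(islands, fitness, k=1):
    """Each island's k best go to the next island, replacing its k worst.
    Island sizes are preserved."""
    n = len(islands)
    # sorted(..., reverse=True) is stable, so ties keep their original order
    emigrants = [sorted(isl, key=fitness, reverse=True)[:k] for isl in islands]
    migrated = []
    for i, isl in enumerate(islands):
        survivors = sorted(isl, key=fitness, reverse=True)[:len(isl) - k]
        migrated.append(survivors + emigrants[(i - 1) % n])   # from the previous island
    return migrated

def ones(bits):
    return bits.count("1")

islands = [["000", "001"], ["111", "110"], ["010", "100"], ["011", "101"]]
print(migrate_ring(islands, ones))
# [['001', '011'], ['111', '001'], ['010', '111'], ['011', '010']]
```

In a full island-model GA, each island would run its own GA generations between calls, for example every few generations.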

Multiple-Search Genetic Algorithm (1/3)

The evolutionary process of MSGA.


Multiple-Search Genetic Algorithm (2/3)

Multiple-Search Genetic Algorithm (MSGA) vs. Learnable Evolution Model (LEM)

[Figure: Tsai’s MSGA vs. Michalski’s LEM on the TSP instance pcb442.]

Multiple-Search Genetic Algorithm (3/3)

It may suffer from premature convergence because the diversity of the solutions may decrease too quickly.

Each iteration may take more computation time than an iteration of the original algorithm.

Pattern Reduction Algorithm

Concept

Assumptions and Limitations

The Proposed Algorithm

– Detection

– Compression

– Removal


Concept (1/4)

Our observations show that many of the computations performed by most, if not all, metaheuristic algorithms during their convergence process are redundant.

(Data courtesy of Su and Chang)

Concept (2/4)


Concept (3/4)


Concept (4/4)

[Figure: two solutions, C1 = 0 0 1 0 and C2 = 1 1 1 0, over generations g = 1, 2, …, n. With metaheuristics alone, all s = 4 sub-solutions are processed in every generation. With metaheuristics + pattern reduction, the last two sub-solutions, which are identical in C1 and C2, are removed, so only s = 2 remain (C1 = 0 0, C2 = 1 1) from g = 2 on.]

Assumptions and Limitations

Assumptions

– Some of the sub-solutions at a certain point in the evolution process will eventually end up being part of the final solution (Schema Theory, Holland 1975).

– Pattern Reduction (PR) is able to detect these sub-solutions as early as possible during the evolution process of metaheuristics.

Limitations

– The proposed algorithm requires that the sub-solutions be integer or binary encoded (i.e., combinatorial optimization problems).

Some Results of PR


The Proposed Algorithm

Create the initial solutions P = {p1, p2, . . . , pn}
While the termination criterion is not met
    Apply the transition, evaluation, and determination operators of the metaheuristic in question to P
    /* Begin PR */
    Detect the sub-solutions R = {r1, r2, . . . , rm} that have a high probability of not being changed
    Compress the sub-solutions in R into a single pattern, say, c
    Remove the sub-solutions in R from P; that is, P = P \ R
    P = P ∪ {c}
    /* End PR */
End
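A minimal Python sketch of this loop, under assumptions of our own: solutions are equal-length lists, detection is space-oriented (a locus is removed once every solution holds the same value there), the compressed pattern c is a dictionary from locus to value, and "removal" means the metaheuristic's operators are only applied to the remaining active loci. The `step` callback stands in for the transition, evaluation, and determination operators and is hypothetical.

```python
from typing import Callable, Dict, List

# Hypothetical sketch of PR around an arbitrary metaheuristic step.
Solution = List[int]
Step = Callable[[List[Solution], List[int]], List[Solution]]

def run_with_pr(population: List[Solution], step: Step, n_iters: int):
    active = list(range(len(population[0])))   # loci still being searched
    pattern: Dict[int, int] = {}                # compressed pattern c: locus -> value
    for _ in range(n_iters):
        # Metaheuristic operators, applied to the active loci only (hypothetical callback)
        population = step(population, active)
        # /* Begin PR */ Detection: loci on which all solutions agree
        R = [i for i in active if len({sol[i] for sol in population}) == 1]
        # Compression: keep a single copy of the common values in c
        for i in R:
            pattern[i] = population[0][i]
        # Removal: later iterations skip these loci (P = P \ R, P = P ∪ {c})
        active = [i for i in active if i not in pattern]
        # /* End PR */
    return population, active, pattern

# Usage with a do-nothing step, on the C1/C2 example of Concept (4/4)
identity: Step = lambda pop, active: pop
pop, active, c = run_with_pr([[0, 0, 1, 0], [1, 1, 1, 0]], identity, n_iters=1)
print(active, c)   # [0, 1] {2: 1, 3: 0}
```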

Detection

Time-Oriented

– aka static patterns

– Detect patterns that have not changed in a certain number of iterations

T1: 1352476

T2: 7352614

T3: 7352416

…

Tn: 7 C1 416 (C1 = 352)

Space-Oriented

– Detect sub-solutions that are common at certain loci

T1 P1: 1352476

P2: 7352614

…

Tn P1: 1 C1 476 (C1 = 352)

P2: 7 C1 614

Problem-Specific

– E.g., for k-means, we assume that patterns near a centroid are unlikely to be reassigned to another cluster.

[Figure: problem-specific detection for k-means. Twelve patterns x1 to x12 are grouped into Cluster 0, Cluster 1, and Cluster 2; patterns close to their cluster mean are removed, which reduces the number of patterns P from 12 to 9.]
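Time-oriented detection, space-oriented detection, and compression can be sketched on the permutation example above. This is a hypothetical Python sketch: a locus counts as static if its value is identical in the last k snapshots of a solution, and compression assumes the detected loci are contiguous so they can be replaced by one symbol; both rules are our simplifying assumptions.

```python
# Hypothetical sketch of PR detection and compression on string-encoded solutions.

def time_oriented_detect(history, k):
    """Loci whose value is the same in the last k snapshots of one solution."""
    recent = history[-k:]
    return [i for i in range(len(recent[0])) if len({snap[i] for snap in recent}) == 1]

def space_oriented_detect(population):
    """Loci at which all solutions of the population share the same value."""
    return [i for i in range(len(population[0])) if len({p[i] for p in population}) == 1]

def compress(solution, loci, label="C1"):
    """Replace a contiguous run of loci by a single symbol; return (reduced, pattern)."""
    if not loci:
        return list(solution), ""
    if loci != list(range(loci[0], loci[-1] + 1)):
        raise ValueError("this sketch only compresses contiguous loci")
    pattern = "".join(solution[i] for i in loci)
    reduced = list(solution[:loci[0]]) + [label] + list(solution[loci[-1] + 1:])
    return reduced, pattern

snapshots = ["1352476", "7352614", "7352416"]        # T1, T2, T3
loci = time_oriented_detect(snapshots, k=3)
print(loci)                                           # [1, 2, 3]
print(compress(snapshots[-1], loci))                  # (['7', 'C1', '4', '1', '6'], '352')
print(space_oriented_detect(["1352476", "7352614"]))  # [1, 2, 3]
```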

Compression and Removal

The compression module compresses all the sub-solutions to be removed, whereas the removal module removes all the sub-solutions once they are compressed.

Lossy Method

– May cause a “small” loss in the quality of the end result.

An Example


Simulation Environment

The empirical analysis was conducted on an IBM X3400 machine with a 2.0 GHz Xeon CPU and 8 GB of memory, running CentOS 5.0 with Linux 2.6.18.

Enhancement in percentage

– $\dfrac{T_n - T_o}{T_o} \times 100$

TSP

– Traditional Genetic Algorithm (TGA), HeSEA, Learnable Evolution Model (LEM), Ant Colony

System (ACS), Tabu Search (TS), Tabu GA, Simulated Annealing (SA).

Clustering

– Standard k-means (KM), Relational k-means (RKM), Kernel k-means (KKM), Scheme Kernel k-means (SKKM), Triangle Inequality k-means (TKM), Genetic k-means Algorithm (GKA), and Particle Swarm Optimization (PSO)

The Results of Traveling Salesman Problem (1/2)


The Results of Traveling Salesman Problem (2/2)


The Results of Data Clustering Problem (1/3)

Data sets for Clustering


The Results of Data Clustering Problem (2/3)


The Results of Data Clustering Problem (3/3)


Time Complexity

The time complexity of standard k-means is $O(nkld)$, where n is the number of patterns, k the number of clusters, l the number of iterations, and d the number of dimensions.

Ideally, the running time of “k-means with PR” is independent of the number of iterations.

In reality, however, our experimental results show that setting the removal bound to 80% gives the best results.
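A hypothetical Python sketch of k-means with a problem-specific PR rule: after each centroid update, patterns closer than a radius to their centroid are treated as unlikely to be reassigned, so they are removed from the assignment step and compressed into per-cluster sums and counts that still feed the centroid update. The radius rule, the fixed number of iterations, and using the first k patterns as initial centroids (for determinism) are our assumptions, not the exact method behind the results above. Because the assignment step only touches active patterns, its cost per iteration shrinks as patterns are removed.

```python
import math

# Hypothetical sketch: k-means with PR-style removal of patterns near their centroid.

def kmeans_pr(points, k, n_iters=20, radius=0.5):
    dim = len(points[0])
    centroids = [list(p) for p in points[:k]]     # first k patterns as initial means
    labels = [0] * len(points)
    active = list(range(len(points)))             # patterns still being reassigned
    frozen_sum = [[0.0] * dim for _ in range(k)]  # compressed removed patterns: sums
    frozen_cnt = [0] * k                          # ... and counts per cluster
    for _ in range(n_iters):
        # Assignment: only active patterns are compared with the k centroids
        for i in active:
            labels[i] = min(range(k), key=lambda c: math.dist(points[i], centroids[c]))
        # Update: mean over the compressed and the active patterns of each cluster
        sums = [list(s) for s in frozen_sum]
        cnts = list(frozen_cnt)
        for i in active:
            c = labels[i]
            cnts[c] += 1
            for d in range(dim):
                sums[c][d] += points[i][d]
        centroids = [([x / cnts[c] for x in sums[c]] if cnts[c] else centroids[c])
                     for c in range(k)]
        # PR: detect patterns near their centroid, compress them, remove them
        still_active = []
        for i in active:
            c = labels[i]
            if math.dist(points[i], centroids[c]) < radius:
                frozen_cnt[c] += 1
                for d in range(dim):
                    frozen_sum[c][d] += points[i][d]
            else:
                still_active.append(i)
        active = still_active
    return centroids, labels, active

pts = [(0, 0), (10, 10), (0, 1), (1, 0), (10, 11), (11, 10)]   # hypothetical data
centroids, labels, active = kmeans_pr(pts, k=2)
print(labels, active)   # [0, 1, 0, 0, 1, 1] [2, 3, 4, 5]
```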

Conclusion and Discussion (1/2)

In this presentation, we introduced

– Combinatorial optimization problems

– Several metaheuristic algorithms

– Performance enhancement methods

• MSGA, PREGA, and so on.

Future Work

– Developing an efficient algorithm that will not only eliminate all the redundant computations but also guarantee that the quality of the end results of “metaheuristics with PR” is preserved or even enhanced with respect to that of “metaheuristics by themselves.”

– Applying the proposed framework to other optimization problems and metaheuristics.

Conclusion and Discussion (2/2)

Future Work

– Applying the proposed framework to continuous optimization problems.

– Developing more efficient detection, compression, and removal methods.

Discussion


• E-mail: cwtsai87@gmail.com

• MSN: cwtsai87@yahoo.com.tw

• Web site: http://cwtsai.ee.ncku.edu.tw/

• Chun-Wei Tsai is currently a postdoctoral fellow in Electrical Engineering at National Cheng Kung University.

• His research interests include evolutionary computation, web

information retrieval, e-Learning, and data mining.

Framework for metaheuristics

Create the initial solutions s = {s1, s2, …, sn}
While the termination criterion is not met
    Transit s to s'
    Evaluate the objective function value of each solution s'i in s'
    Determine s
End

[Figure: two solutions s1 and s2 evolving over generations g = 1, 2, 3, …, n, annotated with their fitness values and selection probabilities, next to a plot of fitness against iteration.]

Initialization Methods (1/4)

In general, the initial solutions of metaheuristics are randomly generated, so the search may take a tremendous number of iterations, and thus a great deal of time, to converge.

– Sampling

– Dimension reduction

– Greedy method
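As one example of a greedy initialization method, an initial TSP tour can be built with the nearest-neighbor rule (always move to the closest unvisited city). This is a hypothetical Python sketch; the slides do not say which greedy rule is used.

```python
# Hypothetical sketch: nearest-neighbor construction of an initial TSP tour.

def nearest_neighbor_tour(dist, start=0):
    """Greedy initial tour: repeatedly move to the closest unvisited city."""
    n = len(dist)
    tour = [start]
    unvisited = set(range(n)) - {start}
    while unvisited:
        last = tour[-1]
        nxt = min(unvisited, key=lambda j: (dist[last][j], j))   # ties -> smaller index
        tour.append(nxt)
        unvisited.remove(nxt)
    return tour

square = [[0, 1, 2, 1],
          [1, 0, 1, 2],
          [2, 1, 0, 1],
          [1, 2, 1, 0]]   # hypothetical distances
print(nearest_neighbor_tour(square))   # [0, 1, 2, 3]
```

Seeding a population with such tours can shorten convergence, at the cost of diversity.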


Initialization Methods (2/4)


Initialization Methods (3/4)


Initialization Methods (4/4)

The refinement initialization method can provide a more stable solution and enhance the performance of metaheuristics.

The risk of using refinement initialization methods is that they may cause metaheuristics to fall into local optima.

[Figure: fitness against iteration, with average and total curves.]

Local Search Methods (1/2)

The local search methods play an important role in fine-tuning the solution found by metaheuristics.

Example: swapping the element 3 of the solution 1 3 8 5 6 7 4 9 2 0 with each of the other positions gives the neighbors

3 1 8 5 6 7 4 9 2 0
1 8 3 5 6 7 4 9 2 0
1 5 8 3 6 7 4 9 2 0
1 6 8 5 3 7 4 9 2 0
1 7 8 5 6 3 4 9 2 0
1 4 8 5 6 7 3 9 2 0
1 9 8 5 6 7 4 3 2 0
1 2 8 5 6 7 4 9 3 0
1 0 8 5 6 7 4 9 2 3
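The swap neighborhood above and a simple improvement loop can be sketched in Python. Assumptions of ours: the solution is a TSP tour, and the search accepts any improving swap until none is left (a basic swap-based local search, not 2-opt or n-opt). The distance matrix is hypothetical.

```python
# Hypothetical sketch: swap neighborhood and swap-based local search for a TSP tour.

def swap_neighbors(tour, i):
    """All tours obtained by swapping the element at position i with another position."""
    out = []
    for j in range(len(tour)):
        if j != i:
            t = list(tour)
            t[i], t[j] = t[j], t[i]
            out.append(t)
    return out

def tour_length(tour, dist):
    return sum(dist[tour[k]][tour[(k + 1) % len(tour)]] for k in range(len(tour)))

def swap_local_search(tour, dist):
    """Accept any improving swap; stop when no swap shortens the tour."""
    best = list(tour)
    best_len = tour_length(best, dist)
    improved = True
    while improved:
        improved = False
        for i in range(len(best)):
            for cand in swap_neighbors(best, i):
                L = tour_length(cand, dist)
                if L < best_len:
                    best, best_len, improved = cand, L, True
    return best, best_len

print(swap_neighbors([1, 3, 8, 5, 6, 7, 4, 9, 2, 0], 1)[0])   # [3, 1, 8, 5, 6, 7, 4, 9, 2, 0]
square = [[0, 1, 2, 1], [1, 0, 1, 2], [2, 1, 0, 1], [1, 2, 1, 0]]
print(swap_local_search([0, 2, 1, 3], square)[1])             # 4
```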

Local Search Methods (2/2)

We have to consider how to balance the metaheuristic and the local search.

Longer computation time may provide a better result, but there is no guarantee.

Hybrid Methods

Hybrid methods combine the strengths of different metaheuristic algorithms to enhance the performance of metaheuristics

– E.g., GA plays the role of global search while SA plays the role of local search.

– Again, we may have to balance the global and local search components of the hybrid algorithm.

Data Clustering Problem

– Partitioning the n patterns into k groups or clusters based on some similarity metric.

[Figure: vector quantization with k-means (image1, its codebook, and image2).]