Chapter 3

ILP

1

Three Generic Data Hazards

Inst I before inst j in in the program

• Read After Write (RAW)

InstrJ tries to read operand before InstrI writes it

I: add r1,r2,r3

J: sub r4,r1,r3

•

Caused by a “Dependence” (in compiler nomenclature). This hazard

results from an actual need for communication.

2

Three Generic Data Hazards

•

Write After Read (WAR)

InstrJ writes operand before InstrI reads it

I: sub r4,r1,r3

J: add r1,r2,r3

K: mul r6,r1,r7

•

Called an “anti-dependence” by compiler writers.

This results from reuse of the name “r1”.

•

Can’t happen in MIPS 5 stage pipeline because:

All instructions take 5 stages, and

Reads are always in stage 2, and

Writes are always in stage 5

3

Three Generic Data Hazards

•

Write After Write (WAW)

InstrJ writes operand before InstrI writes it.

I: sub r1,r4,r3

J: add r1,r2,r3

K: mul r6,r1,r7

•

•

Called an “output dependence” by compiler writers

This also results from the reuse of name “r1”.

Can’t happen in MIPS 5 stage pipeline because:

– All instructions take 5 stages, and

– Writes are always in stage 5

4

Branch Hazards

• Loop unrolling

• Branch prediction

–Static

–Dynamic

5

Static Branch Prediction

•Scheduling (reordering) code around delayed branch

• need to predict branch statically at compile time

• use profile information collected from earlier runs

•Behavior of branch is often bimodally distributed!

• Biased toward taken or not taken

•Effectiveness depend on

• frequency of branches and accuracy of the scheme

22%

18%

20%

15%

15%

12%

11%

12%

10%

9%

10%

4%

5%

6%

Integer

r

2c

o

p

dl

jd

FP

su

d

m

hy

dr

o2

ea

r

li

c

do

du

c

gc

eq

nt

ot

es

t

pr

es

so

m

pr

e

ss

0%

co

Integer benchmarks have

higher branch frequency

Misprediction Rate

25%

6

Dynamic Branch Prediction

• Why does prediction work?

• Underlying algorithm has regularities

• Data that is being operated on has regularities

•Is dynamic branch prediction better than static branch

prediction?

• Seems to be

• There are a small number of important branches in

programs which have dynamic behavior

Performance = ƒ(accuracy, cost of misprediction)

7

1-Bit Branch Prediction

•Branch History Table: Lower bits of PC address index table of

1-bit values

• Says whether or not branch taken last time

NT

0x40010100

0x40010104

for (i=0; i<100; i++) {

….

}

addi r10, r0, 100

addi r1, r1, r0

L1:

……

……

…

0x40010A04 addi r1, r1, 1

0x40010A08 bne r1, r10, L1

……

0x40010108

T

1-bit Branch

History Table

T

NT

T

:

:

T

NT

Prediction

8

1-Bit Bimodal Prediction (SimpleScalar Term)

• For each branch, keep track of what happened last time

and use that outcome as the prediction

• Change mind fast

9

1-Bit Branch Prediction

•What is the drawback of using lower bits of the PC?

• Different branches may have the same lower bit value

•What is the performance shortcome of 1-bit BHT?

• in a loop, 1-bit BHT will cause two mispredictions

• End of loop case, when it exits instead of looping as before

• First time through loop on next time through code, when it predicts

exit instead of looping

10

2-bit Saturating Up/Down Counter Predictor

•Solution: 2-bit scheme where change prediction only if get

misprediction twice

2-bit branch prediction

State diagram

11

2-Bit Bimodal Prediction (SimpleScalar Term)

• For each branch, maintain a 2-bit saturating counter:

if the branch is taken: counter = min(3,counter+1)

if the branch is not taken: counter = max(0,counter-1)

• If (counter >= 2), predict taken, else predict not taken

• Advantage: a few atypical branches will not influence the

prediction (a better measure of “the common case”)

• Can be easily extended to N-bits (in most processors, N=2)

12

Branch History Table

•Misprediction reasons:

• Wrong guess for that branch

• Got branch history of wrong branch when indexing the table

13

Branch History Table (4096-entry, 2-bits)

Branch intensive

benchmarks have higher

miss rate. How can we solve

this problem?

Increase the buffer size or

Increase the accuracy

14

Branch History Table (Increase the size?)

Need to focus on

increasing the

accuracy of the

scheme!

15

Correlated Branch Prediction

• Standard 2-bit predictor uses local

information

• Fails to look at the global picture

•Hypothesis: recent branches are

correlated; that is, behavior of recently

executed branches affects prediction

of current branch

16

Correlated Branch Prediction

• A shift register captures the local

path through the program

• For each unique path a predictor is

maintained

• Prediction is based on the behavior

history of each local path

• Shift register length determines

program region size

17

Correlated Branch Prediction

•Idea: record m most recently executed branches as taken or

not taken, and use that pattern to select the proper branch

history table

• In general, (m,n) predictor means record last m branches to select

between 2^m history tables each with n-bit counters

• Old 2-bit BHT is then a (0,2) predictor

If (aa == 2)

aa=0;

If (bb == 2)

bb = 0;

If (aa != bb)

do something;

18

Correlated Branch Prediction

Global Branch History: m-bit shift register keeping T/NT

status of last m branches.

19

Accuracy of Different Schemes

4096 Entries 2-bit BHT

Unlimited Entries 2-bit BHT

1024 Entries (2,2) BHT

18%

16%

14%

12%

11%

10%

8%

6%

6%

5%

6%

6%

5%

4%

4%

4,096 entries: 2-bits per entry

Unlimited entries: 2-bits/entry

li

eqntott

expresso

gcc

fpppp

matrix300

0%

spice

1%

0%

doducd

1%

tomcatv

2%

nasa7

Frequency of Mispredictions

20%

1,024 entries (2,2)

20

Tournament Predictors

• A local predictor might work well for some branches or

programs, while a global predictor might work well for others

• Provide one of each and maintain another predictor to

identify which predictor is best for each branch

Local

Predictor

Global

Predictor

Branch PC

M

U

X

Tournament

Predictor

21

Tournament Predictors

•Multilevel branch predictor

• Selector for the Global and Local predictors of correlating branch

prediction

•Use n-bit saturating counter to choose between predictors

•Usual choice between global and local predictors

22

Tournament Predictors

•Advantage of tournament predictor is ability to select the right predictor for

a particular branch

•A typical tournament predictor selects global predictor 40% of the time for

SPEC integer benchmarks

• AMD Opteron and Phenom use tournament style

23

Tournament Predictors (Intel Core i7)

• Based on predictors used in Core Due chip

• Combines three different predictors

• Two-bit

• Global history

• Loop exit predictor

• Uses a counter to predict the exact number of taken

branches (number of loop iterations) for a branch

that is detected as a loop branch

• Tournament: Tracks accuracy of each predictor

• Main problem of speculation:

• A mispredicted branch may lead to another branch

being mispredicted !

24

Branch Prediction

•Sophisticated Techniques:

• A “branch target buffer” to help us look up the destination

• Correlating predictors that base prediction on global behavior

and recently executed branches (e.g., prediction for a specific

branch instruction based on what happened in previous branches)

• Tournament predictors that use different types of prediction

strategies and keep track of which one is performing best.

• A “branch delay slot” which the compiler tries to fill with a useful

instruction (make the one cycle delay part of the ISA)

•Branch prediction is especially important because it enables

other more advanced pipelining techniques to be effective!

•Modern processors predict correctly 95% of the time!

25

Branch Target Buffers (BTB)

•Branch target calculation is costly and stalls the instruction

fetch.

•BTB stores PCs the same way as caches

•The PC of a branch is sent to the BTB

•When a match is found the corresponding Predicted PC is

returned

•If the branch was predicted taken, instruction fetch continues

at the returned predicted PC

26

Branch Target Buffers (BTB)

27

Pipeline without Branch Predictor

IF (br)

PC

Reg Read

Compare

Br-target

PC + 4

In the 5-stage pipeline, a branch completes in two cycles

If the branch went the wrong way, one incorrect instr is fetched

One stall cycle per incorrect branch

28

Pipeline with Branch Predictor

IF (br)

PC

Branch

Predictor

Reg Read

Compare

Br-target

29

Dynamic Vs. Static ILP

• Static ILP:

+ The compiler finds parallelism no extra hw

higher clock speeds and lower power

+ Compiler knows what is next better global schedule

- Compiler can not react to dynamic events (cache misses)

- Can not re-order instructions unless you provide

hardware and extra instructions to detect violations

(eats into the low complexity/power argument)

- Static branch prediction is poor even statically

scheduled processors use hardware branch predictors

30

Dynamic Scheduling

•Hardware rearranges instruction execution to reduce stalls

• Maintains data flow and exception behavior

•Advantages:

• Handles cases where dependences are unknown at

compile time

• Simplifies compiler

• Allows processor to tolerate unpredictable delays by

executing other code

• Cache miss delay

• Allows code compiled with one pipeline in mind to run

efficiently on a different pipeline

•Disadvantage:

• Complex hardware

31

Dynamic Scheduling

• Simple Pipelining technique:

• In-order instruction issue and execution

• If an instruction is stalled, no later instructions can

proceed

• Dependence between two closely spaced instructions

leads to hazard

• What if there are multiple functional units?

• Units could stay idle

• If instruction “j” depends on a long running instruction “i”

• All instructions after “j” stalls

DIV.D

ADD.D

SUB.D

F0,F2,F4

F10,F0,F8

F12,F8,F14

Can be eliminated by not

requiring instructions to

execute in-order

32

Idea

• Classic five stage pipeline

• Structural and data hazards could be checked during ID

• What do we need to allow us execute the SUB.D?

DIV.D

ADD.D

SUB.D

F0,F2,F4

F10,F0,F8

F12,F8,F14

•Separate ID process into two

• Check for hazards (Issue)

• Decode (read operands)

•In-order instruction issue (in program order)

• Begin execution as soon as its operands are available

• Out-of-order execution (out-of-order completion)

33

Out-of-order complications

•Introduces possibility of WAW, WAR hazards

• Do not exist in 5 stage pipeline

WAR

DIV.D

ADD.D

SUB.D

MUL.D

F0,F2,F4

F6,F0,F8

F8,F10,F14

F6,F10,F8

WAW

• Solution

• Register renaming

34

Out-of-order complications

•Handling exceptions

• Out-of-order completion must preserve exception

behavior

• Exactly those exceptions that would arise if the program

was executed in strict program order actually do arise

• Preserve exception behavior by:

• Ensuring that no instruction can generate an

exception until the processor knows that the

instruction raising the exception will be executed

35

Splitting ID Stage

•Instruction Fetch:

• Fetch into register or queue

•Instruction Decode

• Issue:

• Decode instructions

• Check for structural hazards

• Read operands

• Wait until no data hazards

• Then read operands

•Execute

• Just as 5-stage pipeline execute stage

• May take multiple cycles

36

Hardware requirement

•Pipeline must allow multiple instructions to be in execution

stage

• Multiple functional units

•Instructions pass through issue stage in order (in-order issue)

•Instructions can be stalled and bypass each other in the

second stage (read operands)

•Instructions enter execution stage out-of-order

37

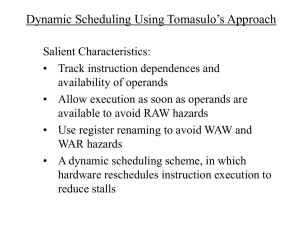

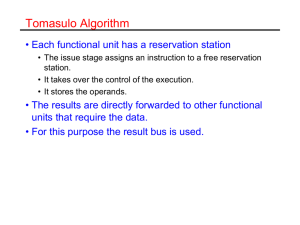

Dynamic Scheduling using Tomasulo’s Method

•Sophisticated scheme to allow out-of-order execution

•Objective is to minimize RAW hazards

• Introduces register renaming to minimize WAR and

WAW hazards

•Many variations of this technique are used in modern

processors

•Common features:

• Tracking instruction dependencies to allow as soon as

(only when) operands are available (resolves RAW)

• Renaming destination registers (WAR, WAW)

38

Dynamic Scheduling using Tomasulo’s Method

•Register renaming:

true

anti

DIV.D

ADD.D

S.D

SUB.D

MUL.D

F0,F2,F4

F6,F0,F8

F6,0(R1)

F8,F10,F14

F6,F10,F8

DIV.D

ADD.D

S.D

SUB.D

MUL.D

F0,F2,F4

S,F0,F8

S,0(R1)

T,F10,F14

F6,F10,T

output

Finding any use of F8 requires sophisticated compiler or hardware

39

- later use of F8!

Dynamic Scheduling using Tomasulo’s Method

1

FIFO

2

3

40

Steps of an instruction

1.

•

Issue —get instruction from instruction queue

If a reservation station is free

• issue instr & send operands to reservation station

• If operand not available

• Track functional units producing that will produce

• This step renames registers!

• Else

• Structural hazard, stall

41

Steps of an instruction

2.

Execution —operate on operands (EX)

When both operands ready then execute; if not ready,

watch CDB for result; when both in reservation station,

execute; checks RAW (sometimes called “issue”)

Note: several instructions may become ready for the same FU at the

same time : arbitrary choice for FUs,

Loads and stores complicated! Need to maintain the

program order

42

4 Steps of Speculative Tomasulo

3.

Write result —finish execution (WB)

Write on Common Data Bus to all awaiting FUs

mark reservation station available.

Load waits for memory unit to be available

Store waits for operand and then memory to be available

43

Reservation Station

•Op: Operation to perform in the unit (e.g., + or –)

•Vj, Vk: Value of Source operands

• For loads Vk is used to hold the offset

•Qj, Qk: Reservation stations producing source registers

(value to be written)

Note: Qj,Qk=0 => ready in Vj, Vk

• Busy: Indicates reservation station or FU is busy

• A : Hold information for the memory address calculation for

load/store.

• Initially, immediate value is stored in A,

• After address calculation: effective address

•Register result status (Qi)—Indicates the number of the

reservation station that contains the operation whose results

should be stored in to this register.

44

• Blank when no pending instructions to write that register.

Refer to D. Patterson’s

Tomasulo Slides

45

Loop Unrolling

•Determine loop unrolling useful by finding that loop iterations

were independent

• Determine address offsets for different loads/stores

• Increases program size

•Use different registers to avoid unnecessary constraints

forced by using same registers for different computations

• Stress on registers

•Eliminate the extra test and branch instructions and adjust

the loop termination and iteration code

46

Loop Unrolling

•If a loop only has dependences within an iteration, the loop

is considered parallel multiple iterations can be executed

together so long as order within an iteration is preserved

• If a loop has dependences across iterations, it is not parallel

and these dependences are referred to as “loop-carried”

47

Example

For (i=1000; i>0; i=i-1)

x[i] = x[i] + s;

For (i=1; i<=100; i=i+1) {

A[i+1] = A[i] + C[i];

B[i+1] = B[i] + A[i+1];

}

S1

S2

For (i=1; i<=100; i=i+1) {

A[i] = A[i] + B[i];

B[i+1] = C[i] + D[i];

}

S1

S2

For (i=1000; i>0; i=i-1)

x[i] = x[i-3] + s;

S1

48

Example

For (i=1000; i>0; i=i-1)

x[i] = x[i] + s;

No dependences

For (i=1; i<=100; i=i+1) {

A[i+1] = A[i] + C[i];

B[i+1] = B[i] + A[i+1];

}

S1

S2

S2 depends on S1 in the same iteration

S1 depends on S1 from prev iteration

S2 depends on S2 from prev iteration

For (i=1; i<=100; i=i+1) {

A[i] = A[i] + B[i];

B[i+1] = C[i] + D[i];

}

S1

S2

S1 depends on S2 from prev iteration

For (i=1000; i>0; i=i-1)

x[i] = x[i-3] + s;

S1

S1 depends on S1 from 3 prev iterations

Referred to as a recursion

Dependence distance 3; limited parallelism

49

Constructing Parallel Loops

If loop-carried dependences are not cyclic (S1 depending on S1 is cyclic),

loops can be restructured to be parallel

For (i=1; i<=100; i=i+1) {

A[i] = A[i] + B[i];

B[i+1] = C[i] + D[i];

}

S1

S2

S1 depends on S2 from prev iteration

A[1] = A[1] + B[1];

For (i=1; i<=99; i=i+1) {

B[i+1] = C[i] + D[i];

A[i+1] = A[i+1] + B[i+1];

}

S3

S4

B[101] = C[100] + D[100];

S4 depends on S3 of same iteration

Loop unrolling reduces impact of branches on pipeline;

another way is branch prediction

50

Dynamic Scheduling using Tomasulo’s Method

•Register renaming is provided by reservation stations

• Buffer the operands of instructions waiting to issue

•Reservation station fetches and buffers an operand as soon

as it is available

• Eliminates the need to get the operand from a register

•Pending instructions designate the reservation station that

will provide their input (register renaming)

•Successive writes to a register: last one is used to update

•There can be more reservations stations than real registers!

• Name dependencies that can’t be eliminated by a compiler , now

can be eliminated by hardware

51

Dynamic Scheduling using Tomasulo’s Method

•Data structures attached to

• reservation stations

• Load/store buffers: acting like reservation station (hold data or

address coming from or going to memory)

• Register file

•Once an instruction has issued and is waiting for source

operand

• Refers to the operand by reservation station number where the

instruction that will generate the value has been assigned

• If reservation station number is 0

• Operand is already in the register file

•1 cycle latency between source and result

• Effective latency between producing instruction and consuming

instruction is at least 1 cycle longer than the latency of the function

52

unit producing the result

Dynamic Scheduling using Tomasulo’s Method

•Loads and Stores

• If they access different address

• Can be done out of order safely

• Else

• Load and then Store program order

• WAR

• Store and then Load program order

• RAW

• Store and then Store

• WAW

•To detect these hazards

• Effective address calculation must be in program order

• Check the “A” field in Load/Store queue before issuing the

Load/Store instruction

53

Dynamic Scheduling using Tomasulo’s Method

•Advantage of distributed reservation stations and CDB

• If multiple instructions are waiting for the same result

• Instructions released concurrently by the broadcast of

the result through CDB

• Centralized register file: units read from register file when the

bus is available

•Advantage of using reservation station number instead of

register name

• eliminates WAR, WAW

54

Dynamic Scheduling using Tomasulo’s Method

•Disadvantage

• Complex hardware

• Can achieve high performance if branches are

predicted accurately

• <1 cycle per instruction with a dual issue!

• Reservation station must be associative

• Single CDB!

55

Hardware-Based Speculation

•Branch prediction reduces the stalls attributable to branches

• For a processor executing multiple instructions

• Just predicting branch is not enough

• Multiple issue processor may execute a branch every

clock cycle

•Exploiting parallelism requires that we overcome the

limitation of control dependence

56

Hardware-Based Speculation

•Greater ILP: Overcome control dependence by hardware

speculating on outcome of branches and executing program

as if guesses were correct

• extension over branch prediction with dynamic scheduling

• Speculation fetch, issue, and execute instructions as if branch

predictions were always correct

• Dynamic scheduling only fetches and issues such instructions

•Essentially a data flow execution model: Operations execute

as soon as their operands are available

57

Hardware-Based Speculation

•3 components of HW-based speculation:

• Dynamic branch prediction to choose which instructions

to execute

• Speculation to allow execution of instructions before

control dependences are resolved

• ability to undo effects of incorrectly speculated sequence

• Dynamic scheduling to deal with scheduling of different

combinations of basic blocks

• without speculation only partially overlaps basic blocks

• requires that a branch be resolved before actually executing

any instructions in the successor basic block.

58

Hardware-Based Speculation in Tomasulo

•The key idea

• allow instructions to execute out of order

• force instructions to commit in order

• prevent any irrevocable action (such as updating state or

taking an exception) until an instruction commits.

•Hence:

• Must separate execution from allowing instruction to

finish or “commit”

• instructions may finish execution considerably before

they are ready to commit.

•This additional step called instruction commit

59

Hardware-Based Speculation in Tomasulo

•When an instruction is no longer speculative, allow it to

update the register file or memory

•Requires additional set of buffers to hold results of

instructions that have finished execution but have not

committed : reorder buffer (ROB)

•This reorder buffer (ROB) is also used to pass results among

instructions that may be speculated

60

Reorder Buffer

•In Tomasulo’s algorithm, once an instruction writes its result,

any subsequently issued instructions will find result in the

register file

•With speculation, the register file is not updated until the

instruction commits

• (we know definitively that the instruction should execute)

•Thus, the ROB supplies operands in interval between

completion of instruction execution and instruction commit

• ROB is a source of operands for instructions, just as reservation

stations (RS) provide operands in Tomasulo’s algorithm

• ROB extends architectured registers like RS

61

Reorder Buffer Structure (Four fields)

•instruction type field

• Indicates whether the instruction is a branch (and has no destination

result), a store (which has a memory address destination), or a

register operation (ALU operation or load, which has register

destinations).

•destination field

• supplies the register number (for loads and ALU operations) or the

memory address (for stores) where the instruction result should be

written.

•value field

• hold the value of the instruction result until the instruction commits.

•ready field

• indicates that the instruction has completed execution, and the

62

value is ready.

Reorder Buffer Operation

•Holds instructions in FIFO order, exactly as issued

•When instructions complete, results placed into ROB

• Supplies operands to other instruction between execution complete

& commit => more registers like RS

• Tag results with ROB buffer number instead of reservation station

•Instructions commit =>values at head of ROB placed in

registers

Reorder

•As a result, easy to undo

Buffer

FP

speculated instructions

Op

on mispredicted branches

Queue

FP Regs

or on exceptions

Commit path

Res Stations

FP Adder

Res Stations

FP Adder

63

Where is

the store

queue?

64

4 Steps of Speculative Tomasulo

1- Issue —get instruction from instruction queue

If reservation station and reorder buffer slot free, issue instr

& send operands (if available: either from ROB or FP

registers) to reservation station & send reorder buffer no.

allocated for result to reservation station (tag the result when

it is placed on CDB)

65

4 Steps of Speculative Tomasulo

2. Execution —operate on operands (EX)

When both operands ready then execute; if not ready, watch

CDB for result; when both in reservation station, execute;

checks RAW

Loads still require 2 step process, instructions may take multiple

cycles,, stores is only effective address calculation (need Rs),

66

4 Steps of Speculative Tomasulo

3. Write result —finish execution (WB)

Write on Common Data Bus to all awaiting FUs

& reorder buffer; mark reservation station available.

If the value to be stored is available, it is written into the Value field

of the ROB entry for the store.

If the value to be stored is not available yet, the CDB must be

monitored until that value is broadcast, at which time the Value field

of the ROB entry of the store is updated.

67

4 Steps of Speculative Tomasulo

4. Commit

a) when an instruction reaches the head of the ROB and its

result is present in the buffer;

• update the register with the result and remove the instruction from

the ROB.

b) Committing a store is similar except that memory is

updated rather than a result register.

c) If a branch with incorrect prediction reaches the head ROB

• it indicates that the speculation was wrong.

• ROB is flushed and execution is restarted at the correct successor

of the branch.

d) If the branch was correctly predicted, the branch is finished.

•Once an instruction commits, its entry in the ROB is

reclaimed. If the ROB fills, we simply stop issuing instructions

68

until an entry is made free.

Tomasulo With Reorder buffer:

Dest. Value

Instruction

FP Op

Queue

Done?

ROB7

ROB6

Newest

ROB5

Reorder Buffer

ROB4

ROB3

ROB2

LD

F0,16(R2)

ADDD F10,F4,F0

DIVD F2,F10,F6

F0

LD F0,16(R2)

Registers

Dest

FP adders

ROB1

To

Memory

from

Memory

Dest

Reservation

Stations

N

Dest

1 10+R2

FP multipliers

Oldest

Tomasulo With Reorder buffer:

Dest. Value

Instruction

Done?

FP Op

Queue

ROB7

ROB6

Newest

ROB5

Reorder Buffer

ROB4

ROB3

LD

F0,16(R2)

ADDD F10,F4,F0

DIVD F2,F10,F6

F10

F0

ADDD F10,F4,F0

LD F0,16(R2)

Registers

Dest

2 ADDD R(F4),ROB1

FP adders

ROB2

ROB1

Oldest

To

Memory

from

Memory

Dest

Reservation

Stations

N

N

Dest

1 10+R2

FP multipliers

70

Tomasulo With Reorder buffer:

Dest. Value

Instruction

Done?

FP Op

Queue

ROB7

ROB6

ROB5

Reorder Buffer

LD

F0,16(R2)

ADDD F10,F4,F0

DIVD F2,F10,F6

ROB4

F2

F10

F0

DIVD F2,F10,F6

ADDD F10,F4,F0

LD F0,16(R2)

Registers

Dest

2 ADDD R(F4),ROB1

FP adders

Newest

ROB3

ROB2

ROB1

Oldest

To

Memory

Dest

3 DIVD ROB2,R(F6)

Reservation

Stations

N

N

N

from

Memory

Dest

1 10+R2

FP multipliers

71

Avoiding Memory Hazards

• WAW and WAR hazards through memory are

eliminated with speculation because actual

updating of memory occurs in order, when a

store is at head of the ROB, and hence, no

earlier loads or stores can still be pending

• RAW hazards through memory are avoided by

two restrictions:

1. not allowing a load to initiate the second step of its execution

if any active ROB entry occupied by a store has a Destination

field that matches the value of the Addr. field of the load, and

2. maintaining the program order for the computation of an

effective address of a load with respect to all earlier stores.

• these restrictions ensure that any load that

accesses a memory location written to by an

earlier store cannot perform the memory access

until the store has written the data

72

Multi-Issue - Getting CPI Below 1

•

•

CPI ≥ 1 if issue only 1 instruction every clock cycle

Multiple-issue processors come in 3 flavors:

1. statically-scheduled superscalar processors,

2. dynamically-scheduled superscalar processors, and

3. VLIW (very long instruction word) processors (static

sched.)

•

The 2 types of superscalar processors issue

varying numbers of instructions per clock

– use in-order execution if they are statically scheduled, or

– out-of-order execution if they are dynamically scheduled

•

VLIW processors, in contrast, issue a fixed number

of instructions formatted either as one large

instruction or as a fixed instruction packet with the

parallelism among instructions explicitly indicated

by the instruction (Intel/HP Itanium)

73

VLIW: Very Large Instruction Word

• Each “instruction” has explicit coding for multiple

operations

– In IA-64, grouping called a “packet”

– In Transmeta, grouping called a “molecule” (with “atoms” as ops)

– Moderate LIW also used in Cray/Tera MTA-2

• Tradeoff instruction space for simple decoding

– The long instruction word has room for many operations

– By definition, all the operations the compiler puts in one long

instruction word are independent => can execute in parallel

– E.g., 2 integer operations, 2 FP ops, 2 Memory refs, 1 branch

» 16 to 24 bits per field => 7*16 or 112 bits to 7*24 or 168 bits wide

– Need compiling techniques to schedule across several branches

(called “trace scheduling”)

74

Thrice Unrolled Loop that Eliminates

Stalls for Scalar Pipeline Computers

1 Loop:

2

3

4

5

6

7

8

9

10

11

L.D

L.D

L.D

ADD.D

ADD.D

ADD.D

S.D

S.D

DSUBUI

BNEZ

S.D

F0,0(R1)

F6,-8(R1)

F10,-16(R1)

F4,F0,F2

F8,F6,F2

F12,F10,F2

0(R1),F4

-8(R1),F8

R1,R1,#24

R1,LOOP

8(R1),F12

Minimum times between

pairs of instructions:

L.D to ADD.D: 1 Cycle

ADD.D to S.D: 2 Cycles

A single branch delay

slot follows the BNEZ.

; 8-24 = -16

11 clock cycles, or 3.67 per iteration

75

Loop Unrolling in VLIW

L.D to ADD.D: +1 Cycle

ADD.D to S.D: +2 Cycles

Memory

reference 1

Memory

reference 2

FP

operation 1

1 Loop:

2

3

4

5

6

7

8

9

10

11

FP

op. 2

L.D

L.D

L.D

ADD.D

ADD.D

ADD.D

S.D

S.D

DSUBUI

BNEZ

S.D

Int. op/

branch

F0,0(R1)

F6,-8(R1)

F10,-16(R1)

F4,F0,F2

F8,F6,F2

F12,F10,F2

0(R1),F4

-8(R1),F8

R1,R1,#24

R1,LOOP

8(R1),F12

Clock

76

Loop Unrolling in VLIW

L.D to ADD.D: +1 Cycle

ADD.D to S.D: +2 Cycles

Memory

reference 1

Memory

reference 2

FP

operation 1

1 Loop:

2

3

4

5

6

7

8

9

10

11

FP

op. 2

L.D

L.D

L.D

ADD.D

ADD.D

ADD.D

S.D

S.D

DSUBUI

BNEZ

S.D

Int. op/

branch

F0,0(R1)

F6,-8(R1)

F10,-16(R1)

F4,F0,F2

F8,F6,F2

F12,F10,F2

0(R1),F4

-8(R1),F8

R1,R1,#24

R1,LOOP

8(R1),F12

Clock

L.D F0,0(R1)

1

S.D 0(R1),F4

2

3

4

5

6

ADD.D F4,F0,F2

77

Loop Unrolling in VLIW

L.D to ADD.D: +1 Cycle

ADD.D to S.D: +2 Cycles

Memory

reference 1

Memory

reference 2

L.D F0,0(R1)

L.D F6,-8(R1)

FP

operation 1

L.D F10,-16(R1) L.D F14,-24(R1)

L.D F18,-32(R1) L.D F22,-40(R1) ADD.D

L.D F26,-48(R1)

ADD.D

ADD.D

S.D 0(R1),F4

S.D -8(R1),F8

ADD.D

S.D -16(R1),F12 S.D -24(R1),F16

S.D -32(R1),F20 S.D -40(R1),F24

S.D 8(R1),F28

1 Loop:

2

3

4

5

6

7

8

9

10

11

FP

op. 2

L.D

L.D

L.D

ADD.D

ADD.D

ADD.D

S.D

S.D

DSUBUI

BNEZ

S.D

Int. op/

branch

F0,0(R1)

F6,-8(R1)

F10,-16(R1)

F4,F0,F2

F8,F6,F2

F12,F10,F2

0(R1),F4

-8(R1),F8

R1,R1,#24

R1,LOOP

8(R1),F12

Clock

1

2

F4,F0,F2

ADD.D F8,F6,F2

3

F12,F10,F2 ADD.D F16,F14,F2

4

F20,F18,F2 ADD.D F24,F22,F2

5

F28,F26,F2

6

7

DSUBUI R1,R1,#56 8

BNEZ R1,LOOP

9

Unrolled 7 times to avoid stall delays from ADD.D to S.D

7 results in 9 clocks, or 1.3 clocks per iteration (2.8X: 1.3 vs 3.67)

Average: 2.5 ops per clock (23 ops in 45 slots), 51% efficiency

Note: 8, not -48, after DSUBUI R1,R1,#56 - which may be out of place. See next slide.

Note: We needed more registers in VLIW (used 15 pairs vs. 6 in SuperScalar)

78

Problems with 1st Generation VLIW

• Increase in code size

– generating enough operations in a straight-line code fragment

requires ambitiously unrolling loops

– whenever VLIW instructions are not full, unused functional

units translate to wasted bits in instruction encoding

• Operated in lock-step; no hazard detection HW

– a stall in any functional unit pipeline caused entire processor

to stall, since all functional units must be kept synchronized

– Compiler might predict function unit stalls, but cache stalls

are hard to predict

• Binary code incompatibility

– Pure VLIW => different numbers of functional units and unit

latencies require different versions of the code

79

Multiple Issue Processors

• Exploiting ILP

– Unrolling simple loops

– More importantly, able to exploit parallelism in a less

structured code size

• Modern Processors:

– Multiple Issue

– Dynamic Scheduling

– Speculation

80

Multiple Issue, Dynamic, Speculative

Processors

• How do you issue two instructions concurrently?

– What happens at the reservation station if two instructions issued

concurrently have true dependency?

– Solution 1:

» Issue first during first half and Issue second instruction during second

half of the clock cycle

– Problem:

» Can we issue 4 instructions?

– Solution 2:

» Pipeline and widen the issue logic

» Make instruction issue take multiple clock cycles!

– Problem:

» Can not pipeline indefinitely, new instructions issued every clock cycle

» Must be able to assign reservation station

» Dependent instruction that is being used should be able to refer to the

correct reservation stations for its operands

• Issue step is the bottleneck in dynamically scheduled

superscalars!

81

Intel/HP IA-64 “Explicitly Parallel

Instruction Computer (EPIC)”

• IA-64: instruction set architecture – 64 bits per integer

• 128 64-bit integer regs + 128 82-bit floating point regs

– Not separate register files per functional unit as in old VLIW

• Hardware checks dependencies

(interlocks => binary compatibility over time)

• Itanium™ was first implementation (2001)

– Highly parallel and deeply pipelined hardware at 800Mhz

– 6-wide, 10-stage pipeline at 800Mhz on 0.18 µ process

• Itanium 2™ is name of 2nd implementation (2005)

– 6-wide, 8-stage pipeline at 1666Mhz on 0.13 µ process

– Caches: 32 KB I, 32 KB D, 128 KB L2I, 128 KB L2D, 9216 KB L3

82

Speculation vs. Dynamic

Scheduling

L.D

L.D

MUL.D

SUB.D

DIV.D

ADD.D

F6, 32(R2)

F2,44(R3)

F0,F2,F4

F8,F6,F2

F10,F0,F6

F6,F8,F2

• no instruction after the earliest uncompleted instruction

(MUL.D) is allowed to complete. In contrast, in dynamic

scheduling fast instructions (SUB.D and ADD.D) have also

completed.

• ROB can dynamically execute code while maintaining a

precise interrupt model.

• if MUL.D caused an interrupt, we could simply wait until it reached

the head of the ROB and take the interrupt, flushing any other

pending instructions from the ROB. Because instruction commit

happens in order, this yields a precise exception.

• By contrast, in Tomasulo’s algorithm, the SUB.D and ADD.D

completed before the MUL.D raised the exception. F8 and F6 could

be overwritten, and the interrupt would be imprecise.

83

ARM Cortex-A8 and Intel Core i7

• A8:

•

•

•I7:

•

•

•

Multiple issue

iPad, Motorola Droid, iPhones

Multiple issue

high end dynamically scheduled speculative

High-end desktops, server

84

ARM Cortex-A8

• A8 Design goal: low power, reasonably high clock rate

• Dual-issue

• Statically scheduled superscalar

• Dynamic issue detection

• Issue one or two instructions per clock (in-order)

• 13 stage pipeline

• Fully bypassing

• Dynamic branch predictor

• 512-entry, 2-way set associative branch target buffer

• 4K-entry global history buffer

• If branch target buffer misses

• Prediction through global history buffer

• 8-entry return address stack

• I7: aggressive 4-issue dynamically scheduled speculative85

pipeline

ARM Cortex-A8

The basic structure of the A8 pipeline is 13 stages. Three cycles are used for instruction fetch and four for

instruction decode, in addition to a five-cycle integer pipeline. This yields a 13-cycle branch misprediction

penalty. The instruction fetch unit tries to keep the 12-entry instruction queue filled.

86

ARM Cortex-A8

The five-stage instruction decode of the A8. In the first stage, a PC produced by the fetch unit (either from the

branch target buffer or the PC incrementer) is used to retrieve an 8-byte block from the cache. Up to two

instructions are decoded and placed into the decode queue; if neither instruction is a branch, the PC is

incremented for the next fetch. Once in the decode queue, the scoreboard logic decides when the instructions can

issue. In the issue, the register operands are read; recall that in a simple scoreboard, the operands always come

from the registers. The register operands and opcode are sent to the instruction execution portion of the pipeline.

87

ARM Cortex-A8

The five-stage instruction decode of the A8. Multiply operations are always performed in ALU pipeline 0.

88

ARM Cortex-A8

Figure 3.39 The estimated composition of the CPI on the ARM A8 shows that pipeline stalls are the primary addition to

the base CPI. eon deserves some special mention, as it does integer-based graphics calculations (ray tracing) and has

very few cache misses. It is computationally intensive with heavy use of multiples, and the single multiply pipeline

becomes a major bottleneck. This estimate is obtained by using the L1 and L2 miss rates and penalties to compute the

L1 and L2 generated stalls per instruction. These are subtracted from the CPI measured by a detailed simulator to89

obtain the pipeline stalls. Pipeline stalls include all three hazards plus minor effects such as way misprediction.

ARM Cortex-A8 vs A9

A9:

Issue 2 instructions/clk

Dynamic scheduling

Speculation

Figure 3.40 The performance ratio for the A9 compared to the A8, both using a 1 GHz clock and the same size caches for

L1 and L2, shows that the A9 is about 1.28 times faster. Both runs use a 32 KB primary cache and a 1 MB secondary

cache, which is 8-way set associative for the A8 and 16-way for the A9. The block sizes in the caches are 64 bytes for the

A8 and 32 bytes for the A9. As mentioned in the caption of Figure 3.39, eon makes intensive use of integer multiply, and

the combination of dynamic scheduling and a faster multiply pipeline significantly improves performance on the A9.

twolf experiences a small slowdown, likely due to the fact that its cache behavior is worse with the smaller L1 block size of

the A9.

90

Intel Core i7

• The total pipeline depth is 14

stages.

• There are 48 load and 32 store

buffers.

• The six independent functional units

can each begin execution of a

ready micro-op in the same cycle.

91

Intel Core i7

• Instruction Fetch:

• Multilevel branch target buffer

• Return address stack (function

return)

• Fetch 16 bytes from instruction

cache

• 16-bytes in predecode instruction

buffer

• Macro-op fusion: compare

followed by branch fused into

one instruction

• Break 16 bytes into instructions

• Place into 18-entry queue

92

Intel Core i7

• Micro-op decode: translate x86

instructions into micro-ops (directly

executable by the pipeline)

• Generate up to 4 microops/cycle

• Place into 28-entry buffer

• Micro-op buffer:

• loop stream detection:

• Small sequence of

instructions in a loop (<28

instructions)

• Eliminate fetch, decode

• Microfusion

• Fuse load/ALU, ALU/store

pairs

• Issue to single reservation

station

93

Intel Core i7 vs. Atom 230 (45nm technology)

Intel i7 920

ARM A8

Intel Atom 230

4-cores each with FP 1 core, no FP

1 core, with FP

Clock rate

2.66GHz

1GHz

1.66GHz

Power

130W

2W

4W

Cache

3-level,

all 4-way,

128 I, 64 D, 512 L2

1-level

Fully associative

32 I, 32 D

2-level

All 4-way

16 I, 16 D, 64 L2

Pipeline

4ops/cycle

2ops/cycle

2 ops/cycle

Speculative, OOO

In-order, dynamic

issue

In-order

Dynamic issue

Two-level

Two-level

512-entry BTB

4K global history

8-entry return

Two-level

Branch pred

94

Intel Core i7 vs. Atom 230 (45nm technology)

Figure 3.45 The relative performance and energy efficiency for a set of single-threaded benchmarks shows the i7 920 is 4 to

over 10 times faster than the Atom 230 but that it is about 2 times less power efficient on average! Performance is shown in

the columns as i7 relative to Atom, which is execution time (i7)/execution time (Atom). Energy is shown with the line as

Energy (Atom)/Energy (i7). The i7 never beats the Atom in energy efficiency, although it is essentially as good on four

benchmarks, three of which are floating point. The data shown here were collected by Esmaeilzadeh et al. [2011]. The SPEC

benchmarks were compiled with optimization on using the standard Intel compiler, while the Java benchmarks use the Sun

(Oracle) Hotspot Java VM. Only one core is active on the i7, and the rest are in deep power saving mode. Turbo Boost is

used on the i7, which increases its performance advantage but slightly decreases its relative energy efficiency.

Copyright © 2011, Elsevier Inc. All

rights Reserved.

Improving Performance

• Techniques to increase performance:

pipelining

improves clock speed

increases number of in-flight instructions

hazard/stall elimination

branch prediction

register renaming

out-of-order execution

bypassing

increased pipeline bandwidth

96

Deep Pipelining

• Increases the number of in-flight instructions

• Decreases the gap between successive independent

instructions

• Increases the gap between dependent instructions

• Depending on the ILP in a program, there is an optimal

pipeline depth

• Tough to pipeline some structures; increases the cost

of bypassing

97

Increasing Width

• Difficult to find more than four independent instructions

• Difficult to fetch more than six instructions (else, must

predict multiple branches)

• Increases the number of ports per structure

98

Reducing Stalls in Fetch

• Better branch prediction

novel ways to index/update and avoid aliasing

cascading branch predictors

• Trace cache

stores instructions in the common order of execution,

not in sequential order

in Intel processors, the trace cache stores pre-decoded

instructions

99

Reducing Stalls in Rename/Regfile

• Larger ROB/register file/issue queue

• Virtual physical registers: assign virtual register names to

instructions, but assign a physical register only when the

value is made available

• Runahead: while a long instruction waits, let a thread run

ahead to prefetch (this thread can deallocate resources

more aggressively than a processor supporting precise

execution)

• Two-level register files: values being kept around in the

register file for precise exceptions can be moved to 2nd level

100

Performance beyond single thread ILP

•There can be much higher natural parallelism in some

applications (e.g., Database or Scientific codes)

•Explicit Thread Level Parallelism or Data Level Parallelism

•Thread: process with own instructions and data

• thread may be a process part of a parallel program of

multiple processes, or it may be an independent

program

• Each thread has all the state (instructions, data, PC,

register state, and so on) necessary to allow it to

execute

•Data Level Parallelism: Perform identical operations on data,

and lots of data

101

Thread Level Parallelism (TLP)

•ILP exploits implicit parallel operations within a loop or

straight-line code segment

•TLP explicitly represented by the use of multiple threads of

execution that are inherently parallel

•Goal: Use multiple instruction streams to improve

• Throughput of computers that run many programs

• Execution time of multi-threaded programs

•TLP could be more cost-effective to exploit than ILP

102

Thread-Level Parallelism

• Motivation:

a single thread leaves a processor under-utilized

for most of the time

by doubling processor area, single thread performance

barely improves

• Strategies for thread-level parallelism:

multiple threads share the same large processor

reduces under-utilization, efficient resource allocation

Simultaneous Multi-Threading (SMT)

each thread executes on its own mini processor

simple design, low interference between threads

Chip Multi-Processing (CMP)

103

New Approach: Mulithreaded Execution

•Multithreading: multiple threads to share the functional units

of 1 processor via overlapping

• processor must duplicate independent state of each thread e.g., a

separate copy of register file, a separate PC, and for running

independent programs, a separate page table

• memory shared through the virtual memory mechanisms, which

already support multiple processes

• HW for fast thread switch; much faster than full process switch

~100s to 1000s of clocks

•When switch?

• Alternate instruction per thread (fine grain)

• When a thread is stalled, perhaps for a cache miss, another thread

can be executed (coarse grain)

104

Fine-Grained Multithreading

•Switches between threads on each instruction, causing the execution of

multiples threads to be interleaved

•Usually done in a round-robin fashion, skipping any stalled threads

•CPU must be able to switch threads every clock

•Advantage is it can hide both short and long stalls, since instructions from

other threads executed when one thread stalls

•Disadvantage is it slows down execution of individual threads, since a

thread ready to execute without stalls will be delayed by instructions from

other threads

•Used on Sun’s Niagara

105

Coarse-Grained Multithreading

•Switches threads only on costly stalls, such as L2 cache misses

•Advantages

• Relieves need to have very fast thread-switching

• Doesn’t slow down thread, since instructions from other threads

issued only when the thread encounters a costly stall

•Disadvantage is hard to overcome throughput losses from shorter stalls,

due to pipeline start-up costs

• Since CPU issues instructions from 1 thread, when a stall occurs,

the pipeline must be emptied or frozen

• New thread must fill pipeline before instructions can complete

•Because of this start-up overhead, coarse-grained multithreading is better

for reducing penalty of high cost stalls, where pipeline refill << stall time

•Used in IBM AS/400

106

M = Load/Store, FX = Fixed Point, FP = Floating Point, BR = Branch, CC = Condition Codes

Simultaneous Multi-threading ...

One thread, 8 units

Cycle M M FX FX FP FP BR CC

Two threads, 8 units

Cycle M M FX FX FP FP BR CC

1

1

2

2

3

3

4

4

5

5

6

6

7

7

8

8

9

9

107

Simultaneous Multithreading (SMT)

•Simultaneous multithreading (SMT): insight that dynamically

scheduled processor already has many HW mechanisms to

support multithreading

• Large set of virtual registers that can be used to hold the register

sets of independent threads

• Register renaming provides unique register identifiers, so

instructions from multiple threads can be mixed in datapath without

confusing sources and destinations across threads

• Out-of-order completion allows the threads to execute out of order,

and get better utilization of the HW

•Just adding a per thread renaming table and keeping

separate PCs

• Independent commitment can be supported by logically keeping a

separate reorder buffer for each thread

108

Time (processor cycle)

Multithreaded Categories

Superscalar

Fine-Grained Coarse-Grained

Thread 1

Thread 2

Multiprocessing

Thread 3

Thread 4

Simultaneous

Multithreading

Thread 5

Idle slot

109

Head to Head ILP competition

Processor

Micro architecture

Fetch /

Issue /

Execute

FU

Clock

Rate

(GHz)

Transis

-tors

Die size

Power

Intel

Pentium

4

Extreme

AMD

Athlon 64

FX-57

IBM

Power5

(1 CPU

only)

Intel

Itanium 2

Speculative

dynamically

scheduled; deeply

pipelined; SMT

Speculative

dynamically

scheduled

Speculative

dynamically

scheduled; SMT;

2 CPU cores/chip

Statically

scheduled

VLIW-style

3/3/4

7 int.

1 FP

3.8

125 M

122

mm2

115

W

3/3/4

6 int.

3 FP

2.8

8/4/8

6 int.

2 FP

1.9

6/5/11

9 int.

2 FP

1.6

114 M 104

115

W

mm2

200 M 80W

300 (est.)

mm2

(est.)

592 M 130

423

W

2

mm

110

Limits to ILP

•Doubling issue rates above today’s 3-6 instructions per clock,

say to 6 to 12 instructions, probably requires a processor to

•

•

•

•

issue 3 or 4 data memory accesses per cycle,

resolve 2 or 3 branches per cycle,

rename and access more than 20 registers per cycle, and

fetch 12 to 24 instructions per cycle.

•The complexities of implementing these capabilities is likely

to mean sacrifices in the maximum clock rate

• E.g, widest issue processor is the Itanium 2, but it also has the

slowest clock rate, despite the fact that it consumes the most power!

111

Limits to ILP

•Most techniques for increasing performance increase power consumption

•The key question is whether a technique is energy efficient: does it

increase power consumption faster than it increases performance?

•Multiple issue processors techniques all are energy inefficient:

• Issuing multiple instructions incurs some overhead in logic that

grows faster than the issue rate grows

• Growing gap between peak issue rates and sustained performance

•Number of transistors switching = f(peak issue rate), and performance = f(

sustained rate), growing gap between peak and sustained performance

=> increasing energy per unit of performance

112

Conclusion

•Limits to ILP (power efficiency, compilers, dependencies …) seem to limit

to 3 to 6 issue for practical options

•Explicitly parallel (Data level parallelism or Thread level parallelism) is

next step to performance

•Coarse grain vs. Fine grained multihreading

• Only on big stall vs. every clock cycle

•Simultaneous Multithreading if fine grained multithreading based on OOO

superscalar microarchitecture

• Instead of replicating registers, reuse rename registers

•Balance of ILP and TLP decided in marketplace

113