UNIX Filters

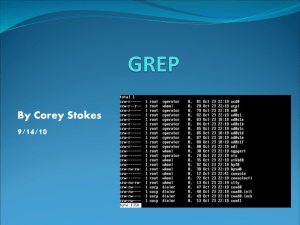

advertisement

UNIX Filters Some Simple UNIX Filters (Commands That Use Both Standard Input & Standard Output) Content Level File Level $ $ $ $ pr cmp, comm, diff sort uniq $ $ $ $ head,tail cut, paste tr grep There are a lot of others!! Formatting Output: pr Command pr prepares files for printing by adding formatting, headers, footers, … Some options: -k -n -d -l n -w m prints in k columns numbers the lines of output double spaces output sets length to n lines sets width to m chars Example: a.out | pr –n –d –l 64 Prints output with line numbers, double spacing, and 64 lines per page Comparing Files: cmp Command cmp compares 2 files and stops when it finds a difference The comparison is character by character (byte by byte). Option: -l lists all byte differences in the files Examples: cmp file1 file2 file1 file2 differ: char 12, line 3 cmp –l file1 file2 | wc –l Displays the number of differences in the 2 files. Comparing Files: comm Command comm compares 2 files and lists 3 columns of information: 1. lines unique to 1st file 2.lines unique to 2nd file 3. lines common to both files The files must be sorted. Options: -1, -2, -3 indicates the columns to drop in the output Examples: comm -3 file1 file2 Lists all the unique lines in both files. comm –l2 file1 file2 Lists all the lines common to both files. Comparing Files: diff Command diff compares 2 files and lists the instructions needed to make the files the same. Here’s an example: $ diff file1 file2 3c3 < This is line 3 of file 1. --> This is line 3 of file 2. 7a8 > This is line 8 from file 2. Change line 3 from this to this. Add this line after line 7 of file1. Extracting Vertical Data: cut Command cut extracts vertical slices of data from a file. Either columns (-c option) or fields (-f option) of data may be extracted. A delimiter (-d option) may be defined to separate fields. Default delimiter is tab. Examples: cut –c1-4,8,15- file1 Extracts chars 1 thru 4, the 8th character, and characters 15 thru end of each line from file1. No whitespace in the column list!! cut –d: -f1-3 file2 Extracts fields 1 thru 3 from file2. The fields are separated by the : character who | cut –d” “ –f1 Lists the names of all users logged in. Joining Vertical Data: paste Command paste vertically joins 2 files together. A delimiter (-d option) may be defined to separate fields. Default delimiter is tab. The -s option joins lines of a single file together. Examples: paste file1 file2 Displays file1 and file2 side by side. paste –s –d”::\n” addressbook rick rick@att.com 1234567890 >>>> rick:rick@att.com:1234567890 Displaying Files: head and tail Commands head displays the top of a file. (1st 10 lines, by default). tail displays the end of the file (last 10 lines, by default). Options: -n x or –x displays 1st (last) x lines of the file. -f continuously displays the end of a file as it grows. This option is for the tail command only. You must use the interrupt key to stop monitoring the file growth. Examples: ls –t | head –n 1 tail –f install.log Displays the file most recently edited. Continuously displays the log file as it grows. Use interrupt key to stop. Ordering Files: sort Command sort reorders the lines of a file in ascending (descending) order. The default order is ASCII: whitespace, numbers, uppercase, and finally, lowercase letters. Options: -k n sort on the nth field of the line -tchar use char as the field delimiter -n sort numerically -r reverse order sort -u remove repeated lines -m list merge sorted files in list sort Examples Examples: sort –t: -k 2 list Sort on the 2nd field of file list. Fields are separated by : sort –t: -k 5.7 –r list Sort file list in reverse order on the 7th character of 5th field. Fields separated by : sort –n list Numerically sort file list, assumed to contain numbers. sort –m file1 file2 Sorted files file1 and file2 are merged. cut –d: -f3 list | sort –u Extract the 3rd field from list & sort that field, removing the repeated lines. Removing Duplicates: uniq Command uniq displays a presorted file, removing all the duplicate lines from it. If 2 files are specified, uniq reads from the first and writes to the second. Options: -u lists only the lines that are unique -d lists only the lines that are duplicates -c counts the frequency of occurrences Examples: sort list | uniq – xlist Sorts file list; uniq reads from stdin and writes the output to xlist. uniq –c list Displays count of each unique line in the file list. Character Manipulation: tr Command tr translates characters from one format to another. Input always comes from standard input. Arguments don’t include filenames. General form is: tr options expression1 expression2 standard input expression1 is the set of characters to change; expression2 is what they change to. (The expressions should be equal length.) Examples: tr ‘+-’ ‘*/’ < math In the file math, replace all +’s with *’s and replace all -’s with /’s. head –n 3 list | tr ‘[a-z]’ ‘[A-Z]’ The 1st 3 lines of the file list are translated to uppercase. tr Command Options Options: -d delete characters from the input stream -s compress multiple consecutive characters (squeeze) -c complementing value of expression Examples: tr –d ‘/’ < dates tr –s ‘ ’ < names Remove all /’s from the file dates. Replace all strings of blanks with a single blank in the file names. tr –cd ‘:’ < file1 In the file file1, delete everything that isn’t a colon (:). All that’s left is a file full of :’s Finding Patterns in Files with grep grep searches a file and displays the lines containing a pattern. Form is: grep options pattern files If more than 1 file is listed, the filename is also displayed in the output. Some options: -i ignore case when matching -n display line numbers as well as lines -c displays a count of the number of occurrences Examples: grep “professor” college.lst Displays all lines in file, college.lst, that contain the string professor. grep –i “Rick” college.lst Displays all lines in the file, college.lst, that contain the string Rick. (Also finds rick, RICK, rIcK, …) Regular Expressions in grep Regular expressions are metacharacter patterns used in ways different from how the shell uses them. Regular Expression * . [pqr] [c1-c2] [^pqr] ^abc abc$ Meaning 0 or more of the previous character a single character a single p or q or r a single char in the ASCII range of c1 thru c2 a single character not p nor q nor r abc at the beginning of line abc at the end of the line Example Regular Expressions with grep grep “g*” file1 Displays all lines in file1 that contain nothing or g, gg, ggg, … grep “.*” file1 Displays all lines in file1 that contain nothing or any # of chars grep “[1-3]” file1 Displays all lines in file1 that contain a digit between 1 & 3. grep “[^a-zA-Z]” file1 grep “^Rick$” file1 grep “^$” file1 Displays all lines in file1 that contain a non-alphabetic character Displays all lines in file1 that contain only Rick Displays all lines in file1 that contain nothing grep “R[aeiou]ck” file1 Displays all lines in file1 that contain Rack, Reck, Rick, Rock or Ruck Putting It All Together An author wants to count the frequency of words used in a book chapter. 1. Put each word on a separate line: tr “ \011” “\012\012” < chapter 2. Strip out everything that isn’t an alphabetic character or newline: tr -cd “[a-zA-Z\012]” 3. Sort the list: sort 4. Count the word frequency: uniq –c 5. Put it all together: tr “ \011” “\012\012” < chapter | tr –cd “[a-zA-Z\012]” | sort | uniq -c