
Receiver Operating Characteristic (ROC) Curves

Assessing the predictive properties of a test statistic – Decision Theory

©

2009, KAISER PERMANENTE CENTER FOR HEALTH RESEARCH

Binary Prediction Problem

Conceptual Framework

Suppose we have a test statistic for predicting the presence or absence of disease. Cross-classifying each person's test result against his or her true disease status gives four possible outcomes:

| Test Criterion | True Disease Status: Pos | True Disease Status: Neg |
|---|---|---|
| Pos | TP | FP |
| Neg | FN | TN |
| Total | P | N |

TP = True Positive

FP = False Positive

FN = False Negative

TN = True Negative

P = TP + FN is the number of people who truly have disease, N = FP + TN is the number who are truly disease free, and P + N is the total number tested.
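As a minimal Python sketch (the helper name `two_by_two` and the six people are hypothetical, for illustration only), the four cells and the column totals P and N can be tallied from each person's test result and gold-standard disease status:

```python
from collections import Counter


def two_by_two(test_positive, diseased):
    """Tally TP, FP, FN, TN and the column totals P and N.

    test_positive[i] is True if person i tests positive;
    diseased[i] is True if person i truly has disease (gold standard).
    """
    if len(test_positive) != len(diseased):
        raise ValueError("inputs must have the same length")
    cells = Counter()
    for t, d in zip(test_positive, diseased):
        if t and d:
            cells["TP"] += 1      # positive test, disease present
        elif t:
            cells["FP"] += 1      # positive test, disease absent
        elif d:
            cells["FN"] += 1      # negative test, disease present
        else:
            cells["TN"] += 1      # negative test, disease absent
    tp, fp, fn, tn = cells["TP"], cells["FP"], cells["FN"], cells["TN"]
    return {"TP": tp, "FP": fp, "FN": fn, "TN": tn, "P": tp + fn, "N": fp + tn}


# Hypothetical data for six people
table = two_by_two(
    test_positive=[True, True, False, False, True, False],
    diseased=[True, False, True, False, False, False],
)
print(table)  # {'TP': 1, 'FP': 2, 'FN': 1, 'TN': 2, 'P': 2, 'N': 4}
```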

Binary Prediction Problem

Test Properties

Accuracy = Probability that the test yields a correct result

= (TP + TN) / (P + N)

Binary Prediction Problem

Test Properties

Sensitivity = Probability that a true case will test positive

= TP / P

Also referred to as True Positive Rate (TPR) or True Positive Fraction (TPF).

Binary Prediction Problem

Test Properties

Specificity = Probability that a truly disease-free person will test negative

= TN / N

Also referred to as True Negative Rate (TNR) or True Negative Fraction (TNF).

Binary Prediction Problem

Test Properties

1 - Specificity = Probability that a truly disease-free person will test positive

= FP / N

Also referred to as False Positive Rate (FPR) or False Positive Fraction (FPF).

Binary Prediction Problem

Test Properties

Positive Predictive Value (PPV) = Probability that a person who tests positive truly has disease

= TP / (TP + FP)

Binary Prediction Problem

Test Properties

Negative Predictive Value (NPV) = Probability that a person who tests negative is truly disease free

= TN / (TN + FN)

Binary Prediction Problem

Example

| Test Criterion | True Disease Status: Pos | True Disease Status: Neg | Total |
|---|---|---|---|
| Pos | 27 | 173 | 200 |
| Neg | 73 | 727 | 800 |
| Total | 100 | 900 | 1000 |

Se = 27/100 = .27

Sp = 727/900 = .81

FPF = 1 - Sp = .19

Acc = (27+727)/1000 = .75

PPV = 27/200 = .14

NPV = 727/800 = .91
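The example's arithmetic can be reproduced with a short Python sketch. `two_by_two_properties` is a hypothetical helper name; the cell counts are those of the example table, and values are printed to three decimals so none of them sits on a rounding boundary.

```python
def two_by_two_properties(tp, fp, fn, tn):
    """Standard test properties from the four cells of a 2x2 table."""
    p = tp + fn  # all truly diseased
    n = fp + tn  # all truly disease free
    return {
        "Se": tp / p,                 # sensitivity (TPR)
        "Sp": tn / n,                 # specificity (TNR)
        "FPF": fp / n,                # 1 - Sp (FPR)
        "Acc": (tp + tn) / (p + n),   # accuracy
        "PPV": tp / (tp + fp),        # positive predictive value
        "NPV": tn / (tn + fn),        # negative predictive value
    }


# Cell counts from the example table (positive if #RF >= 4)
props = two_by_two_properties(tp=27, fp=173, fn=73, tn=727)
for name, value in props.items():
    print(f"{name} = {value:.3f}")
```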

Binary Prediction Problem

Test Properties

Of these properties, only Se and Sp (and hence FPR) are considered invariant test characteristics: each is computed within a single true disease group, so neither depends on how common the disease is.

Accuracy, PPV, and NPV, by contrast, will vary according to the underlying prevalence of disease.

Se and Sp are thus “fundamental” test properties and hence are the most useful measures for comparing different test criteria, even though PPV and NPV are probably the most clinically relevant properties.
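Because Se and Sp are conditional on true disease status, PPV, NPV, and accuracy can be recomputed for any prevalence π by Bayes' theorem: PPV = Se·π / (Se·π + (1 - Sp)(1 - π)) and NPV = Sp(1 - π) / (Sp(1 - π) + (1 - Se)π). This minimal Python sketch (hypothetical helper `predictive_values`) holds the example's Se and Sp fixed and varies only the prevalence; at π = 0.10 it reproduces the example's PPV = 27/200 and NPV = 727/800.

```python
def predictive_values(se, sp, prevalence):
    """PPV, NPV and accuracy implied by fixed Se and Sp at a given prevalence."""
    pi = prevalence
    ppv = se * pi / (se * pi + (1 - sp) * (1 - pi))       # Bayes' theorem
    npv = sp * (1 - pi) / (sp * (1 - pi) + (1 - se) * pi)
    acc = se * pi + sp * (1 - pi)                         # weighted average of Se and Sp
    return ppv, npv, acc


# Se and Sp from the example table (x = 4), held fixed while prevalence varies
se, sp = 27 / 100, 727 / 900
for prevalence in (0.01, 0.10, 0.30):
    ppv, npv, acc = predictive_values(se, sp, prevalence)
    print(f"prevalence {prevalence:.2f}: PPV {ppv:.3f}, NPV {npv:.3f}, Acc {acc:.3f}")
```

PPV rises and NPV falls as prevalence rises, even though the test itself (Se and Sp) has not changed.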

ROC Curves

Now assume that our test statistic is no longer binary but takes on a series of values (for instance, how many of five distinct risk factors a person exhibits).

Clinically, we make a rule that says the test is positive if the number of risk factors meets or exceeds some threshold (#RF ≥ x).

Suppose our previous table resulted from using x = 4.

Let’s see what happens as we vary x.
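The decision rule can be sketched in Python as follows. The helpers `classify` and `cell_counts` and the ten people with their risk-factor counts are hypothetical. The loop runs from the strictest threshold (x = 6, so nobody tests positive) down to x = 0 (everybody tests positive); lowering x can only add positives, so TP and FP never decrease.

```python
def classify(num_risk_factors, x):
    """Decision rule: the test is positive if #RF >= x."""
    return [rf >= x for rf in num_risk_factors]


def cell_counts(test_positive, diseased):
    """Return (TP, FP, FN, TN) for paired booleans."""
    pairs = list(zip(test_positive, diseased))
    tp = sum(1 for t, d in pairs if t and d)
    fp = sum(1 for t, d in pairs if t and not d)
    fn = sum(1 for t, d in pairs if not t and d)
    tn = sum(1 for t, d in pairs if not t and not d)
    return tp, fp, fn, tn


# Hypothetical people: number of risk factors present (0-5) and true disease status
rf = [5, 4, 4, 3, 2, 1, 3, 2, 1, 0]
diseased = [True, True, False, True, False, False, False, True, False, False]

# From the strictest rule (x = 6: nobody positive) to the laxest (x = 0: everybody positive)
for x in range(6, -1, -1):
    print(f"x = {x}: (TP, FP, FN, TN) = {cell_counts(classify(rf, x), diseased)}")
```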

ROC Curves

Impact of using a threshold of 3 or more RFs

| Test Criterion | True Disease Status: Pos | True Disease Status: Neg | Total |
|---|---|---|---|
| Pos | 45 | 200 | 245 |
| Neg | 55 | 700 | 755 |
| Total | 100 | 900 | 1000 |

Se = 45/100 = .45 (was .27)

Sp = 700/900 = .78 (was .81)

FPF = 1 - Sp = .22 (was .19)

Acc = (45+700)/1000 = .745 (was .754)

PPV = 45/245 = .18 (was .14)

NPV = 700/755 = .93 (was .91)

Lowering the threshold from 4 to 3 increases Se and decreases Sp, and, interestingly, increases both PPV and NPV.
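The direction of each change can be checked directly from the two tables' cell counts. In this Python sketch `compute_properties` is a hypothetical helper; the counts are those of the x = 4 and x = 3 tables. Note that accuracy falls slightly while both predictive values rise.

```python
def compute_properties(tp, fp, fn, tn):
    """Se, Sp, PPV, NPV and accuracy from the four cells of a 2x2 table."""
    return {
        "Se": tp / (tp + fn),
        "Sp": tn / (tn + fp),
        "PPV": tp / (tp + fp),
        "NPV": tn / (tn + fn),
        "Acc": (tp + tn) / (tp + fp + fn + tn),
    }


# Cell counts from the two example tables
at_4 = compute_properties(tp=27, fp=173, fn=73, tn=727)  # positive if #RF >= 4
at_3 = compute_properties(tp=45, fp=200, fn=55, tn=700)  # positive if #RF >= 3

for name in at_4:
    change = "up" if at_3[name] > at_4[name] else "down"
    print(f"{name}: {at_4[name]:.3f} -> {at_3[name]:.3f} ({change})")
```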

ROC Curves

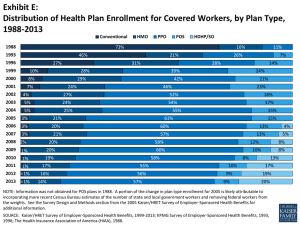

Summary of all possible options

Threshold TPR FPR

4

3

6

5

0.00

0.10

0.27

0.45

0.00

0.11

0.19

0.22

2

1

0

0.73

0.27

0.98

0.80

1.00

1.00

©

2009, KAISER PERMANENTE CENTER FOR HEALTH RESEARCH

As we relax our threshold for defining “disease,” our true positive rate

(sensitivity) increases, but so does the false positive rate (FPR).

The ROC curve is a way to visually display this information.
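Each row of the summary table is one (FPR, TPR) point obtained by applying the rule #RF ≥ x at a different threshold. A minimal Python sketch of that sweep, assuming per-person scores are available (the slide data are not, so the ten people and the helper name `empirical_roc` are hypothetical):

```python
def empirical_roc(scores, diseased):
    """(x, FPR, TPR) for the rule 'positive if score >= x' at every useful threshold x."""
    p = sum(diseased)                 # number truly diseased
    n = len(diseased) - p             # number truly disease free
    observed = sorted(set(scores), reverse=True)
    # One threshold above the largest score gives the (0, 0) corner: nobody tests positive
    thresholds = [observed[0] + 1] + observed
    points = []
    for x in thresholds:
        tp = sum(1 for s, d in zip(scores, diseased) if s >= x and d)
        fp = sum(1 for s, d in zip(scores, diseased) if s >= x and not d)
        points.append((x, fp / n, tp / p))
    return points


# Hypothetical people: number of risk factors (0-5) and true disease status
rf = [5, 4, 4, 3, 2, 1, 3, 2, 1, 0]
diseased = [True, True, False, True, False, False, False, True, False, False]

for x, fpr, tpr in empirical_roc(rf, diseased):
    print(f"x = {x}: TPR = {tpr:.2f}, FPR = {fpr:.2f}")
```

Plotting TPR against FPR for these points, and joining consecutive points with straight lines, gives the empirical ROC curve.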

ROC Curves

[Figure: ROC curve (TPR vs. FPR) for the thresholds in the summary table, with the points for x = 5, x = 4, and x = 2 labeled and a diagonal reference line]

The diagonal line shows what we would expect from simple guessing (i.e., pure chance).

What might an even better ROC curve look like?

ROC Curves

Summary of a better ROC curve

| Threshold (x) | TPR | FPR |
|---|---|---|
| 6 | 0.00 | 0.00 |
| 5 | 0.10 | 0.01 |
| 4 | 0.77 | 0.02 |
| 3 | 0.90 | 0.03 |
| 2 | 0.95 | 0.04 |
| 1 | 0.99 | 0.40 |
| 0 | 1.00 | 1.00 |

Note the immediate sharp rise in sensitivity. Perfect discrimination (TPR = 1, FPR = 0) is represented by the upper left corner.

ROC Curves

Use and interpretation

The ROC curve allows us to see, in a simple visual display, how sensitivity and specificity vary as our threshold varies.

The shape of the curve also gives us some visual clues about the overall strength of association between the underlying test statistic (in this case, the number of RFs present) and disease status.

ROC Curves

Use and interpretation

The ROC methodology easily generalizes to test statistics that are continuous (such as lung function or a blood gas).

We simply fit a smoothed ROC curve through all observed data points.
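The slides do not say how the smoothed curve is fitted. One common choice, sketched here as an assumption rather than as the method used in this presentation, is the binormal model: if the test measure is normally distributed in cases and in controls, the ROC curve is TPR = Φ(a + b·Φ⁻¹(FPR)) and its area is Φ(a / √(1 + b²)). The sketch uses simple moment estimates of a and b (maximum-likelihood fitting is also used in practice), hypothetical measurements, hypothetical helper names, and only Python's standard `statistics.NormalDist`.

```python
from statistics import NormalDist, mean, stdev


def binormal_fit(cases, controls):
    """Moment estimates of the binormal ROC parameters (a, b).

    Assumes the measure is normal within cases and within controls:
    a = (mean_cases - mean_controls) / sd_cases, b = sd_controls / sd_cases.
    """
    a = (mean(cases) - mean(controls)) / stdev(cases)
    b = stdev(controls) / stdev(cases)
    return a, b


def binormal_tpr(fpr, a, b):
    """Smoothed ROC curve TPR = Phi(a + b * Phi^-1(FPR)), for 0 < FPR < 1."""
    z = NormalDist()
    return z.cdf(a + b * z.inv_cdf(fpr))


def binormal_auc(a, b):
    """Closed-form area under the binormal curve: Phi(a / sqrt(1 + b^2))."""
    return NormalDist().cdf(a / (1 + b * b) ** 0.5)


# Hypothetical continuous measurements (arbitrary units)
cases = [5.1, 6.3, 7.0, 5.8, 6.6]
controls = [4.0, 4.8, 5.2, 3.9, 4.5, 5.0]

a, b = binormal_fit(cases, controls)
for fpr in (0.05, 0.10, 0.20, 0.50):
    print(f"FPR = {fpr:.2f}: smoothed TPR = {binormal_tpr(fpr, a, b):.3f}")
print(f"binormal AUC = {binormal_auc(a, b):.3f}")
```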

ROC Curves

Use and interpretation

See the demo at www.anaesthetist.com/mnm/stats/roc/index.htm

ROC Curves

Area under the curve (AUC)

The ROC curve is plotted on a grid whose axes, TPR and FPR, both range from 0 to 1, so the total area of the grid is 1.

The portion of this total area that falls below the ROC curve is known as the area under the curve, or AUC.
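For an ROC curve drawn by joining the observed points with straight lines, the AUC is a sum of trapezoids. This Python sketch (hypothetical helper `trapezoid_auc`) applies it to the (FPR, TPR) pairs of the two summary tables for thresholds x = 6 down to 0. Because the table entries are rounded to two decimals, the resulting AUCs (about 0.71 for the first curve and 0.97 for the better one) are approximate.

```python
def trapezoid_auc(points):
    """Area under a piecewise-linear ROC curve through (FPR, TPR) points."""
    pts = sorted(points)  # order by FPR (then TPR)
    area = 0.0
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        area += (x1 - x0) * (y0 + y1) / 2   # trapezoid between consecutive points
    return area


# (FPR, TPR) pairs for x = 6, 5, ..., 0, copied from the two summary tables
first_curve = [(0.00, 0.00), (0.11, 0.10), (0.19, 0.27), (0.22, 0.45),
               (0.27, 0.73), (0.80, 0.98), (1.00, 1.00)]
better_curve = [(0.00, 0.00), (0.01, 0.10), (0.02, 0.77), (0.03, 0.90),
                (0.04, 0.95), (0.40, 0.99), (1.00, 1.00)]

print(f"first curve:  AUC = {trapezoid_auc(first_curve):.3f}")
print(f"better curve: AUC = {trapezoid_auc(better_curve):.3f}")
```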

Area Under the Curve (AUC)

Interpretation

The AUC serves as a quantitative summary of the strength of association between the underlying test statistic and disease status.

An AUC of 1.0 would mean that the test statistic could be used to perfectly discriminate between cases and controls.

An AUC of 0.5 (reflected by the diagonal 45° line) is equivalent to simply guessing.

Area Under the Curve (AUC)

Interpretation

The AUC can be shown to equal the Mann-Whitney U statistic divided by the product of the numbers of cases and controls (equivalently, a rescaled Wilcoxon rank-sum statistic) for testing whether the test measure differs between individuals with and without disease.

It also equals the probability that the value of our test measure would be higher for a randomly chosen case than for a randomly chosen control (with ties counted as one half).
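Both equivalences can be checked numerically. In this Python sketch, with hypothetical case and control values and hypothetical helper names, `auc_pairwise` counts the fraction of case-control pairs in which the case has the higher value (ties count one half), and `auc_from_ranks` converts the Wilcoxon rank sum of the cases (mid-ranks for ties) into U = R₁ - n₁(n₁ + 1)/2 and divides by n₁n₀. For these data both return 27.5/30 ≈ 0.917.

```python
def auc_pairwise(cases, controls):
    """P(case > control) + 0.5 * P(tie), over all case-control pairs."""
    wins = 0.0
    for c in cases:
        for k in controls:
            if c > k:
                wins += 1.0
            elif c == k:
                wins += 0.5
    return wins / (len(cases) * len(controls))


def auc_from_ranks(cases, controls):
    """Mann-Whitney U from the Wilcoxon rank sum (mid-ranks for ties), divided by n1*n0."""
    pooled = sorted(cases + controls)
    midrank = {}
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j] == pooled[i]:
            j += 1
        midrank[pooled[i]] = (i + 1 + j) / 2   # average of 1-based ranks i+1 .. j
        i = j
    rank_sum = sum(midrank[c] for c in cases)
    n1, n0 = len(cases), len(controls)
    u = rank_sum - n1 * (n1 + 1) / 2
    return u / (n1 * n0)


# Hypothetical test-measure values (e.g., number of risk factors)
cases = [3, 4, 5, 2, 4]
controls = [1, 2, 3, 0, 2, 1]
print(auc_pairwise(cases, controls), auc_from_ranks(cases, controls))
```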

Area Under the Curve (AUC)

Interpretation

[Figure: distributions of the test measure in controls and cases, with the corresponding ROC curve (TPR vs. FPR); AUC ≈ 0.54]

Area Under the Curve (AUC)

Interpretation

[Figure: distributions of the test measure in controls and cases, with the corresponding ROC curve (TPR vs. FPR); AUC ≈ 0.95]

Area Under the Curve (AUC)

Interpretation

What defines a “good” AUC?

Opinions vary

Probably context specific

What may be a good AUC for predicting COPD may be very different from what is a good AUC for predicting prostate cancer.

Area Under the Curve (AUC)

Interpretation (from http://gim.unmc.edu/dxtests/roc3.htm)

.90-1.0 = excellent

.80-.90 = good

.70-.80 = fair

.60-.70 = poor

.50-.60 = fail

Remember that <.50 is worse than guessing!

Area Under the Curve (AUC)

Interpretation (from www.childrens-mercy.org/stats/ask/roc.asp)

.97-1.0 = excellent

.92-.97 = very good

.75-.92 = good

.50-.75 = fair

ROC Curves

Comparing multiple ROC curves

Suppose we have two candidate test statistics to use to create a binary decision rule. Can we use ROC curves to choose an optimal one?


ROC Curves

Comparing multiple ROC curves

Adapted from curves at: http://gim.unmc.edu/dxtests/roc3.htm


ROC Curves

Comparing multiple ROC curves (from http://en.wikipedia.org/wiki/Receiver_operating_characteristic)

ROC Curves

Comparing multiple ROC curves

We can formally compare AUCs for two competing test statistics, but does this answer our question?

AUC speaks to which measure, as a continuous variable, best discriminates between cases and controls overall.

It does not tell us which specific cutpoint to use, or even which test statistic will ultimately provide the “best” cutpoint: two ROC curves can cross, so the test with the higher AUC need not have the higher sensitivity at the specificity we actually care about.
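A small hypothetical illustration of why a larger AUC does not settle the question: test A has the larger area, yet at a false positive rate of 0.10 test B has the higher sensitivity. The ROC points and helper names are invented for illustration, and each curve is joined linearly between its points.

```python
def trapezoid_auc(points):
    """Area under a piecewise-linear ROC curve through (FPR, TPR) points."""
    pts = sorted(points)
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(pts, pts[1:]))


def tpr_at(points, fpr):
    """Linear interpolation of TPR at a given FPR along a piecewise-linear ROC curve."""
    pts = sorted(points)
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        if x0 <= fpr <= x1 and x1 > x0:   # skip vertical segments
            return y0 + (y1 - y0) * (fpr - x0) / (x1 - x0)
    raise ValueError("fpr lies outside the curve's FPR range")


# Two hypothetical ROC curves, as (FPR, TPR) points
curve_a = [(0.0, 0.0), (0.1, 0.3), (0.3, 0.8), (1.0, 1.0)]
curve_b = [(0.0, 0.0), (0.1, 0.5), (0.5, 0.6), (1.0, 1.0)]

print(f"AUC: A = {trapezoid_auc(curve_a):.3f}, B = {trapezoid_auc(curve_b):.3f}")
print(f"TPR at FPR = 0.10: A = {tpr_at(curve_a, 0.10):.2f}, B = {tpr_at(curve_b, 0.10):.2f}")
```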

ROC Curves

Choosing an optimal cutpoint

The choice of a particular Se and Sp should reflect the relative costs of FP and FN results.

What if a positive test triggers an invasive procedure?

What if the disease is life-threatening and we have an inexpensive and effective treatment?

How do you balance these and other competing factors?

See the excellent discussion of these issues at www.anaesthetist.com/mnm/stats/roc/index.htm
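One simple way to formalize this trade-off, sketched here as an assumption rather than a prescribed method, is to assign a cost to each false negative and each false positive and choose the threshold that minimizes the expected cost per person tested: prevalence × (1 - TPR) × cost_FN + (1 - prevalence) × FPR × cost_FP. The sketch uses the TPR/FPR values from the summary table and the example's prevalence of 100/1000; the costs and helper names are hypothetical.

```python
# (threshold x, TPR, FPR) rows from the "summary of all possible options" table
roc_table = [(6, 0.00, 0.00), (5, 0.10, 0.11), (4, 0.27, 0.19), (3, 0.45, 0.22),
             (2, 0.73, 0.27), (1, 0.98, 0.80), (0, 1.00, 1.00)]


def expected_cost(tpr, fpr, prevalence, cost_fn, cost_fp):
    """Expected misclassification cost per person tested (correct results assumed to cost 0)."""
    miss = prevalence * (1 - tpr)           # P(diseased and test negative)
    false_alarm = (1 - prevalence) * fpr    # P(disease free and test positive)
    return cost_fn * miss + cost_fp * false_alarm


def best_threshold(table, prevalence, cost_fn, cost_fp):
    """Threshold x whose (TPR, FPR) gives the lowest expected cost."""
    best = min(table, key=lambda row: expected_cost(row[1], row[2], prevalence, cost_fn, cost_fp))
    return best[0]


# Prevalence 100/1000 as in the example; the costs are hypothetical
print(best_threshold(roc_table, 0.10, cost_fn=10, cost_fp=1))  # 2
print(best_threshold(roc_table, 0.10, cost_fn=1, cost_fp=1))   # 6
```

With equal costs the expected cost is simply 1 - accuracy, and at 10% prevalence the cheapest rule is to call everyone negative (x = 6); making a missed case ten times as costly as a false alarm moves the optimum to x = 2.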

ROC Curves

Generalizations

These techniques can be applied to any binary outcome. It doesn’t have to be disease status.

In fact, ROC curves were first introduced during WWII in response to the challenge of accurately identifying enemy planes on radar screens.

ROC Curves

Final cautionary notes

We assume throughout that a gold standard exists for measuring “disease,” when in practice no such gold standard exists.

COPD, asthma, even cancer (can we truly rule out cancer in a given patient?)

As a result, even Se and Sp may not be inherently stable test characteristics; they may vary depending on how we define disease and the clinical context in which it is measured.

Are we evaluating the test in the general population or only among patients referred to a specialty clinic?

Misclassification of true disease status, and hence of who is counted in P and in N, will likely differ between these two settings.