Lecture 5, January 18



Today: how to solve recurrences.

We learned “guess and prove by induction”.

We also learned the “substitution” method.

Today, we learn the “master theorem”.

More divide and conquer:

closest pair problem

matrix multiplication

Master Theorem

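Theorem 4.1 (CLRS). Let a ≥ 1 and b > 1 be constants, let f(n) be a function, and let T(n) be defined on the nonnegative integers by

T(n) = aT(n/b) + f(n).

Then

$$
T(n) =
\begin{cases}
\Theta\left(n^{\log_b a}\right) & \text{if } f(n) = O\left(n^{\log_b a - \epsilon}\right) \text{ for some constant } \epsilon > 0,\\
\Theta\left(n^{\log_b a} \log n\right) & \text{if } f(n) = \Theta\left(n^{\log_b a}\right),\\
\Theta\left(f(n)\right) & \text{if } f(n) = \Omega\left(n^{\log_b a + \epsilon}\right) \text{ for some constant } \epsilon > 0 \text{ AND } a f(n/b) \le c f(n) \text{ for some constant } c < 1 \text{ and all large } n.
\end{cases}
$$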

Note

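The theorem only applies to a particular family of recurrences.

f(n) must be positive for large n.

The key is to compare f(n) with $n^{\log_b a}$.

Case 2 has a more general form: if $f(n) = \Theta(n^{\log_b a} \lg^k n)$ for some constant $k \ge 0$, then $T(n) = \Theta(n^{\log_b a} \lg^{k+1} n)$.

Sometimes the theorem does not apply. Example: $T(n) = 4T(n/2) + n^2/\lg n$. Here $n^{\log_2 4} = n^2$, and f(n) is smaller than $n^2$ only by a logarithmic factor, not by a polynomial factor, so none of the three cases fits.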
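For the common special case where f(n) is a polynomial times a power of a logarithm, the comparison with $n^{\log_b a}$ can be mechanized. The sketch below is a hypothetical helper (the name `master_theorem` and the restriction to $f(n) = \Theta(n^d \lg^k n)$ are assumptions for illustration): it compares d with $\log_b a$ using a floating-point tolerance and applies case 1, the generalized case 2, or case 3. For this family of f the regularity condition of case 3 holds automatically for large n; for an arbitrary f it must be checked by hand.

```python
import math


def master_theorem(a, b, d, k=0):
    """Classify T(n) = a*T(n/b) + Theta(n^d * lg^k n) by the master theorem.

    Returns (case, bound) with case in {1, 2, 3}, or (None, reason) when
    f(n) falls into a gap that the theorem does not cover.
    """
    if a < 1 or b <= 1:
        raise ValueError("the theorem needs a >= 1 and b > 1")
    crit = math.log(a) / math.log(b)  # critical exponent log_b a
    if math.isclose(d, crit, rel_tol=1e-9, abs_tol=1e-12):
        if k >= 0:
            # Generalized case 2: f(n) = Theta(n^(log_b a) lg^k n), k >= 0
            return 2, f"Theta(n^{crit:.4g} lg^{k + 1} n)"
        # f(n) is below n^(log_b a), but only by a polylog factor
        return None, "not covered: f(n) is n^(log_b a) divided by a polylog"
    if d < crit:
        # Case 1: f(n) = O(n^(log_b a - eps)) for any 0 < eps < crit - d
        return 1, f"Theta(n^{crit:.4g})"
    # Case 3: f(n) = Omega(n^(log_b a + eps)); for n^d lg^k n with
    # d > log_b a, a f(n/b) <= c f(n) holds for large n with some c < 1.
    poly = f"n^{d:g}" + (f" lg^{k} n" if k != 0 else "")
    return 3, f"Theta({poly})"
```

For example, `master_theorem(3, 2, 1)` returns `(1, 'Theta(n^1.585)')`, and `master_theorem(4, 2, 2, -1)` reports the $n^2/\lg n$ example above as not covered.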

Proof ideas of Master Theorem

Consider a tree with T(n) at the root, and apply the recurrence to each node until we get down to T(1) at the leaves. The first application of the recurrence is T(n) = aT(n/b) + f(n), so assign a cost of f(n) to the root. At the next level we have a nodes, each with a cost of T(n/b). When we apply the recurrence again, we get a total cost of af(n/b) for all of these. At the next level we have $a^2$ nodes, each with a cost of $T(n/b^2)$, for a total of $a^2 f(n/b^2)$. We continue down to T(1) at the leaves. There are $a^{\log_b n}$ leaves, each costing Θ(1), which gives $\Theta(a^{\log_b n}) = \Theta(n^{\log_b a})$, since $a^{\log_b n} = n^{\log_b a}$. The total cost associated with f is $\sum_{i=0}^{\log_b n - 1} a^i f(n/b^i)$. Thus

$$
T(n) = \Theta\left(n^{\log_b a}\right) + \sum_{i=0}^{\log_b n - 1} a^i f\left(n/b^i\right).
$$

The three cases now come from deciding which term is dominant. In case 1, the Θ term (the leaves) is dominant. In case 2, the two terms are roughly equal, except that the sum carries an extra lg n factor. In case 3, the f(n) term at the root is dominant. The details are somewhat painful, but can be found in CLRS, pp. 76-84.
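The decomposition into leaves plus level sums can be checked directly. This is a minimal sketch, assuming n is an exact power of b and the base case T(1) = 1 (both assumptions, not part of the theorem); the helper names are hypothetical. It evaluates the recurrence by recursion, separately sums the recursion tree level by level, and lists the per-level costs $a^i f(n/b^i)$.

```python
def tree_height(n, b):
    """Height h of the recursion tree, i.e. n = b^h (n must be a power of b)."""
    h = 0
    while n > 1:
        if n % b:
            raise ValueError("n must be a power of b")
        n //= b
        h += 1
    return h


def t_direct(n, a, b, f):
    """Evaluate T(n) = a T(n/b) + f(n) recursively, with assumed T(1) = 1."""
    if n == 1:
        return 1
    return a * t_direct(n // b, a, b, f) + f(n)


def level_costs(n, a, b, f):
    """Total cost a^i f(n/b^i) of each internal level i = 0, ..., h-1."""
    h = tree_height(n, b)
    return [a**i * f(n // b**i) for i in range(h)]


def t_tree(n, a, b, f):
    """Leaf cost a^h = n^(log_b a) (each leaf is T(1) = 1) plus all level costs."""
    h = tree_height(n, b)
    return a**h + sum(level_costs(n, a, b, f))
```

With n = 16 the level costs show the three behaviours: `level_costs(16, 4, 2, lambda m: m)` is `[16, 32, 64, 128]` (increasing), `level_costs(16, 2, 2, lambda m: m)` is `[16, 16, 16, 16]` (constant), and `level_costs(16, 2, 2, lambda m: m * m)` is `[256, 128, 64, 32]` (decreasing).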

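Idea of master theorem

Recursion tree (figure): the root costs f(n); it has a children, each costing f(n/b); each of those has a children costing $f(n/b^2)$; and so on down to the T(1) leaves at height $h = \log_b n$.

Level sums: f(n), $a f(n/b)$, $a^2 f(n/b^2)$, …, and $n^{\log_b a} T(1)$ at the leaves, since #leaves $= a^h = a^{\log_b n} = n^{\log_b a}$.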

Three common cases

Compare f(n) with $n^{\log_b a}$:

1. $f(n) = O(n^{\log_b a - \epsilon})$ for some constant ε > 0.

• f(n) grows polynomially slower than $n^{\log_b a}$ (by an $n^{\epsilon}$ factor).

Solution: $T(n) = \Theta(n^{\log_b a})$.

Recursion tree (figure): level sums f(n), $a f(n/b)$, $a^2 f(n/b^2)$, …, $n^{\log_b a} T(1)$ at height $h = \log_b n$.

CASE 1: The weight increases geometrically from the root to the leaves. The leaves hold a constant fraction of the total weight.

Since the level sums increase geometrically from top to bottom, only the last (bottom) term $n^{\log_b a} T(1)$ matters, giving $\Theta(n^{\log_b a})$.
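To see why the leaves dominate in case 1, here is the level sum worked out under the simplifying assumption that n is an exact power of b and that f(n) equals $n^{\log_b a - \epsilon}$ exactly (an O bound on f goes through the same way). The ratio between consecutive levels is $a b^{\epsilon} / b^{\log_b a} = b^{\epsilon} > 1$, so the sum is geometric and increasing:

$$
\sum_{i=0}^{\log_b n - 1} a^i \left(\frac{n}{b^i}\right)^{\log_b a - \epsilon}
= n^{\log_b a - \epsilon} \sum_{i=0}^{\log_b n - 1} \left(b^{\epsilon}\right)^{i}
= n^{\log_b a - \epsilon} \cdot \frac{n^{\epsilon} - 1}{b^{\epsilon} - 1}
= O\left(n^{\log_b a}\right).
$$

Together with the $\Theta(n^{\log_b a})$ leaf cost, this gives $T(n) = \Theta(n^{\log_b a})$.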

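Case 2

Compare f(n) with $n^{\log_b a}$:

2. $f(n) = \Theta(n^{\log_b a} \lg^k n)$ for some constant k ≥ 0.

• f(n) and $n^{\log_b a}$ grow at similar rates.

• This is clear for k = 0. For k > 0, the intuition is that the level at depth i costs about $n^{\log_b a} \lg^k(n/b^i)$, and this $\lg^k$ factor stays within a constant factor of $\lg^k n$ for a constant fraction of the levels (for instance, the top half), so summing over the $\log_b n$ levels gives the following.

Solution: $T(n) = \Theta(n^{\log_b a} \lg^{k+1} n)$.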

Recursion tree (figure): level sums f(n), $a f(n/b)$, $a^2 f(n/b^2)$, …, $n^{\log_b a} T(1)$; all levels are the same.

CASE 2 (k = 0): The weight is approximately the same on each of the $\log_b n$ levels, giving $\Theta(n^{\log_b a} \lg n)$.
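For k = 0 the calculation is short. Assuming n is an exact power of b and f(n) equals $n^{\log_b a}$ exactly, every level contributes the same amount, because $a / b^{\log_b a} = 1$:

$$
\sum_{i=0}^{\log_b n - 1} a^i \left(\frac{n}{b^i}\right)^{\log_b a}
= n^{\log_b a} \sum_{i=0}^{\log_b n - 1} \left(\frac{a}{b^{\log_b a}}\right)^{i}
= n^{\log_b a} \log_b n,
$$

so $T(n) = \Theta(n^{\log_b a}) + n^{\log_b a} \log_b n = \Theta(n^{\log_b a} \lg n)$.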

Case 3: since c < 1, the level sums $a^i f(n/b^i) \le c^i f(n)$ decrease geometrically, hence their total is Θ(f(n)).

Compare f(n) with $n^{\log_b a}$:

3. $f(n) = \Omega(n^{\log_b a + \epsilon})$ for some constant ε > 0.

• f(n) grows polynomially faster than $n^{\log_b a}$ (by an $n^{\epsilon}$ factor), and f(n) satisfies the regularity condition that $a f(n/b) \le c f(n)$ for some constant c < 1.

Solution: $T(n) = \Theta(f(n))$.

Recursion tree (figure): level sums f(n), $a f(n/b)$, $a^2 f(n/b^2)$, …, $n^{\log_b a} T(1)$ at height $h = \log_b n$.

CASE 3: The weight decreases geometrically from the root to the leaves (by the regularity condition $a f(n/b) \le c f(n)$ with c < 1). The root holds a constant fraction of the total weight, giving Θ(f(n)).
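The geometric decrease can be written out by iterating the regularity condition. This sketch assumes the inequality $a f(n/b) \le c f(n)$ holds at every subproblem size that appears (CLRS handles the small sizes separately):

$$
a^i f\left(\frac{n}{b^i}\right) \le c\, a^{i-1} f\left(\frac{n}{b^{i-1}}\right) \le \cdots \le c^i f(n)
\quad\Longrightarrow\quad
f(n) \le \sum_{i=0}^{\log_b n - 1} a^i f\left(\frac{n}{b^i}\right) \le f(n) \sum_{i=0}^{\infty} c^i = \frac{f(n)}{1 - c}.
$$

Since $f(n) = \Omega(n^{\log_b a + \epsilon})$, the leaf cost $\Theta(n^{\log_b a})$ is O(f(n)), so $T(n) = \Theta(f(n))$.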

Examples for the Master Theorem

The Karatsuba recurrence has a = 3, b = 2, f(n) = cn. Here $n^{\log_2 3} \approx n^{1.585}$, so $f(n) = O(n^{\log_2 3 - \epsilon})$ (for example with ε = 0.5). Case 1 applies, and so $T(n) = \Theta(n^{\log_2 3})$, as we found.

The mergesort recurrence has a = 2, b = 2, f(n) = n. Here $n^{\log_2 2} = n = f(n)$, so case 2 applies, and T(n) = Θ(n lg n).

Finally, a recurrence like $T(n) = 3T(n/2) + n^2$ gives rise to case 3. In this case $f(n) = n^2 = \Omega(n^{\log_2 3 + \epsilon})$, and $3f(n/2) = 3(n/2)^2 = (3/4)n^2 \le cn^2$ for c = 3/4, so $T(n) = \Theta(n^2)$.
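Under the assumptions n = 2^h, T(1) = 1, and c = 1 for Karatsuba, each of these three examples can be solved exactly by summing its recursion tree. The sketch below (the helper `t_pow2` is hypothetical) checks those closed forms against the recurrences themselves. Each closed form has the leading term predicted by the master theorem: $3n^{\lg 3} - 2n$, $n \lg n + n$, and $4n^2 - 3n^{\lg 3}$.

```python
def t_pow2(h, a, f):
    """T(2^h) for T(n) = a T(n/2) + f(n), with the assumed base case T(1) = 1."""
    if h == 0:
        return 1
    return a * t_pow2(h - 1, a, f) + f(2 ** h)


for h in range(12):
    n = 2 ** h
    # Case 1 (Karatsuba, c = 1): 3^(h+1) - 2^(h+1) = 3 n^(lg 3) - 2n
    assert t_pow2(h, 3, lambda m: m) == 3 ** (h + 1) - 2 ** (h + 1)
    # Case 2 (mergesort): n lg n + n
    assert t_pow2(h, 2, lambda m: m) == n * h + n
    # Case 3 (3T(n/2) + n^2): 4^(h+1) - 3^(h+1) = 4 n^2 - 3 n^(lg 3)
    assert t_pow2(h, 3, lambda m: m * m) == 4 ** (h + 1) - 3 ** (h + 1)
```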

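Note that the master theorem does not cover all cases. In particular, it does not cover the case

$T(n) = 2T(n/2) + n/\lg n$.

Here $n^{\log_2 2} = n$. Case 1 does not apply, because f(n) = n/lg n is smaller than n only by a logarithmic factor, not by a polynomial factor $n^{\epsilon}$; case 2 does not apply, because f(n) is not $\Theta(n \lg^k n)$ for any k ≥ 0; and case 3 does not apply, because f(n) is not even as large as n.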
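The recursion-tree sum still works when the theorem does not. As a worked example, assume n = 2^h, so that $\lg(n/2^i) = h - i$; the internal levels of $T(n) = 2T(n/2) + n/\lg n$ then sum to a harmonic series:

$$
\sum_{i=0}^{h-1} 2^i \cdot \frac{n/2^i}{\lg(n/2^i)}
= \sum_{i=0}^{h-1} \frac{n}{h - i}
= n \sum_{j=1}^{h} \frac{1}{j}
= n H_h
= \Theta(n \lg h)
= \Theta(n \lg\lg n).
$$

Adding the Θ(n) leaf cost gives $T(n) = \Theta(n \lg\lg n)$, which lies strictly between the Θ(n) of case 1 and the Θ(n lg n) of case 2.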

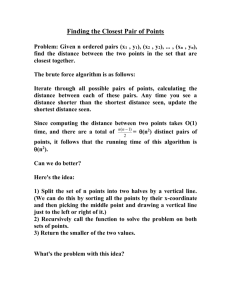

Closest pair problem

Input: A set of points P = {p1, …, pn} in two dimensions.

Output: The pair of points pi, pj that minimizes the Euclidean distance between them.

Distances

Euclidean distance between $(x_1, y_1)$ and $(x_2, y_2)$:

$$
d\big((x_1, y_1), (x_2, y_2)\big) = \sqrt{(x_1 - x_2)^2 + (y_1 - y_2)^2}
$$


Divide and Conquer

An $O(n^2)$-time algorithm is easy: compute the distance of every pair.

Assumptions:

No two points have the same x-coordinate.

No two points have the same y-coordinate.

How do we solve this problem in 1 dimension?

Sort the numbers and walk from left to right to find the minimum gap between neighbors.
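Both easy algorithms are short enough to write out. This is a minimal sketch with hypothetical function names: the brute-force version tries all $\binom{n}{2}$ pairs of 2-D points (using `math.dist` from the Python standard library, 3.8+), and the 1-D version relies on the fact that, after sorting, the closest two numbers must be adjacent.

```python
import math
from itertools import combinations


def closest_pair_brute(points):
    """O(n^2): try every pair of 2-D points; returns (distance, p, q)."""
    return min((math.dist(p, q), p, q) for p, q in combinations(points, 2))


def closest_gap_1d(xs):
    """O(n lg n) in 1-D: sort, then the minimum gap is between neighbours."""
    s = sorted(xs)
    return min(right - left for left, right in zip(s, s[1:]))
```

For example, `closest_gap_1d([7, 1, 10, 4, 5])` sorts to `[1, 4, 5, 7, 10]` and returns 1. The 1-D trick does not carry over to 2-D: two points can be adjacent in x-order yet far apart in y.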

Divide and Conquer

Divide and conquer has a chance to do better than $O(n^2)$.

We can first sort the points by their x-coordinates, and separately by their y-coordinates.


Divide and Conquer for the Closest Pair Problem

Divide: split P by the x-median into a left half L and a right half R.

Conquer: recursively solve L and R, obtaining the closest-pair distances δ1 (in L) and δ2 (in R).

Combination I

Take the smaller of δ1 and δ2: δ = min(δ1, δ2).

Combination II

Is there a point in L and a point in R whose distance is smaller than δ = min(δ1, δ2)?

If the answer is “no”, then we are done!

If the answer is “yes”, then the closest such pair is the closest pair for the entire set.

How do we determine this?


We need only consider the narrow band of points within distance δ of the dividing line; this band can be extracted in O(n) time.

Denote the set of points in this band by S, and let Sy be the list of S sorted by y-coordinate.

There exists a point in L and a point in R whose distance is less than δ if and only if there exist two points in S whose distance is less than δ (two points on the same side are at least δ apart).

If S is the whole set, did we gain anything?

CLAIM: If s and t in S have the property that ||st|| < δ, then s and t are within 15 positions of each other in the sorted list Sy.

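Divide the band into boxes of size δ/2 by δ/2. There is at most one point in each box: two points in the same box would lie on the same side of the dividing line and within δ/√2 < δ of each other, contradicting the definition of δ. Each row of boxes across the band (width 2δ) therefore holds at most 4 points, so if s and t were 16 or more positions apart in Sy, at least three full rows would lie between them, and their vertical distance alone would be at least 3δ/2 > δ.

Thus s and t cannot be too far apart.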

Closest-Pair

Preprocessing:
  Construct Px and Py, the lists of P sorted by x- and by y-coordinate

Closest-Pair(P, Px, Py)
  Base case: if |P| ≤ 3, compute the answer by brute force
  Divide:
    Construct L, Lx, Ly and R, Rx, Ry
  Conquer:
    Let δ1 = Closest-Pair(L, Lx, Ly)
    Let δ2 = Closest-Pair(R, Rx, Ry)
  Combination:
    Let δ = min(δ1, δ2)
    Construct S and Sy
    For each point in Sy, check each of the next 15 points down the list
      If the distance is less than δ, update δ to this smaller distance
  Return δ

Complexity Analysis

Preprocessing takes O(n lg n) time.

Divide takes O(n) time.

Conquer takes 2T(n/2) time.

Combination takes O(n) time.

T(n) = 2T(n/2) + cn, the mergesort recurrence, so T(n) = O(n lg n) by case 2 of the master theorem.

So in total the algorithm takes O(n lg n) time.

Matrix Multiplication

Suppose we multiply two N×N matrices together.

The regular method uses N×N×N = $N^3$ multiplications: $O(N^3)$.
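For reference, the regular method is the familiar triple loop. This sketch represents matrices as Python lists of lists (an assumption for illustration); the innermost line runs N·N·N times, one scalar multiplication each.

```python
def mat_mul(A, B):
    """Regular method: N*N*N scalar multiplications for N x N matrices."""
    n = len(A)
    C = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            for k in range(n):
                C[i][j] += A[i][k] * B[k][j]
    return C
```

For example, `mat_mul([[1, 2], [3, 4]], [[5, 6], [7, 8]])` returns `[[19, 22], [43, 50]]`.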

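Can we Divide and Conquer?

Split each matrix into four (N/2)×(N/2) blocks:

$$
A = \begin{pmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{pmatrix}, \quad
B = \begin{pmatrix} B_{11} & B_{12} \\ B_{21} & B_{22} \end{pmatrix}, \quad
C = A \cdot B = \begin{pmatrix} C_{11} & C_{12} \\ C_{21} & C_{22} \end{pmatrix}
$$

C11 = A11*B11 + A12*B21

C12 = A11*B12 + A12*B22

C21 = A21*B11 + A22*B21

C22 = A21*B12 + A22*B22

Complexity: $T(N) = 8T(N/2) + O(N^2) = O(N^{\log_2 8}) = O(N^3)$

No improvement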
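The block recursion can be sketched directly from the four equations above. This assumes N is a power of 2 and uses lists of lists; the helper names are hypothetical. Each call makes 8 recursive half-size products plus O(N^2) additions, which is exactly the recurrence $T(N) = 8T(N/2) + O(N^2)$.

```python
def _add(X, Y):
    """Entrywise sum of two equal-size square matrices."""
    return [[x + y for x, y in zip(r, s)] for r, s in zip(X, Y)]


def _quadrants(M):
    """Split a 2k x 2k matrix into its k x k blocks M11, M12, M21, M22."""
    h = len(M) // 2
    return ([r[:h] for r in M[:h]], [r[h:] for r in M[:h]],
            [r[:h] for r in M[h:]], [r[h:] for r in M[h:]])


def block_mul(A, B):
    """Divide and conquer with 8 half-size products (N a power of 2)."""
    if len(A) == 1:
        return [[A[0][0] * B[0][0]]]
    A11, A12, A21, A22 = _quadrants(A)
    B11, B12, B21, B22 = _quadrants(B)
    C11 = _add(block_mul(A11, B11), block_mul(A12, B21))
    C12 = _add(block_mul(A11, B12), block_mul(A12, B22))
    C21 = _add(block_mul(A21, B11), block_mul(A22, B21))
    C22 = _add(block_mul(A21, B12), block_mul(A22, B22))
    # Glue the four blocks back together, row by row
    return ([r1 + r2 for r1, r2 in zip(C11, C12)] +
            [r1 + r2 for r1, r2 in zip(C21, C22)])
```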

Strassen’s Matrix Multiplication

P1 = (A11 + A22) * (B11 + B22)

P2 = (A21 + A22) * B11

P3 = A11 * (B12 - B22)

P4 = A22 * (B21 - B11)

P5 = (A11 + A12) * B22

P6 = (A21 - A11) * (B11 + B12)

P7 = (A12 - A22) * (B21 + B22)

C11 = P1 + P4 - P5 + P7

C12 = P3 + P5

C21 = P2 + P4

C22 = P1 + P3 - P2 + P6

And do this recursively as usual.

Volker Strassen
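Here is a sketch of the recursion with Strassen's seven products, written directly from the formulas above. It assumes N is a power of 2 and uses lists of lists with hypothetical helper names; a practical implementation would switch to the regular method below some cutoff size instead of recursing down to 1×1.

```python
def _add(X, Y):
    return [[x + y for x, y in zip(r, s)] for r, s in zip(X, Y)]


def _sub(X, Y):
    return [[x - y for x, y in zip(r, s)] for r, s in zip(X, Y)]


def _quadrants(M):
    """Split a 2k x 2k matrix into its k x k blocks M11, M12, M21, M22."""
    h = len(M) // 2
    return ([r[:h] for r in M[:h]], [r[h:] for r in M[:h]],
            [r[:h] for r in M[h:]], [r[h:] for r in M[h:]])


def strassen(A, B):
    """Strassen's method: 7 recursive half-size products (N a power of 2)."""
    if len(A) == 1:
        return [[A[0][0] * B[0][0]]]
    A11, A12, A21, A22 = _quadrants(A)
    B11, B12, B21, B22 = _quadrants(B)
    P1 = strassen(_add(A11, A22), _add(B11, B22))
    P2 = strassen(_add(A21, A22), B11)
    P3 = strassen(A11, _sub(B12, B22))
    P4 = strassen(A22, _sub(B21, B11))
    P5 = strassen(_add(A11, A12), B22)
    P6 = strassen(_sub(A21, A11), _add(B11, B12))
    P7 = strassen(_sub(A12, A22), _add(B21, B22))
    C11 = _add(_sub(_add(P1, P4), P5), P7)   # P1 + P4 - P5 + P7
    C12 = _add(P3, P5)
    C21 = _add(P2, P4)
    C22 = _add(_sub(_add(P1, P3), P2), P6)   # P1 + P3 - P2 + P6
    return ([r1 + r2 for r1, r2 in zip(C11, C12)] +
            [r1 + r2 for r1, r2 in zip(C21, C22)])
```

On the 2×2 example `[[1, 2], [3, 4]]` times `[[5, 6], [7, 8]]`, the seven products are 65, 35, -2, 8, 24, 22, -30, and the formulas reassemble them into `[[19, 22], [43, 50]]`.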

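Time analysis

$$
T(n) = 7T(n/2) + O(n^2) = \Theta\left(7^{\lg n}\right) = \Theta\left(n^{\lg 7}\right) = O\left(n^{2.81}\right)
$$

by the Master Theorem (case 1, since $n^2 = O(n^{\lg 7 - \epsilon})$ with $\lg 7 \approx 2.807$).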
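The gap between 8 and 7 recursive products shows up clearly if we count only scalar multiplications at the leaves of the recursion, ignoring the Θ(n^2) additions per level (a simplification for illustration; the helper name is hypothetical). With 8 products the count is $8^{\lg n} = n^3$; with 7 it is $7^{\lg n} = n^{\lg 7}$.

```python
import math


def scalar_mults(n, products):
    """Scalar multiplications M(n) = products * M(n/2), M(1) = 1, n a power of 2.

    products = 8 for the plain block recursion, 7 for Strassen;
    additions are not counted.
    """
    return 1 if n == 1 else products * scalar_mults(n // 2, products)


n = 1024
print(scalar_mults(n, 8))   # 1073741824 = n^3
print(scalar_mults(n, 7))   # 282475249 = 7^10 = n^(lg 7)
```

At n = 1024 this is roughly a factor of 3.8 fewer multiplications, and the ratio $(8/7)^{\lg n}$ keeps growing with n.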

Best bound: $O(n^{2.376})$ by Coppersmith and Winograd; later work has lowered the exponent slightly, to about 2.37.

Best known (trivial) lower bound: $\Omega(n^2)$.

Open: what is the true complexity of matrix

multiplication?