
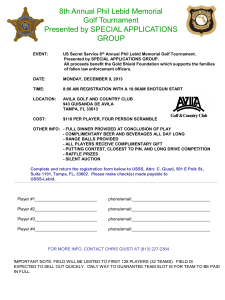

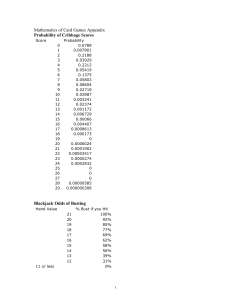

By: Jonathan Quenzer

The aim is to have a computer learn how to play Blackjack through reinforcement learning.
◦ The computer starts off with no memory. After each hand is played, the computer learns more.
◦ The goal is for the computer to make the best possible decision about how much to bet and when to hit or stay.

Splitting hands and doubling down are not included. Leaving them out decreases the player's odds of winning.

Without card counting, the dealer has a 5-8% advantage, depending on the specific rules. Using correct strategy and card counting, the player can obtain at most a 2% advantage over the dealer.

I wrote a Matlab program to simulate Blackjack. Feature vectors were generated by running the program and analyzing each hand played. All of the features were scaled to have a mean of ½, a minimum of 0, and a maximum of 1.

Hand History

| Feature | Description |
|---|---|
| Ace, 2, …, 10 | The number of cards remaining in the deck for each card value |
| PT | Player total without aces |
| PA | Number of aces in the player's hand |
| DSC | The dealer's showing card |
| Hit/Stay | +1 = Hit, -1 = Stay |
| Win | 1.5 = Blackjack, 1 = Win, 0 = Lose |
| Lose | 1 = Lose, 0 = Win |
| Push | 1 = Push, 0 = Win |

Feature Set: Hit/Stay

| Feature | Description |
|---|---|
| Ace, 2, …, 10 | The number of cards remaining in the deck for each card value |
| CC | Calculated card count (Hi-Opt II) |
| PT | Player total without aces |
| PA | Number of aces in the player's hand |
| DSC | The dealer's showing card |
| Classification | +1 = Hit, -1 = Stay: the Hit/Stay value from the Hand History, reversed if the player lost |

Feature Set: Bet Amount

| Feature | Description |
|---|---|
| Ace, 2, …, 10 | The number of cards remaining in the deck for each card value |
| CC | Calculated card count (Hi-Opt II) |
| Classification (Bet Min/Max) | +1.5 if the hand was a Blackjack, +1 if won, -1 if lost |

Example of 5 nearest neighbors: the neighbors' labels sum to +3 (four Hit and one Stay), so the decision is to Hit.

◦ The computer started with no knowledge.
◦ The player gained an advantage over the dealer using 10 nearest neighbors.
◦ The computer simulated three players playing 1000 hands.
◦ The computer started with a large feature set from 5000 hands.
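The Hi-Opt II card count, the feature scaling, the Hit/Stay labeling rule and the nearest-neighbor vote described above can be sketched in Python. This is a minimal sketch, not the author's Matlab program. It assumes a single 52-card deck, a running (not true) count, plain min-max scaling to [0, 1], Euclidean distance between feature vectors, and a Stay (or minimum bet) whenever the neighbor sum is zero or negative. All function names (`running_count`, `fit_scaler`, `hit_stay_label`, `nearest`, `decide_hit`, `decide_bet`) are hypothetical.

```python
import numpy as np

# Hi-Opt II tags by card value (index 0 = Ace, index 9 = any ten-valued card):
# 2, 3, 6, 7 -> +1; 4, 5 -> +2; Ace, 8, 9 -> 0; ten-valued cards -> -2.
HI_OPT_II = np.array([0, 1, 1, 2, 2, 1, 1, 0, 0, -2])

# Assumption: one 52-card deck (four of each value, sixteen ten-valued cards).
FULL_DECK = np.array([4] * 9 + [16])


def running_count(remaining):
    """Hi-Opt II running count; remaining[i] = cards of value i + 1 left (index 0 = Ace)."""
    seen = FULL_DECK - np.asarray(remaining)
    return int(seen @ HI_OPT_II)


def fit_scaler(X):
    """Return a function mapping each feature column onto [0, 1] using the training min/max."""
    X = np.asarray(X, dtype=float)
    lo, hi = X.min(axis=0), X.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)  # constant columns would otherwise divide by zero
    return lambda Z: (np.asarray(Z, dtype=float) - lo) / span


def hit_stay_label(action, lost):
    """Label a hand: +1 = Hit, -1 = Stay, reversed when the player lost the hand."""
    return -action if lost else action


def nearest(X_train, x, k):
    """Indices of the k training rows closest to x (Euclidean distance, an assumption)."""
    d = np.linalg.norm(np.asarray(X_train) - np.asarray(x), axis=1)
    return np.argsort(d)[:k]


def decide_hit(X_train, labels, x, k=5):
    """Sum the +1/-1 labels of the k nearest hands; Hit on a positive sum, else Stay."""
    vote = int(np.sum(np.asarray(labels)[nearest(X_train, x, k)]))
    return ("Hit" if vote > 0 else "Stay"), vote


def decide_bet(X_train, outcomes, x, k=5):
    """Outcomes: +1.5 Blackjack, +1 win, -1 loss. Bet Max on a positive sum (hypothetical rule)."""
    score = float(np.sum(np.asarray(outcomes)[nearest(X_train, x, k)]))
    return "Max" if score > 0 else "Min"


if __name__ == "__main__":
    # Toy, already-scaled 2-D features; the five closest rows to the query are 0-4.
    X = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [0.1, 0.1],
                  [0.05, 0.05], [1.0, 1.0], [0.9, 1.0]])
    y = np.array([1, 1, 1, -1, 1, -1, -1])
    print(decide_hit(X, y, [0.05, 0.05]))  # ('Hit', 3)
```

In the toy run, the five nearest hands are labeled Hit, Hit, Hit, Stay, Hit, so the vote is +3 and the decision is Hit, mirroring the 5-nearest-neighbor example. Summing the ±1 labels is equivalent to a majority vote; with an even k such as 10, a tie (sum of 0) is possible, which this sketch resolves as Stay.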