The Wumpus World!

ACM Class of 2012

金汶功

Hunt the wumpus!

Description

• Performance measure

• Environment

• Actuators

• Sensors: Stench, Breeze, Glitter, Bump, Scream

An Example

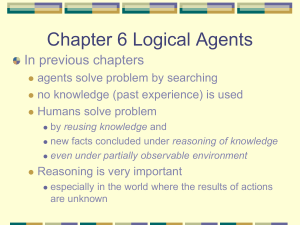

Reasoning via logic

Semantics

• Semantics: the relationship between logic and the real world

• Model: 𝑀(α), the set of all models in which α is true

• Entailment: 𝛼 ⊨ β iff M(𝛼) ⊆ 𝑀(𝛽)

Models

• KB: valid sentences

• 𝛼1 : “There is no pit in [1,2]”

• 𝛼2 : “There is no pit in [2,2]”
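The entailment test M(KB) ⊆ M(α) can be checked by brute force on a small case. The sketch below is only illustrative: it assumes the knowledge base records no breeze in [1,1] and a breeze in [2,1] (the slides do not state which percepts are used here), and the names `kb`, `models`, and `entails` are hypothetical.

```python
from itertools import product

# Squares whose pit status is unknown in this small illustration.
SQUARES = ["1,2", "2,2", "3,1"]

def kb(m):
    # Assumed percepts: no breeze in [1,1], breeze in [2,1].
    no_breeze_11 = not m["1,2"]          # [2,1] is visited, so only [1,2] matters
    breeze_21 = m["2,2"] or m["3,1"]     # some neighbour of [2,1] holds a pit
    return no_breeze_11 and breeze_21

def models(sentence):
    # M(sentence): every truth assignment in which the sentence holds.
    return {vals for vals in product([False, True], repeat=len(SQUARES))
            if sentence(dict(zip(SQUARES, vals)))}

def entails(a, b):
    # a entails b iff M(a) is a subset of M(b).
    return models(a) <= models(b)

alpha1 = lambda m: not m["1,2"]   # "There is no pit in [1,2]"
alpha2 = lambda m: not m["2,2"]   # "There is no pit in [2,2]"

print(entails(kb, alpha1), entails(kb, alpha2))
```

Under these assumed percepts the knowledge base entails 𝛼1 but not 𝛼2, because one model of the knowledge base places a pit in [2,2].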

Agent

[Diagram: knowledge-based agent. Labels: Sensors, Tell, Knowledge base (Axioms, Current States), Ask, Model checking, Answer, Tell, Actuators]

Efficient Model Checking

• DPLL

• Early termination

• Pure symbol heuristic

• Unit clause heuristic

• Component analysis

• …
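A minimal DPLL sketch showing early termination, the unit clause heuristic, and the pure symbol heuristic. It assumes clauses in CNF written as lists of non-zero integers (a negative integer is a negated symbol); this encoding and the function name `dpll` are choices made for the illustration, and component analysis is not included.

```python
def dpll(clauses, assignment=None):
    """Return a satisfying (possibly partial) assignment, or None if unsatisfiable."""
    assignment = dict(assignment or {})
    simplified = []
    for clause in clauses:
        # Early termination: a clause is true as soon as one literal is true.
        if any(assignment.get(abs(lit)) == (lit > 0) for lit in clause):
            continue
        rest = [lit for lit in clause if abs(lit) not in assignment]
        if not rest:
            return None  # Early termination: every literal is false.
        simplified.append(rest)
    if not simplified:
        return assignment  # All clauses are already satisfied.

    # Unit clause heuristic: a one-literal clause forces its value.
    for clause in simplified:
        if len(clause) == 1:
            lit = clause[0]
            return dpll(simplified, {**assignment, abs(lit): lit > 0})

    # Pure symbol heuristic: a symbol with a single polarity can be set safely.
    literals = {lit for clause in simplified for lit in clause}
    for lit in literals:
        if -lit not in literals:
            return dpll(simplified, {**assignment, abs(lit): lit > 0})

    # Otherwise branch on the first unassigned symbol.
    var = abs(simplified[0][0])
    for value in (True, False):
        result = dpll(simplified, {**assignment, var: value})
        if result is not None:
            return result
    return None

# (x1 or x2) and (not x1 or x3) and (not x3)
print(dpll([[1, 2], [-1, 3], [-3]]))
```

To answer an Ask query with this routine, test whether KB ∧ ¬α is unsatisfiable: `dpll` returning `None` on that clause set means KB ⊨ α.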

Drawbacks

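• Propositional entailment is co-NP-complete, so model checking can take exponential time in the worst case

• The knowledge base may entail nothing useful about a square, so logic alone cannot choose a move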

Probabilistic Reasoning

Full joint probability distribution

• P(X, Y) = P(X|Y)P(Y)

• X: {1,2,3,4} -> {0.1,0.2,0.3,0.4}

• Y: {a,b} -> {0.4, 0.6}

• P(X = 2, Y = a) = P(X = 2|Y = a)P(Y = a)

• The full joint distribution gives the probability of every combination of values
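A small sketch of a full joint table for the X and Y above. The slides give only the marginals, so the code assumes X and Y are independent, which makes P(X = x | Y = y) = P(X = x); with a different conditional distribution the entries would change.

```python
from itertools import product

# Marginals taken from the slide.
p_x = {1: 0.1, 2: 0.2, 3: 0.3, 4: 0.4}
p_y = {"a": 0.4, "b": 0.6}

# ASSUMPTION: X and Y are independent, so P(x, y) = P(x | y) P(y) = P(x) P(y).
joint = {(x, y): p_x[x] * p_y[y] for x, y in product(p_x, p_y)}

print(len(joint))        # one entry per combination of values
print(joint[(2, "a")])   # P(X = 2, Y = a)
```

The table has 4 × 2 = 8 entries that sum to 1, which is why full joint tables grow exponentially with the number of variables.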

Normalization

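• $P(\mathit{cavity} \mid \mathit{toothache}) = \dfrac{P(\mathit{cavity} \land \mathit{toothache})}{P(\mathit{toothache})}$

• $P(\mathit{toothache})$ is a constant

• $P(\mathit{cavity} \mid \mathit{toothache}) = \alpha\, P(\mathit{cavity} \land \mathit{toothache})$

• $\mathbf{P}(\mathit{cavity} \mid \mathit{toothache}) = \alpha \langle 0.12, 0.08 \rangle = \langle 0.6, 0.4 \rangle$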
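Normalization only rescales the unnormalized values so that they sum to 1; the constant α is 1 divided by their sum. A minimal sketch (the helper name `normalize` is illustrative):

```python
def normalize(weights):
    # alpha = 1 / sum(weights); multiply every entry by alpha.
    total = sum(weights)
    return [w / total for w in weights]

print(normalize([0.12, 0.08]))
```

Here α = 1 / (0.12 + 0.08) = 5, so P(toothache) never has to be computed separately.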

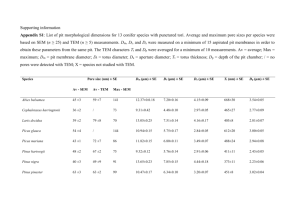

The Wumpus World

• Aim: calculate the probability that each of the three frontier squares [1,3], [2,2], and [3,1] contains a pit.

Full joint distribution

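• $\mathbf{P}(P_{1,1}, \ldots, P_{4,4}, B_{1,1}, B_{1,2}, B_{2,1}) = \mathbf{P}(B_{1,1}, B_{1,2}, B_{2,1} \mid P_{1,1}, \ldots, P_{4,4})\, \mathbf{P}(P_{1,1}, \ldots, P_{4,4})$

• $\mathbf{P}(P_{1,1}, \ldots, P_{4,4}) = \prod_{i,j} \mathbf{P}(P_{i,j})$

• Every room contains a pit with probability 0.2

• $P(P_{1,1}, \ldots, P_{4,4}) = 0.2^{n} \times 0.8^{16-n}$ for a configuration with $n$ pits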

How likely is it that [1,3] has a pit?

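• Given observation:

• $b = \lnot b_{1,1} \land b_{1,2} \land b_{2,1}$

• $\mathit{known} = \lnot p_{1,1} \land \lnot p_{1,2} \land \lnot p_{2,1}$

• $\mathbf{P}(P_{1,3} \mid \mathit{known}, b) = \alpha \sum_{\mathit{unknown}} \mathbf{P}(P_{1,3}, \mathit{unknown}, \mathit{known}, b)$

• $2^{12} = 4096$ terms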
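The sum over all unknown squares can be carried out directly by enumeration. The sketch below assumes a 4×4 grid indexed [column, row] where a square is breezy exactly when an adjacent square holds a pit; the function names are illustrative. It loops over the query square together with the 12 unknown squares, so it visits 2 × 4096 configurations.

```python
from itertools import product

PIT_PRIOR = 0.2
SQUARES = [(x, y) for x in range(1, 5) for y in range(1, 5)]
KNOWN_FREE = {(1, 1), (1, 2), (2, 1)}                         # known: no pit
BREEZE_OBS = {(1, 1): False, (1, 2): True, (2, 1): True}      # b

def neighbours(square):
    x, y = square
    steps = ((1, 0), (-1, 0), (0, 1), (0, -1))
    return [(x + dx, y + dy) for dx, dy in steps
            if 1 <= x + dx <= 4 and 1 <= y + dy <= 4]

def pit_posterior(query):
    """P(pit in query | known, b) by summing the full joint distribution."""
    free = [s for s in SQUARES if s not in KNOWN_FREE]
    weight = {True: 0.0, False: 0.0}
    for values in product([False, True], repeat=len(free)):
        pits = {s for s, has_pit in zip(free, values) if has_pit}
        # Discard configurations that contradict the breeze observations.
        if any(any(n in pits for n in neighbours(sq)) != seen
               for sq, seen in BREEZE_OBS.items()):
            continue
        n = len(pits)
        weight[query in pits] += PIT_PRIOR ** n * (1 - PIT_PRIOR) ** (len(free) - n)
    return weight[True] / (weight[True] + weight[False])

print(round(pit_posterior((1, 3)), 2), round(pit_posterior((2, 2)), 2))
```

The prior of the three known squares is the same constant factor in every term, so it is dropped here and absorbed by the normalization.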

Using independence

Simplification

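• $\mathbf{P}(P_{1,3} \mid \mathit{known}, b) = \alpha \sum_{\mathit{unknown}} \mathbf{P}(P_{1,3}, \mathit{known}, b, \mathit{unknown})$

• $= \alpha \sum_{\mathit{unknown}} \mathbf{P}(b \mid P_{1,3}, \mathit{known}, \mathit{unknown})\, \mathbf{P}(P_{1,3}, \mathit{known}, \mathit{unknown})$

• $= \alpha \sum_{\mathit{frontier}} \sum_{\mathit{other}} \mathbf{P}(b \mid P_{1,3}, \mathit{known}, \mathit{frontier}, \mathit{other})\, \mathbf{P}(P_{1,3}, \mathit{known}, \mathit{frontier}, \mathit{other})$

• $= \cdots$

• $= \alpha\, P(\mathit{known})\, \mathbf{P}(P_{1,3}) \sum_{\mathit{frontier}} \mathbf{P}(b \mid P_{1,3}, \mathit{known}, \mathit{frontier})\, P(\mathit{frontier})$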

• Now there are only 4 terms, cheers!
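The simplified formula can be evaluated by hand or with a few lines of code. This sketch takes the frontier to be [2,2] and [3,1] and encodes P(b | P1,3, known, frontier) as 1 when the pit placement explains both breezes and 0 otherwise; the helper names are illustrative.

```python
from itertools import product

PIT_PRIOR = 0.2

def prior(has_pit):
    return PIT_PRIOR if has_pit else 1 - PIT_PRIOR

def explains_breezes(p13, p22, p31):
    # Breeze in [1,2] needs a pit in [1,3] or [2,2];
    # breeze in [2,1] needs a pit in [2,2] or [3,1].
    return (p13 or p22) and (p22 or p31)

def posterior_p13():
    scores = []
    for p13 in (True, False):
        # Sum over the 4 frontier configurations.
        total = sum(prior(p22) * prior(p31)
                    for p22, p31 in product((True, False), repeat=2)
                    if explains_breezes(p13, p22, p31))
        scores.append(prior(p13) * total)
    z = sum(scores)          # normalization; P(known) cancels here
    return [s / z for s in scores]

print([round(p, 2) for p in posterior_p13()])
```

The unnormalized values are 0.2 × (0.04 + 0.16 + 0.16) = 0.072 and 0.8 × (0.04 + 0.16) = 0.16, which normalize to roughly ⟨0.31, 0.69⟩.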

Finally

• $\mathbf{P}(P_{1,3} \mid \mathit{known}, b) = \langle 0.31, 0.69 \rangle$

• [2,2] contains a pit with 86% probability!

• Data structures that exploit independence

Bayesian Network

Simple Example

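Burglary: P(B) = .001

Earthquake: P(E) = .002

Alarm (Bark)

| B | E | P(A) |
|---|---|---|
| true | true | .95 |
| true | false | .94 |
| false | true | .29 |
| false | false | .001 |

John Calls

| Bark | P(J) |
|---|---|
| true | .90 |
| false | .05 |

Mary Calls

| Bark | P(M) |
|---|---|
| true | .70 |
| false | .01 |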

Specification

• Each node corresponds to a random variable

• Acyclic – DAG

• Each node has a conditional probability distribution $\mathbf{P}(X_i \mid \mathit{Parents}(X_i))$
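One possible way to store such a network is a dictionary from each node to its parents and its conditional probability table. The sketch below uses the numbers from the burglary example; the layout of `NETWORK` and the function `joint_probability` are assumptions made for illustration, not a prescribed representation.

```python
# node -> (parents, CPT); the CPT maps a tuple of parent values
# to P(node = True | parents).
NETWORK = {
    "B": ((), {(): 0.001}),
    "E": ((), {(): 0.002}),
    "A": (("B", "E"), {(True, True): 0.95, (True, False): 0.94,
                       (False, True): 0.29, (False, False): 0.001}),
    "J": (("A",), {(True,): 0.90, (False,): 0.05}),
    "M": (("A",), {(True,): 0.70, (False,): 0.01}),
}

def joint_probability(assignment):
    """Multiply P(x_i | parents(X_i)) over all nodes for a full assignment."""
    prob = 1.0
    for node, (parents, cpt) in NETWORK.items():
        p_true = cpt[tuple(assignment[p] for p in parents)]
        prob *= p_true if assignment[node] else 1.0 - p_true
    return prob

# Alarm sounds, both neighbours call, but no burglary and no earthquake.
example = {"B": False, "E": False, "A": True, "J": True, "M": True}
print(joint_probability(example))
```

The network needs only 1 + 1 + 4 + 2 + 2 = 10 numbers, compared with 2^5 − 1 = 31 for the full joint table over five Boolean variables.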

Conditional Independence

Exact Inference

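• $P(b \mid j, m) = \alpha\, P(b, j, m)$

• $= \alpha \sum_{e} \sum_{a} P(b, j, m, e, a)$

• $= \alpha \sum_{e} \sum_{a} P(b)\, P(e)\, P(a \mid b, e)\, P(j \mid a)\, P(m \mid a)$

• $\mathbf{P}(B \mid j, m) = \langle 0.284, 0.716 \rangle$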
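The double sum over e and a is small enough to enumerate directly. This sketch hard-codes the tables from the burglary example and fixes the evidence to j and m both true; the variable and function names are illustrative.

```python
from itertools import product

P_B, P_E = 0.001, 0.002
P_A = {(True, True): 0.95, (True, False): 0.94,
       (False, True): 0.29, (False, False): 0.001}   # P(a | B, E)
P_J = {True: 0.90, False: 0.05}                      # P(j | A)
P_M = {True: 0.70, False: 0.01}                      # P(m | A)

def bern(p_true, value):
    # Probability of a Boolean value given P(value = True).
    return p_true if value else 1.0 - p_true

def posterior_burglary():
    """Return [P(b | j, m), P(not b | j, m)] by inference by enumeration."""
    scores = []
    for b in (True, False):
        total = 0.0
        for e, a in product((True, False), repeat=2):   # hidden variables
            total += (bern(P_B, b) * bern(P_E, e) * bern(P_A[(b, e)], a)
                      * P_J[a] * P_M[a])
        scores.append(total)
    z = sum(scores)
    return [s / z for s in scores]

print([round(p, 3) for p in posterior_burglary()])
```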

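Nodes: P1,3, P2,2, P3,1, known, b

P(P1,3) = 0.2

P(P2,2) = 0.2

P(P3,1) = 0.2

| P1,3 | P2,2 | P3,1 | b |
|---|---|---|---|
| True | True | True | 1 |
| True | True | False | 1 |
| True | False | True | 1 |
| True | False | False | 0 |
| False | True | True | 1 |
| False | True | False | 1 |
| False | False | True | 0 |
| False | False | False | 0 |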
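The table for b does not have to be written by hand: it follows from which squares border the two breezy squares. A small sketch under that adjacency assumption ([1,2] borders [1,3] and [2,2]; [2,1] borders [2,2] and [3,1]; [1,1] is known to be pit-free), with illustrative names:

```python
from itertools import product

def breeze_observation_holds(p13, p22, p31):
    breeze_12 = p13 or p22     # breeze observed in [1,2]
    breeze_21 = p22 or p31     # breeze observed in [2,1]
    return breeze_12 and breeze_21

# Rows in the same order as the table: True before False, P1,3 varying slowest.
cpt_b = {values: int(breeze_observation_holds(*values))
         for values in product((True, False), repeat=3)}

for (p13, p22, p31), value in cpt_b.items():
    print(p13, p22, p31, value)
```

The entries are 0 or 1 because breezes are a deterministic function of pit locations; all of the uncertainty sits in the priors of the pit nodes.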

Approximate Inference

• Markov Chain Monte Carlo

• Gibbs Sampling

• Idea: The long-run fraction of time spent in each state is exactly proportional to its posterior probability.

$P(x_i' \mid \mathit{MarkovBlanket}(X_i)) = \alpha\, P(x_i' \mid \mathit{Parents}(X_i)) \times \prod_{Y_j \in \mathit{Children}(X_i)} P(y_j \mid \mathit{Parents}(Y_j))$
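A minimal Gibbs sampling sketch on the small wumpus network, assuming the three pit nodes have no parents and a single child b with the 0/1 table above. Each step resamples one pit variable from its Markov blanket distribution, which here is prior × P(b | pits). The sample count, seed, and function names are arbitrary choices for the illustration.

```python
import random

PIT_PRIOR = 0.2
VARIABLES = ["P13", "P22", "P31"]

def p_b_given(state):
    # Deterministic CPT of the evidence node b (the only child of each pit node).
    ok = (state["P13"] or state["P22"]) and (state["P22"] or state["P31"])
    return 1.0 if ok else 0.0

def gibbs_p13(n_samples=20000, seed=0):
    """Estimate P(P1,3 | known, b) as the fraction of samples with a pit in [1,3]."""
    rng = random.Random(seed)
    state = {"P13": True, "P22": True, "P31": True}   # start consistent with b
    hits = 0
    for _ in range(n_samples):
        for var in VARIABLES:
            weights = {}
            for value in (True, False):
                trial = {**state, var: value}
                prior = PIT_PRIOR if value else 1 - PIT_PRIOR
                weights[value] = prior * p_b_given(trial)   # Markov blanket term
            p_true = weights[True] / (weights[True] + weights[False])
            state[var] = rng.random() < p_true
        hits += state["P13"]
    return hits / n_samples

print(gibbs_p13())
```

The estimate should settle near the exact value of about 0.31 as the number of samples grows. Starting from a state consistent with the evidence keeps the normalizing sum non-zero at every step.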

Reference

• http://zh.wikipedia.org/wiki/Hunt_the_Wumpus

• http://zh.wikipedia.org/wiki/%E8%B4%9D%E5%8F%B6%E6%96%AF%E7%BD%91%E7%BB%9C

• Stuart Russell, Peter Norvig. Artificial Intelligence: A Modern Approach, 3rd edition, 2010