ppt

advertisement

Uninformed Search

Reading: Chapter 3 by today, Chapter 4.1-4.3 by

Wednesday, 9/12

Homework #2 will be given out on Wednesday

DID YOU TURN IN YOUR SURVEY? USE

COURSEWORKS AND TAKE THE TEST

Pending Questions

Class web page:

http://www.cs.columbia.edu/~kathy/cs

4701

Reminder: Don’t forget assignment

0 (survey). See courseworks

2

When solving problems, dig at

the roots instead of just hacking

at the leaves.

Anthony J. D'Angelo, The College

Blue Book

3

Agenda

Introduction of search as a problem-solving

paradigm

Uninformed search algorithms

Example using the 8-puzzle

Formulation of another example: sodoku

Transition to greedy search, one method for

informed search

4

Goal-based Agents

Agents that work towards a goal

Select the action that more likely achieve

the goal

Sometimes an action directly

achieves a goal; sometimes a series

of actions are required

5

Problem solving as search

Goal formulation

Problem formulation

Actions

States

6

Uninformed

Search through the space of

possible solutions

Use no knowledge about which path

is likely to be best

7

Characteristics

Before actually doing something to solve

a puzzle, an intelligent agent explores

possibilities “in its head”

Search = “mental exploration of

possibilities”

Making a good decision requires exploring

several possibilities

Execute the solution once it’s found

8

Formulating Problems as Search

Given an initial state and a goal, find the sequence

of actions leading through a sequence of states to

the final goal state.

Terms:

Successor function: given action and state, returns

{action, successors}

State space: the set of all states reachable from the

initial state

Path: a sequence of states connected by actions

Goal test: is a given state the goal state?

Path cost: function assigning a numeric cost to each path

Solution: a path from initial state to goal state

9

Example: the 8-puzzle

How would you use AI techniques

to solve the 8-puzzle problem?

10

8-puzzle URLS

http://www.permadi.com/java/puzzle8

http://www.cs.rmit.edu.au/AISearch/Product

11

8 Puzzle

States: integer locations of tiles

(0 1 2 3 4 5 6 7 B)

(0 1 2)(3 4 5) (6 7 B)

Action: left, right, up, down

Goal test: is current state = (0 1 2 3 4 5

6 7 B)?

Path cost: same for all paths

Successor function: given {up, (5 2 3 B 1

8 4 7 6)} -> ?

What would the state space be for this

problem?

12

What are we searching?

State space vs. search space

Nodes

State represents a physical configuration

Search space represents a tree/graph of

possible solutions… an abstract configuration

Abstract data structure in search space

Parent, children, depth, path cost, associated

state

Expand

A function that given a node, creates all

children nodes, using successsor function

13

14

Data structures:

Searching data

AI: Searching solutions

15

Uninformed Search Strategies

The strategy gives the order in which

the search space is searched

Breadth first

Depth first

Depth limited search

Iterative deepening

Uniform cost

16

Breadth first Algorithm

17

18

19

20

21

Depth-first Algorithm

22

23

24

25

26

27

28

29

30

Complexity Analysis

Completeness: is the algorithm

guaranteed to find a solution when there

is one?

Optimality: Does the strategy find the

optimal solution?

Time: How long does it take to find a

solution?

Space: How much memory is needed to

perform the search?

31

Cost variables

Time: number of nodes generated

Space: maximum number of nodes stored

in memory

Branching factor: b

Depth: d

Maximum number of successors of any node

Depth of shallowest goal node

Path length: m

Maximum length of any path in the state

space

32

Can we combine benefits of both?

Depth limited

Select some limit in depth to explore the

problem using DFS

How do we select the limit?

Iterative deepening

DFS with depth 1

DFS with depth 2 up to depth d

33

34

35

36

Properties of the task environment?

Fully observable

Deterministic

Episodic

Static

Discrete

Single agent

Partially

observable

Stochastic

Sequential

Dynamic

Continuous

Multiagent

37

Three types of incompleteness

Sensorless problems

Contingency problems

Adversarial problems

Exploration problems

38

End of Class Questions?

39

Sodoku

40

Informed Search

Heuristics

Suppose 8-puzzle off by one

Is there a way to choose the best

move next?

Good news: Yes!

We can use domain knowledge or

heuristic to choose the best move

42

0

1

2

3

4

5

6

7

43

Nature of heuristics

Domain knowledge: some

knowledge about the game, the

problem to choose

Heuristic: a guess about which is

best, not exact

Heuristic function, h(n): estimate

the distance from current node to

goal

44

Heuristic for the 8-puzzle

# tiles out of place (h1)

Manhattan distance (h2)

Sum of the distance of each tile from its

goal position

Tiles can only move up or down city

blocks

45

0

1

2

3

4

5

6

7

Goal State

0

1

2

0

2

5

3

4

5

3

1

7

7

6

6

h1=1

h2=1

4

h1=5

h2=1+1+1+2+2=7

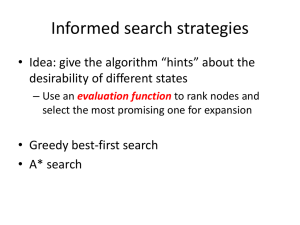

Best first searches

A class of search functions

Choose the “best” node to expand

next

Use an evaluation function for each

node

Estimate of desirability

Implementation: sort fringe, open

in order of desirability

Today: greedy search, A* search

47

Greedy search

Evaluation function = heuristic

function

Expand the node that appears to be

closest to the goal

48

Greedy Search

OPEN = start node; CLOSED = empty

While OPEN is not empty do

Remove leftmost state from OPEN, call it X

If X = goal state, return success

Put X on CLOSED

SUCCESSORS = Successor function (X)

Remove any successors on OPEN or CLOSED

Compute heuristic function for each node

Put remaining successors on either end of OPEN

Sort nodes on OPEN by value of heuristic function

End while

49

End of Class Questions?

50