PRINCIPLES OF LANGUAGE ASSESSMENT Riko Arfiyantama

advertisement

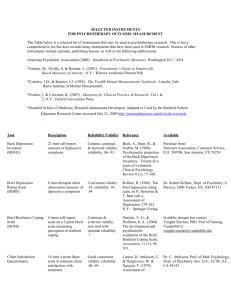

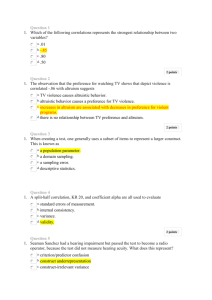

Riko Arfiyantama, Ratnawati, Olivia

JOB DESCRIPTION
Speaker I
- Practicality
- Reliability
- Validity
Speaker II
- Authenticity
- Washback
Speaker III
- Applying principles to the evaluation of classroom tests

HOW DO YOU KNOW IF A TEST IS EFFECTIVE?
1. Practicality
2. Reliability
3. Validity
4. Authenticity
5. Washback

PRACTICALITY
An effective test is practical. It:
- is not excessively expensive,
- stays within appropriate time constraints,
- is relatively easy to administer, and
- has a scoring/evaluation procedure that is specific and time-efficient.

RELIABILITY
A reliable test is consistent and dependable. If you give the same test to the same student, or to matched students, on two different occasions, the test should yield similar results.
Test I: first occasion
Test II: second occasion

POSSIBLE SOURCES OF UNRELIABILITY
Fluctuations in:
- the students
- scoring
- test administration
- the test itself

STUDENT-RELATED RELIABILITY
Fluctuations in the student can be caused by the following factors:
- temporary illness
- fatigue
- a “bad day”
- anxiety
- other physical and psychological factors

RATER RELIABILITY
Fluctuations in scoring can be caused by the following factors:
- human error (e.g. the teacher’s fatigue)
- subjectivity
- bias toward “good” or “bad” students
- lack of attention to scoring criteria
- inexperience
- inattention

TEST ADMINISTRATION RELIABILITY
Fluctuations in administration can be caused by the following factors:
- the conditions (place) of test administration, e.g. a listening test becomes unclear because of street noise
- photocopying variations
- the amount of light in different parts of the room
- variations in temperature
- the condition of desks and chairs

TEST RELIABILITY
Fluctuations in the test itself can be caused by the following factors:
- time limitations in the test
- excessive test length: if the test is too long, test-takers may become fatigued
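
The reliability slide describes giving the same test on two occasions (Test I and Test II) and expecting similar results. One common way to put a number on that consistency, not stated on the slides, is a test-retest correlation coefficient. The sketch below assumes Pearson’s r as the index and uses made-up scores for five hypothetical students; the function name `pearson_r` is also hypothetical. A value near 1 means the two sets of scores rise and fall together.

```python
from math import sqrt


def pearson_r(first, second):
    """Pearson correlation between two equal-length lists of scores.

    Assumption: Pearson's r is used here as the test-retest reliability index.
    """
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    n = len(first)
    mean_a = sum(first) / n
    mean_b = sum(second) / n
    # Covariance numerator: how the two occasions' deviations move together.
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(first, second))
    # Spread of scores on each occasion.
    var_a = sum((a - mean_a) ** 2 for a in first)
    var_b = sum((b - mean_b) ** 2 for b in second)
    if var_a == 0 or var_b == 0:
        raise ValueError("scores must vary on both occasions")
    return cov / sqrt(var_a * var_b)


# Hypothetical scores for the same five students on two occasions.
test_1 = [72, 85, 60, 90, 78]  # Test I: first occasion
test_2 = [70, 88, 63, 91, 75]  # Test II: second occasion

r = pearson_r(test_1, test_2)
print(f"test-retest r = {r:.2f}")
```

Note that r only checks whether scores move together: if every student scored exactly five points higher on the second occasion, r would still be 1, so a teacher might also compare the two sets of scores directly.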

VALIDITY
Validity is the extent to which inferences made from assessment results are appropriate, meaningful, and useful in terms of the purpose of the assessment (Gronlund, 1998: 226).
For example:
- A valid test of reading ability actually measures reading ability.
- A valid test of writing ability actually measures writing ability, not grammar.

CONTENT-RELATED EVIDENCE
Content-related validity depends on how well the content of the test matches its purpose, and on whether the way the test is administered requires students to actually perform the behavior being measured.
For example: a valid speaking test should be a direct test that gives students the chance to demonstrate their speaking ability, not a paper-and-pencil test.

CRITERION-RELATED EVIDENCE
Criterion-related evidence usually falls into one of two categories:
- Concurrent validity: a test has concurrent validity if its results are supported by other concurrent performance beyond the assessment itself. For example, a high score on the final exam of a foreign-language course will be substantiated by actual proficiency in the language.
- Predictive validity: the predictive validity of an assessment becomes important in the case of placement tests, admissions assessment batteries, etc. In such cases, the assessment criterion is not to measure concurrent ability but to assess (and predict) a test-taker’s likelihood of future success.

CONSTRUCT-RELATED EVIDENCE
A construct is any theory, hypothesis, or model that attempts to explain observed phenomena in our universe of perceptions. For example, linguistic constructs include “proficiency” and “communicative competence”, while psychological constructs include “self-esteem” and “motivation”.

CONSEQUENTIAL VALIDITY
Consequential validity encompasses all the consequences of a test, including its accuracy in measuring intended criteria, its impact on the preparation of test-takers, its effect on the learner, and the (intended and unintended) social consequences of a test’s interpretation and use. McNamara (2000: 54) cautions against test results that may reflect socioeconomic conditions, such as opportunities for coaching that are “differentially available to the students being assessed (for example, because only some families can afford coaching)”.