Learning

Chapter 8

1

Learning

How Do We Learn?

Classical Conditioning

Pavlov’s Experiments

Extending Pavlov’s

Understanding

Pavlov’s Legacy

2

Learning

Operant Conditioning

Skinner’s Experiments

Extending Skinner’s

Understanding

Skinner’s Legacy

Contrasting Classical & Operant

Conditioning

3

Learning

Learning by Observation

Bandura’s Experiments

Applications of Observational

Learning

4

Definition

Learning is a relatively permanent change in an

organism’s behavior due to experience.

Learning is more flexible than genetically programmed

behaviors, such as those of Chinook salmon.

5

How Do We Learn?

Associative Learning-We learn by association. Our

minds naturally connect events that occur in sequence.

2000 years ago, Aristotle suggested this law of

association. Then 200 years ago Locke and Hume

reiterated this law.

6

Stimulus-Stimulus Learning

Learning to associate one stimulus

with another.

7


Response-Consequence Learning

Learning to associate a response

with a consequence.

9


Behaviorism

• Behaviorism- the view that psychology should be an objective science that studies behavior without reference to mental processes. Most researchers today agree that psychology should be an objective science but disagree that it should ignore mental processes.

11

Watson Quote

• Give me a dozen healthy infants, well-formed, and

my own specified world to bring them up in and

I’ll guarantee to take any one at random and train

him to become any type of specialist I might

select—doctor, lawyer, artist, merchant-chief and,

yes, even beggar-man and thief, regardless of his

talents, penchants, tendencies, abilities, vocations,

and race of his ancestors. (1930)

12

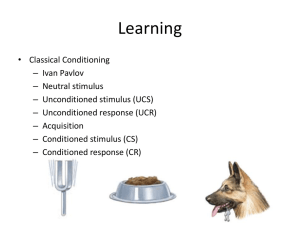

Classical Conditioning

Sovfoto

Ideas of classical conditioning originate from old

philosophical theories. However, it was the

Russian physiologist Ivan Pavlov who elucidated

classical conditioning. His work provided a basis

for later behaviorists like John Watson and B. F.

Skinner.

Ivan Pavlov (1849-1936)

13

Pavlov’s Experiments

Before conditioning, food (Unconditioned Stimulus, US) produces salivation (Unconditioned Response, UR). However, the tone (neutral stimulus) does not. Thus, US: food; UR: salivation.

14

Pavlov’s Experiments

During conditioning, the neutral stimulus (tone)

and the US (food) are paired, resulting in

salivation (UR). After conditioning, the neutral

stimulus (now Conditioned Stimulus, CS) elicits

salivation (now Conditioned Response, CR). Thus, CS: tone; CR: salivation.

15

Acquisition

Acquisition is the initial stage in classical

conditioning in which an association between a

neutral stimulus and an unconditioned

stimulus takes place.

1. In most cases, for conditioning to occur, the

neutral stimulus needs to come before the

unconditioned stimulus.

2. The time in between the two stimuli should

be about half a second.

16

Acquisition

The CS needs to come half a second before the

US for acquisition to occur.

17

Extinction

When the US (food) does not follow the CS (tone), the CR (salivation) begins to decrease and is eventually extinguished.
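
The rise of the CR during acquisition and its decline during extinction can be sketched numerically. The sketch below assumes a simple error-correction rule in the spirit of the Rescorla-Wagner model, which is brought in here only for illustration and is not presented in these slides. The function names, the 0-to-1 strength scale, and the learning rate of 0.3 are all hypothetical choices.

```python
def update(strength, us_present, rate=0.3):
    """Move associative strength toward 1 when the US follows the CS, toward 0 when it does not."""
    target = 1.0 if us_present else 0.0
    return strength + rate * (target - strength)


def run_trials(strength, us_present, n_trials, rate=0.3):
    """Apply the update rule for n_trials and return the strength after each trial."""
    history = []
    for _ in range(n_trials):
        strength = update(strength, us_present, rate)
        history.append(strength)
    return history


# Acquisition: tone (CS) is paired with food (US) on every trial.
acquisition = run_trials(0.0, us_present=True, n_trials=10)

# Extinction: tone is presented alone, starting from the learned strength.
extinction = run_trials(acquisition[-1], us_present=False, n_trials=10)
```

Under these assumptions the strength climbs quickly at first and then levels off during acquisition, and falls back toward zero during extinction. The sketch does not capture spontaneous recovery, which would need an additional mechanism.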

18

Spontaneous Recovery

After a rest period, an extinguished CR (salivation)

spontaneously recovers, but if the CS (tone) persists

alone, the CR becomes extinct again.

19

Stimulus Generalization

Tendency to respond to

stimuli similar to the CS is

called generalization.

Pavlov conditioned the dog’s salivation (CR) by using miniature vibrators (CS) on the thigh. When he subsequently stimulated other parts of the dog’s body, the dog still salivated, but less so the farther the spot was from the thigh.

20

Stimulus Discrimination

Discrimination is the learned ability to distinguish

between a conditioned stimulus and other stimuli

that do not signal an unconditioned stimulus.

21

Extending Pavlov’s Understanding

Pavlov and Watson considered consciousness,

or mind, unfit for the scientific study of

psychology. However, they underestimated

the importance of cognitive processes and

biological constraints.

22

Cognitive Processes

Early behaviorists believed that learned

behaviors of various animals could be reduced

to mindless mechanisms.

However, later behaviorists suggested that

animals learn the predictability of a stimulus,

meaning they learn expectancy or awareness of a

stimulus (Rescorla, 1988).

23

Biological Predispositions

Pavlov and Watson believed that laws of

learning were similar for all animals.

Therefore, a pigeon and a person do not differ

in their learning.

However, behaviorists later suggested that

learning is constrained by an animal’s biology.

24

Biological Predispositions

Courtesy of John Garcia

Garcia showed that the interval between the CS and the US may be long (hours) and still result in conditioning. A biologically adaptive CS (taste) led to conditioning, whereas other stimuli (light or sound) did not.

John Garcia

25

Biological Predisposition related

to Classical Conditioning

Even humans can develop classically conditioned nausea.

26

Pavlov’s Legacy

Pavlov’s greatest contribution

to psychology is isolating

elementary behaviors from

more complex ones through

objective scientific

procedures.

Ivan Pavlov

(1849-1936)

27

Applications of Classical

Conditioning

Brown Brothers

Watson used classical

conditioning procedures to

develop advertising

campaigns for a number of

organizations, including

Maxwell House, making the

“coffee break” an American

custom.

John B. Watson

28

Applications of Classical

Conditioning

1. Alcoholics may be conditioned (aversively)

by reversing their positive-associations with

alcohol.

2. Through classical conditioning, a drug (plus

its taste) that affects the immune response

may cause the taste of the drug to invoke the

immune response.

29

Respondent Behavior- Operant &

Classical Conditioning

1. Classical conditioning

forms associations

between stimuli (CS

and US). Operant

conditioning, on the

other hand, forms an

association between

behaviors and the

resulting events.

30

Operant Behavior- Operant &

Classical Conditioning

2. Classical conditioning involves respondent

behavior that occurs as an automatic

response to a certain stimulus. Operant

conditioning involves operant behavior, a

behavior that operates on the environment,

producing rewarding or punishing stimuli.

31

Skinner’s Experiments

Skinner’s experiments extend Thorndike’s

thinking, especially his law of effect. This law

states that rewarded behavior is likely to occur

again.

Yale University Library

32

Using Thorndike's law of effect as a starting

point, Skinner developed the Operant chamber,

or the Skinner box, to study operant

conditioning.

Walter Dawn/ Photo Researchers, Inc.

33

From The Essentials of Conditioning and Learning, 3rd

Edition by Michael P. Domjan, 2005. Used with permission

by Thomson Learning, Wadsworth Division

Operant Chamber


The operant chamber,

or Skinner box, comes

with a bar or key that

an animal manipulates

to obtain a reinforcer

like food or water. The

bar or key is connected

to devices that record

the animal’s response.

34

Shaping

Shaping is the operant conditioning procedure

in which reinforcers guide behavior towards the

desired target behavior through successive

approximations.

Fred Bavendam/ Peter Arnold, Inc.

Khamis Ramadhan/ Panapress/ Getty Images

A rat shaped to sniff mines. A manatee shaped to discriminate

objects of different shapes, colors and sizes.

35

Types of Reinforcers

A reinforcer is any event that strengthens the behavior it follows. A heat lamp positively reinforces a meerkat’s behavior in the cold.

Reuters/ Corbis

36

Primary & Secondary Reinforcers

1. Primary Reinforcer: An innately reinforcing

stimulus like food or drink.

2. Conditioned Reinforcer: A learned

reinforcer that gets its reinforcing power

through association with the primary

reinforcer.

37

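| POSITIVE REINFORCERS: PRIMARY | POSITIVE REINFORCERS: SECONDARY | NEGATIVE REINFORCERS: PRIMARY | NEGATIVE REINFORCERS: SECONDARY |
|---|---|---|---|
| Food | Money | Electric shock | Rejection |
| Water | Grades | Intense heat | Failure |
| Sex | Status | Pain of any sort | Criticism |
| Warmth | Praise | Suffocation | Avoidance |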

Types of Reinforcers

• Positive Reinforcers – A reinforcer that when

presented increases the frequency of an operant.

• Ex. A hungry rat presses a bar in its cage and

receives food. The food is a positive condition for

the hungry rat. The rat presses the bar again, and

again receives food. The rat's behavior of pressing

the bar is strengthened by the consequence of

receiving food.

Types of Reinforcement

• Negative Reinforcers – A reinforcer that when

removed increases the frequency of an operant.

• Ex. A rat is placed in a cage and immediately

receives a mild electrical shock on its feet. The

shock is a negative condition for the rat. The rat

presses a bar and the shock stops. The rat receives

another shock, presses the bar again, and again the

shock stops. The rat's behavior of pressing the bar

is strengthened by the consequence of stopping the

shock.
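
The two definitions above reduce to a small decision rule: was a stimulus presented or removed, and did the behavior become more or less frequent? The function below is a minimal sketch of that rule. Its name, its string arguments, and the "not reinforcement" label are hypothetical choices made for illustration.

```python
def classify_consequence(stimulus_change, behavior_change):
    """Classify a consequence using the positive/negative reinforcer definitions.

    stimulus_change: "presented" or "removed"
    behavior_change: "increases" or "decreases"
    """
    if stimulus_change not in ("presented", "removed"):
        raise ValueError("stimulus_change must be 'presented' or 'removed'")
    if behavior_change not in ("increases", "decreases"):
        raise ValueError("behavior_change must be 'increases' or 'decreases'")

    # Reinforcement always strengthens the behavior it follows.
    if behavior_change == "increases":
        if stimulus_change == "presented":
            return "positive reinforcement"
        return "negative reinforcement"

    # A consequence that weakens behavior is not reinforcement of either kind.
    return "not reinforcement"


# The hungry rat receives food after pressing the bar and presses more often.
food_example = classify_consequence("presented", "increases")

# The shock stops after the rat presses the bar and the rat presses more often.
shock_example = classify_consequence("removed", "increases")
```

Note that "negative" refers to removing a stimulus, not to the behavior becoming less frequent.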

Positive or Negative Reinforcement?

1. Police pulling drivers over and giving prizes for buckling up.
2. A child snaps her fingers until her teacher calls on her.
3. A hospital patient is allowed extra visiting time after eating a complete meal.
4. Receiving a city utility discount for participating in a recycling program.
5. Grounding a teenager until his/her homework is finished.
6. A parent nagging a child to clean up her room.
7. A rat presses a lever to terminate a shock or a loud tone.
8. A professor gives extra credit to students with perfect attendance.

1. PR
2. NR
3. PR
4. PR
5. NR
6. NR
7. NR
8. PR

Potential Negative Effects of Punishment

Antecedent → Undesirable employee behavior → Punishment by manager → Short-term decrease in frequency of undesirable employee behavior (which tends to reinforce punishment by manager)

But leads to long-term:

• Recurrence of undesirable employee behavior
• Undesirable emotional reaction
• Aggressive, disruptive behavior
• Apathetic, noncreative performance
• Fear of manager
• High turnover and absenteeism

Schedules of Reinforcement

• Ratio Version –

having to do with

instances of the

behavior.

• Ex. – Reinforce or

reward the behavior

after a set number or x

many times that an

action or behavior is

demonstrated.

• Interval Version –

having to do with the

passage of time.

• Ex. – Reinforce the

student after a set

number or x period of

time that the behavior

is displayed.

4 Basic Schedules of Reinforcement

• Fixed-interval

schedule

• Variable-interval

schedule

• Fixed-ratio

schedule

• Variable-ratio

schedule

Fixed-Interval Schedule

• Fixed-interval schedule – A schedule in which a

fixed amount of time must elapse between the

previous and subsequent times that reinforcement

will occur.

• No response during the interval is reinforced.

• The first response following the interval is

reinforced.

• Produces an overall low rate of responding

• Ex. I get one pellet of food every 5 minutes when

I press the lever

Fixed Interval Reinforcement

Variable-Interval Schedule

• Variable-interval Schedule – A schedule

in which a variable amount of time must

elapse between the previous and subsequent

times that reinforcement is available.

• Produces an overall low consistent rate of

responding.

• Ex. – I get a pellet of food on average every

5 minutes when I press the bar.

Variable Interval Reinforcement

Fixed-Ratio Schedule

• Fixed-ratio Schedule – A schedule in which

reinforcement is provided after a fixed number of

correct responses.

• These schedules usually produce rapid rates of

responding with short post-reinforcement pauses

• The length of the pause is directly proportional to

the number of responses required

• Ex. – For every 5 bar presses, I get one pellet of

food

An Example of Fixed Ratio

Reinforcement

• Every fourth instance of a smile is reinforced

Fixed Ratio Reinforcement

Variable-Ratio Schedule

• Variable-ratio Schedule – A schedule in

which reinforcement is provided after a

variable number of correct responses.

• Produces an overall high, consistent rate of responding.

• Ex. – On average, I press the bar 5 times for

one pellet of food.

An Example of Variable Ratio

Reinforcement

• Random instances of the behavior are

reinforced

Variable Ratio Reinforcement
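
The four schedules differ only in the rule that decides whether a given response is reinforced. The sketch below expresses each rule as a small class. The class names, the `respond(t)` method, and the way variable requirements are drawn (uniformly around the stated average) are assumptions made for illustration, not part of the slides.

```python
import random


class FixedRatio:
    """Reinforce every n-th response."""

    def __init__(self, n):
        self.n = n
        self.count = 0

    def respond(self, t):
        self.count += 1
        if self.count >= self.n:
            self.count = 0
            return True
        return False


class VariableRatio:
    """Reinforce after a varying number of responses that averages mean_n."""

    def __init__(self, mean_n, rng):
        self.mean_n = mean_n
        self.rng = rng
        self.count = 0
        self.required = self._draw()

    def _draw(self):
        # Assumption: uniform over 1..(2 * mean_n - 1), so the average is mean_n.
        return self.rng.randint(1, 2 * self.mean_n - 1)

    def respond(self, t):
        self.count += 1
        if self.count >= self.required:
            self.count = 0
            self.required = self._draw()
            return True
        return False


class FixedInterval:
    """Reinforce the first response made after a fixed time has elapsed."""

    def __init__(self, interval):
        self.interval = interval
        self.last = 0

    def respond(self, t):
        if t - self.last >= self.interval:
            self.last = t
            return True
        return False


class VariableInterval:
    """Reinforce the first response after a varying time that averages mean_interval."""

    def __init__(self, mean_interval, rng):
        self.mean_interval = mean_interval
        self.rng = rng
        self.last = 0
        self.wait = self._draw()

    def _draw(self):
        # Assumption: uniform over 0..(2 * mean_interval), so the average is mean_interval.
        return self.rng.uniform(0, 2 * self.mean_interval)

    def respond(self, t):
        if t - self.last >= self.wait:
            self.last = t
            self.wait = self._draw()
            return True
        return False


# One bar press per minute for 12 minutes on two of the schedules.
fr = FixedRatio(5)
fr_hits = [t for t in range(1, 13) if fr.respond(t)]
fi = FixedInterval(5)
fi_hits = [t for t in range(1, 13) if fi.respond(t)]
```

On the ratio schedules only the count of responses matters, so responding faster earns more reinforcers. On the interval schedules extra responses during the waiting period earn nothing.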

| TYPE | MEANING | OUTCOME |
|---|---|---|
| Fixed Ratio | Reinforcement depends on a definite number of responses | Activity slows after reinforcement and then picks up |
| Variable Ratio | Number of responses needed for reinforcement varies | Greatest activity of all schedules |
| Fixed Interval | Reinforcement depends on a fixed time | Activity increases as deadline nears |
| Variable Interval | Time between reinforcement varies | Steady activity results |

Comparisons of Schedules of Reinforcement

| SCHEDULE | FORM OF REWARD | INFLUENCE ON PERFORMANCE | EFFECTS ON BEHAVIOR |
|---|---|---|---|
| Fixed interval | Reward on fixed time basis | Leads to average and irregular performance | Fast extinction of behavior |
| Fixed ratio | Reward tied to specific number of responses | Leads quickly to very high and stable performance | Moderately fast extinction of behavior |
| Variable interval | Reward given after varying periods of time | Leads to moderately high and stable performance | Slow extinction of behavior |
| Variable ratio | Reward given for some behaviors | Leads to very high performance | Very slow extinction of behavior |

FI, VI, FR, or VR?

1. When I bake cookies, I can only put one set in at a time, so after 10 minutes my first set of cookies is done. After another ten minutes, my second set of cookies is done. I get to eat a cookie after each set is done baking.
2. After every 10 math problems that I complete, I allow myself a 5 minute break.
3. I look over my notes every night because I never know how much time will go by before my next pop quiz.
4. When hunting season comes around, sometimes I’ll spend all day sitting in the woods waiting to get a shot at a big buck. It’s worth it though when I get a nice 10 point.
5. Today in Psychology class we were talking about Schedules of Reinforcement and everyone was eagerly raising their hands and participating. Miranda raised her hand a couple of times and was eventually called on.

1. FI
2. FR
3. VI
4. VI
5. VR

FI, VI, FR, or VR?

6. Madison spanks her son if she has to ask him three times to

clean up his room.

7. Emily has a spelling test every Friday. She usually does well

and gets a star sticker.

8. Steve’s a big gambling man. He plays the slot machines all

day hoping for a big win.

9. Snakes get hungry at certain times of the day. They might

watch any number of prey go by before they decide to

strike.

10. Mr. Vora receives a salary paycheck every 2 weeks.


11. Christina works at a tanning salon. For every 2 bottles of

lotion she sells, she gets 1 dollar in commission.

12. Mike is trying to study for his upcoming Psychology quiz.

He reads five pages, then takes a break. He resumes reading

and takes another break after he has completed 5 more

pages.

6. FR

7. FI

8. VR

9. VI

10. FI

11. FR

12. FR

FI, VI, FR, or VR?

13. Megan is fundraising to try to raise money so she can go on

the annual band trip. She goes door to door in her

neighborhood trying to sell popcorn tins. She eventually sells

some.

14. Kylie is a business girl who works in the big city. Her boss is

busy, so he only checks her work periodically.

15. Mark is a lawyer who owns his own practice. His customers

make payments at irregular times.

16. Jessica is a dental assistant and gets a raise every year at the

same time and never in between.

17. Andrew works at a GM factory and is in charge of attaching 3

parts. After he gets his parts attached, he gets some free time

before the next car moves down the line.

18. Brittany is a telemarketer trying to sell life insurance. After so

many calls, someone will eventually buy.

13. VR

14. VI

15. VI

16. FI

17. FR

18. VR

Applications of Operant

Conditioning

• Programmed Learning

– A method of

learning in which

complex tasks are

broken down into

simple steps, each of

which is reinforced.

Errors are not

reinforced.

• Ex. – Hooked on

Phonics

Applications of Operant

Conditioning

• Socialization – Guidance of

people into socially desirable

behavior by means of verbal

messages, the systematic use

of rewards and punishments,

and other methods of

teaching.

• Ex. – Children play with other

children who are generous

and non-aggressive and avoid

those who are not.

Applications of Operant Conditioning

• Behavior Modification

Praise this

Ignore this

Applications of Operant

Conditioning

• Token Economy – An

environmental setting

that fosters desired

behavior by

reinforcing it with

tokens (secondary

reinforcers) that can

be exchanged for other

reinforcers.

• Ex. – Book It!

Other Types of Learning

• Insight Learning – In Gestalt psychology,

a sudden perception of relationships among

elements of the “perceptual field”,

permitting the solution of a problem.

• Latent Learning – Learning that is hidden

or concealed.

• Observational Learning – Acquiring

operants by observing others engage in them.

Insight Learning

• Wolfgang Kohler –

German Gestalt

psychologist

• Experiments with

Chimpanzees

• Sultan learned to use a

stick to rake in bananas

placed outside his cage.

• When the bananas were

placed outside of Sultan’s

reach, he fitted two poles

together to make a single

pole long enough to reach

the food

Insight

Learning

“Aha” Learning


• Cognitive Map – A mental

representation or “picture” of

the elements in a learning

situation, such as a maze.

– Ex. – if someone pukes in the

hall that you usually take to

your next class you will still be

able to find your way because

of your mental representation

of this school.
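
One way to picture a cognitive map is as a graph of places and the connections between them, which allows a new route to be worked out when the usual one is blocked. The sketch below uses a breadth-first search for this. The school layout, place names, and function name are hypothetical, and the search is only an illustration, not a claim about how people or rats actually compute routes.

```python
from collections import deque

# Hypothetical school layout: each place lists the places directly reachable from it.
SCHOOL_MAP = {
    "classroom_a": ["main_hall", "side_hall"],
    "main_hall": ["classroom_a", "classroom_b"],
    "side_hall": ["classroom_a", "stairwell"],
    "stairwell": ["side_hall", "classroom_b"],
    "classroom_b": ["main_hall", "stairwell"],
}


def find_route(layout, start, goal, blocked=()):
    """Breadth-first search for the shortest route that avoids blocked places."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for neighbor in layout[path[-1]]:
            if neighbor not in visited and neighbor not in blocked:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return None  # No route exists.


# The usual route goes through the main hall.
usual = find_route(SCHOOL_MAP, "classroom_a", "classroom_b")

# With the main hall blocked, the same map still yields a detour.
detour = find_route(SCHOOL_MAP, "classroom_a", "classroom_b", blocked={"main_hall"})
```

The detour is available only because the whole layout is stored, not just the one habitual path.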

Insight Learning

Latent Learning

• E.C. Tolman – experiment with rats.

• Rats learn about their environments

in the absence of reinforcement.

• Some rats went through maze for

food goals, while others were given

no reinforcement for several days.

• After 10 days, rewards were introduced for the rats that had previously been given none.

• Within 2 or 3 rewarded trials, those rats reached the food box as quickly as the rats that had been getting reinforcement for over a week.

• Rats learn about mazes in which

they roam even if they are

unrewarded for doing so.

Observational Learning

• Albert Bandura

• Bobo doll experiment

• Assistant placed in room with doll

• Beat doll with hammer and hit doll

• Kindergarten children watched this display of aggression in a separate room.

• When placed in the room, they too

were extremely aggressive with the

doll

• Happened without ever being rewarded

for the behavior.

• http://www.npr.org/templates/story/story.php?storyId=1187559

•Modeling

Monkey see, monkey do!

•Disinhibition

Get away with or are rewarded for violence

They always “get the girl/guy/money/car,” etc.

•Increased arousal

Works the audience up

Watch the fans at a sporting event

Watch your friends watch the WWF

•Habituation

We become used to - desensitization

Immediate & Delayed Reinforcers

1. Immediate Reinforcer: A reinforcer that

occurs instantly after a behavior. A rat gets

a food pellet for a bar press.

2. Delayed Reinforcer: A reinforcer that is

delayed in time for a certain behavior. A

paycheck that comes at the end of a week.

We may be inclined to choose small immediate reinforcers (watching TV) over large delayed reinforcers (getting an A in a course), which require consistent study.

76

Reinforcement Schedules

1. Continuous Reinforcement: Reinforces the

desired response each time it occurs.

2. Partial Reinforcement: Reinforces a

response only part of the time. Though this

results in slower acquisition in the

beginning, it shows greater resistance to

extinction later on.

77

Ratio Schedules

1. Fixed-ratio schedule: Reinforces a response

only after a specified number of responses.

e.g., piecework pay.

2. Variable-ratio schedule: Reinforces a

response after an unpredictable number of

responses. This is hard to extinguish because

of the unpredictability. (e.g., behaviors like

gambling, fishing.)

78

Interval Schedules

1. Fixed-interval schedule: Reinforces a

response only after a specified time has

elapsed. (e.g., preparing for an exam

only when the exam draws close.)

2. Variable-interval schedule: Reinforces a

response at unpredictable time

intervals, which produces slow, steady

responses. (e.g., pop quiz.)

79

Schedules of Reinforcement

80

Punishment

An aversive event that decreases the behavior it

follows.

81

Punishment

Although there may be some justification for occasional punishment (Larzelere & Baumrind, 2002), it usually leads to negative effects.

1. Results in unwanted fears.
2. Conveys no information to the organism.
3. Justifies pain to others.
4. Causes unwanted behaviors to reappear in its absence.
5. Causes aggression towards the agent.
6. Causes one unwanted behavior to appear in place of another.

82

Extending Skinner’s Understanding

Skinner acknowledged the existence of inner thought processes and the biological underpinnings of behavior, but many psychologists criticize him for discounting their importance.

83

Cognition & Operant Conditioning

Evidence of cognitive processes during operant

learning comes from rats during a maze

exploration in which they navigate the maze

without an obvious reward. Rats seem to

develop cognitive maps, or mental

representations, of the layout of the maze

(environment).

84

Latent Learning

Such cognitive maps are based on latent

learning, which becomes apparent when an

incentive is given (Tolman & Honzik, 1930).

85

Motivation

Intrinsic Motivation:

The desire to perform a

behavior for its own

sake.

Extrinsic Motivation:

The desire to perform a

behavior due to

promised rewards or

threats of punishments.

86

Biological Predisposition

Photo: Bob Bailey

Biological constraints

predispose organisms to

learn associations that

are naturally adaptive.

Breland and Breland

(1961) showed that

animals drift towards

their biologically

predisposed instinctive

behaviors.

Marian Breland Bailey

87

Skinner’s Legacy

Skinner argued that behaviors were shaped by

external influences instead of inner thoughts and

feelings. Critics argued that Skinner

dehumanized people by neglecting their free will.

Falk/ Photo Researchers, Inc.

88

Applications of Operant

Conditioning

Skinner introduced the concept of teaching machines that shape learning in small steps and provide reinforcement for correct responses.

LWA-JDL/ Corbis

In School

89

Applications of Operant

Conditioning

Reinforcement principles can enhance athletic

performance.

In Sports

90

Applications of Operant

Conditioning

Reinforcers affect productivity. Many companies

now allow employees to share profits and

participate in company ownership.

At work

91

Applications of Operant

Conditioning

In children, reinforcing good behavior increases its occurrence. Ignoring unwanted behavior decreases its occurrence.

92

Operant vs. Classical Conditioning

93

Learning by Observation

© Herb Terrace

Higher animals,

especially humans,

learn through observing

and imitating others.

©Herb Terrace

The monkey on the

right imitates the

monkey on the left in

touching the pictures in

a certain order to obtain

a reward.

94

Reprinted with permission from the American

Association for the Advancement of Science,

Subiaul et al., Science 305: 407-410 (2004)

© 2004 AAAS.

Mirror Neurons

Neuroscientists discovered mirror neurons in

the brains of animals and humans that are active

during observational learning.

95

Learning by observation

begins early in life. This

14-month-old child

imitates the adult on TV

in pulling a toy apart.

Meltzoff, A.N. (1988). Imitation of televised models by infants. Child Development, 59, 1221-1229. Photos courtesy of A.N. Meltzoff and M. Hanuk.

Imitation Onset

96

Bandura's Bobo doll

study (1961) indicated

that individuals

(children) learn

through imitating

others who receive

rewards and

punishments.

Courtesy of Albert Bandura, Stanford University

Bandura's Experiments

97

Applications of Observational

Learning- Modeling

Unfortunately,

Bandura’s studies

show that antisocial

models (family,

neighborhood or TV)

may have antisocial

effects. We must model acceptable social behavior.

98

Positive Observational Learning

Bob Daemmrich/ The Image Works

Fortunately, prosocial (positive, helpful) models

may have prosocial effects.

99

Gentile et al. (2004)

show that children in

elementary school

who are exposed to

violent television,

videos, and video

games express

increased aggression.

Ron Chapple/ Taxi/ Getty Images

Television and Observational

Learning

100

Modeling Violence

Children modeling after pro wrestlers

Glassman/ The Image Works

Bob Daemmrich/ The Image Works

Research shows that viewing media violence

leads to an increased expression of aggression.

101