advertisement

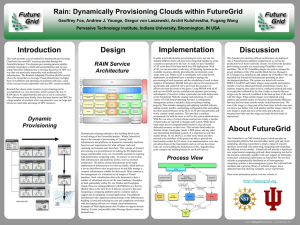

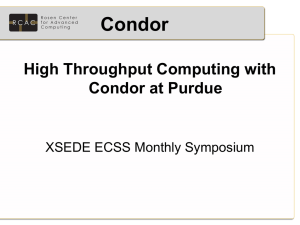

Experiences Using Cloud Computing for A Scientific Workflow Application

Jens Vöckler, Gideon Juve, Ewa Deelman, Mats Rynge, G. Bruce Berriman
ScienceCloud’11, 2011-06-08
Funded by NSF grant OC 0910812

This Talk
- Experience in cloud computing
- FutureGrid: Hardware, Middlewares
- Pegasus-WMS
- Periodograms
- Experiments
  - Periodogram I
  - Comparison of clouds using periodograms
  - Periodogram II

What is FutureGrid
- Something different for everyone
- Test bed for cloud computing (this talk)
- 6 centers across the nation
- Nimbus, Eucalyptus, Moab "bare metal"
- Start here: http://www.futuregrid.org/

What Comprises FutureGrid

| Resource | Type | Cores | Host CPU + RAM |
|---|---|---:|---|
| IU india | iDataPlex | 1,416 | 8 x 2.9 GHz Xeon @ 24 GB |
| IU xray | Cray XT5m | 672 | 2 x 2.6 GHz 285 (E6) @ 8 GB |
| UofC hotel | iDataPlex | 672 | 8 x 2.9 GHz Xeon @ 24 GB |
| UCSD sierra | iDataPlex | 672 | 8 x 2.5 GHz Xeon @ 32 GB |
| UFl foxtrot | iDataPlex | 256 | 8 x 2.3 GHz Xeon @ 24 GB |
| TACC alamo | PowerEdge | 1,016 | 8 x 2.6 GHz Xeon @ 12 GB |
| TOTALS | | 4,704 | |

Proposed: a 16 x (192 GB + 12 TB / node) cluster and an 8-node GPU-enhanced cluster.

Middlewares in FG (available resources as of 2011-06-06)

| Resource | Type | Cores | Moab | Eucalyptus | Nimbus |
|---|---|---:|---:|---:|---:|
| IU india | iDataPlex | 1,416 | 832 (59%) | 400 (28%) | - |
| IU xray | Cray XT5m | 672 | 672 (100%) | - | - |
| UofC hotel | iDataPlex | 672 | 336 (50%) | - | 336 (50%) |
| UCSD sierra | iDataPlex | 672 | 312 (46%) | 120 (18%) | 160 (24%) |
| UFl foxtrot | iDataPlex | 256 | - | - | 248 (97%) |
| TACC alamo | PowerEdge | 1,016 | 896 (88%) | - | 120 (12%) |
| TOTALS | | 4,704 | 3,048 (65%) | 520 (11%) | 744 (18%) |

Pegasus WMS I: Automating Computational Pipelines
- Funded by NSF/OCI; a collaboration with the Condor group at UW Madison
- Automates data management
- Captures provenance information
- Used by a number of domains, across a variety of applications
- Scalability
  - Handles large data (kB…TB), and
  - Many computations (1…10⁶ tasks)

Pegasus WMS II
- Reliability: retry computations from the point of failure
- Construction of complex workflows, based on computational blocks
- Portable, reusable workflow description
- Can run purely locally, or distributed among institutions: laptop, campus cluster, grid, cloud

How Pegasus Uses FutureGrid
- Focus on Eucalyptus and Nimbus; no Moab "bare metal" at this point
- During experiments in Nov 2010:
  - 544 Nimbus cores
  - 744 Eucalyptus cores
  - 1,288 total potential cores across 4 clusters in 5 clouds
- Actually used 300 physical cores (max)

Pegasus FG Interaction
[Figure: interaction between Pegasus and the FutureGrid clouds]

Periodograms
- Find extra-solar planets by
  - wobbles in the radial velocity of the star, or
  - dips in the star's intensity
[Figure: star wobble with red/blue shift, and a planet's light curve (brightness vs. time)]

Kepler Workflow
- 210k light curves released in July 2010
- Apply 3 algorithms to each curve
- Run the entire data-set 3 times, with 3 different parameter sets
- This talk's experiments:
  - 1 algorithm, 1 parameter set, 1 run
  - Either partial or full data-set

Pegasus Periodograms
- 1st experiment is a "ramp-up"
  - Try to see where things trip
  - 16k light curves, 33k computations (every light curve twice)
  - Already found places needing adjustments
- 2nd experiment also 16k light curves
  - Across 3 comparable infrastructures
- 3rd experiment runs the full set
  - Testing hypothesized tunings

Periodogram Workflow
[Figure: periodogram workflow]

Excerpt: Jobs over Time
[Figure: excerpt of jobs over time]

Hosts, Tasks, and Duration (I)
[Chart: requested, available, and actual hosts; jobs; tasks; and cumulative duration (h) for Eucalyptus india, Eucalyptus sierra, Nimbus sierra, Nimbus foxtrot, and Nimbus hotel]

Resource- and Job States (I)
[Figure: resource and job states]

Cloud Comparison
- Compare academic and commercial clouds:
  - NERSC's Magellan cloud (Eucalyptus)
  - Amazon's cloud (EC2), and
  - FutureGrid's sierra cloud (Eucalyptus)
- Constrained node and core selection, because AWS costs $$:
  - 6 nodes, 8 cores each node
  - 1 Condor slot / physical CPU

Cloud Comparison II

| Site | CPU | RAM (SW) | Walltime | Cum. Dur. | Speed-Up |
|---|---|---|---:|---:|---:|
| Magellan | 8 x 2.6 GHz | 19 (0) GB | 5.2 h | 226.6 h | 43.6 |
| Amazon | 8 x 2.3 GHz | 7 (0) GB | 7.2 h | 295.8 h | 41.1 |
| FutureGrid | 8 x 2.5 GHz | 29 (½) GB | 5.7 h | 248.0 h | 43.5 |

- Speed-up = cumulative duration / walltime
- Given 48 physical cores, a speed-up ≈ 43 is considered pretty good
- AWS cost ≈ $31
  - 7.2 h x 6 x c1.large ≈ $29
  - 1.8 GB in + 9.9 GB out ≈ $2

Scaling Up I
- Workflow optimizations
  - Pegasus clustering ✔
  - Compress file transfers
- Submit-host Unix settings
  - Increase open file-descriptors limit
  - Increase firewall's open port range
- Submit-host Condor DAGMan settings
  - Idle job limit ✔

Scaling Up II
- Submit-host Condor settings
  - Socket cache size increase
  - File descriptors and ports per daemon
  - Using condor_shared_port daemon
- Remote VM Condor settings
  - Use CCB for private networks
  - Tune Condor job slots
  - TCP for collector call-backs

Hosts, Tasks, and Duration (II)
[Chart: requested and actual hosts; jobs; tasks; and cumulative duration (h) for Eucalyptus india, Eucalyptus sierra, Nimbus sierra, Nimbus foxtrot, and Nimbus hotel]

Resource- and Job States (II)
[Figure: resource and job states]

Loose Ends
- Saturate requested resources
  - Clustering
  - Better submit host tuning
  - Requires better monitoring ✔
- Better data staging

Acknowledgements
- Funded by NSF grant OC 0910812
- Ewa Deelman, Gideon Juve, Mats Rynge, Bruce Berriman
- FG help desk ;-)
- http://pegasus.isi.edu/
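The totals row of the "Middlewares in FG" slide can be rechecked with a little arithmetic. The sketch below re-adds the per-site core counts as transcribed from that slide (the dictionary layout and function name are illustrative). The Moab and Eucalyptus columns reproduce 3,048 (65%) and 520 (11%). The Nimbus column, however, sums to 864 cores. That matches the 18% share, but not the 744 printed in the totals row.

```python
# Per-site core counts transcribed from the "Middlewares in FG" slide:
# (total cores, Moab, Eucalyptus, Nimbus); 0 stands for "-" (not deployed).
SITES = {
    "IU india": (1416, 832, 400, 0),
    "IU xray": (672, 672, 0, 0),
    "UofC hotel": (672, 336, 0, 336),
    "UCSD sierra": (672, 312, 120, 160),
    "UFl foxtrot": (256, 0, 0, 248),
    "TACC alamo": (1016, 896, 0, 120),
}
MIDDLEWARES = ("Moab", "Eucalyptus", "Nimbus")


def column_totals(sites):
    """Sum each middleware column and express it as a share of all cores."""
    all_cores = sum(row[0] for row in sites.values())
    totals = {
        name: sum(row[i + 1] for row in sites.values())
        for i, name in enumerate(MIDDLEWARES)
    }
    shares = {name: 100.0 * n / all_cores for name, n in totals.items()}
    return all_cores, totals, shares


all_cores, totals, shares = column_totals(SITES)
print(all_cores)
for name in MIDDLEWARES:
    print(f"{name:10s} {totals[name]:5d} cores  {shares[name]:4.1f}%")
```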
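The Periodograms slide says planets are found through periodic wobbles or dips in a star's signal. A periodogram turns that idea into a search for the strongest period. The sketch below uses the classical Lomb-Scargle periodogram because it is a familiar method that handles unevenly sampled data. The slides do not name the three algorithms that the Kepler workflow applies, so this is only an illustration. The input is a synthetic, unevenly sampled sinusoid with a 5-day period (an assumption standing in for a real Kepler light curve), and the function names are hypothetical.

```python
import math
import random


def lomb_scargle(times, values, freqs):
    """Classical (unnormalized) Lomb-Scargle power for unevenly sampled data."""
    n = len(values)
    mean = sum(values) / n
    y = [v - mean for v in values]  # work on the mean-subtracted series
    power = []
    for f in freqs:
        w = 2.0 * math.pi * f
        # Time offset tau makes the sine and cosine terms orthogonal.
        s2 = sum(math.sin(2.0 * w * t) for t in times)
        c2 = sum(math.cos(2.0 * w * t) for t in times)
        tau = math.atan2(s2, c2) / (2.0 * w)
        cos_terms = [math.cos(w * (t - tau)) for t in times]
        sin_terms = [math.sin(w * (t - tau)) for t in times]
        yc = sum(yi * c for yi, c in zip(y, cos_terms))
        ys = sum(yi * s for yi, s in zip(y, sin_terms))
        cc = sum(c * c for c in cos_terms)
        ss = sum(s * s for s in sin_terms)
        power.append(0.5 * (yc * yc / cc + ys * ys / ss))
    return power


def best_period(times, values, freqs):
    """Return the period (1 / frequency) with the highest periodogram power."""
    power = lomb_scargle(times, values, freqs)
    best = max(range(len(freqs)), key=power.__getitem__)
    return 1.0 / freqs[best]


# Synthetic light curve: 300 random sample times over 100 days and a
# small 5-day sinusoidal variation on a constant brightness of 1.0.
rng = random.Random(1)
times = sorted(rng.uniform(0.0, 100.0) for _ in range(300))
values = [1.0 + 0.01 * math.sin(2.0 * math.pi * t / 5.0) for t in times]
freqs = [k / 100.0 for k in range(1, 101)]  # 0.01 ... 1.00 cycles per day
print(best_period(times, values, freqs))
```

A production run would use a finer frequency grid and a significance test. The fixed grid here is only a convenience that keeps the example small.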
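The Speed-Up column on the Cloud Comparison II slide is the cumulative task duration divided by the workflow walltime. Dividing that speed-up by the 48 physical cores gives a parallel efficiency. The sketch below reproduces the slide's speed-ups from its own walltime and duration figures. It also backs out the per-instance-hour price implied by "7.2 h x 6 x c1.large ≈ $29". That price is derived from the slide's rounded numbers and is not a quoted AWS rate. The names are illustrative.

```python
# Walltime and cumulative task duration (hours) from the Cloud Comparison II slide.
RUNS = {
    "Magellan": (5.2, 226.6),
    "Amazon": (7.2, 295.8),
    "FutureGrid": (5.7, 248.0),
}
CORES = 48  # 6 nodes x 8 cores, 1 Condor slot per physical CPU


def speedup(walltime_h: float, cum_dur_h: float) -> float:
    """Speed-up = cumulative task duration / workflow walltime."""
    return cum_dur_h / walltime_h


def efficiency(walltime_h: float, cum_dur_h: float, cores: int = CORES) -> float:
    """Fraction of the ideal speed-up (one per core) that was achieved."""
    return speedup(walltime_h, cum_dur_h) / cores


# Instance-hour price implied by the slide's "7.2 h x 6 x c1.large ~ $29";
# derived from rounded slide figures, not a published AWS price.
IMPLIED_RATE = 29.0 / (7.2 * 6)

for site, (wall, cum) in RUNS.items():
    print(f"{site:10s} speed-up {speedup(wall, cum):5.1f}  "
          f"efficiency {efficiency(wall, cum):.0%}")
print(f"implied price per instance-hour: ${IMPLIED_RATE:.2f}")
```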
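Pegasus clustering is checked off on the Scaling Up I slide, and its effect shows in the jobs-versus-tasks counts on the charts. The idea is to bundle many short, independent tasks into fewer scheduler jobs, so that per-job scheduling overhead is paid less often. The sketch below shows only the basic idea of horizontal clustering with a fixed bundle size. The function name and the bundle size of 32 are illustrative assumptions, not Pegasus's API or the settings used in these experiments. The 33,000 tasks echo the "33k computations" of the first experiment.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def cluster_tasks(tasks: Sequence[T], per_job: int) -> List[List[T]]:
    """Group independent tasks into jobs holding at most `per_job` tasks each."""
    if per_job < 1:
        raise ValueError("per_job must be at least 1")
    return [list(tasks[i:i + per_job]) for i in range(0, len(tasks), per_job)]


# Hypothetical example: 33,000 independent periodogram computations,
# bundled up to 32 per job, become 1,032 scheduler jobs; the last one
# holds the 8 leftover tasks.
tasks = [f"periodogram-{i}" for i in range(33_000)]
jobs = cluster_tasks(tasks, per_job=32)
print(len(jobs), len(jobs[-1]))
```

A fixed bundle size is the simplest choice. Balancing bundles by expected runtime is an alternative when task durations vary widely.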
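Managing many concurrent jobs consumes file descriptors and network connections on the submit host, which is why the Scaling Up slides raise the open-file limit. Administrators normally change this system-wide, through shell or login limits. The sketch below shows only the per-process equivalent using Python's standard `resource` module (Unix only). It raises the soft limit toward a target but never beyond the hard limit, and never lowers it. The function name and the target of 8192 are illustrative assumptions, not values from the experiments.

```python
import resource


def raise_nofile_limit(target: int) -> int:
    """Raise this process's soft RLIMIT_NOFILE toward `target`, capped at the hard limit.

    Never lowers the current soft limit. Returns the soft limit in effect afterwards.
    """
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    if soft == resource.RLIM_INFINITY:
        return soft  # already unlimited; nothing to raise
    new_soft = target if hard == resource.RLIM_INFINITY else min(target, hard)
    if new_soft > soft:
        resource.setrlimit(resource.RLIMIT_NOFILE, (new_soft, hard))
        soft = new_soft
    return soft


print(raise_nofile_limit(8192))
```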