Zookeeper at Facebook


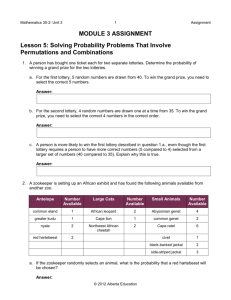

Vishal Kathuria

◦ ZooKeeper use at Facebook
◦ Project Zeus – Goals
◦ Tao design
◦ Tao workload simulator
◦ Early results of ZooKeeper testing
◦ ZooKeeper improvements

HDFS
  ◦ For location of the name node
  ◦ Name node leader election
  ◦ 75K temporary (permanent in future) clients

HBase
  ◦ For mapping of regions to region servers and the location of the ROOT node
  ◦ Region server failure detection and failover
  ◦ After UDBs move to HBase, ~100K permanent clients

Titan
  ◦ Mapping of users to Prometheus web servers within a cell
  ◦ Leader election of Prometheus web servers
  ◦ Future: selection of the HBase geo-cell

Ads
  ◦ Leader election

Scribe
  ◦ Leader election of Scribe aggregators

Future customers
  ◦ TAO sharding
  ◦ MySQL leader election
  ◦ Search

"Make ZooKeeper awesome"
  ◦ ZooKeeper works at Facebook scale
  ◦ ZooKeeper is one of the most reliable services at Facebook

Solve pressing infrastructure problems using ZooKeeper
  ◦ Shard Manager for Tao
  ◦ Generic shard management capability in Tupperware
  ◦ MySQL HA

Project is 5 weeks old
Initial sharing of ideas with the community
  ◦ Ideas not yet vetted or proven through prototypes

Shard Map
  ◦ Based on ranges instead of consistent hashing
  ◦ Stored in ZooKeeper
  ◦ Accessed by clients using Aether
  ◦ Populated by Eos
  ◦ Dynamically updated based on load information

Scale requirements for a single cluster
  ◦ 24,000 web machines: read-only clients
  ◦ 6,000 Tao server machines: read/write clients
  ◦ About 20 clusters site-wide
  ◦ Shard map is 2–3 MB of data

Clients
  ◦ Read the shard map of the local cluster after connecting
  ◦ Put a watch on the shard map
  ◦ Refresh the shard map after the watch fires

Follower servers
  ◦ These servers are clients of the leader servers
  ◦ Also read their own shard map

Leader servers
  ◦ Read their own shard map and those of all of their followers

Shard Manager (Eos)
  ◦ Periodically updates the shard map

3-node ZooKeeper ensemble
  ◦ 8 cores
  ◦ 8 GB RAM

Clients – 20-node cluster
  ◦ Web-class machines
  ◦ 12 GB RAM

Using the ZooKeeper-ensemble-per-cluster model

Assumptions
  ◦ 40K connections
  ◦ Small number of clients joining/leaving at any time
  ◦ Rare updates to the shard map: once every 10 minutes

Result
  ◦ ZooKeeper worked well in this scenario

Cluster powering up
  ◦ 25K clients simultaneously trying to connect
  ◦ Slow response time: it took some clients 560 s to connect and get data

Cluster powering down
  ◦ 25K clients simultaneously disconnect
  ◦ System temporarily unresponsive
    ◦ The disconnect requests filled ZooKeeper's queues
    ◦ The system would not accept any new connections or requests
    ◦ After a short time, the disconnect requests were processed and the system became responsive again

Rolling restart of ZooKeeper nodes
Startup/shutdown of the entire cluster
  ◦ With active clients
  ◦ Without active clients
Result
  ◦ No corruptions or system hangs noticed so far

Client connect/disconnect is a persisted update involving all nodes
The ping and connection-timeout handling is done by the leader for all connections
A single thread handles both connect requests and data requests
ZooKeeper is implemented as a single-threaded pipeline
  ◦ All reads are serialized
  ◦ Low read throughput
  ◦ Uses only 3 cores at full load

Non-persisted sessions with local session tracking
  ◦ Hacked a prototype to test the potential
  ◦ Initial test runs very encouraging
Dedicated connection-creation thread
  ◦ Prototyped; test runs in progress
Multiple threads for deserializing incoming requests
Dedicated parallel pipeline for read-only clients
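
Leader election appears repeatedly in the usage slides: the HDFS name node, Prometheus web servers, Ads, Scribe aggregators, and (in future) MySQL. The slides do not say which election recipe these services use. The standard ZooKeeper recipe works like this. Each candidate creates an ephemeral, sequential znode under a shared path. The lowest sequence number leads. Every other candidate watches only the node just below its own, so a leader failure wakes one successor rather than the whole group. The sketch below is an in-memory model of that ordering logic only. `Election` and its methods are hypothetical and are not the ZooKeeper client API.

```python
from typing import Dict, List, Optional


class Election:
    """In-memory model of ZooKeeper's sequential-ephemeral-node election recipe.

    Hypothetical illustration only: it models the ordering logic, not sessions,
    networking, or the real client API.
    """

    def __init__(self) -> None:
        self._next_seq = 0
        self._live: Dict[int, str] = {}  # sequence number -> candidate name

    def join(self, name: str) -> int:
        # Like creating an EPHEMERAL_SEQUENTIAL node: the server assigns the next number.
        seq = self._next_seq
        self._next_seq += 1
        self._live[seq] = name
        return seq

    def leave(self, seq: int) -> None:
        # A closed or expired session deletes its ephemeral node.
        self._live.pop(seq, None)

    def leader(self) -> Optional[str]:
        # The candidate holding the lowest live sequence number is the leader.
        return self._live[min(self._live)] if self._live else None

    def predecessor(self, seq: int) -> Optional[int]:
        # The node a candidate watches: the largest live number below its own.
        lower = [s for s in self._live if s < seq]
        return max(lower) if lower else None

    def notified_on_delete(self, seq: int) -> List[int]:
        # Only the immediate successor watches a live node `seq`, so at most one candidate wakes up.
        higher = [s for s in self._live if s > seq]
        return [min(higher)] if higher else []
```

Take three hypothetical name node candidates. When the first one's session ends, the second becomes leader, and only the second was watching the deleted node.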
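
The Tao design slide says the shard map is based on ranges rather than consistent hashing, and that it is updated dynamically from load information. The following is a minimal sketch, assuming integer keys and a map stored as sorted range start boundaries with one owner per range. `RangeShardMap`, `lookup`, `split`, and the owner names are hypothetical; they are not the Aether or Eos interfaces. A lookup is a binary search over the range starts. Splitting one range reassigns only the keys inside it.

```python
import bisect
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class RangeShardMap:
    """Hypothetical range-based shard map: owners[i] holds keys in [starts[i], starts[i+1])."""

    starts: Tuple[int, ...]
    owners: Tuple[str, ...]

    def __post_init__(self) -> None:
        if not self.starts or len(self.starts) != len(self.owners):
            raise ValueError("need one owner per range start")
        if any(a >= b for a, b in zip(self.starts, self.starts[1:])):
            raise ValueError("range starts must be strictly increasing")

    def lookup(self, key: int) -> str:
        # Binary search for the last range whose start is <= key.
        i = bisect.bisect_right(self.starts, key) - 1
        if i < 0:
            raise KeyError(key)
        return self.owners[i]

    def split(self, index: int, at: int, new_owner: str) -> "RangeShardMap":
        # Split range `index` at key `at`; keys >= at (within that range) move to `new_owner`.
        # Every other range keeps its owner.
        lo = self.starts[index]
        hi = self.starts[index + 1] if index + 1 < len(self.starts) else None
        if at <= lo or (hi is not None and at >= hi):
            raise ValueError("split point must fall strictly inside the range")
        return RangeShardMap(
            self.starts[: index + 1] + (at,) + self.starts[index + 1 :],
            self.owners[: index + 1] + (new_owner,) + self.owners[index + 1 :],
        )
```

One plausible benefit of ranges for a load-driven shard manager is that it can carve a hot range off to a new owner with a single boundary change. The slides do not say whether that was the motivation.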
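
The client slide describes a read, watch, and refresh loop. In ZooKeeper, a standard watch is a one-time trigger. A client therefore has to set a new watch each time it re-reads, and the Java client's `getData(path, watcher, stat)` does both in one call. The sketch below models that loop with a hypothetical in-memory `FakeZnode` rather than a real ensemble. `ShardMapClient` is also hypothetical.

```python
from typing import Callable, List, Optional, Tuple

Watcher = Callable[[], None]


class FakeZnode:
    """Hypothetical in-memory znode with one-shot data watches (not the ZooKeeper API)."""

    def __init__(self, data: bytes) -> None:
        self.data = data
        self.version = 0
        self._watchers: List[Watcher] = []

    def get_data(self, watcher: Optional[Watcher] = None) -> Tuple[bytes, int]:
        # Read the data and, optionally, leave a watch for the next change.
        if watcher is not None:
            self._watchers.append(watcher)
        return self.data, self.version

    def set_data(self, data: bytes) -> None:
        self.data = data
        self.version += 1
        # Watches are one-shot: detach them all before notifying.
        fired, self._watchers = self._watchers, []
        for watcher in fired:
            watcher()


class ShardMapClient:
    """Reads the shard map on connect, watches it, and re-reads when the watch fires."""

    def __init__(self, znode: FakeZnode) -> None:
        self._znode = znode
        self.shard_map = b""
        self.version = -1
        self.reads = 0
        self._refresh()

    def _refresh(self) -> None:
        # Re-arm the watch in the same call as the read, so the next update always notifies us.
        self.shard_map, self.version = self._znode.get_data(watcher=self._refresh)
        self.reads += 1
```

Every update costs one full re-read per watching client. At the client counts on the scale slide, that cost is what dominates.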
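
The scale slide gives enough numbers for a rough estimate of refresh traffic. With 24,000 web machines and 6,000 Tao servers, one cluster has 30,000 clients. Suppose each client re-reads a 2–3 MB map after its watch fires. Then a single update moves 60–90 GB out of that cluster's ensemble, or 20–30 GB per server on a 3-node ensemble if reads spread evenly. These are assumptions: full re-reads, even spreading, decimal units, and no protocol overhead. The 40K-connection assumption used in testing would scale every figure by 4/3. Separately, ZooKeeper's default `jute.maxbuffer` limit is just under 1 MB per znode. A map this size therefore presumably spans several znodes or runs with a raised limit; the slides do not say which.

```python
from typing import Tuple


def shard_map_refresh_load(
    web_clients: int = 24_000,
    tao_clients: int = 6_000,
    map_mb: Tuple[float, float] = (2.0, 3.0),
    ensemble_size: int = 3,
    update_interval_s: float = 600.0,
):
    """Back-of-the-envelope cost of every client re-reading the shard map once.

    Assumes each client reads the full map after each update, reads are spread
    evenly over the ensemble, 1 GB = 1000 MB, and protocol overhead is ignored.
    """
    clients = web_clients + tao_clients
    total_gb = tuple(clients * mb / 1000 for mb in map_mb)               # per update, whole ensemble
    per_server_gb = tuple(gb / ensemble_size for gb in total_gb)          # per update, per server
    avg_mb_s = tuple(clients * mb / update_interval_s for mb in map_mb)   # averaged over 10 minutes
    return clients, total_gb, per_server_gb, avg_mb_s
```

The 10-minute average (100–150 MB/s) understates the problem. Every watching client is notified of the same change at once, so the real load arrives as a burst.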
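
The power-down result describes queue saturation. Simultaneous disconnects filled ZooKeeper's queues, and new requests were refused until the backlog drained. Below is a toy discrete-time model of that effect. It assumes a single bounded queue of hypothetical capacity, drained at a fixed hypothetical rate per tick by one processing thread. The slides give neither number, and ZooKeeper's real request path has several stages.

```python
def first_tick_with_room(pending: int, capacity: int, drained_per_tick: int) -> int:
    """Toy model of a disconnect storm against one bounded request queue.

    `pending` disconnect requests are waiting to be enqueued; each tick they fill
    any free slots, then the single processing thread drains up to
    `drained_per_tick`. Returns the first tick at which the queue has a free slot
    for some other (connect or data) request.
    """
    if capacity <= 0 or drained_per_tick <= 0:
        raise ValueError("capacity and drain rate must be positive")
    queued = 0
    tick = 0
    while True:
        # Waiting disconnects grab every free slot first.
        admitted = min(pending, capacity - queued)
        queued += admitted
        pending -= admitted
        if queued < capacity:
            return tick
        # The processing thread works through part of the backlog.
        queued -= min(queued, drained_per_tick)
        tick += 1
```

Take 25,000 disconnects, a made-up capacity of 1,000, and a made-up drain rate of 500 per tick. The queue first has room at tick 49. The blocked period grows linearly with the size of the storm and shrinks only if draining gets faster.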
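
The bottleneck list notes that every client connect/disconnect is a persisted update involving all nodes, with pings and timeouts handled by the leader. The proposed fix is non-persisted sessions with local session tracking. The slides do not describe the prototype's design. The sketch below only illustrates what "local" means: a per-server table of session IDs and last-heard times, expired by that server alone with no write to the ensemble. `LocalSessionTracker` and its methods are hypothetical. Time is passed in explicitly so the behavior is deterministic.

```python
from typing import Dict, List


class LocalSessionTracker:
    """Hypothetical per-server session table; nothing here is replicated to other servers."""

    def __init__(self, timeout_s: float) -> None:
        self.timeout_s = timeout_s
        self._last_seen: Dict[int, float] = {}  # session id -> last time the client was heard from
        self._next_id = 1

    def create(self, now: float) -> int:
        # A connect only adds a local entry: no quorum write.
        sid = self._next_id
        self._next_id += 1
        self._last_seen[sid] = now
        return sid

    def touch(self, sid: int, now: float) -> bool:
        # A ping or request refreshes the session unless it is unknown or already timed out.
        last = self._last_seen.get(sid)
        if last is None or now - last > self.timeout_s:
            self._last_seen.pop(sid, None)
            return False
        self._last_seen[sid] = now
        return True

    def close(self, sid: int) -> None:
        # A disconnect only removes a local entry: no quorum write.
        self._last_seen.pop(sid, None)

    def expire(self, now: float) -> List[int]:
        # Run periodically by this server; no leader round-trip is involved.
        dead = [s for s, t in self._last_seen.items() if now - t > self.timeout_s]
        for s in dead:
            del self._last_seen[s]
        return dead

    def active(self) -> int:
        return len(self._last_seen)
```

The trade-off is that a session known to only one server cannot simply move to another server. That case would need separate handling, which this sketch does not attempt.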
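
The bottleneck list says ZooKeeper is a single-threaded pipeline in which all reads are serialized, and one proposed improvement is a dedicated parallel pipeline for read-only clients. The following is a minimal sketch of that split. Writes stay on one ordered thread, and reads are answered by a pool from an immutable snapshot that the writer replaces wholesale. `SplitPipelineStore` is hypothetical and is not ZooKeeper's request-processor chain. It also drops ZooKeeper's guarantee that a session sees its own writes in order, unless the caller waits for the write first. In CPython the GIL limits true CPU parallelism, so the sketch shows structure, not speedup.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Dict, Optional


class SplitPipelineStore:
    """Hypothetical store with one ordered write path and a parallel read path."""

    def __init__(self, read_threads: int = 4) -> None:
        self._snapshot: Dict[str, bytes] = {}
        self._writer = ThreadPoolExecutor(max_workers=1)  # applies writes in submission order
        self._readers = ThreadPoolExecutor(max_workers=read_threads)

    def _apply(self, path: str, value: bytes) -> None:
        # Only the writer thread runs this: copy, modify, then publish the new map.
        updated = dict(self._snapshot)
        updated[path] = value
        self._snapshot = updated  # readers see the old map or the new one, never a mix

    def _read(self, path: str) -> Optional[bytes]:
        return self._snapshot.get(path)

    def write(self, path: str, value: bytes) -> "Future[None]":
        return self._writer.submit(self._apply, path, value)

    def read(self, path: str) -> "Future[Optional[bytes]]":
        return self._readers.submit(self._read, path)

    def close(self) -> None:
        self._writer.shutdown(wait=True)
        self._readers.shutdown(wait=True)
```

Copying the whole map on every write costs time proportional to its size. That is tolerable only because writes are rare, which matches the workload assumption of one shard-map update every 10 minutes.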
