
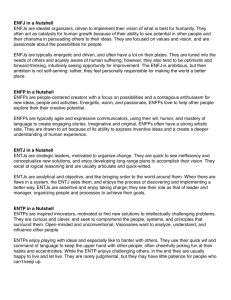

Session 1
IR in a Nutshell: Applications, Research, and Challenges
Tamer Elsayed
Feb 21st 2013

Roadmap
What is Information Retrieval (IR)?
● Overview and applications
Overview of my research interests
● Large-scale problems
● MapReduce Extensions
● Twitter Analysis
The future of IR research
● SWIRL 2012

WHAT IS IR? OVERVIEW & APPLICATIONS/RESEARCH TOPICS

Information Retrieval (IR)
[Diagram labels: information need, Query, Unstructured, Hits]

Who and Where?
*Source: Matt Lease (IR Course at UTexas)

IR is not just “Web Page” Ranking or Document Retrieval

Web Search: Google
● Search suggestions
● Vertical search
● Sponsored search
● Query-biased summarization
● Search shortcuts
● Vertical search (news, blog, image)

Web Search: Google II
● Spelling correction
● Personalized search / social ranking
● Vertical search (local)

Cross-Lingual IR
1/3 of the Web is not in English
About 50% of Web users do not use English as their primary language
Many (maybe most) search applications have to deal with multiple languages
● monolingual search: search in one language, but with many possible languages
● cross-language search: search in multiple languages at the same time

Routing / Filtering
Given a standing query, analyze new information as it arrives
● Input: all email, RSS feed or listserv, …
● Typically classification rather than ranking
● Simple example: Ham vs. spam
*Source: Matt Lease (IR Course at UTexas)

Content-based Music Search
*Source: Matt Lease (IR Course at UTexas)

Speech Retrieval
*Source: Matt Lease (IR Course at UTexas)

Entity Search
*Source: Matt Lease (IR Course at UTexas)

Question Answering & Focused Retrieval
*Source: Matt Lease (IR Course at UTexas)

Expert Search
*Source: Matt Lease (IR Course at UTexas)

Blog Search
*Source: Matt Lease (IR Course at UTexas)

μ-Blog Search (e.g.
Twitter)
*Source: Matt Lease (IR Course at UTexas)

e-Discovery
*Source: Matt Lease (IR Course at UTexas)

Book Search
Find books or more focused results
Detect / generate / link table of contents
Classification: detect genre (e.g. for browsing)
Detect related books, revised editions
Challenges: Variable scan quality, OCR accuracy, Copyright, etc.

Other Visual Interfaces
*Source: Matt Lease (IR Course at UTexas)

MY RESEARCH

My Research
[Diagram: Text + Large-Scale Processing, with two collections and their user applications: emails (Enron, ~500,000) for Identity Resolution; web pages (ClueWeb, ~1,000,000,000) for Web Search]

Back in 2009 …
Before 2009, only small text collections were available
● Largest: ~1M documents
ClueWeb09
● Crawled by CMU in 2009
● ~1B documents!
● need to move to cluster environments
MapReduce/Hadoop seems like a promising framework

MapReduce Framework
(a) Map: each input record (k1, v1) → [k2, v2]
(b) Shuffle: group values by keys
(c) Reduce: (k2, [v2]) → [(k3, v3)] → output
Framework handles “everything else”!
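
The three stages on the MapReduce slide can be sketched in a single Python process. This is only an illustration of the data flow, not Hadoop or Ivory code: `run_mapreduce`, `count_map`, and `count_reduce` are hypothetical names, and the term-count job is an assumed example rather than one taken from the slides.

```python
from collections import defaultdict

def run_mapreduce(records, map_fn, reduce_fn):
    """Single-process illustration of map, shuffle, and reduce."""
    # (a) Map: each input (k1, v1) yields intermediate (k2, v2) pairs.
    intermediate = []
    for k1, v1 in records:
        intermediate.extend(map_fn(k1, v1))

    # (b) Shuffle: group all intermediate values by their key k2.
    groups = defaultdict(list)
    for k2, v2 in intermediate:
        groups[k2].append(v2)

    # (c) Reduce: each (k2, [v2]) group yields output (k3, v3) pairs.
    output = []
    for k2 in sorted(groups):
        output.extend(reduce_fn(k2, groups[k2]))
    return output

def count_map(doc_id, text):
    # Emit (term, 1) for every term occurrence in the document.
    for term in text.lower().split():
        yield term, 1

def count_reduce(term, counts):
    # Sum the partial counts collected for one term.
    yield term, sum(counts)

docs = [("d1", "web search"), ("d2", "web page ranking")]
result = run_mapreduce(docs, count_map, count_reduce)
print(result)
```

On a real cluster the framework distributes the map and reduce calls across machines and performs the shuffle over the network; the user still supplies only the two functions.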
Ivory
http://ivory.cc
E2E Search Toolkit using MapReduce
Completely designed for the Hadoop environment
Experimental Platform for research
Supports common text collections
● + ClueWeb09
Open source release
Implements state-of-the-art retrieval models

(1) Pairwise Similarity in Large Collections
[Figure: documents paired with similarity scores such as 0.20, 0.30, 0.54, 0.21, 0.00, 0.34, 0.13, 0.74]
Applications:
● Clustering
● “more-like-that” queries

Decomposition
Each term contributes only if it appears in …
[Diagram labels: map, reduce]

(2) Cross-Lingual Pairwise Similarity
Find similar document pairs in different languages
More difficult than monolingual!
Multilingual text mining, Machine Translation
Application: automatic generation of potential “interwiki” language links
Locality-sensitive Hashing
● Vectors close to each other are likely to have similar signatures

Solution Overview
● Input: Nf German articles, Ne English articles
● Preprocess, CLIR projection → Ne+Nf English document vectors, e.g. <nobel=0.324, prize=0.227, book=0.01, …>
● Signature generation (Random Projection / Minhash / Simhash) → Ne+Nf signatures, e.g. 11100001010, 01110000101
● Sliding window algorithm → similar article pairs

(3) Approximate Positional Indexes
“Learning to Rank” models
● Term positions → proximity features → learn effective ranking functions (√)
● Exact positions: large index (X), slow query evaluation (X)
● Approximate positions: smaller index (√), faster query evaluation (√)
Close Enough is Good Enough?

Fixed-Width Buckets
Buckets of length W
[Figure: document d1 divided into buckets numbered 1 to 5]
[Figure: document d2 divided into buckets numbered 1 to 3]

(4) Pseudo Training Data for Web Rankers
Documents, queries, and relevance judgments
● Important driving force behind IR innovation
● In industry, easy to get
● In academia, hard and really expensive

Web Graph
[Figure: pages P1 to P7 linked by anchor text such as “web search”, “SIGIR 2012”, and “Google”]

Queries and Judgments?
anchor text lines ≈ pseudo queries
target pages ≈ relevant candidates
[Figure: the same graph of pages P1 to P7, with anchor text “SIGIR 2012”, “web search”, “Google”, and “Bing”]
noise reduction?

(5) Extending MapReduce Framework
Iterative Computations (iHadoop)
Concurrent Jobs with shared data
● m maps - r reduces instead of 1 map - 1 reduce

(6) Twitter Analysis
Real-time search in Twitter
● TREC 2011 (6th out of 59 teams)
● TREC 2013?
Answering Real-time Questions from Arabic Social Media
● NPRP-submitted

FUTURE RESEARCH DIRECTIONS

SWIRL 2012

Goal of Report
Inspire researchers and graduate students to address the questions raised
Provide funding agencies data to focus and coordinate support for information retrieval research.
Participants were asked to focus on efforts that could be handled in an academic setting, without the requirement of large-scale commercial data.

Key Themes (across Topics)
Not just a ranked list
● move beyond the classic “single adhoc query and ranked list” approach
Help for users
● support users more broadly, including ways to bring IR to inexperienced, illiterate, and disabled users.
Capturing context
● Treats people using search systems, their context, and their information needs as critical aspects needing exploration.
Information, not documents
● beyond document retrieval and into more complex types of data and more complicated results
New Domains
● data with restricted access, collections of “apps,” and richly connected workplace data
Evaluation
● suggest new techniques for evaluation

“Most Interesting” Topics

[1] Conversational Answer Retrieval
IR: provides ranked lists of documents in response to a wide range of keyword queries
QA: provides more specific answers to a very limited range of natural language questions.
Goal: combine the advantages of both to provide effective retrieval of appropriate answers to a wide range of questions expressed in natural language, with rich user-system dialogue

Proposed Research
Questions: open-domain, natural language text questions
Answers: Develop more general approaches to identifying as many constraints as possible on the answers for questions
Dialogue would be initiated by the searcher and proactively by the system, for:
● refining the understanding of questions
● improving the quality of answers
Answers: short answers, text passages, clustered groups of passages, documents, or even groups of documents may be appropriate answers.
Even tables, figures, images, or videos

Challenges
Definitions of question and answer for open domain searching
Techniques for representing questions and answers
Techniques for reasoning about and ranking answers
Techniques for representing a mixed-initiative CAR dialogue
Effective dialogue actions for improving question understanding
Effective dialogue actions for refining answers

[2] Finding What You Need with Zero Query Terms (or Less)
Function without an explicit query, depending on context and personalization in order to understand user needs
Anticipate user needs and respond with information appropriate to the current context without the user having to enter a query (zero query terms) or even initiate an interaction with the system (or less).
In a mobile context: take the form of an app that recommends interesting places and activities based on the user’s location, personal preferences, past history, and environmental factors such as weather and time.
In a traditional desktop environment: might monitor ongoing activities and suggest related information, or track news, blogs, and social media for interesting updates.
Imagine a system that automatically gathers information related to an upcoming task.

Proposed Research
New representations of information and user needs, along with methods for matching the two
Modeling person, task, and context
Methods for finding “objects of interest”, including content, people, objects and actions
Methods for determining what, how and when to show material of interest.
Challenges
Time- and geo-sensitivity; trust, transparency, privacy; determining interruptibility; summarization
Power management in mobile contexts
Evaluation

[3] Mobile Information Retrieval Analytics (MIRA)
No company or researcher has an understanding of mobile information access across a variety of tasks, modes of interaction, or software applications.
For example, a search service provider might know that a query was issued, but not know whether the results it provided resulted in consequent action.
The identification of common types of web search queries led to query classification and algorithms tuned for different purposes, which improved web search accuracy.
A similar understanding for mobile information seeking would focus research on the problems of highest value to mobile users.
Study what information, what kind of information, and what granularity of information to deliver for different tasks and contexts

Proposed Research
Methodology and tools for doing large-scale collection of data about mobile information access.
Research on incentive mechanisms is required to understand situations in which people are willing to allow their behavior to be monitored.
Research on privacy is required to understand what can be protected by dataset licenses alone, what must be anonymized, and tradeoffs between anonymization and data utility.
Development of well-defined information seeking tasks
Support quantitative evaluation in well-defined evaluation frameworks that lead to repeatable scientific research

Challenges
Developing incentive mechanisms
Developing data collections that are sufficiently detailed to be useful while still protecting people’s privacy.
Collection of data in a manner that university internal review boards will consider acceptable ethically.
Collection of data in a manner that does not violate the Terms of Use restrictions of commercial service providers.

[4] Empowering Users to Search and Learn
Search engines are currently optimized for look-up tasks and not tasks that require more sustained interactions with information
People have been conditioned by current search engines to interact in particular ways that prevent them from achieving higher levels of learning.
We seek to empower users to be more proactive and critical thinkers during the information search process.

[5] The Structure Dimension
Better integration of structured and unstructured information to seamlessly meet a user’s information needs is a promising, but underdeveloped area of exploration.
Named entities, user profiles, contextual annotations, as well as (typed) links between information objects ranging from web pages to social media messages.

[6] Understanding People in Order to Improve Information (Retrieval) Systems
Development of a research resource for the IR community:
1. from which hypotheses about how to support people in information interactions can be developed
2. in which IR system designs can be appropriately evaluated.
Conducting studies of people
● before, during, and after engagement with information systems,
● at a variety of levels,
● using a variety of methods:
  • ethnography
  • in situ observation
  • controlled observation
  • large-scale logging

Thank You!