Determining Validity and Reliability of Key Assessments

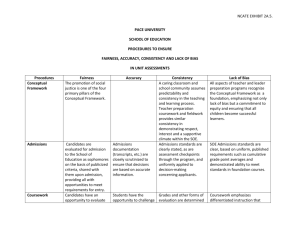

Susan Malone, Mercer University

“The unit has taken effective steps to eliminate bias in assessments and is working to establish the fairness, accuracy, and consistency of its assessment procedures and unit operations.”

- Address contextual distractions (inappropriate noise, poor lighting, discomfort, lack of proper equipment)
- Address problems with assessment instruments (missing or vague instructions, poorly worded questions, poorly produced copies that make reading difficult)
- [Review candidate performance to determine if candidates perform differentially with respect to specific demographic characteristics]
- [ETS: Guidelines for Fairness Review of Assessments; Pearson: Fairness and Diversity in Tests]
- Assure candidates are exposed to the knowledge, skills, and dispositions (K, S, & D) that are being evaluated
- Assure candidates know what is expected of them
- Instructions and timing of assessments are clearly stated and shared with candidates
- Candidates are given information on how the assessments are scored and how they count toward completion of programs
- Assessments are of appropriate type and content to measure what they purport to measure
- Aligned with the standards and/or learning proficiencies they are designed to measure [content validity]
- Produce dependable results or results that would remain constant on repeated trials
  - Provide training for raters that promotes similar scoring patterns
  - Use multiple raters
  - Conduct simple studies of inter-rater reliability
  - Compare results to other internal or external assessments that measure comparable K, S, & D [concurrent validity]

Dispositions Assessment; Portfolios; Analysis of Student Learning; Summative Evaluation

- Alignment with INTASC standards, program standards, and the Conceptual Framework (accuracy; content validity)
  - Matrices
  - LiveText standards mapping
  - PRS Relationship to Standards section
  - PRS alignment with program standards requirement in Evidence for Meeting Standards section
- Alignment with other assessments (accuracy; concurrent validity)
  - Matrices
  - Potential documentation within LiveText Standards Correlation Builder
- Rubrics/assessment expectations shared with candidates in courses, field experience orientations, and LiveText (fairness)
- Rubrics/assessment expectations shared with cooperating teachers by university supervisors (consistency)
- Statistical study (in process) examining correlations among candidate performances on multiple assessments (where those assessments address comparable K, S, & D) (consistency; concurrent validity)
- Multiple assessors (consistency)
- Exploration of faculty’s assumptions regarding the purpose of the assessment, expectations of behaviors, and meaning of the rating scale (consistency; reliability)
- Revision of rating scale, addition of more specific indicators, development of two versions (courses/field experiences) (accuracy; content validity)
- Norming session with supervisors (consistency; reliability)
- Revision of instructions to align more closely with rubric expectations and expected process (fairness; avoidance of bias)
- Review of coursework and fieldwork to ensure candidates are prepared for assignment (fairness)
- Seeking feedback from experts (P12 partners) on whether assignment requirements and assessment criteria are authentic (accuracy; content validity)
- Annual review of data disaggregated by demographic factors (gender, race/ethnicity, site, degree program) (fairness; avoidance of bias)
- Recent revision of portfolios and rubrics to align with new INTASC (accuracy; content validity)
- Revision of rubrics to include more specific indicators related to the standards (change from generic rating descriptors) (accuracy; content validity)
- Cross-college workshop on artifact selections (accuracy; content validity)
- Annual review of artifact selections (accuracy; content validity)
- Inter-rater reliability study (consistency; reliability)
- Review of rubric expectations and all other PR and ST assignments to ensure opportunities to demonstrate all standards and indicators during experience (fairness)
- Workshops for supervisors on the rubric expectations (consistency)
- Feedback from cooperating teachers on relevance of the assessment (accuracy; content validity)
- Annual review of data disaggregated by demographic variables (fairness; avoidance of bias)
- Statistical study to identify correlations among entry requirements and successful program completion
- Statistical study to determine if key assessments and entry criteria are predictive of program success (as defined by success in student teaching)
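
The slides mention inter-rater reliability studies and correlation studies across assessments but do not say which statistics were used. As a rough illustration only, the sketch below assumes two raters score the same candidates on a shared rubric scale and that two assessments yield numeric scores for the same candidates. Cohen's kappa and Pearson's r are common choices for these two questions, not necessarily the ones the unit applied. All function names and scores here are hypothetical.

```python
import math
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Agreement between two raters on the same items, corrected for chance."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Proportion of items on which the two raters gave the same score.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's score distribution.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical category throughout
    return (observed - expected) / (1 - expected)


def pearson_r(xs, ys):
    """Pearson correlation between two lists of paired numeric scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired scores")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical rubric scores (1-3) from two supervisors for eight candidates.
supervisor_1 = [3, 2, 3, 1, 2, 3, 2, 1]
supervisor_2 = [3, 2, 2, 1, 2, 3, 3, 1]
print(round(cohen_kappa(supervisor_1, supervisor_2), 3))

# Hypothetical scores for the same candidates on two assessments.
portfolio = [78, 85, 92, 64, 70]
summative = [80, 83, 95, 60, 75]
print(round(pearson_r(portfolio, summative), 3))
```

A kappa near 1 suggests raters apply the rubric consistently, while a value near 0 suggests agreement no better than chance. A strong positive r between two assessments that target comparable K, S, & D is one piece of evidence for concurrent validity. With small samples, both figures should be read cautiously.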