A comparison of distributed data storage middleware for HPC, GRID and Cloud


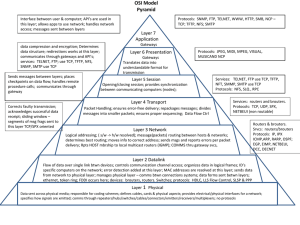

A comparison of distributed data storage middleware for HPC, GRID and Cloud

Mikhail Goldshtein (1), Andrey Sozykin (1), Grigory Masich (2) and Valeria Gribova (3)

(1) Institute of Mathematics and Mechanics UrB RAS, Russia, Yekaterinburg
(2) Institute of Continuous Media Mechanics UrB RAS, Russia, Perm
(3) Institute of Automation and Control Processes FEB RAS, Russia, Vladivostok

European Middleware Initiative

• EMI - Software platform for high performance distributed computing, http://www.eu-emi.eu
• Joint effort of the major European distributed computing middleware providers (ARC, dCache, gLite, UNICORE)
• Widely used in Europe, including the Worldwide LHC Computing Grid (WLCG)
• Higgs boson:
  • Alberto Di Meglio: "Without the EMI middleware, such an important result could not have been achieved in such a short time"

Storage solutions in EMI

• dCache - http://www.dcache.org/
• Disk Pool Manager (DPM) - https://svnweb.cern.ch/trac/lcgdm/wiki/Dpm
• StoRM (STOrage Resource Manager) - http://storm.forge.cnaf.infn.it/

dCache

Disk Pool Manager

StoRM

Usage statistics in WLCG

Distributed storage systems

Traditional approach:
• Grid
• Distributed file systems (IBM GPFS, Lustre File System, etc.)

Modern technologies:
• Standard Internet protocols (Parallel NFS, WebDAV, etc.)
• Cloud storage (Amazon S3, HDFS, etc.)
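To illustrate what "standard Internet protocols" means in practice, the sketch below prepares a WebDAV directory listing request using only the Python standard library, with no grid-specific client. The host name, port and path are hypothetical placeholders, not endpoints from the slides, and a real storage element would normally also require authentication (for example an X.509 certificate), which is omitted here.

```python
from urllib.parse import quote
from urllib.request import Request


def build_listing_request(base_url: str, path: str) -> Request:
    """Build (but do not send) a WebDAV PROPFIND request for a directory.

    PROPFIND with "Depth: 1" asks the server for the properties of the
    collection itself and of its immediate members (RFC 4918).
    """
    # Percent-encode the path; "/" is kept as a separator by default.
    url = base_url.rstrip("/") + "/" + quote(path.lstrip("/"))
    return Request(url, method="PROPFIND", headers={"Depth": "1"})


# Hypothetical endpoint, used only to show the shape of the request.
req = build_listing_request("https://storage.example.org:2880", "/data/run 1")
print(req.get_method(), req.full_url)
```

Passing the request to `urllib.request.urlopen` would send it; the response is an XML multistatus document that any HTTP-capable tool can parse.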
Classic NFS

Parallel NFS

Comparison results

| Feature | dCache | DPM | StoRM |
| --- | --- | --- | --- |
| Grid protocols | SRM, xroot, dcap, GridFTP | SRM, RFIO, xroot, GridFTP | SRM, RFIO, xroot, GridFTP, file |
| Standard protocols | NFS 4.1, WebDAV | NFS 4.1, WebDAV | |
| Cloud backend | HDFS (in development) | HDFS, Amazon S3 | |
| Quality of documentation | High | Medium | High |
| Ease of administration | Easy | Medium | Easy |

Distributed dCache-based Tier 1 WLCG storage

Implementation

Implementation details

• Hardware: 4 x Supermicro servers (3 in Yekaterinburg, 1 in Perm), 210 TB usable capacity (252 TB raw capacity; RAID5 + hot spare are used)
• OS: Scientific Linux 6.3
• dCache 2.6 from the EMI repository
• Protocol: NFS v4.1 (Parallel NFS)
• RHEL has a Parallel NFS client, so no additional software needs to be installed on the clusters

Performance testing

• IOR test (http://www.nersc.gov/systems/trinity-nersc-8-rfp/nersc-8-trinity-benchmarks/ior/)

Future work

• Evaluation of NFS performance over 10GE and WAN
• Evaluation of dCache in the experiments (Particle Image Velocimetry and so on)
• Participation in GRID projects:
  • Grid of Russian National Nanotechnology Network
  • WLCG (through the Joint Institute for Nuclear Research, Dubna, Russia)
• Connection to a Hadoop cluster (once dCache supports HDFS)

Thank you!

Andrey Sozykin
Institute of Mathematics and Mechanics UrB RAS, Russia, Yekaterinburg
avs@imm.uran.ru
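As a check on the capacity figures given under Implementation details, the sketch below asks which RAID5 group size would reproduce the quoted ratio of 210 TB usable to 252 TB raw. The slides do not state the disk layout, so the model is an assumption: each group loses one disk to RAID5 parity and one to a hot spare, and both capacities are quoted in the same units.

```python
from fractions import Fraction


def usable_fraction(disks_per_group: int, parity_disks: int = 1,
                    hot_spares: int = 1) -> Fraction:
    """Fraction of raw capacity left for data in one disk group.

    Assumed model (not from the slides): every group gives up
    `parity_disks` to RAID5 parity and `hot_spares` to standby disks.
    """
    data_disks = disks_per_group - parity_disks - hot_spares
    if data_disks <= 0:
        raise ValueError("group too small for the requested parity and spares")
    return Fraction(data_disks, disks_per_group)


RAW_TB = 252      # raw capacity quoted in the slides
USABLE_TB = 210   # usable capacity quoted in the slides

observed = Fraction(USABLE_TB, RAW_TB)  # reduces to 5/6

# Search plausible group sizes for an exact match with the quoted ratio.
matching_group_sizes = [n for n in range(3, 37)
                        if usable_fraction(n) == observed]
print(observed, matching_group_sizes)  # prints: 5/6 [12]
```

Under this model the quoted numbers are consistent with 12-disk groups (10 data disks, 1 parity, 1 hot spare). Other layouts or file system overhead could produce the same ratio, so this is a plausibility check, not a description of the actual hardware.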