IGVC12_Poster

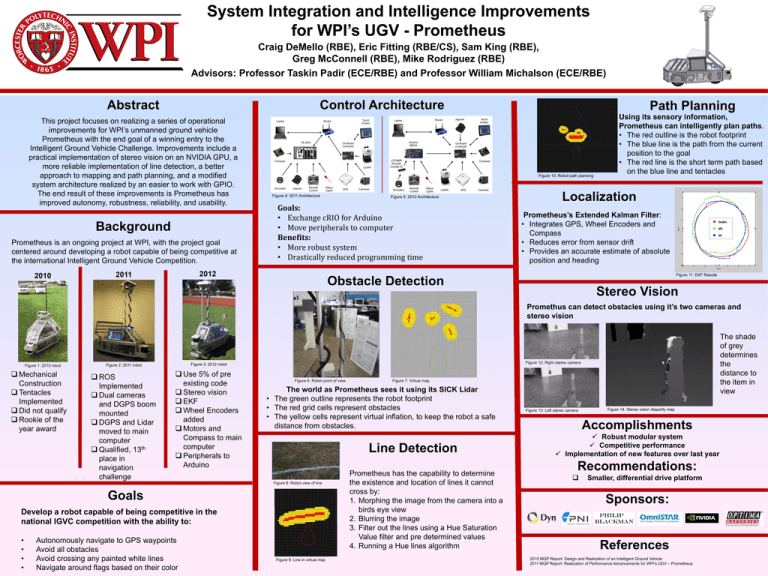

System Integration and Intelligence Improvements for WPI’s UGV - Prometheus

Craig DeMello (RBE), Eric Fitting (RBE/CS), Sam King (RBE), Greg McConnell (RBE), Mike Rodriguez (RBE)
Advisors: Professor Taskin Padir (ECE/RBE) and Professor William Michalson (ECE/RBE)

Abstract
This project focuses on realizing a series of operational improvements for WPI’s unmanned ground vehicle, Prometheus, with the end goal of a winning entry in the Intelligent Ground Vehicle Competition (IGVC). Improvements include a practical implementation of stereo vision on an NVIDIA GPU, a more reliable implementation of line detection, a better approach to mapping and path planning, and a modified system architecture built around a GPIO interface that is easier to work with. As a result, Prometheus has improved autonomy, robustness, reliability, and usability.

Background
Prometheus is an ongoing project at WPI whose goal is to develop a robot capable of being competitive at the international Intelligent Ground Vehicle Competition.

2010
• Mechanical construction
• Tentacles implemented
• Did not qualify
• Rookie of the year award
Figure 1: 2010 robot

2011
• ROS implemented
• Dual cameras and DGPS boom mounted
• DGPS and Lidar moved to main computer
• Qualified, 13th place in navigation challenge
Figure 2: 2011 robot

2012
• Uses 5% of pre-existing code
• Stereo vision
• EKF
• Wheel encoders added
• Motors and compass moved to main computer
• Peripherals moved to Arduino
Figure 3: 2012 robot

Goals
Develop a robot capable of being competitive at the IGVC, with the ability to:
• Autonomously navigate to GPS waypoints
• Avoid all obstacles
• Avoid crossing any painted white lines
• Navigate around flags based on their color

Control Architecture
Figure 4: 2011 Architecture
Figure 5: 2012 Architecture
Goals:
• Exchange cRIO for Arduino
• Move peripherals to computer
Benefits:
• More robust system
• Drastically reduced programming time

Localization
Prometheus’s Extended Kalman Filter (EKF):
• Integrates GPS, wheel encoders, and compass
• Reduces error from sensor drift
• Provides an accurate estimate of absolute position and heading
Figure 11: EKF Results

Obstacle Detection
Figure 6: Robot point of view
Figure 7: Virtual map
The world as Prometheus sees it using its SICK Lidar:
• The green outline represents the robot footprint
• The red grid cells represent obstacles
• The yellow cells represent virtual inflation, which keeps the robot a safe distance from obstacles

Stereo Vision
Prometheus can detect obstacles using its two cameras and stereo vision. In the disparity map, the shade of grey indicates the distance to the item in view.
Figure 12: Right stereo camera
Figure 13: Left stereo camera
Figure 14: Stereo vision disparity map

Line Detection
Prometheus can determine the existence and location of lines it cannot cross by:
1. Warping the camera image into a bird's-eye view
2. Blurring the image
3. Isolating the lines with a Hue-Saturation-Value (HSV) filter and predetermined threshold values
4. Running a Hough lines algorithm
Figure 8: Robot view of line
Figure 9: Line in virtual map

Path Planning
Using its sensory information, Prometheus can intelligently plan paths.
• The red outline is the robot footprint
• The blue line is the path from the current position to the goal
• The red line is the short-term path, based on the blue line and the tentacles
Figure 10: Robot path planning

Accomplishments
• Robust modular system
• Competitive performance
• Implementation of new features over last year

Recommendations:
• Smaller, differential drive platform

References
2010 MQP Report: Design and Realization of an Intelligent Ground Vehicle
2011 MQP Report: Realization of Performance Advancements for WPI’s UGV – Prometheus
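
Supplementary sketch: virtual inflation
The virtual map description above says that yellow cells mark "virtual inflation" around obstacle cells. The poster does not say how Prometheus computes this, so the following is only a minimal sketch of the general idea. It assumes a rectangular occupancy grid, an inflation radius measured in whole cells, and a square neighbourhood around each obstacle. The names `inflate_obstacles` and `render` are hypothetical and are not taken from the project's code.

```python
from typing import Set, Tuple

Cell = Tuple[int, int]  # (row, col) index into the grid


def inflate_obstacles(obstacles: Set[Cell], rows: int, cols: int,
                      radius: int) -> Set[Cell]:
    """Return the non-obstacle cells within `radius` cells of any obstacle.

    Assumption: a square neighbourhood is used, i.e. a cell is inflated
    if both its row and column offsets from an obstacle are <= radius.
    """
    inflated: Set[Cell] = set()
    for r, c in obstacles:
        for dr in range(-radius, radius + 1):
            for dc in range(-radius, radius + 1):
                nr, nc = r + dr, c + dc
                inside = 0 <= nr < rows and 0 <= nc < cols
                if inside and (nr, nc) not in obstacles:
                    inflated.add((nr, nc))
    return inflated


def render(obstacles: Set[Cell], inflated: Set[Cell],
           rows: int, cols: int) -> str:
    """Draw the grid as text: '#' obstacle, '+' inflation, '.' free."""
    lines = []
    for r in range(rows):
        row = ""
        for c in range(cols):
            if (r, c) in obstacles:
                row += "#"
            elif (r, c) in inflated:
                row += "+"
            else:
                row += "."
        lines.append(row)
    return "\n".join(lines)


if __name__ == "__main__":
    obstacles = {(2, 2)}
    inflated = inflate_obstacles(obstacles, rows=5, cols=5, radius=1)
    print(render(obstacles, inflated, rows=5, cols=5))
```

A planner that treats both obstacle and inflated cells as blocked can plan for the robot as if it were a single point, provided the radius covers at least half the robot's width.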