novel prediction and the underdetermination of scientific theory

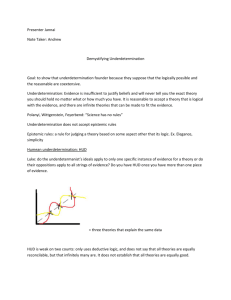

NOVEL PREDICTION AND THE UNDERDETERMINATION OF SCIENTIFIC THEORY BUILDING
Richard Dawid, Univ. of Vienna

A General Strategy
• To the Bayesian, confirmation is an increase in the probability of a theory’s truth due to empirical data: P(T|E)/P(T) = P(E|T)/P(E).
• Per se, it does not matter whether or not the data influenced theory construction. Novel confirmation (n.c.) does not directly raise the confirmation value.
• However, the observation that novel confirmation took place can itself constitute data which confirm a statement that in turn raises the probability of T.

Some suggested ideas
• A number of authors have used this strategy and proposed various qualities which are assessed by observing novel confirmation.
• Patrick Maher: n.c. confirms that the method of theory construction (rather than the theory itself) is good.
• Hitchcock & Sober: n.c. indicates that there is no overfitting.
• Kahn, Landsberg, Stockman: n.c. confirms that the scientist who constructed the theory before confirmation is competent.
• Eric Barnes: n.c. confirms that the scientist who endorsed the theory before confirmation is capable.

The same principle, a different suggestion
• Claim: Looking for novel confirmation can lead to an assessment of a crucial characteristic of the scientific context: the underdetermination of scientific theory building by the available data.
• In a number of scientific contexts, in particular in modern physics, this assessment constitutes the most important extra value of novel confirmation over accommodation.
• This does not imply that other construals of an extra value of n.c. are false. N.c. may well work at various levels. However, we suggest that underdetermination plays a pivotal role in the case of physics.

Underdetermination of Scientific Theory Building
[Diagram: kinds of underdetermination, logically vs. ampliatively, by all possible evidence vs. by available evidence; labels: Hume; Quine-Duhem; Quine (‘reasons for indet. of transl.’); van Fraassen; Sklar, Stanford (transient underdet.); Scientific Underdet.]

The Framework
• Assumption: a priori, scientists can’t be expected to construct the theory that will be empirically successful in the future.
• If many scientific theories are possible which
  – fit the available data,
  – satisfy “scientificality conditions” C, and
  – give different predictions regarding upcoming experiments,
  then chances are high that scientists find the wrong theory. (In the simplest model, if there are i such theories, the chance of finding the right one is 1/i.)
• Alluding to additional criteria like simplicity, beauty, etc. adds to the conditions C but does not change the argument.

The Argument
• Conversely, if novel predictive success occurs, that indicates limitations to scientific underdetermination.
• Meta-inductive reasoning then leads to the inference that, if limitations to scientific underdetermination frequently occur at some level at one stage, they are likely to occur at later stages as well.
=> Therefore, the observation of novel confirmation raises the probability of the theory’s truth.
• By contrast, accommodation does not tell us anything about scientific underdetermination.
  o In the extreme case where no limitations are assumed, P(T) = 0 and no confirmation occurs.
  o If one assumes some limitations a priori, there is some confirmation, but it is weaker than in the case of novel confirmation.

Comparison with other Approaches (1)
• Example: the standard model of particle physics (SM).
  – The Higgs is the last of many SM predictions. All others were successful.
  – The SM is strongly believed in due to its n.c. successes.
  – The SM was developed in the early 60s, got its first n.c. in the mid 70s and has since been believed in by most physicists.
  – The situation today is quite different from back then: there is lots of new knowledge, and the developers and early endorsers of that time are not the leading experts today.
  o Relating an evaluation of developers/endorsers to theory assessment seems problematic.
    – People don’t believe in the SM today because n.c. showed Weinberg’s competence in the 1960s.
    – Whether or not there were early endorsers does not matter either.
=> It seems far more plausible that n.c. evaluates the scientific context rather than scientific agents.

Comparison with other Approaches (2)
• Hitchcock and Sober’s approach does not work in the SM case either.
  – Developing the SM is a matter of finding a general scheme that solves general consistency problems. Overfitting plays no role.
• Assessment of scientific underdetermination addresses precisely the context of finding a consistent solution and asking how many alternative solutions exist.
• The SM shows these points with particular clarity:
  – Due to the strong theoretical constraints, it highlights the importance of consistency.
  – Due to the long time periods, the problems of Kahn et al. and Barnes become more conspicuous.
• However, the use of n.c. seems characteristic of fundamental physics.

Distinction between AoU (assessment of underdetermination) and other Ideas
Scenario:
  – First, one theory A is developed and endorsed.
  – Then A gets empirically confirmed by data D.
  – Then someone else discovers that theory B also reproduces data D.
? Question: does novel confirmation favour A over B?
• Many approaches (Kahn et al., Hitchcock & Sober) would say yes.
• AoU says no: underdetermination is the same for A and B. The reduction of P(T) due to the newly known alternative lies at the same level as the comparison between A and B.
  o When consistency is a dominating factor, AoU looks good.
  o When not, other approaches fare better.
! However: predictive power seems strongest when consistency is an issue.

Conclusion
• Assessment of scientific underdetermination constitutes a crucial reason for the higher confirmation value of novel confirmation.
• It applies in contexts where questions of consistency are important in theory building.
• Arguably, those are the contexts where novel confirmation occurs most frequently.
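The “simplest model” of the framework (i viable theories, so a chance of 1/i of finding the right one) can be made concrete as a small Bayesian toy calculation. This sketch is not from the talk. The function names, the three-valued prior over i, and the assumption that successive novel predictions are independent are all illustrative. It treats the degree of underdetermination i as unknown and lets novel successes shift weight toward small i. The meta-inductive step then reads off the chance that the next theory, built under the same i, is the right one. Accommodation is modelled as data that fit every i equally well.

```python
from fractions import Fraction

# Hypothetical toy model: i is the number of viable alternative theories
# (fitting the data, satisfying conditions C, differing on upcoming tests).
# Following the simplest model, a theory built under i alternatives makes a
# successful novel prediction with probability 1/i.


def update_on_novel_successes(prior, successes):
    """Bayesian update of a prior over i after `successes` novel predictive
    successes (independence of the predictions is an assumption)."""
    unnormalized = {i: p * Fraction(1, i) ** successes for i, p in prior.items()}
    evidence = sum(unnormalized.values())  # P(E)
    return {i: w / evidence for i, w in unnormalized.items()}  # P(i | E)


def update_on_accommodation(prior):
    """Accommodated data are fitted after the fact, so P(E | i) = 1 for every
    i: the data carry no information about underdetermination."""
    return dict(prior)


def chance_next_theory_correct(dist):
    """Meta-inductive step: assume the next stage shares the same (unknown) i,
    so the chance of finding the right theory is the expectation of 1/i."""
    return sum(p * Fraction(1, i) for i, p in dist.items())


# Illustrative prior, not taken from the talk: equal weight on 1, 2 or 10 alternatives.
PRIOR = {1: Fraction(1, 3), 2: Fraction(1, 3), 10: Fraction(1, 3)}

after_novel = update_on_novel_successes(PRIOR, 1)
after_accommodation = update_on_accommodation(PRIOR)

print(chance_next_theory_correct(PRIOR))                # 8/15
print(chance_next_theory_correct(after_novel))          # 63/80
print(chance_next_theory_correct(after_accommodation))  # 8/15
```

Under this assumed prior, one novel success raises the chance that the next theory is correct from 8/15 to 63/80, while accommodation leaves it at 8/15. If the prior puts all its weight on ever larger i, this chance tends to 0, which mirrors the extreme case on “The Argument” slide.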