Uniform Information Density - Computational Linguistics and Phonetics

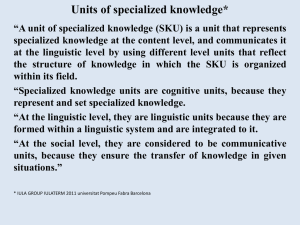

Seminar „Information Theoretic Approaches to the Study of Language“
Redundancy and reduction: Speakers manage syntactic information density
T. Florian Jaeger (2010)
Torsten Jachmann
16.05.2014

So far
• Frequent words have shorter linguistic forms (Zipf)
  o Orthographic; phonological
• Word length (phonemes/syllables) is correlated with predictability
• Information is context-dependent
  o The more probable a word, the more redundant it is
• More predictable instances of the same word are produced shorter and with less phonological and phonetic detail

Idea
Speakers manage the amount of information per amount of linguistic signal (at choice points)
• Morphosyntactic:
  o E.g. auxiliary contractions: “he is” vs. “he’s”
• Syntactic:
  o E.g. optional that-mentioning: “This is the friend I told you about” vs. “This is the friend that I told you about”
• Elided constituents:
  o E.g. optional argument and adjunct omission: “I already ate” vs. “I already ate dinner”
• Production planning:
  o E.g. one or more clauses: “Next move the triangle over there” vs. “Next take the triangle and move it over there”
• Other languages?
  o German: “Er hat es verstanden” vs. “Er hat’s verstanden” (he understood it)
  o Japanese: “行ってはダメ” (itte wa dame) vs. “行っちゃダメ” (iccha dame) (you can’t go)
The form with less linguistic signal should be less preferred whenever the reducible unit encodes a lot of information (in the context).

Uniform Information Density (UID)
Optimal:
• On average, each word adds the same amount of information to what we already know
• The rate of information transfer is close to the channel capacity
❌ Many constraints (grammar, learnability)

Efficient:
• Relatively uniform information distribution where possible
• No continuous under- or overutilization of the channel

UID definitions
• Information density: information per time (articulatory detail is left out)
• Choice: subconscious (presupposes that different ways to encode the intended message exist)

UID example: Fig. 1

UID goals
• UID as a computational account of efficient sentence production
• Corpus-based studies are feasible and desirable
  o Corpus of spontaneous speech
  o Naturally distributed data

Data
• 7369 automatically extracted complement clauses (CC) from the “Paraphrase Stanford-Edinburgh LINK Switchboard Corpus” (Penn Treebank)
• − 144 (2%) falsely extracted
• − 71 (1%) with rare matrix verbs (extreme probabilities)

Data focus
Actually: I(CC onset | context) = −log p(CC | context) − log p(onset | context, CC)
Here: I(CC | context) ≈ −log p(CC | matrix verb lemma)

Data: multilevel logit model
• Various factors (might) influence the outcome
• Several (control) parameters can be included in one model
• The contribution of each can be estimated
Why?
• Natural (uncontrolled) data

Controls: Dependency
• Distance of matrix verb from CC onset → favors “that”
  o My boss thinks [I’m absolutely crazy.]
  o I agree with you [that, that a person’s heart can be changed.]
• Length of CC onset (including subject) → favors “that”
• Length of CC remainder

Short sidetrack: length of CC remainder
• Language production is incremental (+ heuristic complexity estimates?)
Fig. 5. Observed proportions of “that” by CC length in words (limited to CCs up to 25 words); jittered points at the bottom and top of each cell represent individual cases; error bars indicate 95% confidence intervals.

Controls: Availability
• Lower speech rate → favors “that”
• Preceding pause → favors “that”
• Initial disfluency → favors “that”

Controls: Availability
• Type of CC subject
  o It vs. I
  o Other PRO vs. above
  o Other NP vs. above
• Frequency of CC subject head
• Subject identity
  o Identical subject in matrix clause and CC → favors omission

Controls: Availability
• Word form similarity
  o Demonstrative pronoun “that”
  o Demonstrative determiner “that” → favors omission
• Frequency of matrix verb
  o Higher frequency → favors omission

Controls: Ambiguity avoidance
• Possible garden-path sentence → favors “that” ❌
  o Even unlikely cases were included: “I guess (this doesn’t really have to do with…)”

Table 4. Distribution of disambiguation points for potentially ambiguous CC onsets.

| Disambiguation point (word) | 1 | 2 | 3 | 4 | 5–9 | >9 |
|---|---|---|---|---|---|---|
| Number of instances | 773 | 86 | 61 | 48 | 35 | 9 |

Controls: Matrix clause
• Position of matrix verb
  o Further away from sentence-initial position → favors “that”
• Matrix subject
  o You vs. I → favors “that”
  o Other PRO vs. above → favors “that”
  o Other NP vs. above → favors “that”

Controls: Others
• Random by-speaker intercept
• Persistence
  o Prime without “that” vs. no prime
  o Prime with “that” vs. above
• Gender
  o Male → favors omission

Information density
• Clear significance (p < .0001)
• High information density of the CC onset → more use of “that”
• Correlation with other predictors is negligible
• Contribution to the model’s likelihood is high
  o At least 15% of the model quality is due to information density
  o Single most important predictor

Information density
• Verbs’ subcategorization frequency as an estimate of information density
• High CC bias → low “that” bias (e.g., guess)
• Low CC bias → high “that” bias (e.g., worry)
Syntactic reduction is affected by information density.

Results: Information density
• Prediction: UID can account for any type of reduction

Phonetic and phonological reduction
• So far, patterns align with this prediction
• Availability accounts do not predict this
  o But they do predict lengthening of words

Information density: Optional case markers (or copula)
• Languages with flexible word order
• Japanese: ケーキが大好きだ (keeki ga daisuki da) vs. ケーキ大好き (keeki daisuki) (I love cake)

Information density: Reduced case markers
• Korean: 나는 독일 사람이야 (na neun togil saram iya) vs. 난 독일 사람이야 (nan togil saram iya) (I am German)

Information density: Optional object clitics and other argument-marking morphology
• Direct object clitics in Bulgarian
  o Cannot be predicted by availability accounts
  o Could be predicted by ambiguity avoidance

Information density: Contracted auxiliaries
• English “he’s” vs. “he is”
  o Predicted by neither availability nor ambiguity avoidance

Information density: Ellipsis
• Japanese: 行きたいけど行けない (ikitai kedo ikenai) vs. 行きたいけど (ikitai kedo): “I want to go, but (I can’t go)”
  o Not: 行きたいけど(遅くなりそう) (ikitai kedo (osoku nari sou)): “I want to go, but (I might be late)”

Information density: Non-subject-extracted relative clauses
• Indefinite noun phrases < definite noun phrases
• Light head nouns (e.g., the way) < heavy head nouns (e.g., the priest)
  o “I like the way (that) it vibrates”

Information density: Whiz-deletion (BE)
• Relativizer + auxiliary can be omitted
  o “The smell (that is) released by a pig or a chicken farm is indescribable”

Information density: Object drop
• Verbs with high selectional preference
  o “Tom ate.” vs. “Tom saw …”

Information density
• Many novel predictions across
  o Different levels of linguistic production
  o Languages
  o Types of alternations
• Per-word entropy of sentences should stay constant throughout discourse
  o Words with high information density (in the context and discourse) should come later in the sentence
  o A priori per-word entropy should increase

Grammaticalization
• Might interfere with information density? Potentially grammaticalized cases:
  o Matrix subject “I” or “you”
  o Matrix verb “guess”, “think”, “say”, “know”, or “mean”
  o Matrix verb in present tense
  o Matrix clause not embedded
• After excluding these cases, 3033 cases remain
• Still highly significant (p < .0001)
UID may be a reason for grammaticalization.

Noisy channel
• Basis of UID
• Audience design
  o The speaker considers the interlocutors’ knowledge and processing state to improve the chance of successfully achieving their goal
• Modulating information density at choice points = rational strategy for efficient production
• UID minimizes processing difficulty

Corpus-based research
Claim: “Lack of balance and heterogeneity of data make findings unreliable”
• Multilevel models
• Avoidance of redundant predictors
  o If redundant: residualization
• Inter-speaker variance is accounted for; data are ecologically valid

Corpus-based research
• Results extend more easily to English as a whole
• Many previous results replicate
• Provides evidence for relatively understudied effects (e.g., similarity avoidance)
• “Effect size” needs to be interpreted with caution
  o It reflects not only the strength of an effect but also how often the effect can apply
  o E.g., ambiguity avoidance: garden-path sentences are relatively rare

Questions and discussion
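Appendix: a sketch of the information measure from the Data focus slide, I(CC | context) ≈ −log p(CC | matrix verb lemma). This is a minimal illustration under stated assumptions, not Jaeger's actual estimation procedure. The verb counts are invented toy numbers, not Switchboard data. The logarithm is taken to base 2, so information comes out in bits. The names `TOY_VERB_COUNTS`, `cc_information`, and `onset_information` are hypothetical. The second function mirrors the "Actually" decomposition into a CC term and an onset term.

```python
import math

# Hypothetical toy counts, invented for illustration (NOT Switchboard data):
# matrix verb lemma -> (total occurrences, occurrences followed by a CC).
TOY_VERB_COUNTS = {
    "guess": (1000, 950),  # strong CC bias
    "worry": (1000, 50),   # weak CC bias
}


def cc_information(verb: str) -> float:
    """I(CC | verb) = -log2 p(CC | verb), with p estimated by relative frequency."""
    total, with_cc = TOY_VERB_COUNTS[verb]
    return -math.log2(with_cc / total)


def onset_information(p_cc: float, p_onset_given_cc: float) -> float:
    """I(CC onset | context) = -log2 p(CC | context) - log2 p(onset | context, CC)."""
    return -math.log2(p_cc) - math.log2(p_onset_given_cc)


if __name__ == "__main__":
    for verb in TOY_VERB_COUNTS:
        # UID: the higher I(CC | verb), the more "that" is expected.
        print(f"{verb}: I(CC | verb) = {cc_information(verb):.2f} bits")
```

With these toy counts, guess comes out at about 0.07 bits and worry at about 4.32 bits. That matches the direction on the slides: low-CC-bias verbs such as worry make the CC onset informative, and these are the verbs with high "that" rates.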
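Appendix: a sketch of the residualization step listed under Corpus-based research ("If redundant: residualization"). It assumes a simple ordinary-least-squares regression of one predictor on another. The redundant predictor is replaced by its residuals, which keep only the part the other predictor does not explain, so the two no longer covary. The helpers `residualize` and `covariance` and all numbers are hypothetical. A real analysis would do this in the statistics environment used to fit the multilevel model.

```python
from statistics import fmean


def residualize(y: list[float], x: list[float]) -> list[float]:
    """Residuals of y after an OLS regression y ~ a + b*x (hypothetical helper)."""
    mx, my = fmean(x), fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    return [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]


def covariance(a: list[float], b: list[float]) -> float:
    """Sample covariance, used to check that residuals no longer covary with x."""
    ma, mb = fmean(a), fmean(b)
    return sum((ai - ma) * (bi - mb) for ai, bi in zip(a, b)) / (len(a) - 1)


if __name__ == "__main__":
    # Invented toy values for two collinear predictors (e.g., two length measures).
    predictor_a = [1.0, 2.0, 3.0, 4.0, 5.0]
    predictor_b = [2.1, 3.9, 6.2, 7.8, 10.1]
    resid_b = residualize(predictor_b, predictor_a)
    print(f"cov(a, b)          = {covariance(predictor_a, predictor_b):.3f}")
    print(f"cov(a, resid(b))   = {covariance(predictor_a, resid_b):.3f}")
```

The residualized predictor can then enter the model alongside the original one. Its coefficient reflects only the variance that is not shared with the other predictor.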