Semantics and Context in Natural Language Processing - ICRI-CI


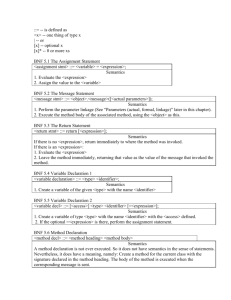

Semantics and Context in Natural Language Processing (NLP)
Ari Rappoport
The Hebrew University

Form vs. Meaning
• Solid NLP progress with statistical learning
• OK for classification, search, prediction
  – Prediction: language models in speech to text
• But all this is just form (lexicon, syntax)
• Language is for expressing meanings (semantics)
  – (i) Lexical, (ii) sentence, (iii) interaction

(i) Lexical Semantics
• Relatively context independent
• Sensory feature conjunction: “house”, “hungry”, “guitar”
  – Non-linguistic “semantic” machine learning: face identification in photographs
• Categories: is-a: “a smartphone is a (type of) product”, “iPhone is a (type of) smartphone”
• Configurations: part-of: “engine:car”, places

Generic Relationships
• Medicine : Illness
  – Hunger : Thirst
  – Love : Treason
  – Law : Anarchy
  – Stimulant : Sensitivity
• “You use X in a way W, to do V to some Z, at a time T and a place P, for the purpose S, because of B, causing C, …”

Flexible Patterns
• X w v Y : “countries such as France”
  – Davidov & Rappoport (ACL, EMNLP, COLING, etc)
• Content words, High frequency words
• Meta-patterns: CHHC, CHCHC, HHC, etc.
• Fully unsupervised, general
• Efficient hardware filtering, clustering
• Categories, SAT exams, geographic maps, numerical questions, etc.

Ambiguity
• Relative context independence does not solve ambiguity
• Apple: fruit, company
• Madrid: Spain, New Mexico
• Which one is more relevant?
• Context must be taken into account
  – Language use is always contextual

(ii) Sentence Semantics
• The basic meaning expressed by (all) languages: argument structure “scenes”
• Dynamic or static relations between participants; elaborators; connectors; embeddings:
  – “John kicked the red ball”
  – “Paul and Anne walked slowly in the park”
  – “She remembered John’s singing”

Several Scenes
• Linkers: cause, purpose, time, conditionality
  – “He went there to buy fruits”, “Before they arrived, the party was very quiet”, “If X then Y”
• Ground: referring to the speech situation
  – “In my opinion, machine learning is the greatest development in computer science since FFT” [and neither were done by computer scientists]
• “Career”, “Peace”, “Democracy”

Sentence Semantics in NLP
• Mostly manual: FrameNet, PropBank
• Unsupervised algorithms
  – Arg. identification, Abend & Rappoport (ACL 2010)
• Question Answering
  – Bag of words (lexical semantics)
• Machine Translation
  – Rewriting of forms (alignment, candidates, target language model)

Extreme Semantic Application
• Tweet Sentiment Analysis
  – Schwartz (Davidov, Tsur) & Rappoport 2010, 2013
• Coarse semantics: 2 categories (40)
• Short texts, no words lists; fixed patterns

(iii) Interaction Semantics
• ‘Understanding’ means having enough information to DO something
  – The brain’s main cycle
• Example: human-system interaction
• Full context dependence
  – Relevance to your current situation

Interaction Examples
• Searching “Argo”, did you mean
  – The plot? Reviews? Where and/or when to watch?
• “Chinese restaurant”
  – The best in the country? In town? The nearest to you? The best deal?
• There are hints:
  – Location (regular, irregular); time (lunch?)

Interaction Directions
• Extending flexible patterns:
  – Include Text-Action H and C items (words, actions)
• Action:
  – represented as User Interface operations
• Shortcut: bag of words (lexical semantics) + “current context”. Ignore sentence semantics
• Noise, failure (Siri, maps,…)

Summary
• Lexical, sentence, and interaction semantics
• Applications are possible using all levels
• As relevance to life grows, so do requirements from algorithms
• Both sentence and interaction semantics necessary for future smart applications
• Current focus: sentence semantics
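
Illustration (not from the original slides): the Flexible Patterns slide names content words (C), high frequency words (H), and meta-patterns such as CHHC, with “countries such as France” as an instance. The sketch below shows one way that idea could look in code. It is a toy under stated assumptions, not the authors' implementation: the function names, the whitespace tokenizer, and the raw-count threshold `hfw_min_count` are all hypothetical choices made for this example.

```python
from collections import Counter

# Toy corpus (made up for illustration only).
TOY_CORPUS = [
    "countries such as France",
    "fruits such as apples",
    "cities such as Madrid",
]

def tag_words(tokens, counts, hfw_min_count):
    """Label each token 'H' (high frequency word) or 'C' (content word).

    Assumption: a word is 'H' if its corpus count reaches hfw_min_count.
    """
    return ["H" if counts[t] >= hfw_min_count else "C" for t in tokens]

def find_meta_pattern(sentences, meta_pattern="CHHC", hfw_min_count=3):
    """Return (first word, middle words, last word) for every window of
    tokens whose H/C tag sequence equals meta_pattern."""
    tokenized = [s.lower().split() for s in sentences]
    counts = Counter(t for toks in tokenized for t in toks)
    n = len(meta_pattern)
    matches = []
    for toks in tokenized:
        tags = tag_words(toks, counts, hfw_min_count)
        # Slide a window of the meta-pattern's length over the sentence.
        for i in range(len(toks) - n + 1):
            if "".join(tags[i:i + n]) == meta_pattern:
                window = toks[i:i + n]
                matches.append((window[0], tuple(window[1:-1]), window[-1]))
    return matches
```

On `TOY_CORPUS`, “such” and “as” each occur three times and are tagged H, while every other word occurs once and is tagged C, so each sentence matches CHHC and yields a pair like `("countries", ("such", "as"), "france")`. Grouping matches by their middle words would then give candidate category members for a pattern, in the spirit of the “Categories” application listed on the slide.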