Sybex - The Official (ISC)2 Guide to the CISSP CBK Reference, 5th Edition

advertisement

CISSP

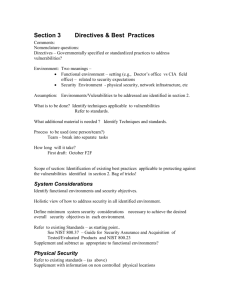

The Official (ISC)2®

CISSP® CBK® Reference

Fifth Edition

CISSP: Certified Information

Systems Security Professional

The Official (ISC)2®

CISSP® CBK®

Reference

Fifth Edition

John Warsinkse

With: Mark Graff, Kevin Henry, Christopher Hoover, Ben Malisow,

Sean Murphy, C. Paul Oakes, George Pajari, Jeff T. Parker,

David Seidl, Mike Vasquez

Development Editor: Kelly Talbot

Senior Production Editor: Christine O’Connor

Copy Editor: Kim Wimpsett

Editorial Manager: Pete Gaughan

Production Manager: Kathleen Wisor

Associate Publisher: Jim Minatel

Proofreader: Louise Watson, Word One New York

Indexer: Johnna VanHoose Dinse

Project Coordinator, Cover: Brent Savage

Cover Designer: Wiley

Copyright © 2019 by (ISC)2

Published simultaneously in Canada

ISBN: 978-1-119-42334-8

ISBN: 978-1-119-42332-4 (ebk.)

ISBN: 978-1-119-42331-7 (ebk.)

Manufactured in the United States of America

No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means,

electronic, mechanical, photocopying, recording, scanning or otherwise, except as permitted under Sections 107 or 108

of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization

through payment of the appropriate per-copy fee to the Copyright Clearance Center, 222 Rosewood Drive, Danvers,

MA 01923, (978) 750-8400, fax (978) 646-8600. Requests to the Publisher for permission should be addressed to the

Permissions Department, John Wiley & Sons, Inc., 111 River Street, Hoboken, NJ 07030, (201) 748-6011, fax (201)

748-6008, or online at http://www.wiley.com/go/permissions.

Limit of Liability/Disclaimer of Warranty: The publisher and the author make no representations or warranties with

respect to the accuracy or completeness of the contents of this work and specifically disclaim all warranties, including

without limitation warranties of fitness for a particular purpose. No warranty may be created or extended by sales or

promotional materials. The advice and strategies contained herein may not be suitable for every situation. This work

is sold with the understanding that the publisher is not engaged in rendering legal, accounting, or other professional

services. If professional assistance is required, the services of a competent professional person should be sought. Neither

the publisher nor the author shall be liable for damages arising herefrom. The fact that an organization or Web site is

referred to in this work as a citation and/or a potential source of further information does not mean that the author or

the publisher endorses the information the organization or Web site may provide or recommendations it may make.

Further, readers should be aware that Internet Web sites listed in this work may have changed or disappeared between

when this work was written and when it is read.

For general information on our other products and services or to obtain technical support, please contact our Customer

Care Department within the U.S. at (877) 762-2974, outside the U.S. at (317) 572-3993 or fax (317) 572-4002.

Wiley publishes in a variety of print and electronic formats and by print-on-demand. Some material included with

standard print versions of this book may not be included in e-books or in print-on-demand. If this book refers to media

such as a CD or DVD that is not included in the version you purchased, you may download this material at

http://booksupport.wiley.com. For more information about Wiley products, visit www.wiley.com.

Library of Congress Control Number: 2019936840

TRADEMARKS: Wiley, the Wiley logo, and the Sybex logo are trademarks or registered trademarks of John Wiley &

Sons, Inc. and/or its affiliates, in the United States and other countries, and may not be used without written permission. (ISC)2, CISSP, and CBK are registered trademarks of (ISC)2, Inc. All other trademarks are the property of their

respective owners. John Wiley & Sons, Inc. is not associated with any product or vendor mentioned in this book.

10 9 8 7 6 5 4 3 2 1

Lead Author and Lead Technical

Reviewer

Over the course of his 30-plus years as an information technology professional, John Warsinske

has been exposed to a breadth of technologies and governance structures. He has been, at

various times, a network analyst, IT manager, project manager, security analyst, and chief

information officer. He has worked in local, state, and federal government; has worked in

public, private, and nonprofit organizations; and has been variously a contractor, direct

employee, and volunteer. He has served in the U.S. military in assignments at the tactical,

operational, and strategic levels across the entire spectrum from peace to war. In these diverse

environments, he has experienced both the uniqueness and the similarities in the activities

necessary to secure their respective information assets.

Mr. Warsinske has been an instructor for (ISC)2 for more than five years; prior to that, he

was an adjunct faculty instructor at the College of Southern Maryland. His (ISC)2 certifications

include the Certified Information Systems Security Professional (CISSP), Certified CloudSecurity Professional (CCSP), and HealthCare Information Security and Privacy Practitioner

(HCISPP). He maintains several other industry credentials as well.

When he is not traveling, Mr. Warsinske currently resides in Ormond Beach, Florida, with

his wife and two extremely spoiled Carolina dogs.

v

Contributing Authors

Mark Graff (CISSP), former chief information security officer for both NASDAQ and Lawrence Livermore National Laboratory, is a seasoned cybersecurity practitioner and thought

leader. He has lectured on risk analysis, cybersecurity, and privacy issues before the American

Academy for the Advancement of Science, the Federal Communications Commission, the

Pentagon, the National Nuclear Security Administration, and other U.S. national security

facilities. Graff has twice testified before Congress on cybersecurity, and in 2018–2019 served

as an expert witness on software security to the Federal Trade Commission. His books—notably

Secure Coding: Principles and Practices—have been used at dozens of universities worldwide in

teaching how to design and build secure software-based systems. Today, as head of the consulting firm Tellagraff LLC (www.markgraff.com), Graff provides strategic advice to large companies, small businesses, and government agencies. Recent work has included assisting multiple

state governments in the area of election security.

Kevin Henry (CAP, CCSP, CISSP, CISSP-ISSAP, CISSP-ISSEP, CISSP-ISSMP, CSSLP,

and SSCP) is a passionate and effective educator and consultant in information security.

Kevin has taught CISSP classes around the world and has contributed to the development of

(ISC)2 materials for nearly 20 years. He is a frequent speaker at security conferences and the

author of several books on security management. Kevin’s years of work in telecommunications,

government, and private industry have led to his strength in being able to combine real-world

experience with the concepts and application of information security topics in an understandable and effective manner.

Chris Hoover, CISSP, CISA, is a cybersecurity and risk management professional with 20

years in the field. He spent most of his career protecting the U.S. government’s most sensitive

data in the Pentagon, the Baghdad Embassy, NGA Headquarters, Los Alamos Labs, and many

other locations. Mr. Hoover also developed security products for RSA that are deployed across

the U.S. federal government, many state governments, and internationally. He is currently

consulting for the DoD and runs a risk management start-up called Riskuary. He has a master’s

degree in information assurance.

Ben Malisow, CISSP, CISM, CCSP, Security+, SSCP, has been involved in INFOSEC and

education for more than 20 years. At Carnegie Mellon University, he crafted and delivered the

CISSP prep course for CMU’s CERT/SEU. Malisow was the ISSM for the FBI’s most highly

classified counterterror intelligence-sharing network, served as an Air Force officer, and taught

vii

grades 6–12 at a reform school in the Las Vegas public school district (probably his most

dangerous employment to date). His latest work has included CCSP Practice Tests and

CCSP (ISC)2 Certified Cloud Security Professional Official Study Guide, also from Sybex/

Wiley, and How to Pass Your INFOSEC Certification Test: A Guide to Passing the CISSP,

CISA, CISM, Network+, Security+, and CCSP, available from Amazon Direct. In addition to other consulting and teaching, Ben is a certified instructor for (ISC)2, delivering

CISSP, CCSP, and SSCP courses. You can reach him at www.benmalisow.com or his

INFOSEC blog, securityzed.com. Ben would also like to extend his personal gratitude

to Todd R. Slack, MS, JD, CIPP/US, CIPP/E, CIPM, FIP, CISSP, for his invaluable contributions to this book.

Sean Murphy, CISSP, HCISSP, is the vice president and chief information security

officer for Premera Blue Cross (Seattle). He is responsible for providing and optimizing

an enterprise-wide security program and architecture that minimizes risk, enables business imperatives, and further strengthens the health plan company’s security posture. He’s

a healthcare information security expert with more than 20 years of experience in highly

regulated, security-focused organizations. Sean retired from the U.S. Air Force (Medical

Service Corps) after achieving the rank of lieutenant colonel. He has served as CIO and

CISO in the military service and private sector at all levels of healthcare organizations.

Sean has a master’s degree in business administration (advanced IT concentration) from

the University of South Florida, a master’s degree in health services administration from

Central Michigan University, and a bachelor’s degree in human resource management

from the University of Maryland. He is a board chair of the Association for Executives

in Healthcare Information Security (AEHIS). Sean is a past chairman of the HIMSS

Privacy and Security Committee. He served on the (ISC)2 committee to develop the

HCISPP credential. He is also a noted speaker at the national level and the author of

numerous industry whitepapers, articles, and educational materials, including his book

Healthcare Information Security and Privacy.

C. Paul Oakes, CISSP, CISSP-ISSAP, CCSP, CCSK, CSM, and CSPO, is an author,

speaker, educator, technologist, and thought leader in cybersecurity, software development, and process improvement. Paul has worn many hats over his 20-plus years of

experience. In his career he has been a security architect, consultant, software engineer,

mentor, educator, and executive. Paul has worked with companies in various industries

such as the financial industry, banking, publishing, utilities, government, e-commerce,

education, training, research, and technology start-ups. His work has advanced the cause

of software and information security on many fronts, ranging from writing security policy

to implementing secure code and showing others how to do the same. Paul’s passion is to

help people develop the skills they need to most effectively defend the line in cyberspace

viii

Contributing Authors

and advance the standard of cybersecurity practice. To this end, Paul continuously collaborates with experts across many disciplines, ranging from cybersecurity to accelerated

learning to mind-body medicine, to create and share the most effective strategies to

rapidly learn cybersecurity and information technology subject matter. Most of all, Paul

enjoys his life with his wife and young son, both of whom are the inspirations for his

passion.

George E. Pajari, CISSP-ISSAP, CISM, CIPP/E, is a fractional CISO, providing

cybersecurity leadership on a consulting basis to a number of cloud service providers.

Previously he was the chief information security officer (CISO) at Hootsuite, the most

widely used social media management platform, trusted by more than 16 million

people and employees at 80 percent of the Fortune 1000. He has presented at conferences

including CanSecWest, ISACA CACS, and BSides Vancouver. As a volunteer, he helps

with the running of BSides Vancouver, the (ISC)² Vancouver chapter, and the University

of British Columbia’s Cybersecurity Summit. He is a recipient of the ISACA CISM

Worldwide Excellence Award.

Jeff Parker, CISSP, CySA+, CASP, is a certified technical trainer and security consultant

specializing in governance, risk management, and compliance (GRC). Jeff began his

information security career as a software engineer with an HP consulting group out of

Boston. Enterprise clients for which Jeff has consulted on site include hospitals,

universities, the U.S. Senate, and a half-dozen UN agencies. Jeff assessed these clients’

security posture and provided gap analysis and remediation. In 2006 Jeff relocated to

Prague, Czech Republic, for a few years, where he designed a new risk management

strategy for a multinational logistics firm. Presently, Jeff resides in Halifax, Canada, while

consulting primarily for a GRC firm in Virginia.

David Seidl, CISSP, GPEN, GCIH, CySA+, Pentest+, is the vice president for information technology and CIO at Miami University of Ohio. During his IT career, he has

served in a variety of technical and information security roles, including serving as the

senior director for Campus Technology Services at the University of Notre Dame and

leading Notre Dame’s information security team as director of information security.

David has taught college courses on information security and writes books on information

security and cyberwarfare, including CompTIA CySA+ Study Guide: Exam CS0-001,

CompTIA PenTest+ Study Guide: Exam PT0-001, CISSP Official (ISC)2 Practice

Tests, and CompTIA CySA+ Practice Tests: Exam CS0-001, all from Wiley, and Cyberwarfare: Information Operations in a Connected World from Jones and Bartlett. David holds

a bachelor’s degree in communication technology and a master’s degree in information

security from Eastern Michigan University.

Contributing Authors

ix

Michael Neal Vasquez has more than 25 years of IT experience and has held several

industry certifications, including CISSP, MCSE: Security, MCSE+I, MCDBA, and

CCNA. Mike is a senior security engineer on the red team for a Fortune 500 financial

services firm, where he spends his days (and nights) looking for security holes. After

obtaining his BA from Princeton University, he forged a security-focused IT career, both

working in the trenches and training other IT professionals. Mike is a highly sought-after

instructor because his classes blend real-world experience and practical knowledge with

the technical information necessary to comprehend difficult material, and his students

praise his ability to make any course material entertaining and informative. Mike has

taught CISSP, security, and Microsoft to thousands of students across the globe through

local colleges and online live classes. He has performed penetration testing engagements

for healthcare, financial services, retail, utilities, and government entities. He also runs

his own consulting and training company and can be reached on LinkedIn at

https://www.linkedin.com/in/mnvasquez.

x

Contributing Authors

Technical Reviewers

Bill Burke, CISSP, CCSP, CRISC, CISM, CEH, is a security professional with more than

35 years serving the information technology and services community. He specializes in security

architecture, governance, and compliance, primarily in the cloud space. He previously served

on the board of directors of the Silicon Valley (ISC)2 chapter, in addition to the board

of directors of the Cloud Services Alliance – Silicon Valley. Bill can be reached via email at

billburke@cloudcybersec.com.

Charles Gaughf, CISSP, SSCP, CCSP, is both a member and an employee of (ISC)², the

global nonprofit leader in educating and certifying information security professionals. For more

than 15 years, he has worked in IT and security in different capacities for nonprofit, higher education, and telecommunications organizations to develop security education for the industry

at large. In leading the security team for the last five years as the senior manager of security at

(ISC)², he was responsible for the global security operations, security posture, and overall security health of (ISC)². Most recently he transitioned to the (ISC)² education team to develop

immersive and enriching CPE opportunities and security training and education for the industry

at large. He holds degrees in management of information systems and communications.

Dr. Meng-Chow Kang, CISSP, is a practicing information security professional with more

than 30 years of field experience in various technical information security and risk management roles for organizations that include the Singapore government, major global financial

institutions, and security and technology providers. His research and part of his experience

in the field have been published in his book Responsive Security: Be Ready to Be Secure from

CRC Press. Meng-Chow has been a CISSP since 1998 and was a member of the (ISC)2 board

of directors from 2015 through 2017. He is also a recipient of the (ISC)2 James Wade Service

Award.

Aaron Kraus, CISSP, CCSP, Security+, began his career as a security auditor for U.S. federal

government clients working with the NIST RMF and Cybersecurity Framework, and then

moved to the healthcare industry as an auditor working with the HIPAA and HITRUST frameworks. Next, he entered the financial services industry, where he designed a control and audit

program for vendor risk management, incorporating financial compliance requirements and

industry-standard frameworks including COBIT and ISO 27002. Since 2016 Aaron has been

xi

working with startups based in San Francisco, first on a GRC SaaS platform and more

recently in cyber-risk insurance, where he focuses on assisting small- to medium-sized

businesses to identify their risks, mitigate them appropriately, and transfer risk via insurance. In addition to his technical certifications, he is a Learning Tree certified instructor

who teaches cybersecurity exam prep and risk management.

Professor Jill Slay, CISSP, CCFP, is the optus chair of cybersecurity at La Trobe University, leads the Optus La Trobe Cyber Security Research Hub, and is the director of

cyber-resilience initiatives for the Australian Computer Society. Jill is a director of the

Victorian Oceania Research Centre and previously served two terms as a director of the

International Information Systems Security Certification Consortium. She has established an international research reputation in cybersecurity (particularly digital forensics)

and has worked in collaboration with many industry partners. She was made a member

of the Order of Australia (AM) for service to the information technology industry through

contributions in the areas of forensic computer science, security, protection of infrastructure, and cyberterrorism. She is a fellow of the Australian Computer Society and a fellow

of the International Information Systems Security Certification Consortium, both for her

service to the information security industry. She also is a MACS CP.

xii

Technical Reviewers

Contents at a Glance

Foreword

xxv

Introductionxxvii

Domain 1:

Security and Risk Management

Domain 2:

Asset Security

131

Domain 3:

Security Architecture and Engineering

213

Domain 4:

Communication and Network Security

363

Domain 5:

Identity and Access Management

483

Domain 6:

Security Assessment and Testing

539

Domain 7:

Security Operations

597

Domain 8:

Software Development Security

695

Index

1

875

xiii

Contents

Foreword

xxv

Introductionxxvii

Domain 1: Security and Risk Management

1

Understand and Apply Concepts of Confidentiality, Integrity, and Availability

Information Security

Evaluate and Apply Security Governance Principles

Alignment of Security Functions to Business Strategy, Goals, Mission,

and Objectives

Vision, Mission, and Strategy

Governance

Due Care

Determine Compliance Requirements

Legal Compliance

Jurisdiction

Legal Tradition

Legal Compliance Expectations

Understand Legal and Regulatory Issues That Pertain to Information Security in a

Global Context

Cyber Crimes and Data Breaches

Privacy

Understand, Adhere to, and Promote Professional Ethics

Ethical Decision-Making

Established Standards of Ethical Conduct

(ISC)² Ethical Practices

Develop, Document, and Implement Security Policy, Standards, Procedures,

and Guidelines

Organizational Documents

Policy Development

Policy Review Process

2

3

6

6

6

7

10

11

12

12

12

13

13

14

36

49

49

51

56

57

58

61

61

xv

Identify, Analyze, and Prioritize Business Continuity Requirements

62

Develop and Document Scope and Plan

62

Risk Assessment

70

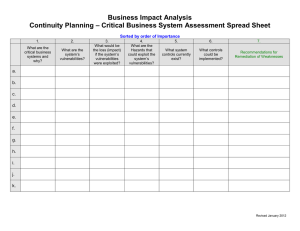

Business Impact Analysis

71

Develop the Business Continuity Plan

73

Contribute to and Enforce Personnel Security Policies and Procedures

80

Key Control Principles

80

Candidate Screening and Hiring

82

Onboarding and Termination Processes

91

Vendor, Consultant, and Contractor Agreements and Controls

96

Privacy in the Workplace

97

Understand and Apply Risk Management Concepts

99

Risk99

Risk Management Frameworks

99

Risk Assessment Methodologies

108

Understand and Apply Threat Modeling Concepts and Methodologies

111

Threat Modeling Concepts

111

Threat Modeling Methodologies

112

Apply Risk-Based Management Concepts to the Supply Chain

116

Supply Chain Risks

116

Supply Chain Risk Management

119

Establish and Maintain a Security Awareness, Education, and Training Program

121

Security Awareness Overview

122

Developing an Awareness Program

123

Training

127

Summary

128

xvi

Domain 2: Asset Security

131

Asset Security Concepts

Data Policy

Data Governance

Data Quality

Data Documentation

Data Organization

Identify and Classify Information and Assets

Asset Classification

Determine and Maintain Information and Asset Ownership

Asset Management Lifecycle

Software Asset Management

131

132

132

133

134

136

139

141

145

146

148

Contents

Protect Privacy

Cross-Border Privacy and Data Flow Protection

Data Owners

Data Controllers

Data Processors

Data Stewards

Data Custodians

Data Remanence

Data Sovereignty

Data Localization or Residency

Government and Law Enforcement Access to Data

Collection Limitation

Understanding Data States

Data Issues with Emerging Technologies

Ensure Appropriate Asset Retention

Retention of Records

Determining Appropriate Records Retention

Retention of Records in Data Lifecycle

Records Retention Best Practices

Determine Data Security Controls

Technical, Administrative, and Physical Controls

Establishing the Baseline Security

Scoping and Tailoring

Standards Selection

Data Protection Methods

Establish Information and Asset Handling Requirements

Marking and Labeling

Handling

Declassifying Data

Storage

Summary

152

153

161

162

163

164

164

164

168

169

171

172

173

173

175

178

178

179

180

181

183

185

186

189

198

208

208

209

210

211

212

Domain 3: Security Architecture and Engineering

213

Implement and Manage Engineering Processes Using Secure Design Principles

Saltzer and Schroeder’s Principles

ISO/IEC 19249

Defense in Depth

Using Security Principles

215

216

221

229

230

Contents

xvii

Understand the Fundamental Concepts of Security Models

Bell-LaPadula Model

The Biba Integrity Model

The Clark-Wilson Model

The Brewer-Nash Model

Select Controls Based upon Systems Security Requirements

Understand Security Capabilities of Information Systems

Memory Protection

Virtualization

Secure Cryptoprocessor

Assess and Mitigate the Vulnerabilities of Security Architectures, Designs, and

Solution Elements

Client-Based Systems

Server-Based Systems

Database Systems

Cryptographic Systems

Industrial Control Systems

Cloud-Based Systems

Distributed Systems

Internet of Things

Assess and Mitigate Vulnerabilities in Web-Based Systems

Injection Vulnerabilities

Broken Authentication

Sensitive Data Exposure

XML External Entities

Broken Access Control

Security Misconfiguration

Cross-Site Scripting

Using Components with Known Vulnerabilities

Insufficient Logging and Monitoring

Cross-Site Request Forgery

Assess and Mitigate Vulnerabilities in Mobile Systems

Passwords

Multifactor Authentication

Session Lifetime

Wireless Vulnerabilities

Mobile Malware

Unpatched Operating System or Browser

xviii

Contents

230

232

234

235

235

237

241

241

244

247

253

254

255

257

260

267

271

274

275

278

279

280

283

284

284

285

285

286

286

287

287

288

288

289

290

290

290

Insecure Devices

Mobile Device Management

Assess and Mitigate Vulnerabilities in Embedded Devices

Apply Cryptography

Cryptographic Lifecycle

Cryptographic Methods

Public Key Infrastructure

Key Management Practices

Digital Signatures

Non-Repudiation

Integrity

Understand Methods of Cryptanalytic Attacks

Digital Rights Management

Apply Security Principles to Site and Facility Design

Implement Site and Facility Security Controls

Physical Access Controls

Wiring Closets/Intermediate Distribution Facilities

Server Rooms/Data Centers

Media Storage Facilities

Evidence Storage

Restricted and Work Area Security

Utilities and Heating, Ventilation, and Air Conditioning

Environmental Issues

Fire Prevention, Detection, and Suppression

Summary

291

291

292

295

295

298

311

315

318

320

321

325

339

342

343

343

345

346

348

349

349

351

355

358

362

Domain 4: Communication and Network Security

363

Implement Secure Design Principles in Network Architectures

Open Systems Interconnection and Transmission Control

Protocol/Internet Protocol Models

Internet Protocol Networking

Implications of Multilayer Protocols

Converged Protocols

Software-Defined Networks

Wireless Networks

Internet, Intranets, and Extranets

Demilitarized Zones

Virtual LANs

364

365

382

392

394

395

396

409

410

410

Contents

xix

xx

Secure Network Components

Firewalls

Network Address Translation

Intrusion Detection System

Security Information and Event Management

Network Security from Hardware Devices

Transmission Media

Endpoint Security

Implementing Defense in Depth

Content Distribution Networks

Implement Secure Communication Channels According to Design

Secure Voice Communications

Multimedia Collaboration

Remote Access

Data Communications

Virtualized Networks

Summary

411

412

418

421

422

423

429

442

447

448

449

449

452

458

466

470

481

Domain 5: Identity and Access Management

483

Control Physical and Logical Access to Assets

Information

Systems

Devices

Facilities

Manage Identification and Authentication of People, Devices, and Services

Identity Management Implementation

Single Factor/Multifactor Authentication

Accountability

Session Management

Registration and Proofing of Identity

Federated Identity Management

Credential Management Systems

Integrate Identity as a Third-Party Service

On-Premise

Cloud

Federated

Implement and Manage Authorization Mechanisms

Role-Based Access Control

Rule-Based Access Control

484

485

486

487

488

492

494

496

511

511

513

520

524

525

526

527

527

528

528

529

Contents

Mandatory Access Control

Discretionary Access Control

Attribute-Based Access Control

Manage the Identity and Access Provisioning Lifecycle

User Access Review

System Account Access Review

Provisioning and Deprovisioning

Auditing and Enforcement

Summary

530

531

531

533

534

535

535

536

537

Domain 6: Security Assessment and Testing

539

Design and Validate Assessment, Test, and Audit Strategies

Assessment Standards

Conduct Security Control Testing

Vulnerability Assessment

Penetration Testing

Log Reviews

Synthetic Transactions

Code Review and Testing

Misuse Case Testing

Test Coverage Analysis

Interface Testing

Collect Security Process Data

Account Management

Management Review and Approval

Key Performance and Risk Indicators

Backup Verification Data

Training and Awareness

Disaster Recovery and Business Continuity

Analyze Test Output and Generate Report

Conduct or Facilitate Security Audits

Internal Audits

External Audits

Third-Party Audits

Integrating Internal and External Audits

Auditing Principles

Audit Programs

Summary

540

543

545

546

554

564

565

567

571

573

574

575

577

579

580

583

584

585

587

590

591

591

592

593

593

594

596

Contents

xxi

xxii

Domain 7: Security Operations

597

Understand and Support Investigations

Evidence Collection and Handling

Reporting and Documentation

Investigative Techniques

Digital Forensics Tools, Techniques, and Procedures

Understand Requirements for Investigation Types

Administrative

Criminal

Civil

Regulatory

Industry Standards

Conduct Logging and Monitoring Activities

Define Auditable Events

Time

Protect Logs

Intrusion Detection and Prevention

Security Information and Event Management

Continuous Monitoring

Ingress Monitoring

Egress Monitoring

Securely Provision Resources

Asset Inventory

Asset Management

Configuration Management

Understand and Apply Foundational Security Operations Concepts

Need to Know/Least Privilege

Separation of Duties and Responsibilities

Privileged Account Management

Job Rotation

Information Lifecycle

Service Level Agreements

Apply Resource Protection Techniques to Media

Marking

Protecting

Transport

Sanitization and Disposal

598

599

601

602

604

610

611

613

614

616

616

617

618

619

620

621

623

625

629

631

632

632

634

635

637

637

638

640

642

643

644

647

647

647

648

649

Contents

Conduct Incident Management

An Incident Management Program

Detection

Response

Mitigation

Reporting

Recovery

Remediation

Lessons Learned

Third-Party Considerations

Operate and Maintain Detective and Preventative Measures

White-listing/Black-listing

Third-Party Security Services

Honeypots/Honeynets

Anti-Malware

Implement and Support Patch and Vulnerability Management

Understand and Participate in Change Management Processes

Implement Recovery Strategies

Backup Storage Strategies

Recovery Site Strategies

Multiple Processing Sites

System Resilience, High Availability, Quality of Service, and Fault Tolerance

Implement Disaster Recovery Processes

Response

Personnel

Communications

Assessment

Restoration

Training and Awareness

Test Disaster Recovery Plans

Read-Through/Tabletop

Walk-Through

Simulation

Parallel

Full Interruption

Participate in Business Continuity Planning and Exercises

Implement and Manage Physical Security

Physical Access Control

The Data Center

650

651

653

656

657

658

661

661

661

662

663

665

665

667

667

670

672

673

673

676

678

679

679

680

680

682

682

683

684

685

686

687

687

687

688

688

689

689

692

Contents

xxiii

xxiv

Address Personnel Safety and Security Concerns

Travel

Duress

Summary

693

693

693

694

Domain 8: Software Development Security

695

Understand and Integrate Security in the Software Development Lifecycle

Development Methodologies

Maturity Models

Operations and Maintenance

Change Management

Integrated Product Team

Identify and Apply Security Controls in Development Environments

Security of the Software Environment

Configuration Management as an Aspect of Secure Coding

Security of Code Repositories

Assess the Effectiveness of Software Security

Logging and Auditing of Changes

Risk Analysis and Mitigation

Assess the Security Impact of Acquired Software

Acquired Software Types

Software Acquisition Process

Relevant Standards

Software Assurance

Certification and Accreditation

Define and Apply Secure Coding Standards and Guidelines

Security Weaknesses and Vulnerabilities at the

Source-Code Level

Security of Application Programming Interfaces

Secure Coding Practices

Summary

696

696

753

768

770

773

776

777

796

798

802

802

817

835

835

842

845

848

852

853

Index

875

Contents

854

859

868

874

Foreword

Being recognized as a CISSP is an important step in investing

in your information security career. Whether you are picking up this book

to supplement your preparation to sit for the exam or you are an existing

CISSP using this as a desk reference, you’ve acknowledged that this certification makes you recognized as one of the most respected and sought-after

cybersecurity leaders in the world. After all, that’s what the CISSP symbolizes. You and your peers are among the ranks of the most knowledgeable practitioners in our community. The designation of CISSP instantly

communicates to everyone within our industry that you are intellectually curious and traveling

along a path of lifelong learning and improvement. Importantly, as a member of (ISC)² you

have officially committed to ethical conduct commensurate to your position of trust as a cybersecurity professional.

The recognized leader in the field of information security education and certification,

(ISC)2 promotes the development of information security professionals throughout the world.

As a CISSP with all the benefits of (ISC)2 membership, you are part of a global network of more

than 140,000 certified professionals who are working to inspire a safe and secure cyber world.

Being a CISSP, though, is more than a credential; it is what you demonstrate daily in your

information security role. The value of your knowledge is the proven ability to effectively

design, implement, and manage a best-in-class cybersecurity program within your organization.

To that end, it is my great pleasure to present the Official (ISC)2 Guide to the CISSP (Certified

Information Systems Security Professional) CBK. Drawing from a comprehensive, up-to-date

global body of knowledge, the CISSP CBK provides you with valuable insights on how to

implement every aspect of cybersecurity in your organization.

If you are an experienced CISSP, you will find this edition of the CISSP CBK to be a

timely book to frequently reference for reminders on best practices. If you are still gaining the

experience and knowledge you need to join the ranks of CISSPs, the CISSP CBK is a deep dive

that can be used to supplement your studies.

As the largest nonprofit membership body of certified information security professionals

worldwide, (ISC)² recognizes the need to identify and validate not only information security

xxv

competency but also the ability to connect knowledge of several domains when building

high-functioning cybersecurity teams that demonstrate cyber resiliency. The CISSP credential represents advanced knowledge and competency in security design, implementation, architecture, operations, controls, and more.

If you are leading or ready to lead your security team, reviewing the Official (ISC)2

Guide to the CISSP CBK will be a great way to refresh your knowledge of the many factors that go into securely implementing and managing cybersecurity systems that match

your organization’s IT strategy and governance requirements. The goal for CISSP credential holders is to achieve the highest standard for cybersecurity expertise—managing

multiplatform IT infrastructures while keeping sensitive data secure. This becomes especially crucial in the era of digital transformation, where cybersecurity permeates virtually

every value stream imaginable. Organizations that can demonstrate world-class cybersecurity capabilities and trusted transaction methods can enable customer loyalty and fuel

success.

The opportunity has never been greater for dedicated men and women to carve out a

meaningful career and make a difference in their organizations. The CISSP CBK will be

your constant companion in protecting and securing the critical data assets of your organization that will serve you for years to come.

Regards,

David P. Shearer, CISSP

CEO, (ISC)2

xxvi

Foreword

Introduction

The Certified Information Systems Security Professional (CISSP) signifies that an individual has a cross-disciplinary expertise across the broad spectrum of

information security and that he or she understands the context of it within a business

environment. There are two main requirements that must be met in order to achieve

the status of CISSP. One must take and pass the certification exam, while also proving a

minimum of five years of direct full-time security work experience in two or more of the

domains of the (ISC)² CISSP CBK. The field of information security is wide, and there

are many potential paths along one’s journey through this constantly and rapidly changing profession.

A firm comprehension of the domains within the CISSP CBK and an understanding of how they connect back to the business and its people are important components

in meeting the requirements of the CISSP credential. Every reader will connect these

domains to their own background and perspective. These connections will vary based

on industry, regulatory environment, geography, culture, and unique business operating

environment. With that sentiment in mind, this book’s purpose is not to address all of

these issues or prescribe a set path in these areas. Instead, the aim is to provide an official

guide to the CISSP CBK and allow you, as a security professional, to connect your own

knowledge, experience, and understanding to the CISSP domains and translate the CBK

into value for your organization and the users you protect.

Security and Risk Management

The Security and Risk Management domain entails many of the foundational security

concepts and principles of information security. This domain covers a broad set of topics

and demonstrates how to generally apply the concepts of confidentiality, integrity and

availability across a security program. This domain also includes understanding compliance requirements, governance, building security policies and procedures, business continuity planning, risk management, security education, and training and awareness, and

xxvii

most importantly it lays out the ethnical canons and professional conduct to be demonstrated by (ISC)2 members.

The information security professional will be involved in all facets of security and risk

management as part of the functions they perform across the enterprise. These functions

may include developing and enforcing policy, championing governance and risk management, and ensuring the continuity of operations across an organization in the event of

unforeseen circumstances. To that end, the information security professional must safeguard the organization’s people and data.

Asset Security

The Asset Security domain covers the safeguarding of information and information assets

across their lifecycle to include the proper collection, classification, handling, selection,

and application of controls. Important concepts within this domain are data ownership,

privacy, data security controls, and cryptography. Asset security is used to identify controls

for information and the technology that supports the exchange of that information to

include systems, media, transmission, and privilege.

The information security professional is expected to have a solid understanding of

what must be protected, what access should be restricted, the control mechanisms available, how those mechanisms may be abused, and the appropriateness of those controls,

and they should be able to apply the principles of confidentiality, integrity, availability,

and privacy against those assets.

Security Architecture and Engineering

The Security Architecture and Engineering domain covers the process of designing and

building secure and resilient information systems and associated architecture so that the

information systems can perform their function while minimizing the threats that can be

caused by malicious actors, human error, natural disasters, or system failures. Security

must be considered in the design, in the implementation, and during the continuous

delivery of an information system through its lifecycle. It is paramount to understand

secure design principles and to be able to apply security models to a wide variety of distributed and disparate systems and to protect the facilities that house these systems.

An information security professional is expected to develop designs that demonstrate how

controls are positioned and how they function within a system. The security controls must tie

back to the overall system architecture and demonstrate how, through security engineering,

those systems maintain the attributes of confidentiality, integrity, and availability.

xxviii

Introduction

Communication and Network Security

The Communication and Network Security domain covers secure design principles as they

relate to network architectures. The domain provides a thorough understanding of components of a secure network, secure design, and models for secure network operation. The

domain covers aspects of a layered defense, secure network technologies, and management

techniques to prevent threats across a number of network types and converged networks.

It is necessary for an information security professional to have a thorough understanding of networks and the way in which organizations communicate. The connected world

in which security professionals operate requires that organizations be able to access information and execute transactions in real time with an assurance of security. It is therefore

important that an information security professional be able to identify threats and risks

and then implement mitigation techniques and strategies to protect these communication

channels.

Identity and Access Management (IAM)

The Identity and Access Management (IAM) domain covers the mechanisms by which

an information system permits or revokes the right to access information or perform an

action against an information system. IAM is the mechanism by which organizations

manage digital identities. IAM also includes the organizational policies and processes for

managing digital identities as well as the underlying technologies and protocols needed

to support identity management.

Information security professionals and users alike interact with components of IAM

every day. This includes business services logon authentication, file and print systems,

and nearly any information system that retrieves and manipulates data. This can mean

users or a web service that exposes data for user consumption. IAM plays a critical and

indispensable part in these transactions and in determining whether a user’s request is

validated or disqualified from access.

Security Assessment and Testing

The Security Assessment and Testing domain covers the tenets of how to perform and

manage the activities involved in security assessment and testing, which includes providing a check and balance to regularly verify that security controls are performing optimally

and efficiently to protect information assets. The domain describes the array of tools and

methodologies for performing various activities such as vulnerability assessments, penetration tests, and software tests.

Introduction

xxix

The information security professional plays a critical role in ensuring that security

controls remain effective over time. Changes to the business environment, technical

environment, and new threats will alter the effectiveness of controls. It is important that

the security professional be able to adapt controls in order to protect the confidentiality,

integrity, and availability of information assets.

Security Operations

The Security Operations domain includes a wide range of concepts, principles, best

practices, and responsibilities that are core to effectively running security operations in

any organization. This domain explains how to protect and control information processing assets in centralized and distributed environments and how to execute the daily

tasks required to keep security services operating reliably and efficiently. These activities

include performing and supporting investigations, monitoring security, performing incident response, implementing disaster recovery strategies, and managing physical security

and personnel safety.

In the day-to-day operations of the organization, sustaining expected levels of confidentiality, availability, and integrity of information and business services is where the

information security professional affects operational resiliency. The day-to-day securing,

responding, monitoring, and maintenance of resources demonstrates how the information security professional is able to protect information assets and provide value to the

organization.

Software Development Security

The Software Development Security domain refers to the controls around software, its

development lifecycle, and the vulnerabilities inherent in systems and applications.

Applications and data are the foundation of an information system. An understanding of

this process is essential to the development and maintenance required to ensure dependable and secure software. This domain also covers the development of secure coding

guidelines and standards, as well as the impacts of acquired software.

Software underpins of every system that the information security professional and

users in every business interact with on a daily basis. Being able to provide leadership

and direction to the development process, audit mechanisms, database controls, and web

application threats are all elements that the information security professional will put in

place as part of the Software Development Security domain.

xxx

Introduction

$*4415IF0GGJDJBM *4$ ď¡$*441¡$#,¡3FGFSFODF 'JGUI&EJUJPO

By +PIO8BSTJOLTF

$PQZSJHIU¥CZ *4$ Ē

doMain

1

security and risk

Management

iN tHe PoPular PreSS, we are bombarded with stories of technically savvy

coders with nothing else to do except spend their days stealing information

from computers connected to the Internet. Indeed, many security professionals have built their careers on the singular focus of defeating the wily

hacker. As with all stereotypes, these exaggerations contain a grain of truth:

there are capable hackers, and there are skilled defenders of systems. Yet

these stereotypes obscure the greater challenge of ensuring information, in

all its forms and throughout its lifecycle, is properly protected.

The Certified Information Systems Security Professional (CISSP) Common

Body of Knowledge is designed to provide a broad foundational understanding of information security practice, applicable to a range of organizational structures and information systems. This foundational knowledge

allows information security practitioners to communicate using a consistent

language to solve technical, procedural, and policy challenges. Through this

work, the security practice helps the business or organization achieve its

mission efficiently and effectively.

1

The CBK addresses the role of information security as an essential component

of an organization’s risk management activities. Organizations, regardless of type,

create structures to solve problems. These structures often leverage frameworks

of knowledge or practice to provide some predictability in process. The CISSP CBK

provides a set of tools that allows the information security professional to integrate security practice into those frameworks, protecting the organization’s assets

while respecting the unique trust that comes with the management of sensitive

information.

This revision of the CISSP CBK acknowledges that the means by which we

protect information and the range of information that demands protection are

both rapidly evolving. One consequence of that evolution is a change in focus

of the material. No longer is it enough to simply parrot a list of static facts or

concepts—security professionals must demonstrate the relevance of those

concepts to their particular business problems. Given the volume of information on which the CBK depends, the application of professional judgment in

the study of the CBK is essential. Just as in the real world, answers may not be

simple choices.

Understand and Apply Concepts of

Confidentiality, Integrity, and Availability

For thousands of years, people have sought assurance that information has been captured,

stored, communicated, and used securely. Depending on the context, differing levels of

emphasis have been placed on the availability, integrity, and confidentiality of information, but achieving these basic objectives has always been at the heart of security practice.

As we moved from the time of mud tablets and papyrus scrolls into the digital era, we

watched the evolution of technology to support these three objectives. In today’s world,

where vast amounts of information are accessible at the click of a mouse, our security

decision-making must still consider the people, processes, and systems that assure us that

information is available when we need it, has not been altered, and is protected from disclosure to those not entitled to it.

This module will explore the implications of confidentiality, integrity, and availability

(collectively, the CIA Triad) in current security practices. These interdependent concepts

form a useful and important framework on which to base the study of information security practice.

2

DOMAIN 1 Security and Risk Management

Information Security

1

Co

ility

nfi

lab

den

i

Ava

tial

ity

Security and Risk Management

Information security processes, practices, and technologies can be evaluated based on

how they impact the confidentiality, integrity, and availability of the information being

communicated. The apparent simplicity of the CIA Triad drives a host of security principles, which translate into practices and are implemented with various technologies

against a dizzying array of information sources (see Figure 1.1). Thus, a common understanding of the meaning of each of the elements in the triad allows security professionals

to communicate effectively.

Integrity

Figure 1.1 CIA Triad

Confidentiality

Ensuring that information is provided to only those people who are entitled to access that

information has been one of the core challenges in effective communications. Confidentiality implies that access is limited. Controls need to be identified that separate those

who need to know information from those who do not.

Once we have identified those with legitimate need, then we will apply controls to

enforce their privilege to access the information. Applying the principle of least privilege

ensures that individuals have only the minimum means to access the information to

which they are entitled.

Information about individuals is often characterized as having higher sensitivity to disclosure. The inappropriate disclosure of other types of information may also have adverse

impacts on an organization’s operations. These impacts may include statutory or regulatory noncompliance, loss of unique intellectual property, financial penalties, or the loss of

trust in the ability of the organization to act with due care for the information.

Understand and Apply Concepts of Confidentiality, Integrity, and Availability

3

Integrity

To make good decisions requires acting on valid and accurate information. Change to

information may occur inadvertently, or it may be the result of intentional acts. Ensuring

the information has not been inappropriately changed requires the application of control

over the creation, transmission, presentation, and storage of the information.

Detection of inappropriate change is one way to support higher levels of information

integrity. Many mechanisms exist to detect change in information; cryptographic hashing, reference data, and logging are only some of the means by which detection of change can occur.

Other controls ensure the information has sufficient quality to be relied upon for

decisions. Executing well-formed transactions against constrained data items ensures the

system maintains integrity as information is captured. Controls that address separation of

duties, application of least privilege, and audit against standards also support the validity

aspect of data integrity.

Availability

Availability ensures that the information is accessible when it is needed. Many circumstances can disrupt information availability. Physical destruction of the information, disruption of the communications path, and inappropriate application of access controls are

only a few of the ways availability can be compromised.

Availability controls must address people, processes, and systems. High availability

systems such as provided by cloud computing or clustering are of little value if the people

necessary to perform the tasks for the organization are unavailable. The challenge for the

information security architect is to identify those single points of failure in a system and

apply a sufficient amount of control to satisfy the organization’s risk appetite.

Taken together, the CIA Triad provides a structure for characterizing the information

security implications of a concept, technology, or process. It is infrequent, however, that

such a characterization would have implications on only one side of the triad. For example, applying cryptographic protections over information may indeed ensure the confidentiality of information and, depending on the cryptographic approach, support higher

levels of integrity, but the loss of the keys to those who are entitled to the information

would certainly have an availability implication!

Limitations of the CIA Triad

The CIA Triad evolved out of theoretical work done in the mid-1960s. Precisely because

of its simplicity, the rise of distributed systems and a vast number of new applications for

new technology has caused researchers and security practitioners to extend the triad’s

coverage.

Guaranteeing the identities of parties involved in communications is essential to

confidentiality. The CIA Triad does not directly address the issues of authenticity and

4

DOMAIN 1 Security and Risk Management

nonrepudiation, but the point of nonrepudiation is that neither party can deny that they

participated in the communication. This extension of the triad uniquely addresses aspects

of confidentiality and integrity that were never considered in the early theoretical work.

The National Institute of Standards and Technology (NIST) Special Publication 800-33,

“Underlying Technical Models for Information Technology Security,” included the CIA

Triad as three of its five security objectives, but added the concepts of accountability (that

actions of an entity may be traced uniquely to that entity) and assurance (the basis for confidence that the security measures, both technical and operational, work as intended to protect

the system and the information it processes). The NIST work remains influential as an effort

to codify best-practice approaches to systems security.

Perhaps the most widely accepted extension to the CIA Triad was proposed by information security pioneer Donn B. Parker. In extending the triad, Parker incorporated

three additional concepts into the model, arguing that these concepts were both atomic

(could not be further broken down conceptually) and nonoverlapping. This framework

has come to be known as the Parkerian Hexad (see Figure 1.2). The Parkerian Hexad

contains the following concepts:

Confidentiality: The limits on who has access to information

■■

Integrity: Whether the information is in its intended state

■■

Availability: Whether the information can be accessed in a timely manner

■■

Authenticity: The proper attribution of the person who created the information

■■

Utility: The usefulness of the information

■■

Possession or control: The physical state where the information is maintained

Security and Risk Management

■■

1

Integrity

Confidentiality

Availability

Authenticity

Possession

Utility

Figure 1.2 The Parkerian Hexad

Understand and Apply Concepts of Confidentiality, Integrity, and Availability

5

Subsequent academic work produced dozens of other information security models,

all aimed at the same fundamental issue—how to characterize information security risks.

For the security professional, a solid understanding of the CIA Triad is essential when

communicating about information security practice.

Evaluate and Apply Security Governance

Principles

A security-aware culture requires all levels of the organization to see security as integral

to its activities. The organization’s governance structure, when setting the vision for the

organization, should ensure that protecting the organization’s assets and meeting the

compliance requirements are integral to acting as good stewards of the organization.

Once the organization’s governance structure implements policies that reflect its level

of acceptable risk, management can act with diligence to implement good security

practices.

Alignment of Security Functions to Business Strategy, Goals,

Mission, and Objectives

Information security practice exists to support the organization in the achievement

of its goals. To achieve those goals, the information security practice must take into

account the organizational leadership environment, corporate risk tolerance, compliance expectations, new and legacy technologies and practices, and a constantly evolving set of threats. To be effective, the information security practitioner must be able to

communicate about risk and technology in a manner that will support good corporate

decision-making.

Vision, Mission, and Strategy

Every organization has a purpose. Some organizations define that purpose clearly and

elegantly, in a manner that communicates to all the stakeholders of the organization the

niche that the organization uniquely fills. An organization’s mission statement should

drive the organization’s activities to ensure the efficient and effective allocation of time,

resources, and effort.

The organization’s purpose may be defined by a governmental mandate or jurisdiction. For other organizations, the purpose may be to make products or deliver services

for commercial gain. Still other organizations exist to support their stakeholders’ vision

of society. Regardless, the mission clearly states why an organization exists, and this statement of purpose should drive all corporate activities.

6

DOMAIN 1 Security and Risk Management

What organizations do now, however, is usually different from what they will do in

the future. For an organization to evolve to its future state, a clear vision statement should

inspire the members of the organization to work toward that end. Often, this will require

the organization to change the allocation of time, resources, and efforts to that new and

desired state.

How the organization will go about achieving its vision is the heart of the organization’s strategy. At the most basic level, a corporate strategy is deciding where to spend

time and resources to accomplish a task. Deciding what that task is, however, is often the

hardest part of the process. Many organizations lack the focus on what it is they want to

achieve, resulting in inefficient allocation of time and resources.

Protecting an organization’s information assets is a critical part of the organization’s

strategy. Whether that information is written on paper, is managed by an electronic system, or exists in the minds of the organization’s people, the basic challenge remains the

same: ensuring the confidentiality, integrity, and availability of the information.

It is a long-held tenet that an organization’s information security practice should

support the organization’s mission, vision, and strategy. Grounded in a solid base of information security theory, the application of the principles of information security should

enable the organization to perform its mission efficiently and effectively with an acceptable level of risk.

1

Security and Risk Management

Governance

The organization’s mission and vision must be defined at the highest levels of the organization. In public-sector organizations, governance decisions are made through the

legislative process. In corporate environments, the organization’s board of directors serves

a similar role, albeit constrained by the laws of the jurisdictions in which that entity

conducts business.

Governance is primarily concerned with setting the conditions for the organization’s management to act in the interests of the stakeholders. In setting those conditions, corporate governance organizations are held to a standard of care to ensure that

the organization’s management acts ethically, legally, and fairly in the pursuit of the

organization’s mission.

Acting on behalf of the organization requires that the governing body have tools to

evaluate the risks and benefits of various actions. This can include risks to the financial

health of the organization, failure to meet compliance requirements, or operational risks.

Increasingly, governing bodies are explicitly addressing the risk of compromises to the

information security environment.

In 2015, the Organization for Economic Cooperation and Development (OECD)

revised its Principles of Corporate Governance. These principles were designed to help

Evaluate and Apply Security Governance Principles

7

policymakers evaluate and improve the legal, regulatory, and institutional framework for

corporate governance. These principles have been adopted or endorsed by multiple international organizations as best practice for corporate governance.

✔✔ The OECD Principles of Corporate Governance

The Principles of Corporate Governance provide a framework for effective corporate governance through six chapters.

■■ Chapter 1: This chapter focuses on how governance can be used to promote

efficient allocation of resources, as well as fair and transparent markets.

■■ Chapter 2: Chapter 2 focuses on shareholder rights such as the right to partici-

pate in key decisions through shareholder meetings and the right to have information about the company. It emphasizes equitable treatment of shareholders,

as well as the ownership functions that the OECD considers key functions.

■■ Chapter 3: The economic incentives provided at each level of the investment

chain are covered in this chapter. Minimizing conflicts of interest and the role of

institutional investors who act in a fiduciary capacity are covered, as well as the

importance of fair and effective price discovery in stock markets.

■■ Chapter 4: This chapter focuses on the role of stakeholders in corporate gover-

nance, including how corporations and stockholders should cooperate and the

necessity of establishing the rights of stockholders. It supports Chapter 2’s coverage of stockholder rights, including the right to information and the right to seek

redress for violations of those rights.

■■ Chapter 5: Disclosure of financial and operational information, such as operat-

ing results, company objectives, risk factors, share ownership, and other critical

information, are discussed in this chapter.

■■ Chapter 6: The final chapter discusses the responsibilities of the board of direc-

tors, including selecting management, setting management compensation,

reviewing corporate strategy, risk management, and other areas, such as the

oversight of internal audit and tax planning.

Governance failures in the both the private and public sectors are well known. In particular, failures of organizational governance to address information security practices can

have catastrophic consequences.

8

DOMAIN 1 Security and Risk Management

1

✔✔ Ashley Madison Breach

Security and Risk Management

In July 2015, a major Canadian corporation’s information systems were compromised,

resulting in the breach of personally identifiable information for some 36 million user

accounts in more than 40 countries. Its primary website, AshleyMadison.com, connected

individuals who were seeking to have affairs.

Founded in 2002, the company experienced rapid growth and by the time of the breach

was generating annual revenues in excess of $100 million. Partly due to the organization’s rapid growth, the organization did not have a formal framework for managing risk

or documented information security policies, the information security leadership did not

report to the board (or board of directors) or the CEO, and only some of the organization’s employees had participated in the information security awareness program for the

organization.

The attackers subsequently published the information online, exposing the personal

details of the company’s customers. The breach was widely reported, and subsequent

class-action lawsuits from affected customers claimed damages of more than $567

million. Public shaming of the customers caused a number of high-profile individuals

to resign from their jobs, put uncounted marriages at risk, and, in several instances, was

blamed for individuals’ suicides.

A joint investigation of the incident performed by the Privacy Commissioner of Canada

and the Australian Privacy Commissioner and Acting Australian Information Commissioner identified failures of governance as one of the major factors in the event. The

report found, among a number of failings, that the company’s board and executive leadership had not taken reasonable steps to implement controls for sensitive information

that should have received protection under both Canadian and Australian law.

Almost without exception, the post-compromise reviews of major information security

breaches have identified failed governance practices as one of the primary factors contributing to the compromise.

Information security governance in the public sector follows a similar model. The

laws of the jurisdiction provide a decision-making framework, which is refined through

the regulatory process. In the United States, this structure can be seen in the legislative

adoption of the Federal Information Security Management Act of 2002, which directed

the adoption of good information security practices across the federal enterprise. The several chief executives, interpreting the law, have given force to its implementation through

a series of management directives, ultimately driving the development of a standard body

of information security practice.

Evaluate and Apply Security Governance Principles

9

Other models for organizational governance exist. For example, the World Wide

Web Consortium (W3C), founded by Tim Berners-Lee in 1994, provides a forum for

developing protocols and guidelines to encourage long-term growth for the Web. The

W3C, through a consensus-based decision process, attempts to address the growing technological challenge of diverse uses of the World Wide Web using open, nonproprietary

standards. These include standards for the Extensible Markup Language (XML), Simple

Object Access Protocol (SOAP), Hypertext Markup Language (HTML), and others.

From a governance perspective, this model provides a mechanism for active engagement

from a variety of stakeholders who support the general mission of the organization.

Due Care

Governance requires that the individuals setting the strategic direction and mission of

the organization act on behalf of the stakeholders. The minimum standard for their

governance action requires that they act with due care. This legal concept expects these

individuals to address any risk as would a reasonable person given the same set of facts.

This “reasonable person” test is generally held to include knowledge of the compliance

environment, acting in good faith and within the powers of the office, and avoidance of

conflicts of interest.

In this corporate model, this separation of duties between the organization’s ownership interests and the day-to-day management is needed to ensure that the interests of the

true owners of the organization are not compromised by self-interested decision-making

by management.

The idea of duty of care extends to most legal systems. Article 1384 of the Napoleonic

Code provides that “One shall be liable not only for the damages he causes by his own

act, but also for that which is caused by the acts of persons for whom he is responsible, or

by things which are in his custody.”

For example, the California Civil Code requires that “[a] business that owns, licenses,

or maintains personal information about a California resident shall implement and maintain reasonable security procedures and practices appropriate to the nature of the information, to protect the personal information from unauthorized access, destruction, use,

modification, or disclosure.” In defining reasonable in the California Data Breach Report

in February 2016, the California attorney general invoked the Center for Internet Security (CIS) Critical Security Controls as “a minimum level of information security that all

organizations should meet [and] the failure to implement all of the Controls that apply to

an organization’s environment constitutes a lack of reasonable security.”

In India, the Ministry of Communication and Information Technology similarly used

the ISO 27001 standard as one that meets the minimum expectations for protection of

sensitive personal data or information arising out of the adoption of the Information

Technology Act of 2000.

10

DOMAIN 1 Security and Risk Management

A wide variety of actions beyond adoption of a security framework can be taken by

boards to meet their duty of care. These actions may include bringing individuals into the

governing body with specific expertise in information security, updating of policies which

express the board’s expectations for managing information security, or engaging the services of outside experts to audit and evaluate the organization’s security posture.

Widespread media reports of compromise and the imposition of ever-larger penalties for inappropriate disclosure of sensitive information would suggest that the governance bodies should increase their attention to information security activities. However,

research into the behavior of publicly traded companies suggests that many boards have

not significantly increased their oversight of information security practices.

A 2017 Stanford University study suggests that organizations still lack sufficient cybersecurity expertise and, as a consequence, have not taken actions to mitigate the risks from

data theft, loss of intellectual property, or breach of personally identifiable information.

When such failures become public, most announce steps to improve security, provide

credit monitoring, or enter into financial settlements with injured parties.

Almost without exception, corporations that experienced breaches made no changes

to their oversight of information security activities, nor did they add cybersecurity professionals to their boards. Boards held few executives directly accountable, either through

termination or reductions in compensation. In other words, despite widespread recognition that there is increasing risk from cyber attacks, most governance organizations have

not adopted new approaches to more effectively address weaknesses in cybersecurity

governance.

Compliance means that all the rules and regulations pertinent to your organization

have been met. Compliance is an expectation that is defined by an external entity that