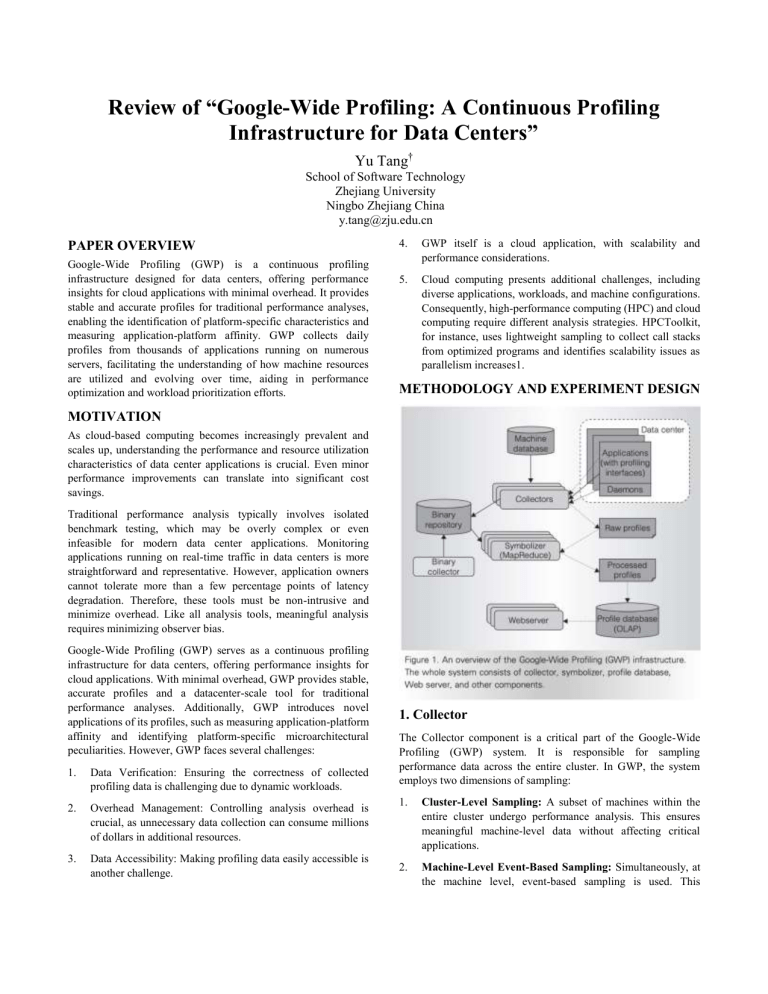

Review of “Google-Wide Profiling: A Continuous Profiling Infrastructure for Data Centers”

Yu Tang†
School of Software Technology
Zhejiang University
Ningbo, Zhejiang, China
y.tang@zju.edu.cn

PAPER OVERVIEW

Google-Wide Profiling (GWP) is a continuous profiling infrastructure designed for data centers. It offers performance insights into cloud applications with minimal overhead and provides stable, accurate profiles for traditional performance analyses. It also makes it possible to identify platform-specific characteristics and to measure application-platform affinity. GWP collects daily profiles from thousands of applications running on numerous servers, showing how machine resources are used and how that usage evolves over time, which supports performance optimization and workload prioritization.

MOTIVATION

As cloud-based computing becomes more prevalent and scales up, understanding the performance and resource utilization of data center applications is crucial. Even minor performance improvements can translate into significant cost savings. Traditional performance analysis typically relies on isolated benchmark testing, which may be overly complex or even infeasible for modern data center applications. Monitoring applications as they serve live traffic in the data center is more straightforward and more representative. However, application owners cannot tolerate more than a few percent of latency degradation, so profiling tools must be non-intrusive and impose minimal overhead. As with all analysis tools, the distortion introduced by observation must also be kept small for the results to be meaningful.

GWP serves as a continuous profiling infrastructure for data centers that meets these requirements. With minimal overhead, it provides stable, accurate profiles and a datacenter-scale tool for traditional performance analyses. GWP also introduces novel uses of its profiles, such as measuring application-platform affinity and identifying platform-specific microarchitectural peculiarities.

However, GWP faces several challenges:

1. Data Verification: Ensuring the correctness of collected profiling data is difficult because workloads are dynamic.
2. Overhead Management: Controlling profiling overhead is crucial, as unnecessary data collection can consume millions of dollars in additional resources.
3. Data Accessibility: Making profiling data easily accessible is another challenge.
4. GWP itself is a cloud application, with its own scalability and performance considerations.
5. Cloud computing presents additional challenges, including diverse applications, workloads, and machine configurations. Consequently, high-performance computing (HPC) and cloud computing require different analysis strategies. HPCToolkit, for instance, uses lightweight sampling to collect call stacks from optimized programs and identifies scalability issues as parallelism increases [1].

METHODOLOGY AND EXPERIMENT DESIGN

1. Collector

The Collector is a critical component of GWP. It is responsible for sampling performance data across the entire cluster. GWP samples along two dimensions:

1. Cluster-Level Sampling: Only a subset of the machines in the cluster is profiled at any given time. This yields meaningful machine-level data without affecting critical applications.
2. Machine-Level Event-Based Sampling: On each selected machine, event-based sampling captures relevant data such as stack traces, hardware events, heap profiles, and kernel events.

The Collector periodically retrieves a list of all machines from the central machine database. It then selects a subset of those machines, remotely activates profiling on them, and retrieves the results.
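The cluster-level half of this sampling scheme can be illustrated with a minimal sketch. It assumes the subset is drawn uniformly at random and that the machine list is a plain list of names; the function name `select_machines` and the `fraction` parameter are hypothetical and are not taken from the paper or from GWP itself.

```python
import random


def select_machines(all_machines, fraction, rng=None):
    """Pick a random subset of machines to profile in one collection round.

    Assumption: uniform random selection; the review only states that
    a subset of machines is selected.
    """
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    machines = list(all_machines)
    if not machines:
        return []
    rng = rng or random.Random()
    # Profile at least one machine, even for very small fractions.
    count = max(1, round(len(machines) * fraction))
    # random.Random.sample draws without replacement, so no machine
    # is selected twice in the same round.
    return rng.sample(machines, count)


if __name__ == "__main__":
    fleet = [f"machine-{i}" for i in range(1000)]  # hypothetical machine names
    chosen = select_machines(fleet, fraction=0.01, rng=random.Random(0))
    print(len(chosen), "machines selected for this round")
```

Keeping the fraction small is what bounds the fleet-wide cost: only the selected machines pay the profiling overhead in a given round.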
The Collector can retrieve different types of sample data sequentially or concurrently, including hardware performance counters, lock contention, and memory allocation. To improve availability and limit the disturbance caused by the Collector itself, the GWP Collector operates as a distributed service. It monitors error conditions and stops profiling when the failure rate exceeds predefined thresholds, reducing interference with running services and machines.

By profiling only a small subset of machines at a time, the Collector obtains meaningful machine-level data for the entire cluster. Regular activation of profiling and retrieval of results helps users monitor and optimize resource utilization without wasting resources.

2. Profiles and profiling interfaces

GWP collects two types of profiles: machine-level profiles and per-process profiles. The machine-level profiles capture all activity on a machine, including user applications, kernel operations, kernel modules, daemons, and other background tasks. These profiles support multidimensional visualization and analysis of performance data, helping users gain a comprehensive understanding of application performance characteristics and optimize accordingly. Symbolization, which associates performance data with source code, helps users understand the code execution behind the data. Efficient storage and management of performance data and binary files ensures data integrity and accessibility, so that users receive fast and accurate performance analysis.

3. Symbolization and binary storage

After collecting data, GWP stores the profiles in the Google File System (GFS). To provide useful insights, these profiles must be associated with their corresponding source code. However, to conserve network bandwidth and disk space, applications deployed in data centers usually carry no debug or symbol information, which makes establishing this association difficult. In addition, some applications, such as those based on Java, are compiled just in time, and their code is often unavailable offline, making symbolization impossible.

The current implementation stores the unstripped binaries, with their debug information, in a central repository. Because these binaries are large, symbolizing each day's profiles can be time-consuming. To mitigate this, symbolization is distributed with MapReduce, which reduces latency.

4. Profile storage

GWP stores profile logs and their corresponding binary files in GFS, ensuring the integrity and reliability of performance data. The system has accumulated several terabytes of historical performance data, which are crucial for performance analysis and optimization. During data loading, GWP loads samples into a read-only multidimensional database distributed across hundreds of machines, making the data accessible to users and to the system. This service supports both user-driven queries and automated analysis. Because many queries recur frequently, GWP employs an aggressive caching strategy to reduce query latency.

RELIABILITY ANALYSIS

To reduce the overhead of continuous profiling, the authors sample across two dimensions: time and machines. However, sampling inherently introduces variation, so its impact on profile quality must be understood. This is challenging because data center workloads behave dynamically, and there is no direct method to assess how representative a profile is. The paper therefore evaluates profile quality through the following two indirect approaches.

1. Analyze the stability of profiles

Entropy

First, the authors propose using entropy to evaluate the variation of a given profile. The entropy H is defined as:

$$H(W) = -\sum_{i=1}^{n} p(x_i) \log p(x_i)$$

where n is the total number of entries in the profile and p(x) is the fraction of profile samples on the entry x.

Entropy is a measure of uncertainty for a random variable. In the context of performance data, it reflects how the samples are distributed across entries. A high entropy value indicates a flat profile whose samples are spread across many entries, while a low value indicates that the profile has few samples or that most samples are concentrated in a few entries. A profile is considered stable when its entropy changes little from one day to the next, and the authors use this as an indirect indicator of the reliability and representativeness of the data.

Profiles’ Entropy

To assess the uncertainty and variability of performance data, the authors compute the entropy of the collected profiles. Entropy measures the diversity of the data and the uniformity of its distribution, so it shows how concentrated the samples in a profile are and how that concentration changes over time.
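The entropy calculation described in this section can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: it assumes a profile is represented as a dictionary mapping entry names to sample counts, and it uses a base-2 logarithm, which the text does not specify. The function name `profile_entropy` is hypothetical.

```python
import math


def profile_entropy(sample_counts):
    """Entropy of a profile given as {entry_name: sample_count}.

    p(x) is the fraction of all samples that fall on entry x.
    Assumption: log base 2, so the result is in bits.
    """
    total = sum(sample_counts.values())
    if total == 0:
        return 0.0
    entropy = 0.0
    for count in sample_counts.values():
        if count > 0:  # entries with no samples contribute nothing
            p = count / total
            entropy -= p * math.log2(p)
    return entropy


if __name__ == "__main__":
    flat = {"foo": 25, "bar": 25, "baz": 25, "qux": 25}
    skewed = {"foo": 97, "bar": 1, "baz": 1, "qux": 1}
    print(profile_entropy(flat))    # flat profile: higher entropy
    print(profile_entropy(skewed))  # concentrated profile: lower entropy
```

Computing this value for each day's profile and comparing the results across days gives the kind of stability check the section describes.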
Profile entropy can also be used to assess the quality of the sampling. An unusually low entropy value may indicate too few samples or samples concentrated in a few entries, while a higher value indicates samples spread across many entries. In their experiments, the authors observe that the daily application-level entropy remains relatively stable across dates, typically falling within a narrow range. This indicates that the profiles vary little from day to day.

Manhattan Distance

Entropy does not take the names of the entries into account. For example, consider two function profiles:

1. Profile A has x% of its samples on “foo” and y% on “bar.”
2. Profile B has y% of its samples on “foo” and x% on “bar.”

Although the two distributions differ, the entropy of the two profiles is equal. To reflect differences in entry names between profiles, the authors calculate the Manhattan distance between the top k entries of the two profiles:

$$M(X, Y) = \sum_{i=1}^{k} \left| p_X(x_i) - p_Y(x_i) \right|$$

where X and Y are two profiles, k is the number of top entries to count, and $p_Y(x_i)$ is 0 when $x_i$ is not in Y. Essentially, the Manhattan distance is a simplified version of the relative entropy between two profiles.

2. Comparing with Other Sources

In addition to assessing the stability of profiles across dates, the authors cross-validate the profiles against performance and utilization data from other Google sources. One example is the utilization data collected by the data center monitoring system. Unlike GWP, which collects data from a subset of machines, the monitoring system gathers data from all machines in the data center. However, its data is coarser-grained, for example overall CPU utilization.
The CPU utilization data (measured in core-seconds) aligns with the measurements from GWP’s CPU cycle profiles, and the relationship is expressed by the following formula:

$$\text{CoreSeconds} = \frac{\text{Cycles} \times \text{SamplingRate}_{\text{machine}} \times \text{SamplingPeriod}}{\text{CPUFrequency}_{\text{average}}}$$

LIMITATION

Limitations of Sampling Rate on Performance Impact: Although the default sampling rate is high enough to yield top-level performance insights with high confidence, there are cases where GWP may not collect enough samples for a comprehensive performance analysis, and for certain applications a complete analysis may be out of reach. The authors propose extending GWP to application-specific profiling in cloud environments, but using high sampling rates on the machines dedicated to a specific application introduces additional overhead. The critical questions remain unanswered: what exactly is the impact of increasing the sampling rate, and how significantly does it affect performance results? The paper provides neither a detailed explanation nor experimental validation on this point.