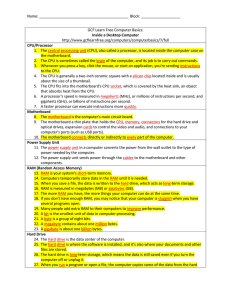

CompTIA A+ Certification All-in-One Exam Guide, 8th Edition
