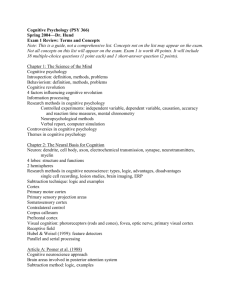

Eysenck, Michael W., and Keane, Mark T. (2015). Cognitive Psychology: A Student's Handbook (7th ed.).
