
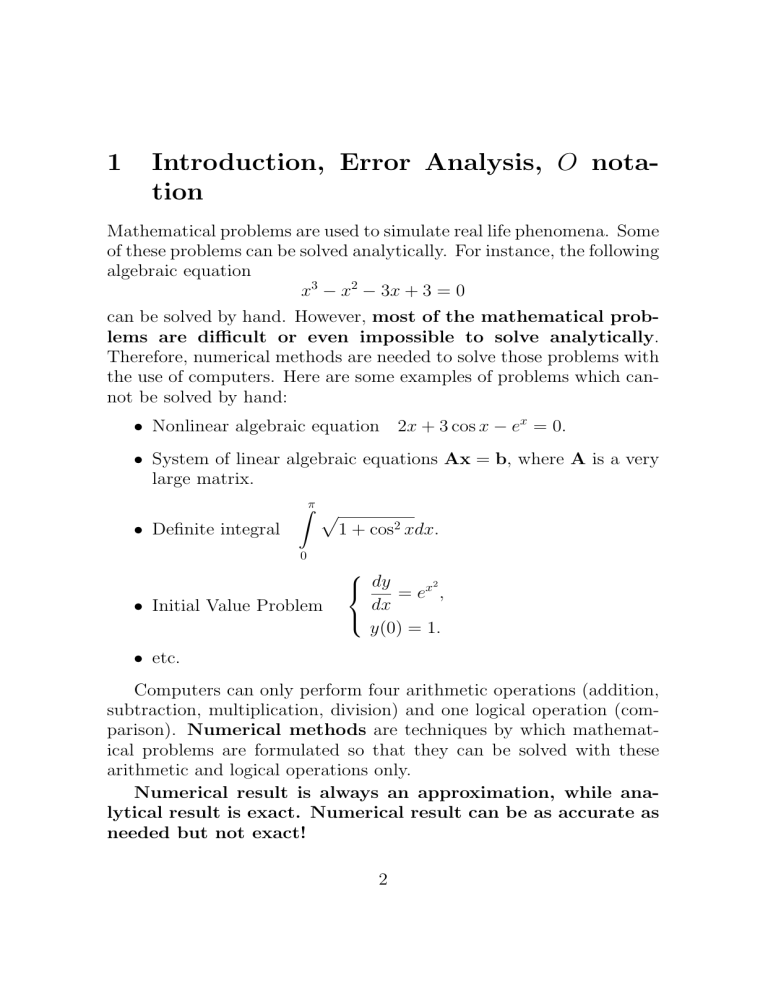

Introduction, Error Analysis, O notation

Mathematical problems are used to model real-life phenomena. Some of these problems can be solved analytically. For instance, the algebraic equation

$$x^3 - x^2 - 3x + 3 = 0$$

can be solved by hand: factoring gives $(x-1)(x^2-3) = 0$, so $x = 1$ or $x = \pm\sqrt{3}$. However, most mathematical problems are difficult or even impossible to solve analytically. Therefore, numerical methods are needed to solve such problems with the use of computers. Here are some examples of problems that cannot be solved by hand:

• Nonlinear algebraic equation $2x + 3\cos x - e^x = 0$.

• System of linear algebraic equations $Ax = b$, where $A$ is a very large matrix.

• Definite integral $\displaystyle\int_0^{\pi} \sqrt{1 + \cos^2 x}\,dx$.

• Initial value problem $\dfrac{dy}{dx} = e^{x^2}$, $y(0) = 1$.

• etc.

Computers can only perform four arithmetic operations (addition, subtraction, multiplication, division) and one logical operation (comparison). Numerical methods are techniques by which mathematical problems are formulated so that they can be solved with these arithmetic and logical operations only.

A numerical result is always an approximation, while an analytical result is exact. A numerical result can be made as accurate as needed, but it is not exact!

Lecture Notes - Numerical Methods

M.Ashyraliyev

1.1 Absolute and Relative Errors

Definition 1.1. Suppose $\hat{x}$ is an approximation to $x$. The absolute error $E_x$ in the approximation of $x$ is defined as the magnitude of the difference between the exact value $x$ and the approximate value $\hat{x}$. So,

$$E_x = |x - \hat{x}|. \tag{1.1}$$

The relative error $R_x$ in the approximation of $x$ is defined as the ratio of the absolute error to the magnitude of $x$ itself. So,

$$R_x = \frac{|x - \hat{x}|}{|x|}, \quad x \neq 0. \tag{1.2}$$

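Example 1.1. Let $\hat{x} = 1.02$ be an approximation of $x = 1.01594$, $\hat{y} = 999996$ be an approximation of $y = 1000000$, and $\hat{z} = 0.000009$ be an approximation of $z = 0.000012$. Then

$$\begin{aligned}
E_x &= |x - \hat{x}| = |1.01594 - 1.02| = 0.00406,\\
R_x &= \frac{|x - \hat{x}|}{|x|} = \frac{|1.01594 - 1.02|}{|1.01594|} \approx 0.0039963,\\
E_y &= |y - \hat{y}| = |1000000 - 999996| = 4,\\
R_y &= \frac{|y - \hat{y}|}{|y|} = \frac{|1000000 - 999996|}{|1000000|} = 0.000004,\\
E_z &= |z - \hat{z}| = |0.000012 - 0.000009| = 0.000003,\\
R_z &= \frac{|z - \hat{z}|}{|z|} = \frac{|0.000012 - 0.000009|}{|0.000012|} = 0.25.
\end{aligned}$$

Note that $E_x \approx R_x$, $R_y \ll E_y$ and $E_z \ll R_z$. When $|x|$ is close to 1, the absolute and relative errors are similar; when $|x|$ is very large or very small, they can differ by orders of magnitude.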
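Definitions (1.1) and (1.2) translate directly into code. The following is a minimal Python sketch (the function names `abs_error` and `rel_error` are our own, not from any library) that recomputes the three pairs of Example 1.1 in double precision; any difference in the last printed digits would come from binary round-off, discussed in Section 1.2.2.

```python
def abs_error(x: float, x_hat: float) -> float:
    """Absolute error E_x = |x - x_hat|, as in (1.1)."""
    return abs(x - x_hat)


def rel_error(x: float, x_hat: float) -> float:
    """Relative error R_x = |x - x_hat| / |x|, as in (1.2); requires x != 0."""
    if x == 0:
        raise ValueError("relative error is undefined for x = 0")
    return abs(x - x_hat) / abs(x)


# The three (exact, approximate) pairs from Example 1.1
for exact, approx in [(1.01594, 1.02), (1000000.0, 999996.0), (0.000012, 0.000009)]:
    print(f"E = {abs_error(exact, approx):.3g}, R = {rel_error(exact, approx):.5g}")
```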

1.2 Types of Errors in Numerical Procedures

When a numerical method is applied to solve a mathematical problem on a computer, one has to be aware of two main types of errors that may significantly affect the numerical result. The first type, so-called truncation errors, occurs when a mathematical problem is reformulated so that it can be solved with arithmetic operations only. The second type, so-called round-off errors, occurs when a machine actually carries out those arithmetic operations using only finitely many digits.

Here are some real-life examples of what can happen when errors in numerical algorithms are underestimated.

• The Patriot missile failure in Dhahran, Saudi Arabia, on February 25, 1991, which resulted in 28 deaths, is ultimately attributable to poor handling of rounding errors.

• The explosion of the Ariane 5 rocket just after lift-off on its maiden voyage off French Guiana, on June 4, 1996, was ultimately the consequence of a simple overflow.

• The sinking of the Sleipner A offshore platform in Gandsfjorden near Stavanger, Norway, on August 23, 1991, resulted in a loss of nearly one billion dollars. It was found to be the result of inaccurate finite element analysis.

1.2.1 Truncation Errors

The four arithmetic operations are sufficient to evaluate any polynomial function. But how can we evaluate other functions using only these four operations? For instance, how do we find the value of the function $f(x) = \sqrt{x}$ at $x = 5$?

Taylor's theorem allows such functions to be approximated by polynomials. Let us first recall Taylor's theorem from calculus.


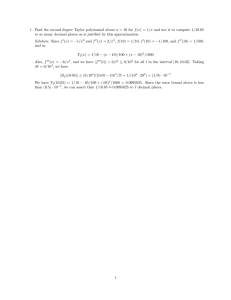

Theorem 1.1. (Taylor's Theorem)

Suppose the function $f$ has continuous derivatives up to order $n+1$ on the interval $[a, b]$. Then for any points $x$ and $x_0$ in $[a, b]$ there exists a number $\xi$ between $x$ and $x_0$ such that

$$f(x) = P_n(x) + R_n(x), \tag{1.3}$$

where

$$P_n(x) = f(x_0) + f'(x_0)(x - x_0) + \frac{f''(x_0)}{2!}(x - x_0)^2 + \dots + \frac{f^{(n)}(x_0)}{n!}(x - x_0)^n \tag{1.4}$$

is called the $n$-th degree Taylor polynomial and

$$R_n(x) = \frac{f^{(n+1)}(\xi)}{(n+1)!}(x - x_0)^{n+1} \tag{1.5}$$

is called the remainder term. Formulas (1.3)-(1.5) are called the Taylor expansion of the function $f(x)$ about the point $x_0$.

Remark 1.1. If the remainder term $R_n(x)$ in (1.3) is small enough, then the function $f(x)$ can be approximated by the Taylor polynomial $P_n(x)$, so that

$$f(x) \approx P_n(x). \tag{1.6}$$

The error in this approximation is then the magnitude of the remainder term $R_n(x)$, since $E = |f(x) - P_n(x)| = |R_n(x)|$. In numerical analysis, the remainder term (1.5) is usually called the truncation error of approximation (1.6).

Example 1.2. Let $f(x) = e^x$. Then, using Taylor's theorem with $x_0 = 0$, we have

$$e^x = P_n(x) + R_n(x),$$

where

$$P_n(x) = 1 + \frac{x}{1!} + \frac{x^2}{2!} + \frac{x^3}{3!} + \dots + \frac{x^n}{n!}$$

is the Taylor polynomial and

$$R_n(x) = \frac{e^{\xi}}{(n+1)!}\,x^{n+1}$$

is the remainder term (or truncation error) associated with $P_n(x)$. Here $\xi$ is some point between 0 and $x$.

Now assume that we want to approximate the value $f(1) = e$ with Taylor polynomials. The value of the number $e$ is known from the literature: $e = 2.718281828459\dots$

The Taylor polynomials of degree up to 5 for the function $f(x) = e^x$ are:

$$\begin{aligned}
P_0(x) &= 1,\\
P_1(x) &= 1 + x,\\
P_2(x) &= 1 + x + \frac{x^2}{2},\\
P_3(x) &= 1 + x + \frac{x^2}{2} + \frac{x^3}{6},\\
P_4(x) &= 1 + x + \frac{x^2}{2} + \frac{x^3}{6} + \frac{x^4}{24},\\
P_5(x) &= 1 + x + \frac{x^2}{2} + \frac{x^3}{6} + \frac{x^4}{24} + \frac{x^5}{120}.
\end{aligned}$$

Then

$$\begin{aligned}
P_0(1) &= 1 &&\Longrightarrow\quad \text{Error} = |f(1) - P_0(1)| = 1.718281828459\dots\\
P_1(1) &= 2 &&\Longrightarrow\quad \text{Error} = |f(1) - P_1(1)| = 0.718281828459\dots\\
P_2(1) &= 2.5 &&\Longrightarrow\quad \text{Error} = |f(1) - P_2(1)| = 0.218281828459\dots\\
P_3(1) &= 2.666666\dots &&\Longrightarrow\quad \text{Error} = |f(1) - P_3(1)| = 0.051615161792\dots\\
P_4(1) &= 2.708333\dots &&\Longrightarrow\quad \text{Error} = |f(1) - P_4(1)| = 0.009948495126\dots\\
P_5(1) &= 2.716666\dots &&\Longrightarrow\quad \text{Error} = |f(1) - P_5(1)| = 0.001615161792\dots
\end{aligned}$$

We observe that the truncation error decreases as the degree of the Taylor polynomial increases.
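The values above can be reproduced with a few lines of Python. In this sketch `taylor_exp` is a hypothetical helper that sums $x^k/k!$ for $k = 0, \dots, n$ in double precision, and `math.e` serves as the reference value of $e$.

```python
import math


def taylor_exp(x: float, n: int) -> float:
    """n-th degree Taylor polynomial of e^x about x0 = 0: sum of x^k / k! for k = 0..n."""
    return sum(x**k / math.factorial(k) for k in range(n + 1))


for n in range(6):
    approx = taylor_exp(1.0, n)
    print(f"P_{n}(1) = {approx:.6f}   error = {abs(math.e - approx):.12f}")
```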


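Now let us find the degree of the Taylor polynomial that should be used to approximate $f(1)$ so that the error is less than $10^{-6}$. Since $\xi$ lies between 0 and 1, we have $e^{\xi} \le e < 3$, so

$$|R_n(1)| = \frac{e^{\xi}}{(n+1)!} \le \frac{e}{(n+1)!} < \frac{3}{(n+1)!}.$$

It therefore suffices to require $\dfrac{3}{(n+1)!} < 10^{-6}$, i.e. $(n+1)! > 3 \cdot 10^{6}$. Since $9! = 362880$ and $10! = 3628800$, this holds for $n \ge 9$.

So, analyzing the remainder term, we can say that the 9th-degree Taylor polynomial is certain to give an approximation with error less than $10^{-6}$. Indeed, we have

$$P_9(1) = 1 + \frac{1}{1!} + \frac{1}{2!} + \frac{1}{3!} + \frac{1}{4!} + \frac{1}{5!} + \frac{1}{6!} + \frac{1}{7!} + \frac{1}{8!} + \frac{1}{9!} = 2.718281525573\dots,$$

for which $|f(1) - P_9(1)| = 0.000000302886\dots < 10^{-6}$.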
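The degree search can be automated. This sketch assumes, as in the text, the bound $e^{\xi} < 3$ for $0 < \xi < 1$; the helper name `degree_for_tolerance` is illustrative. It increases $n$ until $3/(n+1)! < 10^{-6}$ and then evaluates the actual error of $P_n(1)$.

```python
import math


def degree_for_tolerance(tol: float, deriv_bound: float = 3.0) -> int:
    """Smallest n with deriv_bound / (n+1)! < tol.

    deriv_bound is an assumed upper bound on e^xi for 0 < xi < 1 (here e < 3),
    so deriv_bound / (n+1)! bounds the truncation error |R_n(1)|.
    """
    n = 0
    while deriv_bound / math.factorial(n + 1) >= tol:
        n += 1
    return n


n = degree_for_tolerance(1e-6)
p_n = sum(1 / math.factorial(k) for k in range(n + 1))  # P_n(1)
print(n, p_n, abs(math.e - p_n))
```

In this case the a-priori bound is not wasteful: $P_8(1)$ has an actual error of about $3.06 \times 10^{-6}$, so degree 9 is also the smallest degree that actually meets the tolerance.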

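Example 1.3. Consider the definite integral

$$p = \int_0^{1/2} e^{x^2}\,dx = 0.544987104184\dots$$

From the previous example we have the approximation

$$e^x \approx 1 + \frac{x}{1!} + \frac{x^2}{2!} + \frac{x^3}{3!} + \frac{x^4}{4!}.$$

Replacing $x$ with $x^2$, we get

$$e^{x^2} \approx 1 + \frac{x^2}{1!} + \frac{x^4}{2!} + \frac{x^6}{3!} + \frac{x^8}{4!}.$$

Then

$$\begin{aligned}
p = \int_0^{1/2} e^{x^2}\,dx &\approx \int_0^{1/2} \left(1 + x^2 + \frac{x^4}{2} + \frac{x^6}{6} + \frac{x^8}{24}\right) dx = \left[x + \frac{x^3}{3} + \frac{x^5}{10} + \frac{x^7}{42} + \frac{x^9}{216}\right]_0^{1/2}\\
&= \frac{1}{2} + \frac{1}{3 \cdot 2^3} + \frac{1}{10 \cdot 2^5} + \frac{1}{42 \cdot 2^7} + \frac{1}{216 \cdot 2^9} = 0.54498672\dots = \hat{p},
\end{aligned}$$

with the error in the approximation being $|p - \hat{p}| \approx 3.8 \times 10^{-7}$.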
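A sketch of the same computation in Python: integrating $x^{2k}/k!$ term by term from 0 to $b$ gives $b^{2k+1}/\big(k!\,(2k+1)\big)$. The helper `integral_series` is hypothetical, and, as an assumption, the sum with 30 terms is used as a reference value for $p$, since the terms decrease very rapidly for $b = 1/2$.

```python
import math


def integral_series(b: float, terms: int) -> float:
    """Integral from 0 to b of the truncated series e^{x^2} ≈ sum_{k < terms} x^{2k} / k!.

    Each term x^{2k}/k! integrates to b^{2k+1} / (k! (2k+1)).
    """
    return sum(b ** (2 * k + 1) / (math.factorial(k) * (2 * k + 1)) for k in range(terms))


p_hat = integral_series(0.5, 5)   # terms up to x^8/4!, as in Example 1.3
p_ref = integral_series(0.5, 30)  # assumed reference value for p
print(f"p_hat = {p_hat:.8f}, |p - p_hat| = {abs(p_ref - p_hat):.1e}")
```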

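Example 1.4. What is the maximum possible error in using the approximation

$$\sin x \approx x - \frac{x^3}{3!} + \frac{x^5}{5!}$$

when $-0.3 \le x \le 0.3$?

Solution: Using Taylor's theorem for the function $f(x) = \sin x$ with $x_0 = 0$, we have

$$\sin x = x - \frac{x^3}{3!} + \frac{x^5}{5!} + R_6(x),$$

where

$$R_6(x) = \frac{f^{(7)}(\xi)}{7!}\,x^7 = -\cos(\xi)\,\frac{x^7}{7!}.$$

We may use $R_6$ here because the degree-6 term of the expansion vanishes ($f^{(6)}(0) = -\sin 0 = 0$), so the given polynomial is also $P_6(x)$. Since $|\cos \xi| \le 1$, for $-0.3 \le x \le 0.3$ we have

$$|R_6(x)| \le \frac{|x|^7}{7!} \le \frac{0.3^7}{7!} \approx 4.34 \times 10^{-8}.$$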
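The bound can be checked numerically. This Python sketch (names are illustrative) evaluates the error $|\sin x - (x - x^3/3! + x^5/5!)|$ on a grid of 1001 points in $[-0.3, 0.3]$; sampling only confirms the bound at those points, it does not prove it.

```python
import math


def sin_poly(x: float) -> float:
    """The approximation sin x ≈ x - x^3/3! + x^5/5! from Example 1.4."""
    return x - x**3 / 6 + x**5 / 120


bound = 0.3**7 / math.factorial(7)  # a-priori bound on |R_6(x)| for |x| <= 0.3

grid = [-0.3 + 0.6 * i / 1000 for i in range(1001)]  # 1001 points covering [-0.3, 0.3]
observed = max(abs(math.sin(x) - sin_poly(x)) for x in grid)
print(f"bound = {bound:.3e}, largest observed error = {observed:.3e}")
```

The largest observed error occurs at the endpoints $x = \pm 0.3$ and is only slightly below the bound.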

Remark 1.2. The truncation error is under the user's control: it can be reduced as much as needed, for example by using a higher-degree Taylor polynomial. However, it cannot be eliminated entirely!

1.2.2 Round-off Errors

Computers use only a fixed number of digits to represent a number. As a result, the numerical values stored in a computer are said to have finite precision. Because of this, round-off errors occur when the arithmetic operations performed by a machine involve numbers with only a finite number of digits.

Remark 1.3. Round-off errors depend on the hardware and the computer language used.


To understand the effect of round-off errors, we should first understand how numbers are stored on a machine. Although computers use the binary number system (every digit is 0 or 1), for simplicity of explanation we use the decimal number system here.

Any nonzero real number $y$ can be normalized to the form

$$y = \pm 0.d_1 d_2 \dots d_{k-1} d_k d_{k+1} d_{k+2} \dots \times 10^n, \tag{1.7}$$

where $n \in \mathbb{Z}$, $1 \le d_1 \le 9$ and $0 \le d_j \le 9$ for $j > 1$. For instance,

$$y = \frac{38}{3} = 0.126666\dots \times 10^2 \quad\text{or}\quad y = \frac{1}{33} = 0.3030303030\dots \times 10^{-1}.$$

The real number $y$ in (1.7) may have infinitely many digits. As we have already mentioned, a computer stores only a finite number of digits. That is why $y$ must be represented by the so-called floating-point form of $y$, denoted by $fl(y)$, which has, say, only $k$ digits. There are two ways to do this. One method, called chopping, simply chops off the digits $d_{k+1} d_{k+2} \dots$ in (1.7) to obtain

$$fl_c(y) = \pm 0.d_1 d_2 \dots d_{k-1} d_k \times 10^n.$$

The other method, called rounding, works as follows: if $d_{k+1} < 5$, the result is the same as chopping; if $d_{k+1} \ge 5$, then 1 is added to the $k$-th digit and the resulting number is chopped. The floating-point form of $y$ obtained with rounding is denoted by $fl_r(y)$.

Example 1.5. Determine the five-digit (a) chopping and (b) rounding values of the numbers $\frac{22}{7} = 3.14285714\dots$ and $\pi = 3.14159265\dots$

Solution:

(a) Five-digit chopping gives $fl_c\!\left(\frac{22}{7}\right) = 0.31428 \times 10^1$ and $fl_c(\pi) = 0.31415 \times 10^1$. Therefore, the errors of chopping are

$$\left|fl_c\!\left(\tfrac{22}{7}\right) - \tfrac{22}{7}\right| \approx 5.7 \times 10^{-5}; \qquad |fl_c(\pi) - \pi| \approx 9.3 \times 10^{-5}.$$

(b) Five-digit rounding gives $fl_r\!\left(\frac{22}{7}\right) = 0.31429 \times 10^1$ and $fl_r(\pi) = 0.31416 \times 10^1$. So, the errors of rounding are

$$\left|fl_r\!\left(\tfrac{22}{7}\right) - \tfrac{22}{7}\right| \approx 4.3 \times 10^{-5}; \qquad |fl_r(\pi) - \pi| \approx 7.3 \times 10^{-6}.$$

We observe that the rounded values have smaller errors than the chopped values.
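Chopping and rounding to $k$ significant decimal digits can be sketched in Python with the standard `decimal` module. Assumptions: the helper `fl` is hypothetical, it starts from the shortest decimal representation that Python prints for a float (`repr`), and the result is converted back to a binary float, so it is only as exact as a double can store it.

```python
import math
from decimal import Decimal, ROUND_DOWN, ROUND_HALF_UP


def fl(y: float, k: int, mode: str = "round") -> float:
    """k-digit decimal floating-point form of y, by chopping ("chop") or rounding ("round")."""
    if y == 0:
        return 0.0
    d = Decimal(repr(y))                  # decimal digits of y as Python prints them
    n = d.adjusted() + 1                  # exponent n in y = ±0.d1 d2 ... x 10^n
    quantum = Decimal((0, (1,), n - k))   # 10^(n-k): place value of the k-th digit
    rounding = ROUND_DOWN if mode == "chop" else ROUND_HALF_UP
    return float(d.quantize(quantum, rounding=rounding))


for name, y in [("22/7", 22 / 7), ("pi", math.pi)]:
    chopped, rounded = fl(y, 5, "chop"), fl(y, 5, "round")
    print(f"{name}: chop {chopped} (error {abs(chopped - y):.1e}), "
          f"round {rounded} (error {abs(rounded - y):.1e})")
```

`ROUND_DOWN` truncates toward zero, which is exactly chopping of the digits $d_{k+1} d_{k+2} \dots$ for either sign.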

Remark 1.4. The error that results from replacing a number with its floating-point form is called round-off error, regardless of whether the rounding or the chopping method is used. However, rounding never gives a larger error than chopping (the rounding error is at most half a unit in the $k$-th digit, while the chopping error can approach a full unit), which is why rounding is generally preferred.

1.2.3 Loss of Significance

A loss of significance can occur when two nearly equal quantities are subtracted from one another. For example, both numbers $a = 0.177241$ and $b = 0.177589$ have 6 significant digits, but their difference $b - a = 0.000348 = 0.348 \times 10^{-3}$ has only three significant digits. So, in the subtraction of these numbers three significant digits have been lost! This loss is called subtractive cancellation.

Errors can also occur when two quantities of radically different magnitudes are added. For example, if we add the numbers $x = 0.1234$ and $y = 0.6789 \times 10^{-20}$, the result $x + y$ will be rounded to $0.1234$ by a machine that keeps only 16 significant digits.
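Both effects can be observed directly in IEEE double precision, which keeps roughly 16 significant decimal digits. In this sketch (helper names are illustrative), the first check mirrors the $0.1234 + 0.6789 \times 10^{-20}$ example, and the second measures the relative error of recovering $h$ as $(1 + h) - 1$, a subtraction of nearly equal numbers.

```python
def absorbed(x: float, y: float) -> bool:
    """True if x + y rounds back to exactly x, i.e. y is lost entirely."""
    return x + y == x


def cancellation_rel_error(h: float) -> float:
    """Relative error when h is recovered as (1 + h) - 1 in double precision."""
    computed = (1.0 + h) - 1.0
    return abs(computed - h) / abs(h)


print(absorbed(0.1234, 0.6789e-20))
for h in (1e-3, 1e-8, 1e-15):
    print(f"h = {h:.0e}: relative error {cancellation_rel_error(h):.1e}")
```

The relative error grows roughly like $10^{-16}/h$: the smaller $h$ is, the more leading digits of $1 + h$ and $1$ coincide and cancel, until for $h = 10^{-15}$ only about one correct digit is left.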

The loss of accuracy due to round-off errors can often be avoided by a careful sequencing of operations or a reformulation of the problem. The following examples illustrate this.

Example 1.6. Consider two functions $f(x) = x\left(\sqrt{x+1} - \sqrt{x}\right)$ and $g(x) = \dfrac{x}{\sqrt{x+1} + \sqrt{x}}$. Evaluate $f(500)$ and $g(500)$ using six-digit arithmetic with the rounding method and compare the results in terms of errors.

Solution: Using six-digit arithmetic with the rounding method, we have

$$\hat{f}(500) = 500 \cdot \left(\sqrt{501} - \sqrt{500}\right) = 500 \cdot (22.3830 - 22.3607) = 500 \cdot 0.0223 = 11.1500$$

and

$$\hat{g}(500) = \frac{500}{\sqrt{501} + \sqrt{500}} = \frac{500}{22.3830 + 22.3607} = \frac{500}{44.7437} = 11.1748.$$

Note that the exact values of these two functions at $x = 500$ are the same, namely $f(500) = g(500) = 11.1747553\dots$ (multiplying and dividing $f(x)$ by $\sqrt{x+1} + \sqrt{x}$ shows that $f(x) = g(x)$ for all $x \ge 0$). Then the absolute errors in the approximations of $f(500)$ and $g(500)$, when six-digit arithmetic with rounding is used, are

$$E_f = |f(500) - \hat{f}(500)| \approx 2.5 \times 10^{-2}, \qquad E_g = |g(500) - \hat{g}(500)| \approx 4.5 \times 10^{-5}.$$

Although $f(x) \equiv g(x)$, we see that using $g(x)$ results in a much smaller error than using $f(x)$. This is due entirely to the loss of significance that occurs in $f(x)$ when we subtract nearly equal numbers. By rewriting $f(x)$ in the form of $g(x)$ we eliminated that subtractive cancellation.
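The `decimal` module can emulate $k$-digit decimal arithmetic: setting the context precision to 6 and the rounding mode to `ROUND_HALF_UP` rounds the result of every operation to six significant digits. This is a minimal sketch, assuming that emulation is an adequate stand-in for the hand calculation; the helper names `f_hat` and `g_hat` are illustrative. The square roots involved have no ties at the seventh digit, so the tie-breaking rule does not matter for them.

```python
import math
from decimal import Decimal, localcontext, ROUND_HALF_UP


def f_hat(x: int, digits: int = 6) -> Decimal:
    """f(x) = x (sqrt(x+1) - sqrt(x)), rounding every operation to `digits` significant digits."""
    with localcontext() as ctx:
        ctx.prec, ctx.rounding = digits, ROUND_HALF_UP
        d = Decimal(x)
        return d * ((d + 1).sqrt() - d.sqrt())


def g_hat(x: int, digits: int = 6) -> Decimal:
    """g(x) = x / (sqrt(x+1) + sqrt(x)), with the same finite-precision arithmetic."""
    with localcontext() as ctx:
        ctx.prec, ctx.rounding = digits, ROUND_HALF_UP
        d = Decimal(x)
        return d / ((d + 1).sqrt() + d.sqrt())


exact = 500 / (math.sqrt(501) + math.sqrt(500))  # double-precision reference value
print(f_hat(500), abs(float(f_hat(500)) - exact))
print(g_hat(500), abs(float(g_hat(500)) - exact))
```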

Example 1.7. Consider two polynomials $P(x) = x^3 - 3x^2 + 3x - 1$ and $Q(x) = \big((x - 3)x + 3\big)x - 1$. Evaluate $P(2.19)$ and $Q(2.19)$ using three-digit arithmetic with the rounding method and compare the results in terms of errors.

Solution: Using three-digit arithmetic with the rounding method, we have

$$\begin{aligned}
\hat{P}(2.19) &= 2.19^3 - 3 \cdot 2.19^2 + 3 \cdot 2.19 - 1\\
&= 2.19 \cdot 4.80 - 3 \cdot 4.80 + 6.57 - 1 = 10.5 - 14.4 + 5.57\\
&= -3.9 + 5.57 = 1.67
\end{aligned}$$

and

$$\begin{aligned}
\hat{Q}(2.19) &= \big((2.19 - 3) \cdot 2.19 + 3\big) \cdot 2.19 - 1\\
&= (-0.81 \cdot 2.19 + 3) \cdot 2.19 - 1 = (-1.77 + 3) \cdot 2.19 - 1\\
&= 1.23 \cdot 2.19 - 1 = 2.69 - 1 = 1.69.
\end{aligned}$$

Note that the polynomials $P(x)$ and $Q(x)$ are the same (both equal $(x-1)^3$), so $P(2.19) = Q(2.19) = 1.19^3 = 1.685159$. Then the absolute errors in the approximations of $P(2.19)$ and $Q(2.19)$, when three-digit arithmetic with rounding is used, are

$$E_P = |P(2.19) - \hat{P}(2.19)| = 0.015159, \qquad E_Q = |Q(2.19) - \hat{Q}(2.19)| = 0.004841.$$

Although $P(x) \equiv Q(x)$, we see that using $Q(x)$ gives an error about three times smaller than using $P(x)$. Moreover, if we count the arithmetic operations, we observe that evaluating $Q(x)$ requires fewer operations than evaluating $P(x)$. Indeed,

• it requires 4 multiplications and 3 additions/subtractions to evaluate the polynomial $P(x)$;

• it requires 2 multiplications and 3 additions/subtractions to evaluate the polynomial $Q(x)$.

Remark 1.5. The polynomial $Q(x)$ in Example 1.7 is called the nested structure of the polynomial $P(x)$ (evaluating a polynomial this way is also known as Horner's method). Evaluating the nested structure requires fewer arithmetic operations and often produces a smaller error.
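Example 1.7 can be replayed with the `decimal` module set to three significant digits and `ROUND_HALF_UP`, a sketch that assumes this emulates the hand calculation. The helper names `power_form`, `horner` and `with_digits` are illustrative; `horner` is a generic nested evaluation, so for a leading coefficient of 1 it performs one extra (exact) multiplication compared with the count in the text.

```python
from decimal import Decimal, localcontext, ROUND_HALF_UP


def power_form(x: Decimal) -> Decimal:
    """P(x) = x^3 - 3x^2 + 3x - 1, evaluated term by term as in Example 1.7."""
    x2 = x * x
    x3 = x * x2
    return x3 - 3 * x2 + 3 * x - 1


def horner(coeffs: list[int], x: Decimal) -> Decimal:
    """Nested (Horner) evaluation; coeffs = [a_n, ..., a_1, a_0], highest degree first."""
    result = Decimal(coeffs[0])
    for a in coeffs[1:]:
        result = result * x + a
    return result


def with_digits(digits: int, fn, *args) -> Decimal:
    """Run fn(*args) with every Decimal operation rounded to `digits` significant digits."""
    with localcontext() as ctx:
        ctx.prec, ctx.rounding = digits, ROUND_HALF_UP
        return fn(*args)


x = Decimal("2.19")
print(with_digits(3, power_form, x), with_digits(3, horner, [1, -3, 3, -1], x))
print(horner([1, -3, 3, -1], x))  # default 28-digit context: exact for this input
```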

1.2.4 Propagation of Error

When arithmetic operations are repeated on a computer, round-off errors sometimes tend to accumulate and therefore grow. Let us illustrate this for two arithmetic operations: addition and multiplication of two numbers.

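Let $\hat{p}$ be an approximation of a number $p$ and $\hat{q}$ be an approximation of a number $q$. Then

$$p = \hat{p} + \epsilon_p \quad\text{and}\quad q = \hat{q} + \epsilon_q,$$

where $\epsilon_p$ and $\epsilon_q$ are the errors in the approximations $\hat{p}$ and $\hat{q}$, respectively. Assume that both $\epsilon_p$ and $\epsilon_q$ are positive. Then

$$p + q = \hat{p} + \hat{q} + (\epsilon_p + \epsilon_q).$$

Therefore, the approximation of $p + q$ by $\hat{p} + \hat{q}$ has the error $\epsilon_p + \epsilon_q$. So, the absolute error in the sum $p + q$ can be as large as the sum of the absolute errors in $p$ and $q$.

For the product, $pq = \hat{p}\hat{q} + \hat{p}\epsilon_q + \hat{q}\epsilon_p + \epsilon_p\epsilon_q$. Assuming that $p \approx \hat{p}$, $q \approx \hat{q}$, and that the product $\epsilon_p\epsilon_q$ is negligible, we have

$$R_{pq} = \frac{pq - \hat{p}\hat{q}}{pq} = \frac{\hat{p}\epsilon_q + \hat{q}\epsilon_p + \epsilon_p\epsilon_q}{pq} \approx \frac{\epsilon_q}{q} + \frac{\epsilon_p}{p} = R_q + R_p.$$

So, the relative error in the product $pq$ can be approximately as large as the sum of the relative errors in $p$ and $q$.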
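A small numerical check of both statements, with hypothetical values $p = 2$, $q = 5$, $\epsilon_p = 10^{-3}$, $\epsilon_q = 2 \cdot 10^{-3}$ chosen only for illustration. Since $\hat{p}/p = 1 - R_p$ and $\hat{q}/q = 1 - R_q$, the exact relation is $R_{pq} = R_p + R_q - R_p R_q$, so the approximation above drops only the small term $R_p R_q$.

```python
def rel(exact: float, approx: float) -> float:
    """Signed relative error (exact - approx) / exact, matching R_p = eps_p / p."""
    return (exact - approx) / exact


# Hypothetical values for illustration: exact p, q and positive errors eps_p, eps_q
p, q = 2.0, 5.0
eps_p, eps_q = 1e-3, 2e-3
p_hat, q_hat = p - eps_p, q - eps_q

sum_error = (p + q) - (p_hat + q_hat)  # should equal eps_p + eps_q up to round-off
R_p, R_q = rel(p, p_hat), rel(q, q_hat)
R_pq = rel(p * q, p_hat * q_hat)
print(sum_error, R_pq, R_p + R_q, R_p * R_q)
```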

1.3 O notation

Definition 1.2. Suppose $\{p_n\}_{n=1}^{\infty}$ and $\{q_n\}_{n=1}^{\infty}$ are sequences of real numbers. If there exist a positive constant $C$ and a natural number $N$ such that

$$|p_n| \le C|q_n| \quad \text{for all } n \ge N,$$

then we say that $\{p_n\}$ is of order $\{q_n\}$ as $n \to \infty$, and we write $p_n = O(q_n)$.

Example 1.8. Let $\{p_n\}_{n=1}^{\infty}$ be the sequence of numbers defined by $p_n = n^2 + n$ for all $n \in \mathbb{N}$. Since for all $n \ge 1$ we have $p_n = n^2 + n \le n^2 + n^2 = 2n^2$, it follows that $p_n = O(n^2)$.


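Example 1.9. Let $\{p_n\}_{n=1}^{\infty}$ be the sequence of numbers defined by $p_n = \dfrac{n+1}{n^2}$ for all $n \in \mathbb{N}$. Since for all $n \ge 1$ we have $p_n = \dfrac{n+1}{n^2} \le \dfrac{n+n}{n^2} = \dfrac{2}{n}$, it follows that $p_n = O\!\left(\dfrac{1}{n}\right)$.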

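Example 1.10. Let $\{p_n\}_{n=1}^{\infty}$ be the sequence of numbers defined by $p_n = \dfrac{n+3}{2n^3}$ for all $n \in \mathbb{N}$. Since for all $n \ge 3$ we have $p_n = \dfrac{n+3}{2n^3} \le \dfrac{n+n}{2n^3} = \dfrac{1}{n^2}$, it follows that $p_n = O\!\left(\dfrac{1}{n^2}\right)$.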
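The constants $C$ in Examples 1.8 to 1.10 can be checked on a finite range of $n$. This sketch (the helper `max_ratio` is illustrative) computes the largest ratio $|p_n|/|q_n|$ over $N \le n < 10000$; a finite check like this can support, but never replace, the inequalities proved above.

```python
def max_ratio(p, q, n_start: int, n_end: int) -> float:
    """Largest |p(n)| / |q(n)| over n_start <= n < n_end: a finite check, not a proof."""
    return max(abs(p(n)) / abs(q(n)) for n in range(n_start, n_end))


# Example 1.8: p_n = n^2 + n, q_n = n^2, constant C = 2 for n >= 1
print(max_ratio(lambda n: n**2 + n, lambda n: n**2, 1, 10_000))
# Example 1.9: p_n = (n+1)/n^2, q_n = 1/n, constant C = 2 for n >= 1
print(max_ratio(lambda n: (n + 1) / n**2, lambda n: 1 / n, 1, 10_000))
# Example 1.10: p_n = (n+3)/(2n^3), q_n = 1/n^2, constant C = 1 for n >= 3
print(max_ratio(lambda n: (n + 3) / (2 * n**3), lambda n: 1 / n**2, 3, 10_000))
```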

Definition 1.3. Let $f(x)$ and $g(x)$ be two functions defined in some open interval containing the point $a$. If there exist positive constants $C$ and $\delta$ such that

$$|f(x)| \le C|g(x)| \quad \text{for all } x \text{ with } |x - a| < \delta,$$

then we say that $f(x)$ is of order $g(x)$ as $x \to a$, and we write $f(x) = O(g(x))$.

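Example 1.11. Using Taylor's theorem for the function $f(x) = \ln(1 + x)$ with $x_0 = 0$, we have

$$\ln(1 + x) = x - \frac{x^2}{2} + \frac{x^3}{3(1 + \xi)^3},$$

where $\xi$ is some point between 0 and $x$. For $|x| \le \frac{1}{2}$ we have $1 + \xi \ge \frac{1}{2}$, so $\dfrac{1}{3(1 + \xi)^3} \le \dfrac{8}{3}$ is bounded by a constant. Therefore

$$\ln(1 + x) = x - \frac{x^2}{2} + O(x^3) \quad \text{for } x \text{ close to } 0.$$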
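A numerical look at the bounded factor: by Example 1.11 the ratio $\big(\ln(1+x) - (x - x^2/2)\big)/x^3$ equals $1/\big(3(1+\xi)^3\big)$, so it should stay between 0 and $8/3$ for $|x| \le 1/2$ and approach $1/3$ as $x \to 0$. This sketch uses `math.log1p`, since computing `math.log(1 + x)` directly would lose digits for small $x$ (the loss of significance of Section 1.2.3); $|x|$ is kept at least $10^{-3}$ so that the subtraction in the numerator does not itself wipe out the digits.

```python
import math


def remainder_ratio(x: float) -> float:
    """(ln(1+x) - (x - x^2/2)) / x^3, which equals 1 / (3 (1 + xi)^3) by Example 1.11."""
    return (math.log1p(x) - (x - x * x / 2)) / x**3


for x in [0.5, 0.1, 0.01, 0.001, -0.001, -0.1, -0.5]:
    print(f"x = {x:+.3f}   ratio = {remainder_ratio(x):.6f}")
```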

1.4 Self-study Problems

Problem 1.1. Compute the absolute error and the relative error in the approximations of $p$ by $\hat{p}$:

a) $p = 3.1415927$, $\hat{p} = 3.1416$

b) $p = \sqrt{2}$, $\hat{p} = 1.414$

c) $p = 8!$, $\hat{p} = 39900$

Problem 1.2. Find the fourth-degree Taylor polynomial for the function $f(x) = \cos x$ about $x_0 = 0$.

Problem 1.3. Find the third-degree Taylor polynomial for the function $f(x) = x^3 - 21x^2 + 17$ about $x_0 = 1$.

Problem 1.4. Let $f(x) = \ln(1 + 2x)$.

a) Find the fifth-degree Taylor polynomial $P_5(x)$ for the given function $f(x)$ about $x_0 = 0$

b) Use $P_5(x)$ to approximate $\ln(1.2)$

c) Determine the actual error of the approximation in (b)

Problem 1.5. Let $f(x) = \sqrt{x + 4}$.

a) Find the third-degree Taylor polynomial $P_3(x)$ for the given function $f(x)$ about $x_0 = 0$

b) Use $P_3(x)$ to approximate $\sqrt{3.9}$ and $\sqrt{4.2}$

c) Determine the actual error of the approximations in (b)


Problem 1.6. Determine the degree of the Taylor polynomial $P_n(x)$ for the function $f(x) = e^x$ about $x_0 = 0$ that should be used to approximate $e^{0.1}$ so that the error is less than $10^{-6}$.

Problem 1.7. Determine the degree of the Taylor polynomial $P_n(x)$ for the function $f(x) = \cos x$ about $x_0 = 0$ that should be used to approximate $\cos(0.2)$ so that the error is less than $10^{-5}$.

Problem 1.8. Let $f(x) = e^x$.

a) Find the Taylor polynomial $P_8(x)$ of degree 8 for the given function $f(x)$ about $x_0 = 0$

b) Find an upper bound for the error $|f(x) - P_8(x)|$ when $-1 \le x \le 1$

c) Find an upper bound for the error $|f(x) - P_8(x)|$ when $-0.5 \le x \le 0.5$

Problem 1.9. Let $f(x) = e^x \cos x$.

a) Find the second-degree Taylor polynomial $P_2(x)$ for the given function $f(x)$ about $x_0 = 0$

b) Use $P_2(0.5)$ to approximate $f(0.5)$

c) Find an upper bound for the error $|f(0.5) - P_2(0.5)|$ and compare it to the actual error

d) Use $\int_0^1 P_2(x)\,dx$ to approximate $\int_0^1 f(x)\,dx$.

e) Determine the actual error of the approximation in (d)

Problem 1.10. Use three-digit rounding arithmetic to perform the following calculations. Find the absolute error and the relative error in the obtained results.

a) $(121 - 0.327) - 119$

b) $(121 - 119) - 0.327$

c) $-10\pi + 6e - \dfrac{3}{62}$

Problem 1.11. Let $P(x) = x^3 - 6.1x^2 + 3.2x + 1.5$.

a) Use three-digit arithmetic with the chopping method to evaluate $P(x)$ at $x = 4.17$ and find the relative error in the obtained result

b) Rewrite $P(x)$ in a nested structure. Use three-digit arithmetic with the chopping method to evaluate the nested structure at $x = 4.17$ and find the relative error in the obtained result

Problem 1.12. Let $P(x) = x^3 - 6.1x^2 + 3.2x + 1.5$.

a) Use three-digit arithmetic with the rounding method to evaluate $P(x)$ at $x = 4.17$ and find the relative error in the obtained result

b) Rewrite $P(x)$ in a nested structure. Use three-digit arithmetic with the rounding method to evaluate the nested structure at $x = 4.17$ and find the relative error in the obtained result

Problem 1.13. Consider two functions $f(x) = x + 1 - \sqrt{x^2 + x + 1}$ and $g(x) = \dfrac{x}{x + 1 + \sqrt{x^2 + x + 1}}$.

a) Use four-digit arithmetic with the rounding method to approximate $f(0.1)$ and find the relative error in the obtained result. (Note that the true value is $f(0.1) = 0.0464346247\dots$)

b) Use four-digit arithmetic with the rounding method to approximate $g(0.1)$ and find the relative error in the obtained result. (Note that the true value is $g(0.1) = 0.0464346247\dots$)


Problem 1.14. Let $f(x) = \dfrac{e^x - e^{-x}}{x}$.

a) Evaluate $f(0.1)$ using four-digit arithmetic with the rounding method and find the relative error of the obtained value. (Note that the true value is $f(0.1) = 2.0033350004\dots$)

b) Find the third-degree Taylor polynomial $P_3(x)$ for the given function $f(x)$ about $x_0 = 0$. (You can use the formula $e^x = 1 + x + \frac{x^2}{2!} + \frac{x^3}{3!} + \frac{x^4}{4!} + \dots$)

c) For the approximation of $f(0.1)$, evaluate $P_3(0.1)$ using four-digit arithmetic with the rounding method and find the relative error of the obtained value.

Problem 1.15. For which values of $x$ would the expression $\sqrt{2x^2 + 1} - 1$ cause a loss of accuracy, and how would you avoid the loss of accuracy?

Problem 1.16. For which values of $x$ and $y$ would the expression $\ln(x) - \ln(y)$ cause a loss of accuracy, and how would you avoid the loss of accuracy?

Problem 1.17. For each of the following sequences, find the largest possible value of $\alpha$ such that $p_n = O\!\left(\dfrac{1}{n^{\alpha}}\right)$.

a) $p_n = \dfrac{1}{\sqrt{n^2 + n + 1}}$

b) $p_n = \sin\dfrac{1}{n}$

c) $p_n = \ln\left(1 + \dfrac{1}{n}\right)$

Problem 1.18. For each of the following functions, find the largest possible value of $\alpha$ such that $f(x) = O(x^{\alpha})$ as $x \to 0$.

a) $f(x) = \sin x - x$

b) $f(x) = \dfrac{1 - \cos x}{x}$

c) $f(x) = \dfrac{e^x - e^{-x}}{x}$
