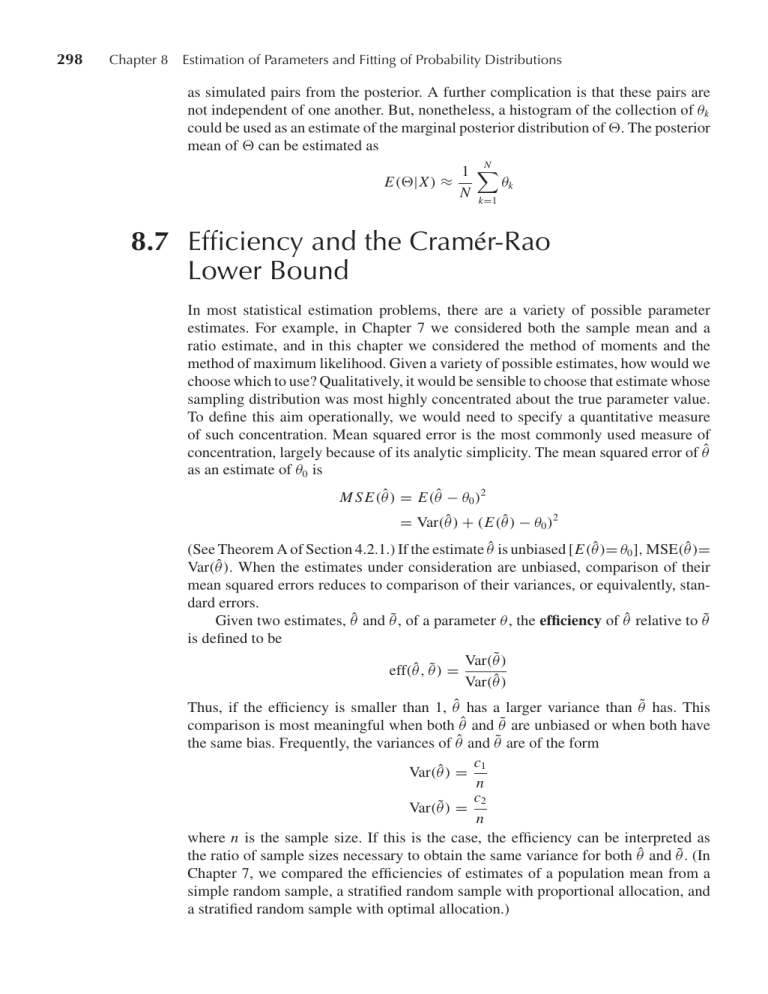

298 Chapter 8 Estimation of Parameters and Fitting of Probability Distributions as simulated pairs from the posterior. A further complication is that these pairs are not independent of one another. But, nonetheless, a histogram of the collection of θk could be used as an estimate of the marginal posterior distribution of ". The posterior mean of " can be estimated as E("|X ) ≈ N 1 ! θk N k=1 8.7 Efficiency and the Cramér-Rao Lower Bound In most statistical estimation problems, there are a variety of possible parameter estimates. For example, in Chapter 7 we considered both the sample mean and a ratio estimate, and in this chapter we considered the method of moments and the method of maximum likelihood. Given a variety of possible estimates, how would we choose which to use? Qualitatively, it would be sensible to choose that estimate whose sampling distribution was most highly concentrated about the true parameter value. To define this aim operationally, we would need to specify a quantitative measure of such concentration. Mean squared error is the most commonly used measure of concentration, largely because of its analytic simplicity. The mean squared error of θ̂ as an estimate of θ0 is M S E(θ̂) = E(θ̂ − θ0 )2 = Var(θ̂ ) + (E(θ̂ ) − θ0 )2 (See Theorem A of Section 4.2.1.) If the estimate θ̂ is unbiased [E(θ̂ )= θ0 ], MSE(θ̂ )= Var(θ̂). When the estimates under consideration are unbiased, comparison of their mean squared errors reduces to comparison of their variances, or equivalently, standard errors. Given two estimates, θ̂ and θ̃ , of a parameter θ, the efficiency of θ̂ relative to θ̃ is defined to be Var(θ̃ ) eff(θ̂ , θ̃ ) = Var(θ̂ ) Thus, if the efficiency is smaller than 1, θ̂ has a larger variance than θ̃ has. This comparison is most meaningful when both θ̂ and θ̃ are unbiased or when both have the same bias. Frequently, the variances of θ̂ and θ̃ are of the form c1 Var(θ̂ ) = n c2 Var(θ̃ ) = n where n is the sample size. If this is the case, the efficiency can be interpreted as the ratio of sample sizes necessary to obtain the same variance for both θ̂ and θ̃ . (In Chapter 7, we compared the efficiencies of estimates of a population mean from a simple random sample, a stratified random sample with proportional allocation, and a stratified random sample with optimal allocation.) 8.7 Efficiency and the Cramér-Rao Lower Bound EXAMPLE A 299 Muon Decay Two estimates have been derived for α in the problem of muon decay. The method of moments estimate is α̃ = 3X The maximum likelihood estimate is the solution of the nonlinear equation n ! i=1 Xi =0 1 + α̂ X i We need to find the variances of these two estimates. Since the variance of a sample mean is σ 2 /n, we compute σ 2 : σ 2 = E(X 2 ) − [E(X )]2 " 1 α2 1 + αx dx − = x2 2 9 −1 1 α2 − 3 9 Thus, the variance of the method of moments estimate is = Var(α̃) = 9 Var(X ) = 3 − α2 n The exact variance of the mle, θ̂ , cannot be computed in closed form, so we approximate it by the asymptotic variance, Var(α̂) ≈ 1 n I (α) and then compare this asymptotic variance to the variance of α̃. The ratio of the former to the latter is called the asymptotic relative efficiency. By definition, $2 # ∂ log f (x|α) I (α) = E ∂α % & " 1 x2 1 + αx = dx 2 2 −1 (1 + αx) % & 1+α log − 2α 1−α , −1 < α < 1, α = # 0 = 2α 3 1 α=0 = , 3 The asymptotic relative efficiency is thus (for α = # 0) Var(α̂) 2α 3 = Var(α̃) 3 − α2 log % 1 & 1+α − 2α 1−α 300 Chapter 8 Estimation of Parameters and Fitting of Probability Distributions The following table gives this efficiency for various values of α between 0 and 1; symmetry would yield the values between −1 and 0. α Efficiency 0.0 .1 .2 .3 .4 .5 .6 .7 .8 .9 .95 1.0 .997 .989 .975 .953 .931 .878 .817 .727 .582 .464 As α tends to 1, the efficiency tends to 0. Thus, the mle is not much better than the method of moments estimate for α close to 0 but does increasingly better as α tends to 1. It must be kept in mind that we used the asymptotic variance of the mle, so we calculated an asymptotic relative efficiency, viewing this as an approximation to the actual relative efficiency. To gain more precise information for a given sample size, a simulation of the sampling distribution of the mle could be conducted. This might be especially interesting for α = 1, a case for which the formula for the asymptotic variance given above does not appear to make much sense. With a simulation study, the behavior of the bias as n and α vary could be analyzed (we showed that the mle is asymptotically unbiased, but there may be bias for a finite sample size), and the ■ actual distribution could be compared to the approximating normal. In searching for an optimal estimate, we might ask whether there is a lower bound for the MSE of any estimate. If such a lower bound existed, it would function as a benchmark against which estimates could be compared. If an estimate achieved this lower bound, we would know that it could not be improved upon. In the case in which the estimate is unbiased, the Cramér-Rao inequality provides such a lower bound. We now state and prove the Cramér-Rao inequality. THEOREM A Cramér-Rao Inequality Let X 1 , . . . , X n be i.i.d. with density function f (x|θ ). Let T = t (X 1 , . . . , X n ) be an unbiased estimate of θ. Then, under smoothness assumptions on f (x|θ ), 1 Var(T ) ≥ n I (θ ) 8.7 Efficiency and the Cramér-Rao Lower Bound 301 Proof Let Z = = n ! ∂ log f (X i |θ ) ∂θ i=1 n ! i=1 ∂ f (X i |θ ) ∂θ f (X i |θ ) In Section 8.5.2, we showed that E(Z ) = 0. Because the correlation coefficient of Z and T is less than or equal to 1 in absolute value Cov2 (Z , T ) ≤ Var(Z )Var(T ) It was also shown in Section 8.5.2 that $ # ∂ log f (X |θ ) = I (θ ) Var ∂θ Therefore, Var(Z ) = n I (θ ) The proof will be completed by showing that Cov(Z , T ) = 1. Since Z has mean 0, Cov(Z , T ) = E(Z T ) = " ··· " Noting that n ! i=1 ∂ n n f (x |θ ) i ! ∂θ t (x1 , . . . , xn ) f (x j |θ ) d x j f (xi |θ ) i=1 j=1 ∂ n n f (xi |θ) ∂ ∂θ f (x j |θ ) = f (xi |θ ) f (xi |θ) j=1 ∂θ i=1 we rewrite the expression for the covariance of Z and T as " " n ∂ Cov(Z , T ) = · · · t (x1 , . . . , xn ) f (xi |θ ) d xi ∂θ i=1 " " n ∂ = f (xi |θ ) d xi · · · t (x1 , . . . , xn ) ∂θ i=1 ∂ ∂ E(T ) = (θ ) = 1 ∂θ ∂θ which proves the inequality. [Note the interchange of differentiation and integration that must be justified by the smoothness assumptions on f (x|θ ).] ■ = 302 Chapter 8 Estimation of Parameters and Fitting of Probability Distributions Theorem A gives a lower bound on the variance of any unbiased estimate. An unbiased estimate whose variance achieves this lower bound is said to be efficient. Since the asymptotic variance of a maximum likelihood estimate is equal to the lower bound, maximum likelihood estimates are said to be asymptotically efficient. For a finite sample size, however, a maximum likelihood estimate may not be efficient, and maximum likelihood estimates are not the only asymptotically efficient estimates. EXAMPLE B Poisson Distribution In Example B in Section 8.5.3, we found that for the Poisson distribution 1 λ Therefore, by Theorem A, for any unbiased estimate T of λ, based on a sample of independent Poisson random variables, X 1 , . . . , X n , λ Var(T ) ≥ n I (λ) = The mle of λ was found to be X = S/n, where S = X 1 + · · · + X n . Since S follows a Poisson distribution with parameter nλ, Var(S) = nλ and Var(X ) = λ/n. Therefore, X attains the Cramér-Rao lower bound, and we know that no unbiased estimator of λ can have a smaller variance. In this sense, X is optimal for the Poisson distribution. But note that the theorem does not preclude the possibility that there is a biased ■ estimator of λ that has a smaller mean squared error than X does. 8.7.1 An Example: The Negative Binomial Distribution The Poisson distribution is often the first model considered for random counts; it has the property that the mean of the distribution is equal to the variance. When it is found that the variance of the counts is substantially larger than the mean, the negative binomial distribution is sometimes instead considered as a model. We consider a reparametrization and generalization of the negative binomial distribution introduced in Section 2.1.3, which is a discrete distribution on the nonnegative integers with a frequency function depending on the parameters m and k: % &x . m /−k '(k + x) m f (x|m, k) = 1 + k x!'(k) m+k The mean and variance of the negative binomial distribution can be shown to be µ=m m2 k It is apparent that this distribution is overdispersed (σ 2 > µ) relative to the Poisson. We will not derive the mean and variance. (They are most easily obtained by using moment-generating functions.) σ2 = m + 8.7 Efficiency and the Cramér-Rao Lower Bound 303 The negative binomial distribution can be used as a model in several cases: • • • • If k is an integer, the distribution of the number of successes up to the kth failure in a sequence of independent Bernoulli trials with probability of success p = m/(m + k) is negative binomial. Suppose that ( is a random variable following a gamma distribution and that for λ, a given value of (, X follows a Poisson distribution with mean λ. It can be shown that the unconditional distribution of X is negative binomial. Thus, for situations in which the rate varies randomly over time or space, the negative binomial distribution might tentatively be considered as a model. The negative binomial distribution also arises with a particular type of clustering. Suppose that counts of colonies, or clusters, follow a Poisson distribution and that each colony has a random number of individuals. If the probability distribution of the number of individuals per colony is of a particular form (the logarithmic series distribution), it can be shown that the distribution of counts of individuals is negative binomial. The negative binomial distribution might be a plausible model for the distribution of insect counts if the insects hatch from depositions, or clumps, of larvae. The negative binomial distribution can be applied to model population size in a certain birth/death process, the assumption being that the birth rate and death rate per individual are constant and that there is a constant rate of immigration. Anscombe (1950) discusses estimation of the parameters m and k and compares the efficiencies of several methods of estimation. The simplest method is the method of moments; from the relations of m and k to µ and σ 2 given previously, the method of moments estimates of m and k are m̂ = X X2 σ̂ 2 − X Another relatively simple method of estimation of m and k is based on the number of zeros. The probability of the count being zero is . m /−k p0 = 1 + k If m is estimated by the sample mean and there are n 0 zeros out of a sample size of n, then k is estimated by k̂, where k̂ satisfies % &−k̂ X n0 = 1+ n k̂ k̂ = Although the solution cannot be obtained in closed form, it is not difficult to find by iteration. Figure 8.11, from Anscombe (1950), shows the asymptotic efficiencies of the two methods of estimation of the negative binomial parameters relative to the maximum likelihood estimate. In the figure, the method of moments is method 1 and the method based on the number of zeros is method 2. Method 2 is quite efficient when the mean is small—that is, when there are a large number of zeros. Method 1 becomes more efficient as k increases. 304 Chapter 8 Estimation of Parameters and Fitting of Probability Distributions 100 40 98% 20 Exponent k 10 90% 4 75% 2 50% 1 75% 90% 0-4 0-2 0-1 0-04 0-1 0-2 0-4 50% 98% 1 2 4 10 20 40 Mean m 100 200 400 Method 1 Method 2 F I G U R E 8.11 Asymptotic efficiencies of estimates of negative binomial parameters. The maximum likelihood estimate is asymptotically efficient but is somewhat more difficult to compute. The equations will not be written out here. Bliss and Fisher (1953) discuss computational methods and give several examples. The maximum likelihood estimate of m is the sample mean, but that of k is the solution of a nonlinear equation. EXAMPLE A Insect Counts Let us consider an example from Bliss and Fisher (1953). From each of 6 apple trees in an orchard that was sprayed, 25 leaves were selected. On each of the leaves, the number of adult female red mites was counted. Intuitively, we might conclude that this situation was too heterogeneous for a Poisson model to fit; the rates of infestation might be different on different trees and at different locations on the same tree. The following table shows the observed counts and the expected counts from fitting Poisson and negative binomial distributions. The mle’s for k and m were k̂ = 1.025 and m̂ = 1.146. Number per Leaf Observed Count Poisson Distribution Negative Binomial Distribution 0 1 2 3 4 5 6 7 8+ 70 38 17 10 9 3 2 1 0 47.7 54.6 31.3 12.0 3.4 .75 .15 .03 .00 69.5 37.6 20.1 10.7 5.7 3.0 1.6 .85 .95 8.8 Sufficiency 305 Casual inspection of this table makes it clear that the Poisson does not fit; there are many more small and large counts observed than are expected for a Poisson ■ distribution. A recursive relation is useful in fitting the negative binomial distribution: . m /−k p0 = 1 + k % & m k+n−1 pn = pn−1 n k+m 8.8 Sufficiency This section introduces the concept of sufficiency and some of its theoretical implications. Suppose that X 1 , . . . , X n is a sample from a probability distribution with the density or frequency function f (x|θ ). The concept of sufficiency arises as an attempt to answer the following question: Is there a statistic, a function T (X 1 , . . . , X n ), that contains all the information in the sample about θ ? If so, a reduction of the original data to this statistic without loss of information is possible. For example, consider a sequence of independent Bernoulli trials with unknown probability of success, θ . We may have the intuitive feeling that the total number of successes contains all the information about θ that there is in the sample, that the order in which the successes occurred, for example, does not give any additional information. The following definition formalizes this idea. DEFINITION A statistic T (X 1 , . . . , X n ) is said to be sufficient for θ if the conditional distribution of X 1 , . . . , X n , given T = t, does not depend on θ for any value ■ of t. In other words, given the value of T , which is called a sufficient statistic, we can gain no more knowledge about θ from knowing more about the probability distribution of X 1 , . . . , X n . (Formally, we could envision keeping only T and throwing away all the X i without any loss of information. Informally, and more realistically, this would make no sense at all. The values of the X i might indicate that the model did not fit or that something was fishy about the data. What would you think, for example, if you saw 50 ones followed by 50 zeros in a sequence of supposedly independent Bernoulli trials?)