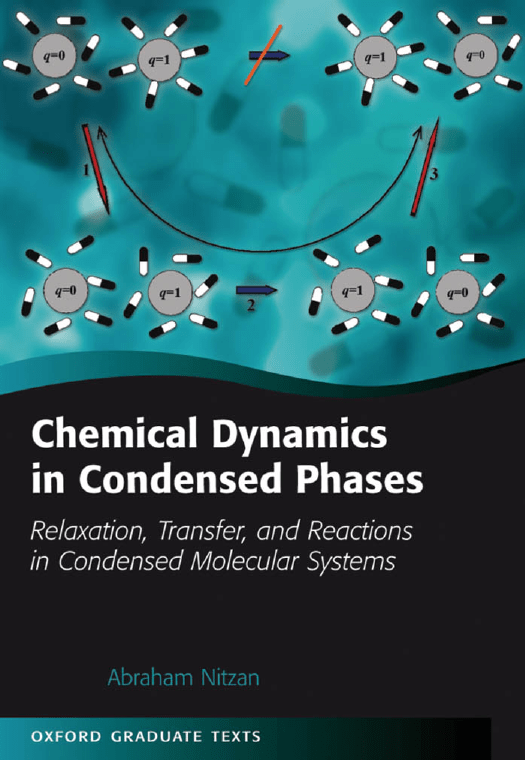


Chemical Dynamics in Condensed Phases


Chemical Dynamics in

Condensed Phases

Relaxation, Transfer and Reactions in

Condensed Molecular Systems

Abraham Nitzan

Tel Aviv University


Great Clarendon Street, Oxford OX2 6DP

Oxford University Press is a department of the University of Oxford.

It furthers the University’s objective of excellence in research, scholarship,

and education by publishing worldwide in

Oxford New York

Auckland Cape Town Dar es Salaam Hong Kong Karachi

Kuala Lumpur Madrid Melbourne Mexico City Nairobi

New Delhi Shanghai Taipei Toronto

With offices in

Argentina Austria Brazil Chile Czech Republic France Greece

Guatemala Hungary Italy Japan Poland Portugal Singapore

South Korea Switzerland Thailand Turkey Ukraine Vietnam

Oxford is a registered trade mark of Oxford University Press

in the UK and in certain other countries

Published in the United States

by Oxford University Press Inc., New York

© Oxford University Press 2006

The moral rights of the author have been asserted

Database right Oxford University Press (maker)

First published 2006

All rights reserved. No part of this publication may be reproduced,

stored in a retrieval system, or transmitted, in any form or by any means,

without the prior permission in writing of Oxford University Press,

or as expressly permitted by law, or under terms agreed with the appropriate

reprographics rights organization. Enquiries concerning reproduction

outside the scope of the above should be sent to the Rights Department,

Oxford University Press, at the address above

You must not circulate this book in any other binding or cover

and you must impose the same condition on any acquirer

British Library Cataloguing in Publication Data

Data available

Library of Congress Cataloging in Publication Data

Nitzan, Abraham.

Chemical dynamics in condensed phases : relaxation, transfer, and

reactions in condensed molecular systems / Abraham Nitzan.

p. cm.

Includes bibliographical references.

ISBN-13: 978–0–19–852979–8 (alk. paper)

ISBN-10: 0–19–852979–1 (alk. paper)

1. Molecular dynamics. 2. Chemical reaction, Conditions and laws of. I. Title.

QD461.N58 2006

2005030160

541 .394—dc22

Typeset by Newgen Imaging Systems (P) Ltd., Chennai, India

Printed in Great Britain

on acid-free paper by

Biddles Ltd., King’s Lynn

ISBN 0–19–852979–1

978–0–19–852979–8

1 3 5 7 9 10 8 6 4 2

To Raya, for her love, patience and understanding

PREFACE

In the past half century we have seen an explosive growth in the study of chemical reaction dynamics, spurred by advances in both experimental and theoretical

techniques. Chemical processes are now measured on timescales as long as many

years and as short as several femtoseconds, and in environments ranging from high

vacuum isolated encounters to condensed phases at elevated pressures. This large

variety of conditions has led to the evolution of two branches of theoretical studies.

On one hand, “bare” chemical reactions involving isolated molecular species are

studied with regard to the effect of initial conditions and of molecular parameters

associated with the relevant potential surface(s). On the other, the study of chemical reactions in high-pressure gases and in condensed phases is strongly associated

with the issue of environmental effects. Here the bare chemical process is assumed

to be well understood, and the focus is on the way it is modified by the interaction

with the environment.

It is important to realize that not only does the solvent environment modify the

equilibrium properties and the dynamics of the chemical process, it often changes

the nature of the process and therefore the questions we ask about it. The principal

object in a bimolecular gas phase reaction is the collision process between the

molecules involved. In studying such processes we focus on the relation between

the final states of the products and the initial states of the reactants, averaging

over the latter when needed. Questions of interest include energy flow between

different degrees of freedom, mode selectivity, and yields of different channels.

Such questions could also be asked about condensed phase reactions; however, in

most circumstances the associated observables cannot be directly monitored. Instead,

questions concerning the effect of solvent dynamics on the reaction process and

the inter-relations between reaction dynamics and solvation, diffusion and heat

transport become central.

As a particular example, consider the photodissociation of iodine, I2 ↔ I + I, which has been

studied by many authors over the past 70 years (see footnote 1). In the gas phase, following optical

excitation at wavelength ∼500 nm the I2 molecule dissociates and this is the end of

the story as far as we are concerned. In solutions the process is much more complex.

The molecular absorption at ∼500 nm is first bleached (evidence of depletion of

ground state molecules) but recovers after 100–200 ps. Also some transient state

1 For a review see A. L. Harris, J. K. Brown, and C. B. Harris, Ann. Rev. Phys. Chem. 39, 341 (1988).

Fig. 0.1 A simplified energy level diagram for I2 (right), with the processes discussed in the text (left). (Based on Harris et al. (see footnote 1).) [Figure: potential energy curves, with energy in units of 1000 cm–1 plotted against internuclear distance in Å, showing the ground state X, the valence states A, B, and a(1g), and the ion pair states, with transitions marked at 350, 400, 500, 710, and 1000 nm; the accompanying scheme shows dissociation, recombination or escape, trapping and detrapping, and vibrational relaxation.]

which absorbs at ∼350 nm seems to be formed. Its lifetime strongly depends on

the solvent (60 ps in alkane solvents, 2700 ps (= 2.7 ns) in CCl4). Transient IR

absorption is also observed and can be assigned to two intermediate species. These

observations can be interpreted in terms of the schematic potential energy diagram

shown in Fig. 0.1 which depicts several electronic states: The ground state X,

bound excited states A and B and a repulsive state that correlates with the ground

state of the dissociated species. A highly excited state corresponding to the ionic

configuration I+ I− is also shown. Note that the energy of the latter will be very

sensitive to the solvent polarity. Also note that these are just a few representative

electronic states of the I2 molecule. The ground state absorption, which peaks at

500 nm, corresponds to the X→B transition, which in the low-pressure gas phase

leads to molecular dissociation after crossing to the repulsive state. In solution the

dissociated pair finds itself in a solvent cage, with a finite probability to recombine.

This recombination yields an iodine molecule in the excited A state or in the higher

vibrational levels of the ground X state. These are the intermediates that give rise

to the transient absorption signals.
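The wavelengths quoted in the text and the energy axis of Fig. 0.1 (in units of 1000 cm–1) are related by a simple unit conversion. The following minimal Python sketch illustrates it; the function name is hypothetical and chosen only for this illustration, and the wavelengths are the ones that appear in the figure labels.

```python
def wavelength_nm_to_wavenumber_cm1(wavelength_nm: float) -> float:
    """Convert a wavelength in nm to a wavenumber in cm^-1.

    Hypothetical helper for illustration only: since 1 cm = 1e7 nm,
    the wavenumber is 1e7 divided by the wavelength in nm.
    """
    return 1.0e7 / wavelength_nm


# Wavelengths (nm) that label transitions in Fig. 0.1.
for wavelength in (350.0, 400.0, 500.0, 710.0, 1000.0):
    wavenumber = wavelength_nm_to_wavenumber_cm1(wavelength)
    # Express the result in the units of the figure's energy axis.
    print(f"{wavelength:6.0f} nm -> {wavenumber / 1000.0:5.1f} x 1000 cm^-1")
```

With this conversion the X→B absorption near 500 nm corresponds to about 20 on the energy axis of the figure, and 1000 nm to about 10.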

Several solvent-induced relaxation processes are involved here:

diffusion, trapping, geminate recombination, and vibrational relaxation. In addition, the A→X transition represents the important class of nonadiabatic reactions,

here induced by the solute–solvent interaction. Furthermore, the interaction

between the molecular species and the radiation field, used to initiate and to

monitor the process, is modified by the solvent environment. Other important

solvent-induced processes, such as diffusion-controlled reactions, charge (electron, proton)

transfer, solvation dynamics, and barrier crossing, play important roles in other

condensed phase chemical dynamics phenomena.

In modeling such processes our general strategy is to include, to the largest

extent possible, the influence of the environment in the dynamical description of

the system, while avoiding, as much as possible, a specific description of the environment itself. On the most elementary level this strategy results in the appearance of

phenomenological coefficients, for example dielectric constants, in the forces that

enter the equations of motion. In other cases the equations of motion are modified

more drastically, for example, replacing the fundamental Newton equations by the

phenomenological diffusion law. On more microscopic levels we use tools such as

coarse graining, projections, and stochastic equations of motion.

How much about the environment do we need to know? The answer to this

question depends on the process under study and on the nature of the knowledge

required about this process. A student can go through a full course of chemical

kinetics without ever bringing out the solvent as a participant in the game—all

that is needed is a set of rate coefficients (sometimes called “constants”). When

we start asking questions about the origin of these coefficients and investigate

their dependence on the nature of the solvent and on external parameters such

as temperature and pressure, then some knowledge of the environment becomes

essential.

Timescales are a principal issue in deciding this matter. In fact, the need for

more microscopic theories arises from our ability to follow processes on shorter

timescales. To see how time becomes of the essence, consider the example shown in

Fig. 0.2 that depicts a dog trying to engage a hamburger. In order to do so it has to

go across a barrier that is made of steps of the following property: When you stand

on a step for more than 1 s the following step drops to the level on which you stand.

The (hungry) dog moves at constant speed, but if it runs too fast it will spend less

than one second on each step and will have to work hard to climb the barrier. On the

other hand, moving slowly enough it will find itself walking effortlessly through

a plane.

In this example, the 1 second timescale represents the characteristic relaxation

time of the environment (here, the barrier). The dog experiences very different interactions with this environment depending on whether it moves slowly or quickly relative to this timescale. A major theme in the study of molecular processes in condensed phases is to gauge the characteristic molecular times against the characteristic times of the environment. Some important molecular processes and their characteristic times are shown in Fig. 0.3.

Fig. 0.2 The hamburger–dog dilemma as a lesson in the importance of timescales.

Fig. 0.3 Typical condensed phase molecular timescales in chemistry and biology. (Adapted from G. R. Fleming and P. G. Wolynes, Physics Today, p. 36, May 1990.) [Figure: processes placed along a time axis running from 10^-15 to 10^-8 seconds: electronic dephasing, collision time in liquids, vibrational motion, vibrational dephasing, vibrational relaxation (polyatomics), molecular rotation, solvent relaxation, electronic relaxation, energy transfer in photosynthesis, electron transfer in photosynthesis, photoionization, photodissociation, photochemical isomerization, proton transfer, protein internal motion, and torsional dynamics of DNA.]

The study of chemical dynamics in condensed phases therefore requires the

understanding of solids, liquids, high-pressure gases, and interfaces between them,

as well as of radiation–matter interaction, relaxation and transport processes in

these environments. Obviously such a broad range of subjects cannot be treated

comprehensively in any single text. Instead, I have undertaken to present several

selected prototype processes in depth, together with enough coverage of the necessary background to make this book self contained. The reader will be directed to

other available texts for more thorough coverage of background subjects.

The subjects covered by this text fall into three categories. The first five chapters

provide background material in quantum dynamics, radiation–matter interaction,

solids and liquids. Many readers will already have this background, but it is my

experience that many others will find at least part of it useful. Chapters 6–12 cover

mainly methodologies although some applications are brought in as examples. In

terms of methodologies this is an intermediate level text, covering needed subjects

from nonequilibrium statistical mechanics in the classical and quantum regime as

well as needed elements from the theory of stochastic processes, however, without

going into advanced subjects such as path integrals, Liouville-space Green functions

or Keldysh nonequilibrium Green functions.


The third part of this text focuses on several important dynamical processes in

condensed phase molecular systems. These are vibrational relaxation (Chapter 13),

chemical reactions in the barrier controlled and diffusion controlled regimes

(Chapter 14), solvation dynamics in dielectric environments (Chapter 15), electron

transfer in bulk (Chapter 16) and interfacial (Chapter 17) systems, and spectroscopy

(Chapter 18). These subjects pertain to theoretical and experimental developments

of the last half century; some, such as single molecule spectroscopy and molecular

conduction, to developments of the last decade.

I have used this material in graduate teaching in several ways. Chapters 2 and 9

are parts of my core course in quantum dynamics. Chapters 6–12 constitute the

bulk of my course on nonequilibrium statistical mechanics and its applications.

Increasingly over the last 15 years I have been using selected parts of Chapters

6–12 with parts from Chapters 13 to 18 in the course “Chemical Dynamics in

Condensed Phases” that I taught at Tel Aviv and Northwestern Universities.

A text of this nature is characterized not only by what it includes but also by

what it does not, and many important phenomena belonging to this vast field were

left out in order to make this book-project finite in length and time. Proton transfer, diffusion in restricted geometries and electromagnetic interactions involving

molecules at interfaces are a few examples. The subject of numerical simulations,

an important tool in the arsenal of methodologies, is not covered as an independent

topic; however, a few specific applications are discussed in the different chapters

of Part 3.

ACKNOWLEDGEMENTS

In the course of writing the manuscript I have sought and received information,

advice and suggestions from many colleagues. I wish to thank Yoel Calev, Graham

Fleming, Michael Galperin, Eliezer Gileadi, Robin Hochstrasser, Joshua Jortner,

Rafi Levine, Mark Maroncelli, Eli Pollak, Mark Ratner, Joerg Schröder, Zeev

Schuss, Ohad Silbert, Jim Skinner and Alessandro Troisi for their advice and help.

In particular I am obliged to Misha Galperin, Mark Ratner and Ohad Silbert for their

enormous help in going through the whole manuscript and making many important observations and suggestions, and to Joan Shwachman for her help in editing

the text. No less important is the accumulated help that I got from a substantially

larger group of students and colleagues with whom I collaborated, discussed and

exchanged ideas on many of the subjects covered in this book. This includes a wonderful group of colleagues who have been meeting every two years in Telluride to

discuss issues pertaining to chemical dynamics in condensed phases. My interactions with all these colleagues and friends have shaped my views and understanding

of this subject. Thanks are due also to members of the publishing office at OUP,

whose gentle prodding has helped keep this project on track.

Finally, deep gratitude is due to my family, for providing me with a loving and

supportive environment without which this work could not have been accomplished.

CONTENTS

PART I BACKGROUND

1 Review of some mathematical and physical subjects

1.1 Mathematical background

1.1.1 Random variables and probability distributions

1.1.2 Constrained extrema

1.1.3 Vector and fields

1.1.4 Continuity equation for the flow of conserved entities

1.1.5 Delta functions

1.1.6 Complex integration

1.1.7 Laplace transform

1.1.8 The Schwarz inequality

1.2 Classical mechanics

1.2.1 Classical equations of motion

1.2.2 Phase space, the classical distribution function, and the Liouville equation

1.3 Quantum mechanics

1.4 Thermodynamics and statistical mechanics

1.4.1 Thermodynamics

1.4.2 Statistical mechanics

1.4.3 Quantum distributions

1.4.4 Coarse graining

1.5 Physical observables as random variables

1.5.1 Origin of randomness in physical systems

1.5.2 Joint probabilities, conditional probabilities, and reduced descriptions

1.5.3 Random functions

1.5.4 Correlations

1.5.5 Diffusion

1.6 Electrostatics

1.6.1 Fundamental equations of electrostatics

1.6.2 Electrostatics in continuous dielectric media

1.6.3 Screening by mobile charges

2 Quantum dynamics using the time-dependent Schrödinger equation

2.1 Formal solutions

2.2 An example: The two-level system

2.3 Time-dependent Hamiltonians

2.4 A two-level system in a time-dependent field

2.5 A digression on nuclear potential surfaces

2.6 Expressing the time evolution in terms of the Green’s operator

2.7 Representations

2.7.1 The Schrödinger and Heisenberg representations

2.7.2 The interaction representation

2.7.3 Time-dependent perturbation theory

2.8 Quantum dynamics of the free particles

2.8.1 Free particle eigenfunctions

2.8.2 Free particle density of states

2.8.3 Time evolution of a one-dimensional free particle wavepacket

2.8.4 The quantum mechanical flux

2.9 Quantum dynamics of the harmonic oscillator

2.9.1 Elementary considerations

2.9.2 The raising/lowering operators formalism

2.9.3 The Heisenberg equations of motion

2.9.4 The shifted harmonic oscillator

2.9.5 Harmonic oscillator at thermal equilibrium

2.10 Tunneling

2.10.1 Tunneling through a square barrier

2.10.2 Some observations

2A Some operator identities

3 An Overview of Quantum Electrodynamics and Matter–Radiation Field Interaction

3.1 Introduction

3.2 The quantum radiation field

3.2.1 Classical electrodynamics

3.2.2 Quantum electrodynamics

3.2.3 Spontaneous emission

3A The radiation field and its interaction with matter

4 Introduction to solids and their interfaces

4.1 Lattice periodicity

4.2 Lattice vibrations

4.2.1 Normal modes of harmonic systems

4.2.2 Simple harmonic crystal in one dimension

4.2.3 Density of modes

4.2.4 Phonons in higher dimensions and the heat capacity of solids

4.3 Electronic structure of solids

4.3.1 The free electron theory of metals: Energetics

4.3.2 The free electron theory of metals: Motion

4.3.3 Electronic structure of periodic solids: Bloch theory

4.3.4 The one-dimensional tight binding model

4.3.5 The nearly free particle model

4.3.6 Intermediate summary: Free electrons versus noninteracting electrons in a periodic potential

4.3.7 Further dynamical implications of the electronic band structure of solids

4.3.8 Semiconductors

4.4 The work function

4.5 Surface potential and screening

4.5.1 General considerations

4.5.2 The Thomas–Fermi theory of screening by metallic electrons

4.5.3 Semiconductor interfaces

4.5.4 Interfacial potential distributions

5 Introduction to liquids

5.1 Statistical mechanics of classical liquids

5.2 Time and ensemble average

5.3 Reduced configurational distribution functions

5.4 Observable implications of the pair correlation function

5.4.1 X-ray scattering

5.4.2 The average energy

5.4.3 Pressure

5.5 The potential of mean force and the reversible work theorem

5.6 The virial expansion—the second virial coefficient

PART II METHODS

6 Time correlation functions

6.1 Stationary systems

6.2 Simple examples

6.2.1 The diffusion coefficient

6.2.2 Golden rule rates

6.2.3 Optical absorption lineshapes

6.3 Classical time correlation functions

6.4 Quantum time correlation functions

6.5 Harmonic reservoir

6.5.1 Classical bath

6.5.2 The spectral density

6.5.3 Quantum bath

6.5.4 Why are harmonic baths models useful?

7 Introduction to stochastic processes

7.1 The nature of stochastic processes

7.2 Stochastic modeling of physical processes

7.3 The random walk problem

7.3.1 Time evolution

7.3.2 Moments

7.3.3 The probability distribution

7.4 Some concepts from the general theory of stochastic processes

7.4.1 Distributions and correlation functions

7.4.2 Markovian stochastic processes

7.4.3 Gaussian stochastic processes

7.4.4 A digression on cumulant expansions

7.5 Harmonic analysis

7.5.1 The power spectrum

7.5.2 The Wiener–Khintchine theorem

7.5.3 Application to absorption

7.5.4 The power spectrum of a randomly modulated harmonic oscillator

7A Moments of the Gaussian distribution

7B Proof of Eqs (7.64) and (7.65)

7C Cumulant expansions

7D Proof of the Wiener–Khintchine theorem

8 Stochastic equations of motion

8.1 Introduction

8.2 The Langevin equation

8.2.1 General considerations

8.2.2 The high friction limit

8.2.3 Harmonic analysis of the Langevin equation

8.2.4 The absorption lineshape of a harmonic oscillator

8.2.5 Derivation of the Langevin equation from a microscopic model

8.2.6 The generalized Langevin equation

8.3 Master equations

8.3.1 The random walk problem revisited

8.3.2 Chemical kinetics

8.3.3 The relaxation of a system of harmonic oscillators

8.4 The Fokker–Planck equation

8.4.1 A simple example

8.4.2 The probability flux

8.4.3 Derivation of the Fokker–Planck equation from the Chapman–Kolmogorov equation

8.4.4 Derivation of the Smoluchowski equation from the Langevin equation: The overdamped limit

8.4.5 Derivation of the Fokker–Planck equation from the Langevin equation

8.4.6 The multidimensional Fokker–Planck equation

8.5 Passage time distributions and the mean first passage time

8A Obtaining the Fokker–Planck equation from the Chapman–Kolmogorov equation

8B Obtaining the Smoluchowski equation from the overdamped Langevin equation

8C Derivation of the Fokker–Planck equation from the Langevin equation

9 Introduction to quantum relaxation processes

9.1 A simple quantum-mechanical model for relaxation

9.2 The origin of irreversibility

9.2.1 Irreversibility reflects restricted observation

9.2.2 Relaxation in isolated molecules

9.2.3 Spontaneous emission

9.2.4 Preparation of the initial state

9.3 The effect of relaxation on absorption lineshapes

9.4 Relaxation of a quantum harmonic oscillator

9.5 Quantum mechanics of steady states

9.5.1 Quantum description of steady-state processes

9.5.2 Steady-state absorption

9.5.3 Resonance tunneling

9A Using projection operators

9B Evaluation of the absorption lineshape for the model of Figs 9.2 and 9.3

9C Resonance tunneling in three dimensions

10 Quantum mechanical density operator

10.1 The density operator and the quantum Liouville equation

10.1.1 The density matrix for a pure system

10.1.2 Statistical mixtures

10.1.3 Representations

10.1.4 Coherences

10.1.5 Thermodynamic equilibrium

10.2 An example: The time evolution of a two-level system in the density matrix formalism

10.3 Reduced descriptions

10.3.1 General considerations

10.3.2 A simple example—the quantum mechanical basis for macroscopic rate equations

10.4 Time evolution equations for reduced density operators: The quantum master equation

10.4.1 Using projection operators

10.4.2 The Nakajima–Zwanzig equation

10.4.3 Derivation of the quantum master equation using the thermal projector

10.4.4 The quantum master equation in the interaction representation

10.4.5 The quantum master equation in the Schrödinger representation

10.4.6 A pause for reflection

10.4.7 System-states representation

10.4.8 The Markovian limit—the Redfield equation

10.4.9 Implications of the Redfield equation

10.4.10 Some general issues

10.5 The two-level system revisited

10.5.1 The two-level system in a thermal environment

10.5.2 The optically driven two-level system in a thermal environment—the Bloch equations

10A Analogy of a coupled 2-level system to a spin 1/2 system in a magnetic field

11 Linear response theory

11.1 Classical linear response theory

11.1.1 Static response

11.1.2 Relaxation

11.1.3 Dynamic response

11.2 Quantum linear response theory

11.2.1 Static quantum response

11.2.2 Dynamic quantum response

11.2.3 Causality and the Kramers–Kronig relations

11.2.4 Examples: mobility, conductivity, and diffusion

11A The Kubo identity

12 The Spin–Boson Model

12.1 Introduction

12.2 The model

12.3 The polaron transformation

12.3.1 The Born Oppenheimer picture

12.4 Golden-rule transition rates

12.4.1 The decay of an initially prepared level

12.4.2 The thermally averaged rate

12.4.3 Evaluation of rates

12.5 Transition between molecular electronic states

12.5.1 The optical absorption lineshape

12.5.2 Electronic relaxation of excited molecules

12.5.3 The weak coupling limit and the energy gap law

12.5.4 The thermal activation/potential-crossing limit

12.5.5 Spin–lattice relaxation

12.6 Beyond the golden rule

PART III APPLICATIONS

13 Vibrational energy relaxation

13.1 General observations

13.2 Construction of a model Hamiltonian

13.3 The vibrational relaxation rate

13.4 Evaluation of vibrational relaxation rates

13.4.1 The bilinear interaction model

13.4.2 Nonlinear interaction models

13.4.3 The independent binary collision (IBC) model

13.5 Multi-phonon theory of vibrational relaxation

13.6 Effect of supporting modes

13.7 Numerical simulations of vibrational relaxation

13.8 Concluding remarks

14 Chemical reactions in condensed phases

14.1 Introduction

14.2 Unimolecular reactions

14.3 Transition state theory

14.3.1 Foundations of TST

14.3.2 Transition state rate of escape from a one-dimensional well

14.3.3 Transition rate for a multidimensional system

14.3.4 Some observations

14.3.5 TST for nonadiabatic transitions

14.3.6 TST with tunneling

14.4 Dynamical effects in barrier crossing—The Kramers model

14.4.1 Escape from a one-dimensional well

14.4.2 The overdamped case

14.4.3 Moderate-to-large damping

14.4.4 The low damping limit

14.5 Observations and extensions

14.5.1 Implications and shortcomings of the Kramers theory

14.5.2 Non-Markovian effects

14.5.3 The normal mode representation

14.6 Some experimental observations

14.7 Numerical simulation of barrier crossing

14.8 Diffusion-controlled reactions

14A Solution of Eqs (14.62) and (14.63)

14B Derivation of the energy Smoluchowski equation

15 Solvation dynamics

15.1 Dielectric solvation

15.2 Solvation in a continuum dielectric environment

15.2.1 General observations

15.2.2 Dielectric relaxation and the Debye model

15.3 Linear response theory of solvation

15.4 More aspects of solvation dynamics

15.5 Quantum solvation

16 Electron transfer processes

16.1 Introduction

16.2 A primitive model

16.3 Continuum dielectric theory of electron transfer processes

16.3.1 The problem

16.3.2 Equilibrium electrostatics

16.3.3 Transition assisted by dielectric fluctuations

16.3.4 Thermodynamics with restrictions

16.3.5 Dielectric fluctuations

16.3.6 Energetics of electron transfer between two ionic centers

16.3.7 The electron transfer rate

16.4 A molecular theory of the nonadiabatic electron transfer rate

16.5 Comparison with experimental results

16.6 Solvent-controlled electron transfer dynamics

16.7 A general expression for the dielectric reorganization energy

16.8 The Marcus parabolas

16.9 Harmonic field representation of dielectric response

16.10 The nonadiabatic coupling

16.11 The distance dependence of electron transfer rates

16.12 Bridge-mediated long-range electron transfer

16.13 Electron transport by hopping

16.14 Proton transfer

16A Derivation of the Mulliken–Hush formula

17 Electron transfer and transmission at molecule–metal and molecule–semiconductor interfaces

17.1 Electrochemical electron transfer

17.1.1 Introduction

17.1.2 The electrochemical measurement

17.1.3 The electron transfer process

17.1.4 The nuclear reorganization

17.1.5 Dependence on the electrode potential: Tafel plots

17.1.6 Electron transfer at the semiconductor–electrolyte interface

17.2 Molecular conduction

17.2.1 Electronic structure models of molecular conduction

17.2.2 Conduction of a molecular junction

17.2.3 The bias potential

17.2.4 The one-level bridge model

17.2.5 A bridge with several independent levels

17.2.6 Experimental statistics

17.2.7 The tight-binding bridge model

18 Spectroscopy

18.1 Introduction

18.2 Molecular spectroscopy in the dressed-state picture

18.3 Resonance Raman scattering

18.4 Resonance energy transfer

18.5 Thermal relaxation and dephasing

18.5.1 The Bloch equations

18.5.2 Relaxation of a prepared state

18.5.3 Dephasing (decoherence)

18.5.4 The absorption lineshape

18.5.5 Homogeneous and inhomogeneous broadening

18.5.6 Motional narrowing

18.5.7 Thermal effects in resonance Raman scattering

18.5.8 A case study: Resonance Raman scattering and fluorescence from Azulene in a Naphthalene matrix

18.6 Probing inhomogeneous bands

18.6.1 Hole burning spectroscopy

18.6.2 Photon echoes

18.6.3 Single molecule spectroscopy

18.7 Optical response functions

18.7.1 The Hamiltonian

18.7.2 Response functions at the single molecule level

18.7.3 Many body response theory

18.7.4 Independent particles

18.7.5 Linear response

18.7.6 Linear response theory of propagation and absorption

18A Steady-state solution of Eqs (18.58): the Raman scattering flux

Index

PART I

BACKGROUND

1

REVIEW OF SOME MATHEMATICAL AND PHYSICAL SUBJECTS

The lawyers plead in court or draw up briefs,

The generals wage wars, the mariners

Fight with their ancient enemy the wind,

And I keep doing what I am doing here:

Try to learn about the way things are

And set my findings down in Latin verse . . .

Such things as this require a basic course

In fundamentals, and a long approach

By various devious ways, so, all the more,

I need your full attention . . .

Lucretius (c.99–c.55 bce) “The way things are”

translated by Rolfe Humphries, Indiana University Press, 1968.

This chapter reviews some subjects in mathematics and physics that are used in

different contexts throughout this book. The selection of subjects and the level of

their coverage reflect the author’s perception of what potential users of this text

were exposed to in their earlier studies. Therefore, only a brief overview is given of

some subjects, while a somewhat more comprehensive discussion is given of others.

In neither case can the coverage provided substitute for the actual learning of these

subjects that are covered in detail by many textbooks.

1.1 Mathematical background

1.1.1 Random variables and probability distributions

A random variable is an observable whose repeated determination yields a series

of numerical values (“realizations” of the random variable) that vary from trial to

trial in a way characteristic of the observable. The outcomes of tossing a coin or

throwing a die are familiar examples of discrete random variables. The position of a

dust particle in air and the lifetime of a light bulb are continuous random variables.

Discrete random variables are characterized by probability distributions; $P_n$ denotes the probability that a realization of the given random variable is $n$. Continuous random variables are associated with probability density functions $P(x)$: $P(x_1)\,dx$ denotes the probability that the realization of the variable $x$ will be in the interval $x_1 \ldots x_1 + dx$. By their nature, probability distributions have to be normalized, that is,

$$\sum_n P_n = 1; \qquad \int dx\, P(x) = 1 \tag{1.1}$$

The $j$th moments of these distributions are

$$M_j = \langle x^j \rangle \equiv \int dx\, x^j P(x) \quad \text{or} \quad \langle n^j \rangle \equiv \sum_n n^j P_n \tag{1.2}$$

Obviously, $M_0 = 1$ and $M_1$ is the average value of the corresponding random variable. In what follows we will focus on the continuous case. The second moment is usually expressed by the variance,

$$\langle \delta x^2 \rangle \equiv \langle (x - \langle x \rangle)^2 \rangle = M_2 - M_1^2 \tag{1.3}$$

The standard deviation

$$\langle \delta x^2 \rangle^{1/2} = \sqrt{M_2 - M_1^2} \tag{1.4}$$

is a measure of the spread of the fluctuations about the average $M_1$. The generating function¹ for the moments of the distribution $P(x)$ is defined as the average

$$g(\alpha) = \langle e^{\alpha x} \rangle = \int dx\, P(x)\, e^{\alpha x} \tag{1.5}$$

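The name generating function stems from the identity (obtained by expanding $e^{\alpha x}$ inside the integral)

$$g(\alpha) = 1 + \alpha \langle x \rangle + (1/2)\alpha^2 \langle x^2 \rangle + \cdots + (1/n!)\alpha^n \langle x^n \rangle + \cdots \tag{1.6}$$

which implies that all moments $\langle x^n \rangle$ of $P(x)$ can be obtained from $g(\alpha)$ according to

$$\langle x^n \rangle = \left[ \frac{\partial^n}{\partial \alpha^n}\, g(\alpha) \right]_{\alpha = 0} \tag{1.7}$$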

Following are some examples of frequently encountered probability distributions:

Poisson distribution. This is the discrete distribution

$$P(n) = \frac{a^n e^{-a}}{n!}, \qquad n = 0, 1, \ldots \tag{1.8}$$

which is normalized because $\sum_n a^n/n! = e^a$. It can be easily verified that

$$\langle n \rangle = \langle \delta n^2 \rangle = a \tag{1.9}$$

¹ Sometimes referred to also as the characteristic function of the given distribution.
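As a quick numerical illustration of Eqs (1.7) and (1.9), the sketch below sums the Poisson distribution directly and also differentiates its generating function by a finite difference. The closed form $g(\alpha) = \exp[a(e^{\alpha} - 1)]$ is a standard result that is not derived in the text; the function names and the value $a = 3.5$ are arbitrary choices made here for illustration.

```python
import math

def poisson_pmf(n, a):
    """P(n) = a^n e^{-a} / n!, Eq. (1.8); evaluated in log form for stability."""
    return math.exp(n * math.log(a) - a - math.lgamma(n + 1))

def poisson_moments(a, n_max=200):
    """Return (normalization, mean, variance) by direct summation up to n_max."""
    probs = [poisson_pmf(n, a) for n in range(n_max + 1)]
    norm = sum(probs)
    mean = sum(n * p for n, p in enumerate(probs))
    second = sum(n * n * p for n, p in enumerate(probs))
    return norm, mean, second - mean ** 2

def generating_function(alpha, a):
    """g(alpha) = <e^{alpha n}> = exp[a (e^alpha - 1)] for the Poisson case."""
    return math.exp(a * (math.exp(alpha) - 1.0))

a = 3.5
norm, mean, var = poisson_moments(a)
h = 1e-4
# Central finite difference for dg/dalpha at alpha = 0, cf. Eq. (1.7)
mean_from_g = (generating_function(h, a) - generating_function(-h, a)) / (2 * h)
print(norm, mean, var, mean_from_g)
```

Within the truncation and finite-difference errors, the mean obtained from the generating function agrees with the directly summed mean and variance, both of which equal $a$.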

Binomial distribution. This is a discrete distribution in finite space: The probability that the random variable $n$ takes any integer value between 0 and $N$ is given by

$$P(n) = \frac{N!\, p^n q^{N-n}}{n!\,(N-n)!}; \qquad p + q = 1; \qquad n = 0, 1, \ldots, N \tag{1.10}$$

The normalization condition is satisfied by the binomial theorem since $\sum_{n=0}^{N} P(n) = (p + q)^N$. We discuss properties of this distribution in Section 7.3.3.

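Gaussian distribution. The probability density associated with this continuous distribution is

$$P(x) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-[(x - \bar{x})^2 / 2\sigma^2]\right); \qquad -\infty < x < \infty \tag{1.11}$$

with average and variance

$$\langle x \rangle = \bar{x}, \qquad \langle \delta x^2 \rangle = \sigma^2 \tag{1.12}$$

In the limit of zero variance this function approaches a δ function (see Section 1.1.5)

$$P(x) \xrightarrow{\sigma \to 0} \delta(x - \bar{x}) \tag{1.13}$$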

Lorentzian distribution. This continuous distribution is defined by

$$P(x) = \frac{\gamma/\pi}{(x - \bar{x})^2 + \gamma^2}; \qquad -\infty < x < \infty \tag{1.14}$$

The average is $\langle x \rangle = \bar{x}$ and a δ function, $\delta(x - \bar{x})$, is approached as $\gamma \to 0$; however, higher moments of this distribution diverge. This appears to suggest that such a distribution cannot reasonably describe physical observables, but we will see that, on the contrary, it is, along with the Gaussian distribution, quite pervasive in our discussions, though indeed as a common physical approximation to observations made near the peak of the distribution. Note that even though the second moment diverges, $\gamma$ measures the width at half height of this distribution (it is the half width at half maximum).

A general phenomenon associated with sums of many random variables has

far reaching implications on the random nature of many physical observables. Its

mathematical expression is known as the central limit theorem. Let $x_1, x_2, \ldots, x_n$ ($n \gg 1$) be independent random variables with $\langle x_j \rangle = 0$ and $\langle x_j^2 \rangle = \sigma_j^2$. Consider the sum

$$X_n = x_1 + x_2 + \cdots + x_n \tag{1.15}$$

Under certain conditions that may be qualitatively stated by (1) all variables are alike, that is, there are no few variables that dominate the others, and (2) certain convergence criteria (see below) are satisfied, the probability distribution function $F(X_n)$ of $X_n$ is given by²

$$F(X_n) \cong \frac{1}{S_n \sqrt{2\pi}} \exp\left(-\frac{X_n^2}{2 S_n^2}\right) \tag{1.16}$$

where

$$S_n^2 = \sigma_1^2 + \sigma_2^2 + \cdots + \sigma_n^2 \tag{1.17}$$

This result is independent of the forms of the probability distributions $f_j(x_j)$ of the variables $x_j$ provided they satisfy, as stated above, some convergence criteria. A sufficient (but not absolutely necessary) condition is that all moments $\int dx_j\, x_j^n f_j(x_j)$ of these distributions exist and are of the same order of magnitude.
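The statement of the central limit theorem can be probed with a small simulation. The sketch below is only an illustration under assumed parameters: it sums $n = 50$ independent variables drawn uniformly from $[-1, 1]$ (so each $\sigma_j^2 = 1/3$), and compares the sample variance of $X_n$ with $S_n^2$ of Eq. (1.17) and the fraction of samples within one $S_n$ of zero with the Gaussian value of about 0.683. The uniform distribution, the seed, and the sample sizes are choices made here, not taken from the text.

```python
import math
import random

def sample_sum(n, rng):
    """X_n = x_1 + ... + x_n with x_j uniform on [-1, 1] (zero mean, variance 1/3)."""
    return sum(rng.uniform(-1.0, 1.0) for _ in range(n))

rng = random.Random(0)          # fixed seed so the run is reproducible
n, trials = 50, 20000
samples = [sample_sum(n, rng) for _ in range(trials)]

s_n = math.sqrt(n / 3.0)        # S_n from Eq. (1.17) with sigma_j^2 = 1/3
mean = sum(samples) / trials
var = sum((x - mean) ** 2 for x in samples) / trials
# For a Gaussian, about 68.27% of the samples fall within one S_n of the mean
frac_within = sum(abs(x) <= s_n for x in samples) / trials
print(mean, var, s_n ** 2, frac_within)
```

The individual variables are far from Gaussian, yet the statistics of their sum are already close to those predicted by Eq. (1.16).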

In applications of these concepts to many particle systems, for example in statistical mechanics, we encounter the need to approximate discrete distributions such as in Eq. (1.10), in the limit of large values of their arguments, by continuous functions. The Stirling approximation

$$N! \sim e^{N \ln N - N} \quad \text{when } N \to \infty \tag{1.18}$$

is a very useful tool in such cases.
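The quality of Eq. (1.18) is best judged on the logarithmic scale, where it reads $\ln N! \approx N \ln N - N$. The following sketch compares this form with $\ln N!$ computed from the log-gamma function; the helper names and the sample values of $N$ are chosen here for illustration.

```python
import math

def ln_factorial_exact(n):
    """ln N! via the log-gamma function, ln Gamma(N + 1)."""
    return math.lgamma(n + 1)

def ln_factorial_stirling(n):
    """Leading Stirling form of Eq. (1.18): ln N! ~ N ln N - N."""
    return n * math.log(n) - n

def relative_error(n):
    """Relative deviation of the Stirling form from ln N!."""
    exact = ln_factorial_exact(n)
    return (exact - ln_factorial_stirling(n)) / exact

for n in (10, 100, 10_000, 1_000_000):
    print(n, ln_factorial_exact(n), ln_factorial_stirling(n), relative_error(n))
```

The relative error of $\ln N!$ decreases steadily with $N$, which is the sense in which the approximation is used for the large particle numbers of statistical mechanics.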

1.1.2 Constrained extrema

In many applications we need to find the maxima or minima of a given function $f(x_1, x_2, \ldots, x_n)$ subject to some constraints. These constraints are expressed as given relationships between the variables that we express by

$$g_k(x_1, x_2, \ldots, x_n) = 0; \qquad k = 1, 2, \ldots, m \quad \text{(for $m$ constraints)} \tag{1.19}$$

² If $\langle x_j \rangle = a_j \neq 0$ and $A_n = a_1 + a_2 + \cdots + a_n$ then Eq. (1.16) is replaced by $F(X_n) \cong (S_n \sqrt{2\pi})^{-1} \exp(-(X_n - A_n)^2 / 2 S_n^2)$.

Such constrained extrema can be found by the Lagrange multipliers method: One forms the “Lagrangian”

$$L(x_1, \ldots, x_n) = f(x_1, \ldots, x_n) - \sum_{k=1}^{m} \lambda_k\, g_k(x_1, \ldots, x_n) \tag{1.20}$$

with $m$ unknown constants $\{\lambda_k\}$. The set of $n + m$ equations

$$\left. \begin{aligned} \frac{\partial L}{\partial x_1} = \cdots = \frac{\partial L}{\partial x_n} &= 0 \\ g_1 = 0, \ldots, g_m &= 0 \end{aligned} \right\} \tag{1.21}$$

then yields the extremum points $(x_1, \ldots, x_n)$ and the associated Lagrange multipliers $\{\lambda_k\}$.
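A minimal worked case may help fix the procedure. The example below is chosen here for illustration and does not appear in the text: maximize $f(x, y) = xy$ subject to the single constraint $g = x + y - c = 0$. Equations (1.21) give $y - \lambda = 0$, $x - \lambda = 0$ and $x + y = c$, hence $x = y = \lambda = c/2$. The sketch compares this with a brute-force scan along the constraint line.

```python
def lagrange_solution(c):
    """Stationary point of L = x*y - lam*(x + y - c).

    dL/dx = y - lam = 0, dL/dy = x - lam = 0 and g = x + y - c = 0
    give x = y = lam = c/2.
    """
    lam = c / 2.0
    return lam, lam, lam

def brute_force_maximum(c, steps=2001):
    """Scan points on the constraint line x + y = c and keep the largest f = x*y."""
    best = None
    for k in range(steps):
        x = -c + 3.0 * c * k / (steps - 1)   # x runs from -c to 2c
        y = c - x
        value = x * y
        if best is None or value > best[2]:
            best = (x, y, value)
    return best

c = 2.0
x0, y0, lam = lagrange_solution(c)
xb, yb, fb = brute_force_maximum(c)
print((x0, y0, lam), (xb, yb, fb))
```

The scan is only a sanity check on a one-dimensional constraint; for several variables and constraints one would solve the $n + m$ equations directly.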

1.1.3 Vectors and fields

1.1.3.1 Vectors

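Our discussion here refers to vectors in three-dimensional Euclidean space, so vectors are written in one of the equivalent forms $\mathbf{a} = (a_1, a_2, a_3)$ or $(a_x, a_y, a_z)$. Two products involving such vectors often appear in our text. The scalar (or dot) product is

$$\mathbf{a} \cdot \mathbf{b} = \sum_{n=1}^{3} a_n b_n \tag{1.22}$$

and the vector product is

$$\mathbf{a} \times \mathbf{b} = -\mathbf{b} \times \mathbf{a} = \begin{vmatrix} \mathbf{u}_1 & \mathbf{u}_2 & \mathbf{u}_3 \\ a_1 & a_2 & a_3 \\ b_1 & b_2 & b_3 \end{vmatrix} \tag{1.23}$$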

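where $\mathbf{u}_j$ ($j = 1, 2, 3$) are unit vectors in the three cartesian directions and where $|\;|$ denotes a determinant. Useful identities involving scalar and vector products are

$$\mathbf{a} \cdot (\mathbf{b} \times \mathbf{c}) = (\mathbf{a} \times \mathbf{b}) \cdot \mathbf{c} = \mathbf{b} \cdot (\mathbf{c} \times \mathbf{a}) \tag{1.24}$$

$$\mathbf{a} \times (\mathbf{b} \times \mathbf{c}) = \mathbf{b}(\mathbf{a} \cdot \mathbf{c}) - \mathbf{c}(\mathbf{a} \cdot \mathbf{b}) \tag{1.25}$$

1.1.3.2 Fields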

A field is a quantity that depends on one or more continuous variables. We will

usually think of the coordinates that define a position of space as these variables, and


the rest of our discussion is done in this language. A scalar field is a map that assigns

a scalar to any position in space. Similarly, a vector field is a map that assigns a vector

to any such position, that is, is a vector function of position. Here we summarize

some definitions and properties of scalar and vector fields.

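The gradient, $\nabla S$, of a scalar function $S(\mathbf{r})$, and the divergence, $\nabla \cdot \mathbf{F}$, and rotor (curl), $\nabla \times \mathbf{F}$, of a vector field $\mathbf{F}(\mathbf{r})$ are given in cartesian coordinates by

$$\nabla S = \frac{\partial S}{\partial x}\mathbf{u}_x + \frac{\partial S}{\partial y}\mathbf{u}_y + \frac{\partial S}{\partial z}\mathbf{u}_z \tag{1.26}$$

$$\nabla \cdot \mathbf{F} = \frac{\partial F_x}{\partial x} + \frac{\partial F_y}{\partial y} + \frac{\partial F_z}{\partial z} \tag{1.27}$$

$$\nabla \times \mathbf{F} = \begin{vmatrix} \mathbf{u}_x & \mathbf{u}_y & \mathbf{u}_z \\ \partial/\partial x & \partial/\partial y & \partial/\partial z \\ F_x & F_y & F_z \end{vmatrix} = \mathbf{u}_x \left( \frac{\partial F_z}{\partial y} - \frac{\partial F_y}{\partial z} \right) - \mathbf{u}_y \left( \frac{\partial F_z}{\partial x} - \frac{\partial F_x}{\partial z} \right) + \mathbf{u}_z \left( \frac{\partial F_y}{\partial x} - \frac{\partial F_x}{\partial y} \right) \tag{1.28}$$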

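where $\mathbf{u}_x, \mathbf{u}_y, \mathbf{u}_z$ are again unit vectors in the $x, y, z$ directions. Some identities involving these objects are

$$\nabla \times (\nabla \times \mathbf{F}) = \nabla(\nabla \cdot \mathbf{F}) - \nabla^2 \mathbf{F} \tag{1.29}$$

$$\nabla \cdot (S\mathbf{F}) = \mathbf{F} \cdot \nabla S + S\, \nabla \cdot \mathbf{F} \tag{1.30}$$

$$\nabla \cdot (\nabla \times \mathbf{F}) = 0 \tag{1.31}$$

$$\nabla \times (\nabla S) = 0 \tag{1.32}$$

The Helmholtz theorem states that any vector field $\mathbf{F}$ can be written as a sum of its transverse $\mathbf{F}_\perp$ and longitudinal $\mathbf{F}_\parallel$ components

$$\mathbf{F} = \mathbf{F}_\perp + \mathbf{F}_\parallel \tag{1.33}$$

which have the properties

$$\nabla \cdot \mathbf{F}_\perp = 0 \tag{1.34a}$$

$$\nabla \times \mathbf{F}_\parallel = 0 \tag{1.34b}$$

that is, the transverse component has zero divergence while the longitudinal component has zero curl. Explicit expressions for these components are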

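$$\mathbf{F}_\perp(\mathbf{r}) = \frac{1}{4\pi} \nabla \times \int d^3 r'\, \frac{\nabla' \times \mathbf{F}(\mathbf{r}')}{|\mathbf{r} - \mathbf{r}'|} \tag{1.35a}$$

$$\mathbf{F}_\parallel(\mathbf{r}) = -\frac{1}{4\pi} \nabla \int d^3 r'\, \frac{\nabla' \cdot \mathbf{F}(\mathbf{r}')}{|\mathbf{r} - \mathbf{r}'|} \tag{1.35b}$$

1.1.3.3 Integral relations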

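Let $V(S)$ be a volume bounded by a closed surface $S$. Denote a three-dimensional volume element by $d^3 r$ and a surface vector element by $d\mathbf{S}$. $d\mathbf{S}$ has the magnitude of the corresponding surface area and direction along its normal, facing outward. We sometimes write $d\mathbf{S} = \hat{\mathbf{n}}\, d^2 x$ where $\hat{\mathbf{n}}$ is an outward normal unit vector. Then for any vector and scalar functions of position, $\mathbf{F}(\mathbf{r})$ and $\phi(\mathbf{r})$, respectively

$$\int_V d^3 r\, (\nabla \cdot \mathbf{F}) = \oint_S d\mathbf{S} \cdot \mathbf{F} \quad \text{(Gauss's divergence theorem)} \tag{1.36}$$

$$\int_V d^3 r\, (\nabla \phi) = \oint_S d\mathbf{S}\, \phi \tag{1.37}$$

$$\int_V d^3 r\, (\nabla \times \mathbf{F}) = \oint_S d\mathbf{S} \times \mathbf{F} \tag{1.38}$$

In these equations $\oint_S$ denotes an integral over the surface $S$.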

Finally, the following theorem concerning the integral in (1.37) is of interest: Let $\phi(\mathbf{r})$ be a periodic function in three dimensions, so that $\phi(\mathbf{r}) = \phi(\mathbf{r} + \mathbf{R})$ with $\mathbf{R} = n_1 \mathbf{a}_1 + n_2 \mathbf{a}_2 + n_3 \mathbf{a}_3$, where $\mathbf{a}_j$ ($j = 1, 2, 3$) are three vectors that characterize the three-dimensional periodicity and $n_j$ are any integers (see Section 4.1). The function is therefore characterized by its values in one unit cell defined by the three $\mathbf{a}$ vectors. Then the integral (1.37) vanishes if the volume of integration is exactly one unit cell. To prove this statement consider the integral over a unit cell

$$I(\mathbf{r}') = \int_V d^3 r\, \phi(\mathbf{r} + \mathbf{r}') \tag{1.39}$$

Since $\phi$ is periodic and the integral is over one period, the result should not depend on $\mathbf{r}'$. Therefore,

$$0 = \nabla_{\mathbf{r}'} I(\mathbf{r}') = \int_V d^3 r\, \nabla_{\mathbf{r}'} \phi(\mathbf{r} + \mathbf{r}') = \int_V d^3 r\, \nabla_{\mathbf{r}} \phi(\mathbf{r} + \mathbf{r}') = \int_V d^3 r\, \nabla \phi(\mathbf{r}) \tag{1.40}$$

which concludes the proof.

1.1.4 Continuity equation for the flow of conserved entities

We will repeatedly encounter in this book processes that involve the flow of conserved quantities. An easily visualized example is the diffusion of nonreactive

particles, but it should be emphasized at the outset that the motion involved can

be of any type and the moving object(s) do not have to be particles. The essential

ingredient in the following discussion is a conserved entity $Q$ whose distribution in space is described by some time-dependent density function $\rho_Q(\mathbf{r}, t)$ so that its amount within some finite volume $V$ is given by

$$Q(t) = \int_V d^3 r\, \rho_Q(\mathbf{r}, t) \tag{1.41}$$

The conservation of $Q$ implies that any change in $Q(t)$ can result only from flow of $Q$ through the boundary of volume $V$. Let $S$ be the surface that encloses the volume $V$, and $d\mathbf{S}$ a vector surface element whose direction is normal to the surface in the outward direction. Denote by $\mathbf{J}_Q(\mathbf{r}, t)$ the flux of $Q$, that is, the amount of $Q$ moving in the direction of $\mathbf{J}_Q$ per unit time and per unit area of the surface perpendicular to $\mathbf{J}_Q$. The $Q$ conservation law can then be written in the following mathematical form

$$\frac{dQ}{dt} = \int_V d^3 r\, \frac{\partial \rho_Q(\mathbf{r}, t)}{\partial t} = -\oint_S d\mathbf{S} \cdot \mathbf{J}_Q(\mathbf{r}, t) \tag{1.42}$$

Using Eq. (1.36) this can be recast as

$$\int_V d^3 r\, \frac{\partial \rho_Q(\mathbf{r}, t)}{\partial t} = -\int_V d^3 r\, \nabla \cdot \mathbf{J}_Q \tag{1.43}$$

which implies, since the volume $V$ is arbitrary

$$\frac{\partial \rho_Q(\mathbf{r}, t)}{\partial t} = -\nabla \cdot \mathbf{J}_Q \tag{1.44}$$

Equation (1.44), the local form of Eq. (1.42), is the continuity equation for the conserved $Q$. Note that in terms of the velocity field $\mathbf{v}(\mathbf{r}, t) = \dot{\mathbf{r}}(\mathbf{r}, t)$ associated with the $Q$ motion we have

$$\mathbf{J}_Q(\mathbf{r}, t) = \mathbf{v}(\mathbf{r}, t)\, \rho_Q(\mathbf{r}, t) \tag{1.45}$$
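The content of Eqs (1.44) and (1.45) can be seen in a one-dimensional numerical sketch. The code below advances a density on a periodic grid with a constant velocity, writing the update as a difference of fluxes between neighboring cells, so the total amount of $Q$ cannot change. The upwind discretization, the grid parameters, and the Gaussian initial profile are assumptions made here for illustration; the text does not prescribe a numerical scheme.

```python
import math

def step_continuity(rho, v, dx, dt):
    """One explicit upwind step of d(rho)/dt = -d(J)/dx with J = v*rho (v > 0).

    The update is written in flux form on a periodic grid, so whatever
    leaves one cell enters its neighbor.
    """
    n = len(rho)
    flux = [v * rho[i] for i in range(n)]          # J at the right face of cell i
    # flux[i - 1] wraps around for i = 0, which gives periodic boundaries
    return [rho[i] - dt / dx * (flux[i] - flux[i - 1]) for i in range(n)]

n, dx, v = 200, 0.05, 1.0
dt = 0.5 * dx / v                                   # CFL number 0.5 for stability
rho = [math.exp(-((i * dx - 5.0) ** 2)) for i in range(n)]
total_start = sum(rho) * dx
for _ in range(400):
    rho = step_continuity(rho, v, dx, dt)
total_end = sum(rho) * dx
print(total_start, total_end)
```

The profile moves and spreads numerically, but the integrated amount stays fixed to rounding error, which is the discrete counterpart of Eq. (1.42) with no net flux through the (periodic) boundary.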

It is important to realize that the derivation above does not involve any physics. It is a mathematical expression of conservation of entities that can change their position but are not created or destroyed in time. Also, it is not limited to entities distributed in space and could be applied to objects moving in other dimensions. For example, let the function $\rho_1(\mathbf{r}, \mathbf{v}, t)$ be the particle density in position and velocity space (i.e. $\rho_1(\mathbf{r}, \mathbf{v}, t)\, d^3 r\, d^3 v$ is the number of particles whose position and velocity are respectively within the volume element $d^3 r$ about $\mathbf{r}$ and the velocity element $d^3 v$ about $\mathbf{v}$). The total number, $N = \int d^3 r \int d^3 v\, \rho_1(\mathbf{r}, \mathbf{v}, t)$, is fixed. The change of $\rho_1$ in time can then be described by Eq. (1.44) in the form

$$\frac{\partial \rho_1(\mathbf{r}, \mathbf{v}, t)}{\partial t} = -\nabla_r \cdot (\mathbf{v} \rho_1) - \nabla_v \cdot (\dot{\mathbf{v}} \rho_1) \tag{1.46}$$

where $\nabla_r = (\partial/\partial x, \partial/\partial y, \partial/\partial z)$ is the gradient in position space and $\nabla_v = (\partial/\partial v_x, \partial/\partial v_y, \partial/\partial v_z)$ is the gradient in velocity space. Note that $\mathbf{v} \rho_1$ is a flux in position space, while $\dot{\mathbf{v}} \rho_1$ is a flux in velocity space.

1.1.5 Delta functions

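The delta function³ (or: Dirac's delta function) is a generalized function that is obtained as a limit when a normalized function, $\int_{-\infty}^{\infty} dx\, f(x) = 1$, becomes zero everywhere except at one point. For example,

$$\delta(x) = \lim_{a \to \infty} \sqrt{\frac{a}{\pi}}\, e^{-a x^2} \quad \text{or} \quad \delta(x) = \lim_{a \to 0} \frac{a/\pi}{x^2 + a^2} \quad \text{or} \quad \delta(x) = \lim_{a \to \infty} \frac{\sin(ax)}{\pi x} \tag{1.47}$$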

Another way to view this function is as the derivative of the Heaviside step function ($\eta(x) = 0$ for $x < 0$ and $\eta(x) = 1$ for $x \geq 0$):

$$\delta(x) = \frac{d}{dx} \eta(x) \tag{1.48}$$

³ Further reading: http://mathworld.wolfram.com/DeltaFunction.html and references therein.

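In what follows we list a few properties of this function

$$\int_a^b dx\, f(x)\, \delta(x - x_0) = \begin{cases} f(x_0) & \text{if } a < x_0 < b \\ 0 & \text{if } x_0 < a \text{ or } x_0 > b \\ (1/2) f(x_0) & \text{if } x_0 = a \text{ or } x_0 = b \end{cases} \tag{1.49}$$

$$\delta[g(x)] = \sum_j \frac{\delta(x - x_j)}{|g'(x_j)|} \tag{1.50}$$

where $x_j$ are the roots of $g(x) = 0$, for example,

$$\delta(ax) = \frac{1}{|a|}\, \delta(x) \tag{1.51}$$

and

$$\delta(x^2 - a^2) = \frac{1}{2|a|} \left( \delta(x + a) + \delta(x - a) \right) \tag{1.52}$$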
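Identities such as Eq. (1.52) can be checked numerically by replacing the δ function with a narrow normalized Gaussian, the first representation in Eq. (1.47). The sketch below is an illustration under assumed parameters: the test function, the width, and the quadrature grid are arbitrary choices, and the agreement is only approximate because the width is finite.

```python
import math

def nascent_delta(u, width):
    """Normalized Gaussian of the given width; tends to delta(u) as width -> 0."""
    return math.exp(-(u / width) ** 2) / (width * math.sqrt(math.pi))

def integrate(func, lo, hi, n=200_000):
    """Midpoint-rule quadrature on [lo, hi]."""
    h = (hi - lo) / n
    return h * sum(func(lo + (k + 0.5) * h) for k in range(n))

a, width = 1.5, 1e-2
f = lambda x: math.cos(x) + x ** 3          # arbitrary smooth test function

lhs = integrate(lambda x: f(x) * nascent_delta(x * x - a * a, width), -4.0, 4.0)
rhs = (f(-a) + f(a)) / (2 * abs(a))         # Eq. (1.52) applied under the integral
print(lhs, rhs)
```

The factor $1/2|a|$ arises from $|g'(x_j)| = 2|a|$ at the two roots $x_j = \pm a$ of $g(x) = x^2 - a^2$, as in Eq. (1.50).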

The derivative of the δ function is also a useful concept. It satisfies (from integration by parts)

$$\int dx\, f(x)\, \delta'(x - x_0) = -f'(x_0) \tag{1.53}$$

and more generally

$$\int dx\, f(x)\, \delta^{(n)}(x) = -\int dx\, \frac{\partial f}{\partial x}\, \delta^{(n-1)}(x) \tag{1.54}$$

Also (from checking integrals involving the two sides)

$$x\, \delta'(x) = -\delta(x) \tag{1.55}$$

$$x^2\, \delta'(x) = 0 \tag{1.56}$$

Since for $-\pi < a < \pi$ we have

$$\int_{-\pi}^{\pi} dx\, \cos(nx)\, \delta(x - a) = \cos(na) \quad \text{and} \quad \int_{-\pi}^{\pi} dx\, \sin(nx)\, \delta(x - a) = \sin(na) \tag{1.57}$$

it follows that the Fourier series expansion of the δ function is

$$\delta(x - a) = \frac{1}{2\pi} + \frac{1}{\pi} \sum_{n=1}^{\infty} [\cos(na)\cos(nx) + \sin(na)\sin(nx)] = \frac{1}{2\pi} + \frac{1}{\pi} \sum_{n=1}^{\infty} \cos(n(x - a)) \tag{1.58}$$

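Also since

$$\int_{-\infty}^{\infty} dx\, e^{ikx}\, \delta(x - a) = e^{ika} \tag{1.59}$$

it follows that

$$\delta(x - a) = \frac{1}{2\pi} \int_{-\infty}^{\infty} dk\, e^{-ik(x - a)} = \frac{1}{2\pi} \int_{-\infty}^{\infty} dk\, e^{ik(x - a)} \tag{1.60}$$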

Extending δ functions to two and three dimensions is simple in cartesian coordinates

$$\delta^2(\mathbf{r}) = \delta(x)\delta(y), \qquad \delta^3(\mathbf{r}) = \delta(x)\delta(y)\delta(z) \tag{1.61}$$

In spherical coordinates care has to be taken of the integration element. The result is

$$\delta^2(\mathbf{r}) = \frac{\delta(r)}{\pi r} \tag{1.62}$$

$$\delta^3(\mathbf{r}) = \frac{\delta(r)}{2\pi r^2} \tag{1.63}$$

1.1.6 Complex integration

Integration in the complex plane is a powerful technique for evaluating a certain class of integrals, including those encountered in the solution of the time-dependent Schrödinger equation (or other linear initial value problems) by the Laplace transform method (see next subsection). At the core of this technique are the Cauchy theorem, which states that the integral along a closed contour of a function $g(z)$, which is analytic on the contour and the region enclosed by it, is zero:

$$\oint dz\, g(z) = 0 \tag{1.64}$$

and the Cauchy integral formula, valid for a function $g(z)$ with the properties defined above,

$$\oint dz\, \frac{g(z)}{z - \alpha} = 2\pi i\, g(\alpha) \tag{1.65}$$

where the integration contour surrounds the point $z = \alpha$ at which the integrand has a simple singularity, and the integration is done in the counter-clockwise direction (reversing the direction yields the result with opposite sign).

Both the theorem and the integral formula are very useful for many applications:

the Cauchy theorem implies that any integration path can be distorted in the complex

plane as long as the area enclosed between the original and modified path does not

contain any singularities of the integrand. This makes it often possible to modify

integration paths in order to make evaluation easier. The Cauchy integral formula

is often used to evaluate integrals over an unclosed path, provided the contour can be closed

along a line on which the integral is either zero or easily evaluated. An example is

shown below, where the integral (1.78) is evaluated by this method.

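Problem 1.1. Use complex integration to obtain the identity for $\varepsilon > 0$

$$\frac{1}{2\pi} \int_{-\infty}^{\infty} d\omega\, e^{-i\omega t}\, \frac{1}{\omega - \omega_0 + i\varepsilon} = \begin{cases} 0 & \text{for } t < 0 \\ -i e^{-i\omega_0 t - \varepsilon t} & \text{for } t > 0 \end{cases} \tag{1.66}$$

In quantum dynamics applications we often encounter this identity in the limit $\varepsilon \to 0$. We can rewrite it in the form

$$\frac{1}{2\pi} \int_{-\infty}^{\infty} d\omega\, e^{-i\omega t}\, \frac{1}{\omega - \omega_0 + i\varepsilon} \xrightarrow{\varepsilon \to 0+} -i\eta(t)\, e^{-i\omega_0 t} \tag{1.67}$$

where $\eta(t)$ is the step function defined above Eq. (1.48).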

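Another useful identity is associated with integrals involving the function $(\omega - \omega_0 + i\varepsilon)^{-1}$ and a real function $f(\omega)$. Consider

$$\int_a^b d\omega\, \frac{f(\omega)}{\omega - \omega_0 + i\varepsilon} = \int_a^b d\omega\, \frac{(\omega - \omega_0) f(\omega)}{(\omega - \omega_0)^2 + \varepsilon^2} - i \int_a^b d\omega\, \frac{\varepsilon}{(\omega - \omega_0)^2 + \varepsilon^2}\, f(\omega) \tag{1.68}$$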

where the integration is on the real $\omega$ axis and where $a < \omega_0 < b$. Again we are interested in the limit $\varepsilon \to 0$. The imaginary term in (1.68) is easily evaluated to be $-i\pi f(\omega_0)$ by noting that the limiting form of the Lorentzian function that multiplies $f(\omega)$ is a delta-function (see Eq. (1.47)). The real part is identified as

$$\int_a^b d\omega\, \frac{(\omega - \omega_0) f(\omega)}{(\omega - \omega_0)^2 + \varepsilon^2} \xrightarrow{\varepsilon \to 0} PP \int_a^b d\omega\, \frac{f(\omega)}{\omega - \omega_0} \tag{1.69}$$

Here PP stands for the so-called Cauchy principal part (or principal value) of the integral about the singular point $\omega_0$. In general, the Cauchy principal value of a finite integral of a function $f(x)$ about a point $x_0$ with $a < x_0 < b$ is given by

$$PP \int_a^b dx\, f(x) = \lim_{\varepsilon \to 0+} \left\{ \int_a^{x_0 - \varepsilon} dx\, f(x) + \int_{x_0 + \varepsilon}^{b} dx\, f(x) \right\} \tag{1.70}$$
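The decomposition in Eqs (1.68)–(1.70) can be checked numerically for a small but finite $\varepsilon$. The sketch below is an illustration with assumed inputs: the test function $f(\omega) = \omega + 1$, the interval $[-1, 2]$, $\omega_0 = 0$ and $\varepsilon = 10^{-3}$ are chosen here because the principal value is then elementary, $PP \int (1 + 1/\omega)\, d\omega = (b - a) + \ln(b/|a|)$.

```python
import math

def regularized_integral(f, a, b, w0, eps, n=400_000):
    """Midpoint-rule value of  int_a^b f(w) / (w - w0 + i*eps) dw,  Eq. (1.68)."""
    h = (b - a) / n
    total = 0j
    for k in range(n):
        w = a + (k + 0.5) * h
        total += f(w) / complex(w - w0, eps)
    return total * h

f = lambda w: w + 1.0            # arbitrary smooth test function (an assumption)
a, b, w0, eps = -1.0, 2.0, 0.0, 1e-3

value = regularized_integral(f, a, b, w0, eps)
# For this f the principal value of Eq. (1.70) is elementary:
# PP int (1 + 1/w) dw = (b - a) + ln(b / |a|)
pp_exact = (b - a) + math.log(b / abs(a))
print(value.real, pp_exact)
print(value.imag, -math.pi * f(w0))
```

The real part approaches the principal value and the imaginary part approaches $-\pi f(\omega_0)$, with deviations of order $\varepsilon$ that vanish in the limit.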

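We sometimes express the information contained in Eqs (1.68)–(1.70) and (1.47) in the concise form

$$\frac{1}{\omega - \omega_0 + i\varepsilon} \xrightarrow{\varepsilon \to 0+} PP\, \frac{1}{\omega - \omega_0} - i\pi\, \delta(\omega - \omega_0) \tag{1.71}$$

1.1.7 Laplace transform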

A function $f(t)$ and its Laplace transform $\tilde{f}(z)$ are related by

$$\tilde{f}(z) = \int_0^{\infty} dt\, e^{-zt} f(t) \tag{1.72}$$

$$f(t) = \frac{1}{2\pi i} \int_{-\infty i + \varepsilon}^{\infty i + \varepsilon} dz\, e^{zt} \tilde{f}(z) \tag{1.73}$$

where $\varepsilon$ is chosen so that the integration path is to the right of all singularities of $\tilde{f}(z)$. In particular, if the singularities of $\tilde{f}(z)$ are all on the imaginary axis, $\varepsilon$ can be taken arbitrarily small, that is, the limit $\varepsilon \to 0+$ may be considered. We will see below that this is in fact the situation encountered in solving the time-dependent Schrödinger equation.
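The forward transform (1.72) is easy to evaluate numerically for real $z$, which gives a feel for the definition. The sketch below is a rough illustration under assumed parameters: it transforms $f(t) = e^{-\alpha t}$ with a truncated midpoint rule and compares the result with the standard closed form $1/(z + \alpha)$. The values of $\alpha$ and $z$, the cutoff `t_max`, and the grid size are choices made here.

```python
import math

def laplace_transform(f, z, t_max=40.0, n=200_000):
    """Approximate Eq. (1.72), int_0^inf e^{-z t} f(t) dt, by the midpoint rule.

    The upper limit is truncated at t_max, which is adequate when
    e^{-z t} f(t) has decayed to a negligible value by then.
    """
    h = t_max / n
    return h * sum(math.exp(-z * (k + 0.5) * h) * f((k + 0.5) * h) for k in range(n))

alpha, z = 0.7, 1.3
numeric = laplace_transform(lambda t: math.exp(-alpha * t), z)
exact = 1.0 / (z + alpha)        # standard transform of exp(-alpha t)
print(numeric, exact)
```

The single pole of this transform at $z = -\alpha$ lies to the left of the imaginary axis for $\alpha > 0$, which is the kind of singularity structure that determines the choice of $\varepsilon$ in Eq. (1.73).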

Laplace transforms are useful for initial value problems because of identities such as

$$\int_0^{\infty} dt\, e^{-zt}\, \frac{df}{dt} = [e^{-zt} f]_0^{\infty} + z \int_0^{\infty} dt\, e^{-zt} f(t) = z \tilde{f}(z) - f(t = 0) \tag{1.74}$$

and

$$\int_0^{\infty} dt\, e^{-zt}\, \frac{d^2 f}{dt^2} = z^2 \tilde{f}(z) - z f(t = 0) - f'(t = 0) \tag{1.75}$$

which are easily verified using integration by parts. As an example consider the equation

$$\frac{df}{dt} = -\alpha f \tag{1.76}$$

Taking the Laplace transform we get

$$z \tilde{f}(z) - f(0) = -\alpha \tilde{f}(z) \tag{1.77}$$

that is,

$$\tilde{f}(z) = (z + \alpha)^{-1} f(0) \quad \text{and} \quad f(t) = (2\pi i)^{-1} \int_{\varepsilon - i\infty}^{\varepsilon + i\infty} dz\, e^{zt} (z + \alpha)^{-1} f(0). \tag{1.78}$$

If $\alpha$ is real and positive $\varepsilon$ can be taken as 0, and evaluating the integral by closing a counter-clockwise contour on the negative-real half $z$ plane⁴ leads to

$$f(t) = e^{-\alpha t} f(0) \tag{1.79}$$

1.1.8 The Schwarz inequality

In its simplest form the Schwarz inequality expresses an obvious relation between the products of magnitudes of two real vectors $\mathbf{c}_1$ and $\mathbf{c}_2$ and their scalar product

$$c_1 c_2 \geq \mathbf{c}_1 \cdot \mathbf{c}_2 \tag{1.80}$$

It is less obvious to show that this inequality holds also for complex vectors, provided that the scalar product of two complex vectors $\mathbf{e}$ and $\mathbf{f}$ is defined by $\mathbf{e}^* \cdot \mathbf{f}$. The inequality is of the form

$$|\mathbf{e}||\mathbf{f}| \geq |\mathbf{e}^* \cdot \mathbf{f}| \tag{1.81}$$

Note that in the scalar product $\mathbf{e}^* \cdot \mathbf{f}$ the order is important, that is, $\mathbf{e}^* \cdot \mathbf{f} = (\mathbf{f}^* \cdot \mathbf{e})^*$.

⁴ The contour is closed at $z \to -\infty$ where the integrand is zero. In fact the integrand has to vanish faster than $z^{-2}$ as $z \to -\infty$ because the length of the added path diverges like $z^2$ in that limit.

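To prove the inequality (1.81) we start from

$$(a^* \mathbf{e}^* - b^* \mathbf{f}^*) \cdot (a \mathbf{e} - b \mathbf{f}) \geq 0 \tag{1.82}$$

which holds for any scalars $a$ and $b$. Using the choice

$$a = \sqrt{(\mathbf{f}^* \cdot \mathbf{f})(\mathbf{e}^* \cdot \mathbf{f})} \quad \text{and} \quad b = \sqrt{(\mathbf{e}^* \cdot \mathbf{e})(\mathbf{f}^* \cdot \mathbf{e})} \tag{1.83}$$

in Eq. (1.82) leads after some algebra to $\sqrt{(\mathbf{e}^* \cdot \mathbf{e})(\mathbf{f}^* \cdot \mathbf{f})} \geq |\mathbf{e}^* \cdot \mathbf{f}|$, which implies (1.81).

Equation (1.80) can be applied also to real functions that can be viewed as vectors with a continuous ordering index. We can make the identification $c_k = (\mathbf{c}_k \cdot \mathbf{c}_k)^{1/2} \Rightarrow (\int dx\, c_k^2(x))^{1/2}$; $k = 1, 2$ and $\mathbf{c}_1 \cdot \mathbf{c}_2 \Rightarrow \int dx\, c_1(x) c_2(x)$ to get

$$\int dx\, c_1^2(x) \int dx\, c_2^2(x) \geq \left( \int dx\, c_1(x) c_2(x) \right)^2 \tag{1.84}$$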

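The same development can be done for Hilbert space vectors. The result is

$$\langle \psi | \psi \rangle \langle \phi | \phi \rangle \geq |\langle \psi | \phi \rangle|^2 \tag{1.85}$$

where $\psi$ and $\phi$ are complex functions so that $\langle \psi | \phi \rangle = \int d\mathbf{r}\, \psi^*(\mathbf{r}) \phi(\mathbf{r})$. To prove Eq. (1.85) define a function $y(\mathbf{r}) = \psi(\mathbf{r}) + \lambda \phi(\mathbf{r})$ where $\lambda$ is a complex constant. The following inequality is obviously satisfied

$$\int d\mathbf{r}\, y^*(\mathbf{r})\, y(\mathbf{r}) \geq 0$$

This leads to

$$\int d\mathbf{r}\, \psi^*(\mathbf{r}) \psi(\mathbf{r}) + \lambda \int d\mathbf{r}\, \psi^*(\mathbf{r}) \phi(\mathbf{r}) + \lambda^* \int d\mathbf{r}\, \phi^*(\mathbf{r}) \psi(\mathbf{r}) + \lambda^* \lambda \int d\mathbf{r}\, \phi^*(\mathbf{r}) \phi(\mathbf{r}) \geq 0 \tag{1.86}$$

or

$$\langle \psi | \psi \rangle + \lambda \langle \psi | \phi \rangle + \lambda^* \langle \phi | \psi \rangle + |\lambda|^2 \langle \phi | \phi \rangle \geq 0 \tag{1.87}$$

Now choose

$$\lambda = -\frac{\langle \phi | \psi \rangle}{\langle \phi | \phi \rangle}; \qquad \lambda^* = -\frac{\langle \psi | \phi \rangle}{\langle \phi | \phi \rangle} \tag{1.88}$$

and also multiply (1.87) by $\langle \phi | \phi \rangle$ to get

$$\langle \psi | \psi \rangle \langle \phi | \phi \rangle - |\langle \psi | \phi \rangle|^2 - |\langle \phi | \psi \rangle|^2 + |\langle \psi | \phi \rangle|^2 \geq 0 \tag{1.89}$$

which leads to (1.85).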

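An interesting implication of the Schwarz inequality appears in the relationship between averages and correlations involving two observables $A$ and $B$. Let $P_n$ be the probability that the system is in state $n$ and let $A_n$ and $B_n$ be the values of these observables in this state. Then $\langle A^2 \rangle = \sum_n P_n A_n^2$, $\langle B^2 \rangle = \sum_n P_n B_n^2$, and $\langle AB \rangle = \sum_n P_n A_n B_n$. The Schwarz inequality now implies

$$\langle A^2 \rangle \langle B^2 \rangle \geq \langle AB \rangle^2 \tag{1.90}$$

Indeed, Eq. (1.90) is identical to Eq. (1.80) written in the form $(\mathbf{a} \cdot \mathbf{a})(\mathbf{b} \cdot \mathbf{b}) \geq (\mathbf{a} \cdot \mathbf{b})^2$ where $\mathbf{a}$ and $\mathbf{b}$ are the vectors $a_n = \sqrt{P_n} A_n$; $b_n = \sqrt{P_n} B_n$.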
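Equation (1.90) can be illustrated with a few randomly chosen states. In the sketch below the number of states, the probabilities $P_n$ and the values $A_n$, $B_n$ are arbitrary inputs generated here with a fixed seed; the second check uses $B_n \propto A_n$, the case in which the inequality becomes an equality.

```python
import random

def averages(P, A, B):
    """Return <A^2>, <B^2>, <AB> for state probabilities P_n and values A_n, B_n."""
    a2 = sum(p * a * a for p, a in zip(P, A))
    b2 = sum(p * b * b for p, b in zip(P, B))
    ab = sum(p * a * b for p, a, b in zip(P, A, B))
    return a2, b2, ab

rng = random.Random(1)
n_states = 8
weights = [rng.random() for _ in range(n_states)]
P = [w / sum(weights) for w in weights]          # normalized probabilities
A = [rng.uniform(-2, 2) for _ in range(n_states)]
B = [rng.uniform(-2, 2) for _ in range(n_states)]

a2, b2, ab = averages(P, A, B)
print(a2 * b2, ab ** 2)                          # Eq. (1.90): first >= second

# Equality holds when B_n is proportional to A_n
a2p, b2p, abp = averages(P, A, [3.0 * a for a in A])
print(a2p * b2p - abp ** 2)
```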

1.2 Classical mechanics

1.2.1 Classical equations of motion

Time evolution in classical mechanics is described by the Newton equations

$$\dot{\mathbf{r}}_i = \frac{1}{m_i} \mathbf{p}_i, \qquad \dot{\mathbf{p}}_i = \mathbf{F}_i = -\nabla_i U \tag{1.91}$$

Here $\mathbf{r}_i$ and $\mathbf{p}_i$ are the position and momentum vectors of particle $i$ of mass $m_i$, $\mathbf{F}_i$ is the force acting on the particle, $U$ is the potential, and $\nabla_i$ is the gradient with respect to the position of this particle. These equations of motion can be obtained from the Lagrangian

$$L = K(\{\dot{x}\}) - U(\{x\}) \tag{1.92}$$

where $K$ and $U$ are, respectively, the total kinetic and potential energies and $\{x\}$, $\{\dot{x}\}$ stand for all the position and velocity coordinates. The Lagrange equations of motion are

$$\frac{d}{dt} \frac{\partial L}{\partial \dot{x}_i} = \frac{\partial L}{\partial x_i} \quad \text{(and same for $y$, $z$)} \tag{1.93}$$

The significance of this form of the Newton equations is its invariance to coordinate transformation.

Another useful way to express the Newton equations of motion is in the Hamiltonian representation. One starts with the generalized momenta

$$p_j = \frac{\partial L}{\partial \dot{x}_j} \tag{1.94}$$

and defines the Hamiltonian according to

$$H = -\left[ L(\{x\}, \{\dot{x}\}) - \sum_j p_j \dot{x}_j \right] \tag{1.95}$$

The mathematical operation done in (1.95) transforms the function $L$ of variables $\{x\}$, $\{\dot{x}\}$ to a new function $H$ of the variables $\{x\}$, $\{p\}$.⁵ The resulting function, $H(\{x\}, \{p\})$, is the Hamiltonian, which is readily shown to satisfy

$$H = U + K \tag{1.96}$$

that is, it is the total energy of the system, and

$$\dot{x}_j = \frac{\partial H}{\partial p_j}; \qquad \dot{p}_j = -\frac{\partial H}{\partial x_j} \tag{1.97}$$

which is the Hamiltonian form of the Newton equations. In a many-particle system

the index j goes over all generalized positions and momenta of all particles.

The specification of all positions and momenta of all particles in the system

defines the dynamical state of the system. Any dynamical variable, that is, a function of these positions and momenta, can be computed given this state. Dynamical

variables are precursors of macroscopic observables that are defined as suitable

averages over such variables and calculated using the machinery of statistical

mechanics.
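To see Hamilton's equations (1.97) at work, the sketch below integrates a one-dimensional harmonic oscillator, $H = p^2/2m + kx^2/2$, and monitors the total energy of Eq. (1.96). The leapfrog (velocity Verlet) integrator, the unit mass and force constant, and the time step are choices made here for illustration; the text does not specify a numerical method.

```python
import math

def hamiltonian(x, p, m=1.0, k=1.0):
    """H = K + U = p^2/(2m) + (1/2) k x^2, cf. Eq. (1.96)."""
    return p * p / (2.0 * m) + 0.5 * k * x * x

def leapfrog(x, p, dt, steps, m=1.0, k=1.0):
    """Integrate x' = dH/dp = p/m, p' = -dH/dx = -k x, Eq. (1.97)."""
    for _ in range(steps):
        p -= 0.5 * dt * k * x        # half step in momentum
        x += dt * p / m              # full step in position
        p -= 0.5 * dt * k * x        # second half step in momentum
    return x, p

x0, p0, dt = 1.0, 0.0, 0.01
steps = round(2.0 * math.pi / dt)    # roughly one period for m = k = 1
x1, p1 = leapfrog(x0, p0, dt, steps)
print(x1, p1, hamiltonian(x0, p0), hamiltonian(x1, p1))
```

The phase point $(x, p)$ returns close to its starting value after one period and the energy, a dynamical variable in the sense used above, stays constant to within the small discretization error of the integrator.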

1.2.2 Phase space, the classical distribution function, and the Liouville equation

In what follows we will consider an $N$ particle system in Euclidean space. The classical equations of motion are written in the form

$$\dot{\mathbf{r}}^N = \frac{\partial H(\mathbf{r}^N, \mathbf{p}^N)}{\partial \mathbf{p}^N}; \qquad \dot{\mathbf{p}}^N = -\frac{\partial H(\mathbf{r}^N, \mathbf{p}^N)}{\partial \mathbf{r}^N} \tag{1.98}$$

which describe the time evolution of all coordinates and momenta in the system. In these equations $\mathbf{r}^N$ and $\mathbf{p}^N$ are the $3N$-dimensional vectors of coordinates and momenta of the $N$ particles. The $6N$-dimensional space whose axes are these coordinates and momenta is referred to as the phase space of the system. A phase point $(\mathbf{r}^N, \mathbf{p}^N)$ in this space describes the instantaneous state of the system. The probability distribution function $f(\mathbf{r}^N, \mathbf{p}^N; t)$ is defined such that $f(\mathbf{r}^N, \mathbf{p}^N; t)\, d\mathbf{r}^N d\mathbf{p}^N$ is the probability at time $t$ that the phase point will be inside the volume $d\mathbf{r}^N d\mathbf{p}^N$ in phase space. This implies that in an ensemble containing a large number $\mathcal{N}$ of identical systems the number of those characterized by positions and momenta within the $d\mathbf{r}^N d\mathbf{p}^N$ neighborhood is $\mathcal{N} f(\mathbf{r}^N, \mathbf{p}^N; t)\, d\mathbf{r}^N d\mathbf{p}^N$. Here and below we use a shorthand notation in which, for example, $d\mathbf{r}^N = d\mathbf{r}_1 d\mathbf{r}_2 \ldots d\mathbf{r}_N = dx_1 dx_2 dx_3 dx_4 dx_5 dx_6 \ldots dx_{3N-2}\, dx_{3N-1}\, dx_{3N}$ and $(\partial F/\partial \mathbf{r}^N)(\partial G/\partial \mathbf{p}^N) = \sum_{j=1}^{3N} (\partial F/\partial x_j)(\partial G/\partial p_j)$.

⁵ This type of transformation is called a Legendre transform.

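As the system evolves in time according to Eq. (1.98) the distribution function evolves accordingly. We want to derive an equation of motion for this distribution. To this end consider first any dynamical variable $A(\mathbf{r}^N, \mathbf{p}^N)$. Its time evolution is given by

$$\frac{dA}{dt} = \frac{\partial A}{\partial \mathbf{r}^N} \dot{\mathbf{r}}^N + \frac{\partial A}{\partial \mathbf{p}^N} \dot{\mathbf{p}}^N = \frac{\partial A}{\partial \mathbf{r}^N} \frac{\partial H}{\partial \mathbf{p}^N} - \frac{\partial A}{\partial \mathbf{p}^N} \frac{\partial H}{\partial \mathbf{r}^N} = \{H, A\} \equiv iLA; \qquad L = -i\{H, \;\} \tag{1.99}$$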

The second equality in Eq. (1.99) defines the Poisson brackets and $L$ is called the (classical) Liouville operator. Consider next the ensemble average $\langle A \rangle(t) = \langle A \rangle_t$ of the dynamical variable $A$. This average, a time-dependent observable, can be expressed in two ways that bring out two different, though equivalent, roles played by the function $A(\mathbf{r}^N, \mathbf{p}^N)$. First, it is a function in phase space that gets a distinct numerical value at each phase point. Its average at time $t$ is therefore given by

$$\langle A \rangle(t) = \int d\mathbf{r}^N \int d\mathbf{p}^N f(\mathbf{r}^N, \mathbf{p}^N; t)\, A(\mathbf{r}^N, \mathbf{p}^N) \tag{1.100}$$

At the same time the value of $A$ at time $t$ is given by $A(\mathbf{r}^N(t), \mathbf{p}^N(t))$ and is determined uniquely by the initial conditions $(\mathbf{r}^N(0), \mathbf{p}^N(0))$. Therefore,

$$\langle A \rangle(t) = \int d\mathbf{r}^N \int d\mathbf{p}^N f(\mathbf{r}^N, \mathbf{p}^N; 0)\, A(\mathbf{r}^N(t), \mathbf{p}^N(t)) \tag{1.101}$$

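An equation of motion for $f$ can be obtained by equating the time derivatives of Eqs (1.100) and (1.101):

$$\int d\mathbf{r}^N \int d\mathbf{p}^N\, \frac{\partial f(\mathbf{r}^N, \mathbf{p}^N; t)}{\partial t}\, A(\mathbf{r}^N, \mathbf{p}^N) = \int d\mathbf{r}^N \int d\mathbf{p}^N f(\mathbf{r}^N, \mathbf{p}^N; 0)\, \frac{dA}{dt} = \int d\mathbf{r}^N \int d\mathbf{p}^N f(\mathbf{r}^N, \mathbf{p}^N; 0) \left( \frac{\partial A}{\partial \mathbf{r}^N} \frac{\partial H}{\partial \mathbf{p}^N} - \frac{\partial A}{\partial \mathbf{p}^N} \frac{\partial H}{\partial \mathbf{r}^N} \right) \tag{1.102}$$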

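Using integration by parts while assuming that $f$ vanishes at the boundary of phase space, the right-hand side of (1.102) may be transformed according to

$$\int d\mathbf{r}^N \int d\mathbf{p}^N f(\mathbf{r}^N, \mathbf{p}^N; 0) \left( \frac{\partial A}{\partial \mathbf{r}^N} \frac{\partial H}{\partial \mathbf{p}^N} - \frac{\partial A}{\partial \mathbf{p}^N} \frac{\partial H}{\partial \mathbf{r}^N} \right) = \int d\mathbf{r}^N \int d\mathbf{p}^N \left( \frac{\partial H}{\partial \mathbf{r}^N} \frac{\partial f}{\partial \mathbf{p}^N} - \frac{\partial H}{\partial \mathbf{p}^N} \frac{\partial f}{\partial \mathbf{r}^N} \right) A(\mathbf{r}^N, \mathbf{p}^N) = \int d\mathbf{r}^N \int d\mathbf{p}^N\, (-iLf)\, A(\mathbf{r}^N, \mathbf{p}^N) \tag{1.103}$$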

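Comparing this to the left-hand side of Eq. (1.102) we get

$$\frac{\partial f(\mathbf{r}^N, \mathbf{p}^N; t)}{\partial t} = -iLf = -\sum_{j=1}^{3N} \left( \frac{\partial f}{\partial x_j} \frac{\partial H}{\partial p_j} - \frac{\partial f}{\partial p_j} \frac{\partial H}{\partial x_j} \right) = -\sum_{j=1}^{3N} \left( \frac{\partial f}{\partial x_j} \dot{x}_j + \frac{\partial f}{\partial p_j} \dot{p}_j \right) \tag{1.104}$$

This is the classical Liouville equation. An alternative derivation of this equation that sheds additional light on the nature of the phase space distribution function $f(\mathbf{r}^N, \mathbf{p}^N; t)$ is given in Appendix 1A.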

An important attribute of the phase space distribution function $f$ is that it is globally constant. Let us see first what this statement means mathematically. Using

$$\frac{d}{dt} = \frac{\partial}{\partial t} + \dot{\mathbf{r}}^N \frac{\partial}{\partial \mathbf{r}^N} + \dot{\mathbf{p}}^N \frac{\partial}{\partial \mathbf{p}^N} = \frac{\partial}{\partial t} + \frac{\partial H}{\partial \mathbf{p}^N} \frac{\partial}{\partial \mathbf{r}^N} - \frac{\partial H}{\partial \mathbf{r}^N} \frac{\partial}{\partial \mathbf{p}^N} = \frac{\partial}{\partial t} + iL \tag{1.105}$$

Equation (1.104) implies that

$$\frac{df}{dt} = 0 \tag{1.106}$$

that is, $f$ has to satisfy the following identity:

$$f(\mathbf{r}^N(0), \mathbf{p}^N(0); t = 0) = f(\mathbf{r}^N(t), \mathbf{p}^N(t); t) \tag{1.107}$$

As the ensemble of systems evolves in time, each phase point moves along the trajectory $(\mathbf{r}^N(t), \mathbf{p}^N(t))$. Equation (1.107) states that the density of phase points appears constant when observed along the trajectory.

Another important outcome of these considerations is the following. The uniqueness of solutions of the Newton equations of motion implies that phase point

trajectories do not cross. If we follow the motions of phase points that started at a

given volume element in phase space we will therefore see all these points evolving

in time into an equivalent volume element, not necessarily of the same geometrical

shape. The number of points in this new volume is the same as the original one, and

Eq. (1.107) implies that also their density is the same. Therefore, the new volume

(again, not necessarily the shape) is the same as the original one. If we think of this

set of points as molecules of some multidimensional fluid, the nature of the time

evolution implies that this fluid is totally incompressible. Equation (1.107) is the

mathematical expression of this incompressibility property.
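The incompressibility of the phase-space flow can be illustrated for a single degree of freedom, where a "volume element" is simply an area in the $(x, p)$ plane. The sketch below follows the three corners of a very small triangle of phase points for a pendulum, $H = p^2/2 - \cos x$, and compares the triangle's area before and after. The choice of system, the leapfrog integrator, and the patch size are assumptions made here; the triangle must be small because a finite patch is distorted by the nonlinear flow and its straight-edged area then only approximates the true one.

```python
import math

def evolve(x, p, dt=1e-3, steps=5000):
    """Leapfrog trajectory for the pendulum H = p^2/2 - cos(x) (m = 1)."""
    for _ in range(steps):
        p -= 0.5 * dt * math.sin(x)
        x += dt * p
        p -= 0.5 * dt * math.sin(x)
    return x, p

def triangle_area(a, b, c):
    """Area of the triangle with vertices a, b, c in the (x, p) plane."""
    return 0.5 * abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1]))

d = 1e-5                                       # small, so the patch stays nearly flat
start = [(1.0, 0.0), (1.0 + d, 0.0), (1.0, d)]
end = [evolve(x, p) for x, p in start]
area_start = triangle_area(*start)
area_end = triangle_area(*end)
print(area_start, area_end)
```

The triangle is sheared and rotated by the motion, but its area is unchanged to within the small error caused by the finite patch size.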

1.3 Quantum mechanics

In quantum mechanics the state of a many-particle system is represented by a wavefunction $\Psi(\mathbf{r}^N, t)$, observables correspond to hermitian operators⁶ and results of measurements are represented by expectation values of these operators,

$$\langle A \rangle(t) = \langle \Psi(\mathbf{r}^N, t) | \hat{A} | \Psi(\mathbf{r}^N, t) \rangle = \int d\mathbf{r}^N\, \Psi^*(\mathbf{r}^N, t)\, \hat{A}\, \Psi(\mathbf{r}^N, t) \tag{1.108}$$

When $\hat{A}$ is substituted with the unity operator, Eq. (1.108) shows that acceptable wavefunctions should be normalized to 1, that is, $\langle \psi | \psi \rangle = 1$. A central problem is the calculation of the wavefunction, $\Psi(\mathbf{r}^N, t)$, that describes the time-dependent state of the system. This wavefunction is the solution of the time-dependent Schrödinger equation

$$\frac{\partial \Psi}{\partial t} = -\frac{i}{\hbar} \hat{H} \Psi \tag{1.109}$$

where the Hamiltonian $\hat{H}$ is the operator that corresponds to the energy observable, and in analogy to Eq. (1.96) is given by

$$\hat{H} = \hat{K} + \hat{U}(\mathbf{r}^N) \tag{1.110}$$

⁶ The hermitian conjugate of an operator $\hat{A}$ is the operator $\hat{A}^\dagger$ that satisfies $\int d\mathbf{r}^N \phi^*(\mathbf{r}^N)\, \hat{A} \psi(\mathbf{r}^N) = \int d\mathbf{r}^N (\hat{A}^\dagger \phi(\mathbf{r}^N))^* \psi(\mathbf{r}^N)$ for all $\phi$ and $\psi$ in the Hilbert space of the system.

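In the so-called coordinate representation the potential energy operator $\hat{U}$ amounts to a simple product, that is, $\hat{U}(\mathbf{r}^N) \Psi(\mathbf{r}^N, t) = U(\mathbf{r}^N) \Psi(\mathbf{r}^N, t)$ where $U(\mathbf{r}^N)$ is the classical potential energy. The kinetic energy operator is given in cartesian coordinates by

$$\hat{K} = -\hbar^2 \sum_{j=1}^{N} \frac{1}{2m_j} \nabla_j^2 \tag{1.111}$$

where the Laplacian operator is defined by

$$\nabla_j^2 = \frac{\partial^2}{\partial x_j^2} + \frac{\partial^2}{\partial y_j^2} + \frac{\partial^2}{\partial z_j^2} \tag{1.112}$$

Alternatively Eq. (1.111) can be written in the form

$$\hat{K} = \sum_{j=1}^{N} \frac{\hat{p}_j^2}{2m_j} = \sum_{j=1}^{N} \frac{\hat{\mathbf{p}}_j \cdot \hat{\mathbf{p}}_j}{2m_j} \tag{1.113}$$

where the momentum vector operator is

$$\hat{\mathbf{p}}_j = \frac{\hbar}{i} \left( \frac{\partial}{\partial x_j}, \frac{\partial}{\partial y_j}, \frac{\partial}{\partial z_j} \right); \qquad i = \sqrt{-1} \tag{1.114}$$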

The solution of Eq. (1.109) can be written in the form

$$\Psi(\mathbf{r}^N, t) = \sum_n \psi_n(\mathbf{r}^N)\, e^{-iE_n t/\hbar} \tag{1.115}$$

where $\psi_n$ and $E_n$ are solutions of the time-independent Schrödinger equation

$$\hat{H} \psi_n = E_n \psi_n \tag{1.116}$$

Equation (1.116) is an eigenvalue equation, and ψn and En are eigenfunctions and

corresponding eigenvalues of the Hamiltonian. If at time t = 0 the system is in a

state which is one of these eigenfunctions, that is,

$$\Psi(\mathbf{r}^N, t = 0) = \psi_n(\mathbf{r}^N) \tag{1.117}$$

then its future (and past) evolution is obtained from (1.109) to be

$$\Psi(\mathbf{r}^N, t) = \psi_n(\mathbf{r}^N)\, e^{-iE_n t/\hbar} \tag{1.118}$$

Equation (1.108) then implies that all observables are constant in time, and the

eigenstates of Ĥ thus constitute stationary states of the system.
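The stationary character of an eigenstate can be checked numerically. The sketch below is a minimal illustration under assumptions that are not part of the text: a one-dimensional harmonic potential, units $\hbar = m = 1$, a finite-difference Hamiltonian matrix on a uniform grid, and explicit expansion coefficients `coeffs` that weight the terms in the sum over eigenfunctions. It compares an expectation value in a single eigenstate with one in an equal superposition of the two lowest eigenstates.

```python
import numpy as np

HBAR = 1.0  # assumption: units with hbar = m = 1
MASS = 1.0


def hamiltonian_matrix(x, potential):
    """Finite-difference matrix for H = K + U on a uniform grid."""
    dx = x[1] - x[0]
    n = x.size
    off = np.ones(n - 1)
    laplacian = (np.diag(off, -1) - 2.0 * np.eye(n) + np.diag(off, 1)) / dx**2
    return -(HBAR**2) / (2.0 * MASS) * laplacian + np.diag(potential)


def evolve(coeffs, energies, states, t):
    """Psi(t) = sum_n c_n psi_n exp(-i E_n t / hbar)."""
    phases = np.exp(-1j * energies * t / HBAR)
    return states @ (coeffs * phases)


def expectation(psi, values, dx):
    """<Psi|A|Psi> for an operator that is a plain function of position."""
    return float(np.real(np.sum(np.conj(psi) * values * psi) * dx))


x = np.linspace(-8.0, 8.0, 401)
dx = x[1] - x[0]
energies, states = np.linalg.eigh(hamiltonian_matrix(x, 0.5 * x**2))
states = states / np.sqrt(dx)  # so that sum(|psi_n|^2) * dx = 1

times = np.linspace(0.0, 2.0 * np.pi, 50)

# Initial state equal to a single eigenfunction (the lowest one).
ground = np.zeros(energies.size)
ground[0] = 1.0
x2_stationary = [expectation(evolve(ground, energies, states, t), x**2, dx)
                 for t in times]

# Equal superposition of the two lowest eigenfunctions.
mixed = np.zeros(energies.size)
mixed[0] = mixed[1] = 1.0 / np.sqrt(2.0)
x_mixed = [expectation(evolve(mixed, energies, states, t), x, dx)
           for t in times]

print("spread of <x^2> in an eigenstate:  ", max(x2_stationary) - min(x2_stationary))
print("spread of <x> in the superposition:", max(x_mixed) - min(x_mixed))
```

In the single eigenstate the time-dependent phase cancels between $\Psi^*$ and $\Psi$, so the expectation value does not change beyond rounding error. In the superposition the two phases differ, and the mean position oscillates at the frequency $(E_1 - E_0)/\hbar$.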

The set of energy eigenvalues of a given Hamiltonian, that is, the energies that characterize the stationary states of the corresponding system, is called the spectrum of the Hamiltonian and plays a critical role in both equilibrium and dynamical properties of the system. Some elementary examples of single-particle Hamiltonian spectra are:

A particle of mass m in a one-dimensional box of infinite depth and width a,

$$E_n = \frac{(2\pi\hbar)^2 n^2}{8ma^2}; \qquad n = 1, 2, \ldots \tag{1.119}$$

A particle of mass m moving in a one-dimensional harmonic potential $U(x) = (1/2)kx^2$,

$$E_n = \hbar\omega\left(n + \frac{1}{2}\right); \qquad n = 0, 1, \ldots; \qquad \omega = \sqrt{k/m} \tag{1.120}$$

A rigid rotator with moment of inertia I ,

$$E_n = \frac{n(n+1)\hbar^2}{2I}; \qquad w_n = 2n + 1 \tag{1.121}$$

where wn is the degeneracy of level n. Degeneracy is the number of different

eigenfunctions that have the same eigenenergy.
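The three spectra above are simple enough to evaluate directly. The following sketch tabulates Eqs. (1.119)–(1.121) and, as a consistency check, compares the box levels with the lowest eigenvalues of a finite-difference kinetic energy matrix whose wavefunctions vanish at the walls. The units ($\hbar = 1$), the function names and the grid size are assumptions made for this illustration.

```python
import numpy as np

HBAR = 1.0  # assumption: units with hbar = 1


def box_levels(n, mass, width):
    """Eq. (1.119): E_n = (2 pi hbar)^2 n^2 / (8 m a^2), n = 1, 2, ..."""
    n = np.asarray(n, dtype=float)
    return (2.0 * np.pi * HBAR) ** 2 * n**2 / (8.0 * mass * width**2)


def oscillator_levels(n, mass, k):
    """Eq. (1.120): E_n = hbar omega (n + 1/2), omega = sqrt(k/m), n = 0, 1, ..."""
    omega = np.sqrt(k / mass)
    return HBAR * omega * (np.asarray(n, dtype=float) + 0.5)


def rotator_levels(n, inertia):
    """Eq. (1.121): energies n(n+1) hbar^2 / (2I) and degeneracies 2n + 1."""
    n = np.asarray(n)
    energies = n * (n + 1) * HBAR**2 / (2.0 * inertia)
    degeneracies = 2 * n + 1
    return energies, degeneracies


def box_levels_numeric(count, mass, width, points=500):
    """Lowest eigenvalues of -hbar^2/(2m) d^2/dx^2 with psi = 0 at both walls."""
    dx = width / (points + 1)  # only interior grid points are kept
    main = np.full(points, 2.0)
    off = np.full(points - 1, -1.0)
    stencil = np.diag(main) + np.diag(off, 1) + np.diag(off, -1)
    kinetic = HBAR**2 / (2.0 * mass * dx**2) * stencil
    return np.linalg.eigvalsh(kinetic)[:count]


analytic_box = box_levels([1, 2, 3], mass=1.0, width=1.0)
numeric_box = box_levels_numeric(3, mass=1.0, width=1.0)
rotor_energies, rotor_degeneracies = rotator_levels(np.arange(3), inertia=1.0)

print("box, Eq. (1.119):      ", analytic_box)
print("box, finite difference:", numeric_box)
print("oscillator levels:     ", oscillator_levels([0, 1, 2], mass=1.0, k=1.0))
print("rotator levels, w_n:   ", rotor_energies, rotor_degeneracies)
```

The finite-difference values approach Eq. (1.119) from below as the grid is refined, with a discretization error that grows with n for a fixed number of grid points.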

An important difference between quantum and classical mechanics is that in classical mechanics stationary states exist at all energies, while in quantum mechanics of finite systems the spectrum is discrete, as shown in the examples above. This difference disappears when the system becomes large. Even for a single-particle system, the spacings between allowed energy levels become increasingly smaller as the spatial extent accessible to the system increases, as seen, for example,


in Eq. (1.119) in the limit of large a. This effect is tremendously amplified when

the number of degrees of freedom increases. For example the three-dimensional

analog of (1.119), that is, the spectrum of the Hamiltonian describing a particle in

a three-dimensional infinitely deep rectangular box of side lengths a, b, c is

n2y