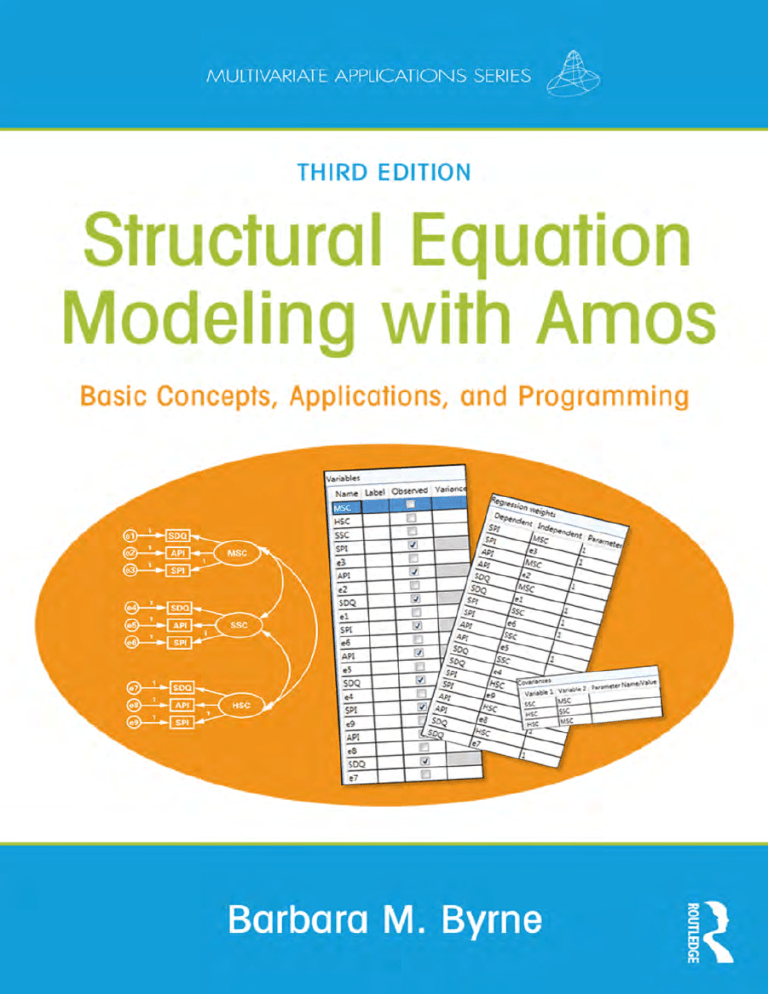

Structural Equation Modeling with Amos: Basic Concepts, Applications, and Programming
