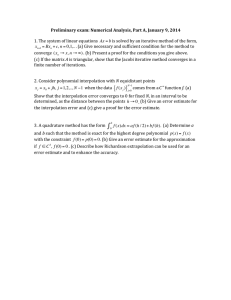

Interpolation and Curve Fitting

Introduction
• Curve fitting is the process of constructing a curve, or mathematical function, that has the best fit to a series of data points, possibly subject to constraints.
• Interpolation, a special case of curve fitting, requires an exact fit to the data.
• Interpolation methods attempt to answer questions about the value of f(x) at points other than the ones at which it was sampled.
• Interpolation and curve fitting have several uses:
  • as an aid for data visualization,
  • to infer values of a function where no data are available,
  • to summarize the relationships among two or more variables.
• The question of how to reconstruct a smooth function f(x) that agrees with a number of collected sample values is not straightforward.
• There is more than one way to accomplish this task.
• It is not trivial to argue that any particular one of these alternatives is "better" without some knowledge of the nature of f(x) or of the purpose the reconstructed function will serve.
• A discontinuous approximation may appear awkward and not as well-behaved.
• However, the real function f(x) being sampled could have been just as discontinuous to begin with.
• Sometimes we know that the real f(x) is supposed to have some degree of smoothness.
• For example, if f(t) is the position of a vehicle moving on a highway, we would expect f(t) and f'(t) (velocity), and possibly even f''(t) (acceleration), to be continuous functions of time.

Figure: polynomial curves fitted to points generated with a sine function.
• The black dotted line is the "true" data.
• The red line is a first-degree polynomial.
• The green line is second degree.
• The orange line is third degree.
• The blue line is fourth degree.

Polynomial Interpolation
• We are given a set of n + 1 data points $(x_0, y_0), (x_1, y_1), \dots, (x_j, y_j), \dots, (x_n, y_n)$.
• A commonly used approach is to use a properly crafted polynomial function
$$P_n(x) = a_0 + a_1 x + a_2 x^2 + \dots + a_n x^n$$
• Benefits:
  • Polynomials are easy to evaluate.
  • Their derivatives are easy to compute.
  • The coefficients $a_i$ are easy to obtain.
• If there is only one data point, a degree-0 polynomial $P_0(x) = a_0$ can be used.
• If n = 1, we have two points $(x_0, y_0)$ and $(x_1, y_1)$. A degree-0 polynomial $P_0(x) = a_0$ will not always be able to pass through both, but a degree-1 polynomial $P_1(x) = a_0 + a_1 x$ always can.
• Higher-order polynomials can be used.
• However, the lowest-order polynomial that provides the required interpolation should be used.
• For n + 1 observations with distinct x values, a polynomial of order n can interpolate all of them.

Lagrange Interpolation
• Lagrange interpolation is an alternative way to define $P_n(x)$ without having to solve an expensive system of equations.
• For a given set of n + 1 points $(x_0, y_0), (x_1, y_1), \dots, (x_n, y_n)$, define the Lagrange polynomials of degree n, $l_0(x), l_1(x), \dots, l_n(x)$, by
$$l_i(x_j) = \begin{cases} 1, & j = i \\ 0, & j \neq i \end{cases}$$
• Then the interpolating polynomial is simply
$$P_n(x) = \sum_{i=0}^{n} y_i \, l_i(x)$$
• Note that no solution of a linear system is required here.
• Since $l_i(x)$ is a degree-n polynomial with the n roots $x_0, x_1, \dots, x_{i-1}, x_{i+1}, \dots, x_n$, it must have the form
$$l_i(x) = C_i \prod_{j \neq i} (x - x_j)$$
• Since $l_i(x_i) = 1$,
$$C_i = \frac{1}{\prod_{j \neq i} (x_i - x_j)}$$
• Thus,
$$l_i(x) = \prod_{j \neq i} \frac{x - x_j}{x_i - x_j}$$
• Determining the interpolating function is very cheap: we can essentially write down a formula for $P_n(x)$ without solving any system.
• If the polynomial has to be written in the standard form $a_0 + a_1 x + \dots + a_n x^n$, the cost of obtaining the coefficients is very high.
• The cost of evaluating the interpolant at an arbitrary x is significant: each $l_i(x)$ needs n subtractions and n multiplications, so about $n^2$ operations are needed in total.
• Derivatives are not easily available.
• Incremental interpolation is not easily accommodated, because each of the Lagrange polynomials has to be recomputed when a data point is added.
• Example: use Lagrange interpolation to compute an interpolating polynomial for (−2, −27), (0, −1), (1, 0).

Newton Interpolation
• The Newton interpolation polynomial is a linear combination of Newton basis polynomials:
$$N(x) = \sum_{j=0}^{n} a_j \, \pi_j(x)$$
• The Newton basis polynomials are
$$\pi_j(x) = \prod_{i=0}^{j-1} (x - x_i), \quad \text{with } \pi_0(x) = 1$$
• From the definition, we see that $\pi_j(x_i) = 0$ for i < j.
• Therefore, the coefficients can be obtained one after another. For the case of three data points:
$$\begin{aligned} y_0 &= a_0 \\ y_1 &= a_0 + a_1 (x_1 - x_0) \\ y_2 &= a_0 + a_1 (x_2 - x_0) + a_2 (x_2 - x_0)(x_2 - x_1) \end{aligned}$$
• Example: determine the interpolating polynomial for the three data points (−2, −27), (0, −1), (1, 0).
  • a = (−27, 13, −4)
  • The polynomial is then
$$N(x) = -27 + 13(x + 2) - 4(x + 2)x = -4x^2 + 5x - 1$$
• Incremental construction
  • The Newton basis functions can be derived by considering the problem of building a polynomial interpolant incrementally as successive new data points are added. Here is the basic idea:
  • Step 0: Define a degree-0 polynomial $P_0(x)$ that just interpolates $(x_0, y_0)$.
  • Step 1: Define a degree-1 polynomial $P_1(x)$ that now interpolates both $(x_0, y_0)$ and $(x_1, y_1)$.
  • Step 2: Now construct $P_2(x)$, which interpolates the three points $(x_0, y_0)$, $(x_1, y_1)$, $(x_2, y_2)$.
  • Step k: Repeat this procedure.
• If $x_0, x_1, \dots, x_k$ are arranged consecutively with equal spacing h, so that $x_i = x_0 + ih$, then writing $x = x_0 + sh$ the Newton polynomial can be expressed in a simplified form as
$$N(x) = \sum_{i=0}^{k} a_i \, h^i \, s(s-1)\cdots(s-i+1) = \sum_{i=0}^{k} \binom{s}{i} \, i! \, h^i \, a_i$$

Spline Interpolation
• Polynomial interpolation may exhibit oscillatory artifacts, especially near the end points (known as Runge's phenomenon).
• More generally, the shape of the resulting curve, especially for very high or low values of the independent variable, may be contrary to common sense.
• These disadvantages can be reduced by using spline interpolation or Chebyshev polynomials.
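
The Lagrange and Newton forms, and the oscillation near the end points described above, can be tried out numerically. The following is a minimal Python sketch, not a reference implementation. The function names and the test function 1/(1 + 25x²) on [−1, 1] with equally spaced nodes are illustrative assumptions that do not come from the slides. The first part reproduces the coefficients a = (−27, 13, −4) of the three-point example.

```python
def lagrange_eval(xs, ys, x):
    """Evaluate the interpolating polynomial at x using the Lagrange form."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        li = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                li *= (x - xj) / (xi - xj)  # one factor of l_i(x)
        total += yi * li
    return total


def newton_coefficients(xs, ys):
    """Return a_0..a_n of the Newton form, computed by divided differences."""
    coeffs = [float(y) for y in ys]
    n = len(xs)
    for level in range(1, n):
        # Work from the bottom up so lower-order differences are still intact.
        for i in range(n - 1, level - 1, -1):
            coeffs[i] = (coeffs[i] - coeffs[i - 1]) / (xs[i] - xs[i - level])
    return coeffs


def newton_eval(xs, coeffs, x):
    """Evaluate a_0 + a_1 (x - x_0) + ... by nested multiplication."""
    result = coeffs[-1]
    for k in range(len(coeffs) - 2, -1, -1):
        result = result * (x - xs[k]) + coeffs[k]
    return result


def runge(x):
    """Assumed test function, a common choice for showing end-point oscillation."""
    return 1.0 / (1.0 + 25.0 * x * x)


def max_interpolation_error(n, samples=400):
    """Largest error on [-1, 1] when interpolating runge at n + 1 equispaced nodes."""
    xs = [-1.0 + 2.0 * i / n for i in range(n + 1)]
    coeffs = newton_coefficients(xs, [runge(x) for x in xs])
    grid = [-1.0 + 2.0 * k / samples for k in range(samples + 1)]
    return max(abs(newton_eval(xs, coeffs, t) - runge(t)) for t in grid)


if __name__ == "__main__":
    pts_x, pts_y = [-2, 0, 1], [-27, -1, 0]
    print("Newton coefficients:", newton_coefficients(pts_x, pts_y))
    print("Lagrange value at x = 2:", lagrange_eval(pts_x, pts_y, 2.0))
    for n in (5, 10, 20):
        print("degree", n, "max error", max_interpolation_error(n))
```

Both forms describe the same polynomial, so `lagrange_eval` and `newton_eval` should agree up to rounding. With these assumed equispaced nodes, the maximum error grows as the degree increases, which is the behaviour that motivates splines.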
• The solution is to connect subsets of the data points with lower-order polynomials, since lower-order polynomials avoid oscillations and overshoots.
• Spline interpolation uses several formulas; each formula is a low-degree polynomial, and together they pass through all the data points.
• The resulting functions are called splines.
• Spline interpolation is preferred over polynomial interpolation because the interpolation error can be made small even when using low-degree polynomials for the spline.
• For i = 1, …, n, interpolate between each pair of knots $(x_{i-1}, y_{i-1})$ and $(x_i, y_i)$ with a polynomial $y = q_i(x)$.
• For the spline to take a smooth shape (minimizing the bending), both the first derivative y' and the second derivative y'' should be continuous everywhere, including at the knots.
• Therefore, $q'_i(x_i) = q'_{i+1}(x_i)$ and $q''_i(x_i) = q''_{i+1}(x_i)$ for 1 ≤ i ≤ n − 1.
• This can only be achieved if the polynomials are of degree 3 or higher.

Cubic Spline Interpolation
• The goal of cubic spline interpolation is to get an interpolation formula that is continuous in both the first and second derivatives, both within the intervals and at the interpolating nodes.
• This gives a smoother interpolating function.
• Continuity of the first derivative means that the graph y = S(x) will not have sharp corners.
• Continuity of the second derivative means that the radius of curvature is defined at each point.
• Suppose we are given the n data points $(x_1, y_1), \dots, (x_n, y_n)$, where the $x_i$ are distinct and in increasing order.
• A cubic spline S(x) through the data points $(x_1, y_1), \dots, (x_n, y_n)$ is a set of cubic polynomials, one for each interval between consecutive data points.
• To obtain the coefficients b, c, d of each cubic piece, the values of the spline and of its first and second derivatives at the data points are used.
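
As a rough illustration of how such coefficients can be computed, the sketch below makes two assumptions that the slides do not state. First, each piece is written as S_i(x) = y_i + b_i (x − x_i) + c_i (x − x_i)² + d_i (x − x_i)³; the slides only name the coefficients b, c, d. Second, the second derivative is set to zero at both end points, which is one common way to close the system. The function names are illustrative, and the resulting tridiagonal system for the c coefficients is solved with a standard forward sweep and back substitution.

```python
from bisect import bisect_right
from math import sin


def natural_cubic_spline(xs, ys):
    """Return lists (b, c, d), one entry per interval, for increasing xs."""
    n = len(xs) - 1                      # number of cubic pieces
    h = [xs[i + 1] - xs[i] for i in range(n)]

    # Right-hand side of the tridiagonal system for the c coefficients.
    alpha = [0.0] * (n + 1)
    for i in range(1, n):
        alpha[i] = 3.0 * ((ys[i + 1] - ys[i]) / h[i] - (ys[i] - ys[i - 1]) / h[i - 1])

    # Forward sweep; the first and last rows encode S'' = 0 at the end points.
    diag = [1.0] * (n + 1)
    mu = [0.0] * (n + 1)
    z = [0.0] * (n + 1)
    for i in range(1, n):
        diag[i] = 2.0 * (xs[i + 1] - xs[i - 1]) - h[i - 1] * mu[i - 1]
        mu[i] = h[i] / diag[i]
        z[i] = (alpha[i] - h[i - 1] * z[i - 1]) / diag[i]

    # Back substitution for c, then b and d from the continuity conditions.
    c = [0.0] * (n + 1)
    b = [0.0] * n
    d = [0.0] * n
    for j in range(n - 1, -1, -1):
        c[j] = z[j] - mu[j] * c[j + 1]
        b[j] = (ys[j + 1] - ys[j]) / h[j] - h[j] * (c[j + 1] + 2.0 * c[j]) / 3.0
        d[j] = (c[j + 1] - c[j]) / (3.0 * h[j])
    return b, c[:n], d


def spline_eval(xs, ys, coeffs, x):
    """Evaluate the spline at x; points outside the data use the nearest piece."""
    b, c, d = coeffs
    i = min(max(bisect_right(xs, x) - 1, 0), len(b) - 1)
    t = x - xs[i]
    return ys[i] + t * (b[i] + t * (c[i] + t * d[i]))


if __name__ == "__main__":
    knots = [float(k) for k in range(11)]
    values = [sin(k) for k in knots]
    coeffs = natural_cubic_spline(knots, values)
    for q in (0.25, 2.5, 7.75):
        print(q, spline_eval(knots, values, coeffs, q), sin(q))
```

The sample data in the `__main__` block (sin(x) at x = 0, 1, …, 10) is only there to compare spline values with the sampled function at a few points between the knots.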
• n data points give n − 1 cubic pieces, so there are:
  • 3(n − 1) unknowns (the coefficients b, c, d of each piece),
  • n − 1 equations from matching the data at the right end of each piece,
  • n − 2 equations from continuity of the first derivative at the interior points,
  • n − 2 equations from continuity of the second derivative at the interior points.
• This leaves 3(n − 1) − (3n − 5) = 2 free conditions.
  • Natural cubic spline: the second derivative is zero at the end points.
  • Clamped cubic spline: the first derivative at the end points is prescribed.
• Spline interpolation via MATLAB
  • yy = spline(x,Y,xx) uses a cubic spline interpolation to find yy, the values of the underlying function Y at the values of the interpolant xx. For the interpolation, the independent variable is assumed to be the final dimension of Y with the breakpoints defined by x. The values in x must be distinct.
  • pp = spline(x,Y) returns the piecewise polynomial form of the cubic spline interpolant for later use with ppval and the spline utility unmkpp. x must be a vector with distinct values. Y can be a scalar, a vector, or an array of any dimension.
• Example:
    x = 0:10;
    y = sin(x);
    xx = 0:0.25:10;
    yy = spline(x,y,xx);
    plot(x,y,'o',xx,yy)

Polynomial Regression
• When the data need not be matched exactly, a better description of the relationship between the variables can be obtained by using a measure of best fit instead of exact matching of the observations.
• Polynomial regression aims at finding the coefficients of a polynomial of a given degree that provide the best fit.
• Polynomial models are very powerful for handling nonlinearity, because polynomials can approximate continuous functions on a bounded interval to within any given precision.
• The coefficients are chosen to minimize the mean square of the error.
• Polynomial order
  • Note that it is always possible to fit a polynomial model of order n − 1 perfectly to a data set of n points.
  • However, this will almost surely be overfitting!
  • The simplest possible model that is consistent with the data and knowledge of the problem should be used, that is, the smallest order possible.
• Model-building strategy
  • Forward selection: successively fit models of increasing order until the t test for the highest-order term is not significant.
  • Backward elimination: fit the appropriate highest-order model and then delete terms one at a time, starting with the highest order, until the highest-order remaining term has a significant t statistic.
  • Interestingly, these two procedures do not necessarily lead to the same model.
• Matrix form and calculation of estimates
  • For n observations and a polynomial of degree m (n > m), the polynomial regression model can be expressed in matrix form as
$$\mathbf{y} = X\mathbf{a} + \boldsymbol{\varepsilon}, \qquad X_{ij} = x_i^{\,j}, \quad i = 1, \dots, n, \quad j = 0, \dots, m$$
  • The least-squares estimate of the coefficients is
$$\hat{\mathbf{a}} = (X^{T} X)^{-1} X^{T} \mathbf{y}$$
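
A least-squares polynomial fit can be sketched in a few lines of Python. The version below is a hedged illustration: it builds the normal equations (XᵀX)a = Xᵀy, where X holds the powers of the x values, and solves them by Gaussian elimination with partial pivoting. The function names and sample data are assumptions made for this example. For high degrees or badly scaled data the normal equations can become ill-conditioned, so a QR-based solver from a numerical library is usually a safer choice in practice.

```python
def fit_polynomial(xs, ys, degree):
    """Return [a_0, ..., a_degree] minimizing the sum of squared errors."""
    m = degree + 1
    # Normal equations: entry (r, c) of X^T X is the sum of x^(r + c).
    A = [[float(sum(x ** (r + c) for x in xs)) for c in range(m)] for r in range(m)]
    rhs = [float(sum(y * x ** r for x, y in zip(xs, ys))) for r in range(m)]

    # Gaussian elimination with partial pivoting.
    for col in range(m):
        pivot = max(range(col, m), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        rhs[col], rhs[pivot] = rhs[pivot], rhs[col]
        for r in range(col + 1, m):
            factor = A[r][col] / A[col][col]
            for c in range(col, m):
                A[r][c] -= factor * A[col][c]
            rhs[r] -= factor * rhs[col]

    # Back substitution.
    coeffs = [0.0] * m
    for r in range(m - 1, -1, -1):
        known = sum(A[r][c] * coeffs[c] for c in range(r + 1, m))
        coeffs[r] = (rhs[r] - known) / A[r][r]
    return coeffs


def eval_polynomial(coeffs, x):
    """Evaluate a_0 + a_1 x + ... + a_m x^m by Horner's rule."""
    result = 0.0
    for a in reversed(coeffs):
        result = result * x + a
    return result


def mean_squared_error(xs, ys, coeffs):
    """Mean of the squared residuals of the fitted polynomial."""
    return sum((eval_polynomial(coeffs, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


if __name__ == "__main__":
    sample_x = [0, 1, 2, 3, 4, 5]
    sample_y = [0.1, 0.9, 4.2, 8.8, 16.1, 24.9]   # made-up, roughly quadratic data
    for degree in (1, 2, 3):
        fitted = fit_polynomial(sample_x, sample_y, degree)
        print(degree, fitted, mean_squared_error(sample_x, sample_y, fitted))
```

Printing the mean squared error for increasing degrees gives a simple way to see where a higher order stops improving the fit. It does not replace the significance tests used in forward selection and backward elimination.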