1

1.Let A be an n × n binary matrix (each element is either 0 or 1) such that

•

in any row i of A, all the 1’ s come before any 0’s; and

•

the number of 1’s in a row is no smaller than the number in the next row.

Write an O(n)-time algorithm to count the number of 1’s in A.

Answer 1:

Algorithm 1 count the number of 1’s

1: int

count = 0;

2: int

rec = 0;

3: int

sum = 0;

4: int

i = n;

5: for (j = 1,j ≤ n,j + +){

(a[i][count + 1]! = 0){

if

6:

count++;

7:

else

8:

sum+ = (count + 1 − rec) × i

9:

10:

i − −;

11:

rec = count;

12:

}

13: } 14: return

sum

2.Let A be an n×n binary matrix. Design an O(n)-time algorithm to decide

whether there exists i ∈ {1,2,...,n} such that aij = 0 and aji = 1 for all j 6= i(It

doesn’t matter what value of aii is).

Answer 2:

Algorithm 2

1: boolean function(int n, int [][]a){

2: for

3:

(int

for (int

i = 0;i < n;i + +){

i = 0;j < i;j + +){

2

if(a[i][j]!=a[j][i]){

4:

5:

return

6:

}

7:

}

true;

}

8:

return

9:

false;

10: }

3:Design an algorithm to find the second smallest of an array of n integers with

at most (n+logn) comparisons of elements. If the smallest element occurs two

or more times, then it is also the second smallest. (Note that this requirement is

stronger than linear-time: We’re not playing Big-Oh here, and 2n comparisons

wouldn’t count.)

Algorithm 3

1: int

first = length + 1;

2: int

last = 2 ∗ low − 1;

3: while(first < last){

4: for(i = first;i < last;i+ =

5:

if (c[i] < c[i + 1])

6:

(c[i/2] = c[i]);

2){

7:

else (c[i/2] = c[i + 1])}

8:

if(last%2 == 1)

c[last/2] = c[last];

9:

first = first/2;

10:

last = last/2};

11:

12: min = c[0];

13:

if (c[0] = c[1])

14:

second = c[2];

15:

else second = c[1];

16: for(int

i = 3;i < 2 ∗ length − 1;i + +){

3

17:

if (c[i] == min){ 18:

if (i%2 == 0){

if (c[i − 1] < second)

19:

second = t[i − 1]; }

20:

else {

21:

if(c[i + 1] < second)

22:

second = c[i + 1]; }

23:

24:

}

25: } 26: return

second

4:Modify Karatsuba’s algorithm to multiply two n-bit numbers in which n is not

exactly a power of 2. How about two numbers of m bits and n bits respectively?

Assume m ≥ n, the two numbers X and Y of m bits and n bits respectively, the numbers can

be expressed as:

X = x1 ∗ 10n + x0,

Y = y1 ∗ 10n + y0,

XY = (x1 ∗ 10n + x0)(y1 ∗ 10n + y0) = x1y1 ∗ 102n + (x1y0 + x0y1) ∗ 10n + x0y0;

Z2 = x1y1

Z1 = x1y0 + x0y1;

Z0 = x0y0,

This step requires a total of 4 multiplications, but after Karatsuba’s improvement it only requires

3 multiplications.

Z1 = (x1 + x0) ∗ (y1 + y0) − Z2 − Z0, So Z1 can be

obtained by multiplying and adding and subtracting once.

4

5. V. Pan has discovered a way of multiplying 68×68 matrices using 132,464

multiplications, a way of multiplying 70 × 70 matrices using 143,640

multiplications, and a way of multiplying 72×72 matrices using 155,424

multiplications. Which method yields the best asymptotic running time when

used in a divide-and-conquer matrix-multiplication algorithm? How does it

compare to Strassen’s algorithm?

Answer:The asymptotic running time of the three methods is computed as follows:

log68 132464 ≈ 2.795128

log70132464 ≈ 2.795122

log72132464 ≈ 2.795147 the

second one yields the best

asymptotic running time. The

asymptotic running time of

Strassen’s

algorithm is Θ(nlog7), and log7 ≈ 2.807355. Obviously, it’s worse than any of the above

algorithms.

6. How quickly can you multiply a kn × n matrix by an n × kn matrix, using

Strassen’s algorithm as a subroutine? Answer the same question with the order

of the input matrices

reversed.

5

Answer: By considering block matrix multiplication and using Strassen’s algorithm as a subroutine,

we can multiply a kn × n matrix by an n × kn matrix in Θ(k2nlog7) time. With the order reversed,

we can do it in Θ(k · nlog7) time.

7: Show how to multiply the complex numbers a + bi and c + di using

only three multiplications of real numbers. The algorithm should take a,

b, c, and d as input and produce the real component ac − bd and the

imaginary component ad + bc separately.

Answer:First, assuming that

P1 = ad

P2 = bc

P3 = (a − b)(c + d) = ac − bd + ad − bc

then the real component is

P3 − P1 + P2 = ac − bd

and the imaginary component is

P1 + P2 = ad + bc

Therefore, this method uses only three multiplications.

8. Let R.i; j / be the number of times that table entry mŒi; j is referenced while

computing other table entries in a call of MATRIX-CHAIN-ORDER. Show that the

total number of references for the entire table is Xn iD1 Xn jDi R.i; j / D n3 n 3 :

(Hint: You may find equation (A.3) useful.

Answer:Each time the l-loop executes, the i-loop executes n−l+1 times. Each

time the i-loop executes, the k-loop executes j−i = l−1 times, each time referencing

m twice. Thus the total number of

times that an entry of m is referenced while computing other entries is

.

Thus,

6

9.Give a dynamic-programming solution to the 0-1 knapsack problem that runs in

O.n W / time, where n is the number of items and W is the maximum weight of

items that the thief can put in his knapsack.

Answer:Suppose we know that a particular item of weight w is in the solution.

Then we must solve the subproblem on n−1 items with maximum weight W −w.

Thus, to take a bottom-up approach we must solve the 0 − 1 knapsack problem for

all items and possible weights smaller than W. We’ll build an n + 1 by W + 1 table

of values where the rows are indexed by item and the columns are indexed by total

weight. (The first row and column of the table will be a dummy row). For row i

column j, we decide whether or not it would be advantageous to include item i in

the knapsack by comparing the total value of of a knapsack including items 1

through i − 1 with max weight j, and the total value of including items 1 through i

− 1 with max weight j − i.weight and also item i. To solve the problem, we simply

examine the n, W entry of the table to determine the maximum value we can

achieve. To read off the items we include, start with entry n, W. In general, proceed

as follows: if entry i, j equals entry i−1, j, don’t include item i, and examine entry

i−1, j next. If entry i, j doesn?t equal entry i−1, j, include item i and examine entry

i − 1, j − i.weight next. See algorithm below for construction of table:

7

Algorithm 1 0 − 1 Knapsack(n,W)

1: Initialize

an n + 1 by W + 1 table K

2: for j = 1 → W do

3:

k[0,j] = 0

4: end for

5: for

6:

i = 1 → n do

K[i,0] = 0

7: end for

8: for

9:

10:

i = 1 → n do

for j = 1 → W do

if j < i.weight then

K[i,j] = K[i − 1,j]

11:

12:

end if

K[i,j] = max(K[i − 1,j],K[i − 1,j − i.weight] + i.value)

13:

14:

end for

15: end for

16: The

running time of a 0-1 knapsack algorithm is O(nW).

10. In order to transform one source string of text xŒ1 : : mto a target string yŒ1 : : n, we can

perform various transformation operations. Our goal is, given x and y, to produce a series of

transformations that change x to y. We use an array ´—assumed to be large enough to hold all

the characters it will need—to hold the intermediate results. Initially, ´ is empty, and at

termination, we should have ´Œj D yŒj for j D 1; 2; : : : ; n. We maintain current indices i into

x and j into ´, and the operations are allowed to alter ´ and these indices. Initially, i D j D 1. We

are required to examine every character in x during the transformation, which means that at

the end of the sequence of transformation operations, we must have i D m C 1. We may choose

from among six transformation operations: Copy a character from x to ´ by setting ´Œj D xŒiand

then incrementing both i and j . This operation examines xŒi. Replace a character from x by

another character c, by setting ´Œj D c, and then incrementing both i and j . This operation

examines xŒi. Delete a character from x by incrementing i but leaving j alone. This operation

examines xŒi. Insert the character c into ´ by setting ´Œj D c and then incrementing j , but

leaving i alone. This operation examines no characters of x. Twiddle (i.e., exchange) the next

two characters by copying them from x to ´ but in the opposite order; we do so by setting ´Œj

D xŒi C 1and ´Œj C 1D xŒi and then setting i D i C 2 and j D j C 2. This operation examines

8

xŒiand xŒi C 1. Kill the remainder of x by setting i D m C 1. This operation examines all

characters in x that have not yet been examined. This operation, if performed, must be the final

operation. Problems for Chapter 15 407 As an example, one way to transform the source string

algorithm to the target string altruistic is to use the following sequence of operations, where

the underlined characters are xŒi and ´Œj after the operation: Operation x ´ initial strings

algorithm copy algorithm a copy algorithm al replace by t algorithm alt delete algorithm alt

copy algorithm altr insert u algorithm altru insert i algorithm altrui insert s algorithm altruis

twiddle algorithm altruisti insert c algorithm altruistic kill algorithm altruistic Note that there

are several other sequences of transformation operations that transform algorithm to altruistic.

Each of the transformation operations has an associated cost. The cost of an operation depends

on the specific application, but we assume that each operation’s cost is a constant that is known

to us. We also assume that the individual costs of the copy and replace operations are less than

the combined costs of the delete and insert operations; otherwise, the copy and replace

operations would not be used. The cost of a given sequence of transformation operations is the

sum of the costs of the individual operations in the sequence. For the sequence above, the cost

of transforming algorithm to altruistic is .3

cost.copy// C

cost.replace/ C cost.delete/ C .4

cost.insert// C cost.twiddle/ C

cost.kill/ :

a. Given two sequences xŒ1 : : mand yŒ1 : : nand set of transformation-operation costs, the

edit distance from x to y is the cost of the least expensive operation sequence that transforms

x to y. Describe a dynamic-programming algorithm that finds the edit distance from xŒ1 : : mto

yŒ1 : : nand prints an optimal operation sequence. Analyze the running time and space

requirements of your algorithm. The edit-distance problem generalizes the problem of aligning

two DNA sequences (see, for example, Setubal and Meidanis [310, Section 3.2]). There are

several methods for measuring the similarity of two DNA sequences by aligning them. One such

method to align two sequences x and y consists of inserting spaces at 408 Chapter 15 Dynamic

Programming arbitrary locations in the two sequences (including at either end) so that the

resulting sequences x0 and y0 have the same length but do not have a space in the same

position (i.e., for no position j are both x0 Œj and y0 Œj a space). Then we assign a “score” to

each position. Position j receives a score as follows: C1 if x0 Œj D y0 Œj and neither is a space,

1 if x0 Œj ¤ y0 Œj and neither is a space, 2 if either x0 Œj or y0 Œj is a space. The score for

the alignment is the sum of the scores of the individual positions. For example, given the

sequences x D GATCGGCAT and y D CAATGTGAATC, one alignment is G ATCG GCAT CAAT

GTGAATC -*++*+*+-++* A + under a position indicates a score of C1 for that position, a indicates a score of 1, and a * indicates a score of 2, so that this alignment has a total score of

6

1

2

1

4

2 D 4. b. Explain how to cast the problem of finding an optimal

alignment as an edit distance problem using a subset of the transformation operations copy,

replace, delete, insert, twiddle, and kill.

9

15.5-a Answer: We will index our subproblems by two integers, 1 ≤ i ≤ m and

1 ≤ j ≤ n. We will let i indicate the rightmost element of x we have not processed

and j indicate the rightmost element of y we have not yet found matches for. For a

solution, we call EDIT − DISTANCE(x,y,i,j)

Algorithm 2 EDIT-DISTANCE(x,y,m,n)

1: letm = x.lengthandn

2: if

= y.length

i = m then

return (n − j)cost(insert)

3:

4: end if

5: if

j = n then

return min(m − i)cost(delete),cost(skill)

6:

7: end if

8: O1,O2,...,O5 initialized

9: if

to ∞

x[i] = y[j] then

O1 = cost(copy) + EDIT(x,y,i + 1,j + 1)

10:

11: end if

12: O2 = cost(replace) + EDIT(x,y,i + 1,j

+ 1)

13: O3 = cost(delete) + EDIT(x,y,i + 1,j +

1)

14: O4 = cost(insert) + EDIT(x,y,i + 1,j

15: if

16:

i < m − 1andj < n − 1 then

if x[i] = y[j + 1]andx[i + 1] = y[j] then

O5 = cost(twiddle) + EDIT(x,y,i + 2,j + 2)

17:

18:

+ 1)

end if

19: end if

20: return

mini∈[5] Oi

The answer of the Problem 15-5.b:

10

We will set:

cost(COPY ) = −1

cost(REPLACE) = 1

cost(DELETE) =

cost(INSERT) = 2

cost(twiddle) = cost(kill) = ∞

Then a minimum cost translation of the first string into the second corresponds to

an alignment. where we view a copy or a replace as incrementing a pointer for

both strings. A insert as putting a space at the current position of the pointer in

the first string. A delete operation means putting a space in the current position in

the second string. Since twiddles and kills have infinite costs, we will have neither

of them in a minimal cost solution. The final value for the alignment will be the

negative of the minimum cost sequence of edits.

Question 2: In a word processor, the goal of pretty-printing is to take text with a

ragged right margin,.... Give an efficient dynamic programming algorithm to find a

partition of a set of words W into valid lines, so that the sum of the squares of the

slacks of all lines (including the last line) is minimized.

The answer of the Question 2:

Algorithm 3 describes the related algorithm (Pretty-printing algorithm)

Algorithm 3 Pretty-printing algorithm

int neatPrint(FILE∗ fout, string ∗str, int lineLen){ int strLen=(int)str->length(), int wordNo=0,

const char∗ space=“”, int spaceFind=(int)str− >find(space);

while(spaceFind! = string :: npos)

wordNo + +;spaceFind = (int)str− > find(space,spaceFind + 1);

wordNo + +;

11

int∗

lenArray=new

int[wordNo],

int[wordNo],wordPos[0]=0, int

int∗

wordPos

=

new

lastSpace = 0, int indexWord = 0, spaceFind = 0;

while(true)

spaceFind = (int)str− > find(space,spaceFind + 1);

if(spaceFind ̸= string :: npos) then lenArray[indexWord + +] = spaceFind −

lastSpace;lastSpace = spaceFind + 1;

else then break;

lenArray[indexWord] = strLen − lastSpace,int ∗ cost = newint[wordNo],int ∗

trace = newint[wordNo];

for(int i = 0; i < wordNo; i

+ +) if(i = wordNo −

1) then int x = 0;

if(i = 0) then cost[i] = extraSpace(lenArray,i,i,lineLen,wordNo);

else then cost[i] = cost[i − 1] + extraSpace(lenArray,i,i,lineLen,wordNo); int j = i −

1, trace[i] = i, int ls = extraSpace(lenArray,j,i,lineLen,wordNo);

while (ls ≥ 0)

if(cost[i] > cost[j] + ls) then cost[i] = cost[j] + ls;trace[i] = j;

ls = extraSpace(lenArray,− − j,i,lineLen,wordNo);

return 0;}

int extraSpace(int ∗l, int i, int j, int len, int

wordNo){ if(i < 0||j < i) then return -1;

int v = len, p = i; while (p ≤ j){ v = v − (∗(l + p));

if(p ̸= j) then v = v − 1;} p++; if (v < 0) then return

-1; if (j = wordNo − 1) then return 0; return v ∗

v ∗ v;}

12

11. Give a formal encoding of directed graphs as binary strings using an

adjacencymatrix representation. Do the same using an adjacency-list

representation. Argue that the two representations are polynomially related。

Answer:step 1: A formal encoding of directed graphs as binary strings using an adjacency

matrix

representation:

Directed graph G(V,E) is the graph in which all the edges are represented in the form an arrow

from one vertex to other that is edge (u,v) ∈ E has an arrow from u to v. Adjacencymatrix Adj[u,v]

representation of graph is the representation of graph in the form of a matrix by using 0 if there

is no edge between vertices and 1 if there is a directed edge from one vertex

to other.

step 2:For the binary string form of a graph G consider the graph as:

Adjacency matrix representation in the form of binary string is as:

0

1

0

0

1

0

0

1

0

1

0

0

0

1

0

13

0

0

1

0

0

0

1

1

1

0

This matrix-representation of graph could be encoded as: 0100100101000100010001110

Taking the square root of number of bits will return the capacity of the square matrix.This

representation uses |V |2 bits, which is of polynomial form.

step 3: ln the adiacency-list form of a graph G(v,E) with directional edges, |V | number of lists

is created. One list is created for each vertex and for every vertex v ∈ V is added to the list of

vertex v if there is an edge from v to that vertex.

To convert the adjacency matrix into adjacency list, following algorithm as a pseudo code is

presented:

1) For each row do

2) Scan the row from left-to-right.

3) Add that particular column number to the list.

4) End for step 4: Using this algorithm the adjacency list representation of the graph is as in

this all the vertices connected to a vertex are listed. And the list is stored in an ASCll file:

1

2

5

2

3

5

3

4

5

4

2

3

To represent an adjacency list the no. of words of memory will be O(|V |+|E|). Every vertex

number shall be in between 1 and |V | , and that’s why it will need Θ(lg|V |) bits to store it in the

memory. So the entire number of bits in the file needed will be O((|v,E|),lg|v|), which is also in

the polynomial form.

14

Hence, the adjacency-matrix representation and adjacency-list representations both takes the

polynomial type of time for its representation.

12. Is the dynamic-programming algorithm for the 0-1 knapsack problem that is asked for in

Exercise 16.2-2 a polynomial-time algorithm? Explain your answer

Answer:step 1: The knapsack problem is the problem in which a set of items are given with

their weights, and a thief having a knapsack of a definite capacity. The thief want to put the items

in his bag so that the cost or price of the items is maximum and sum of their weights is either less

than or same as the capacity of the knapsack.

ln an optimal solution of knapsack for the S for W pounds knapsack, i is the item having largest

weight value. Then for W − wi, pounds, S′ = S − {i} is an optimal solution and the value of S is the

addition of Vi and the value of the problem generated by it.

step 2:To mathematically state this fact, we define c[i,w] to be the solution for items 1,2....,i

and maximum weight w. Then,

lt states that value of solution to i items can include either ith item; in that case the solution will

be addition of Vi, and a subproblem which solves for i − 1 items excluding the weight wi. Or it

excludes ith item, where it is a solution to the part of problem for i − 1 items with the similar

weight value. lt means, if the thief picks item i , thief takes Vi value, and for next thief can select

from items w − wi, and get e[i − 1,w − wi] additional value. On other hand, if thief decides not to

take item i, thief can choose from item 1,2...,i−1 up to the weight limit w, and get c[i − 1,w − wi]

value. The choice should be made from these two whichever is better.

step 3: The 0-1 knapsack algorithm is analogous to or subsequent to obtaining the longest

common subsequence length algorithm (LCS).

15

Input: Maximum weight W and the number of items and the 2 sequences namely m < v1,v2,...,vn >

and w =< wl,w2,...,wn > c[i, j] Values are stored in the table entries of which are in row major order.

At the end c[n, w— keeps the greatest value that the thief can put into the knapsack. Refer the

textbook for DYNAMIC-0-1-KNAPSACK(v,w,n,W) algorithm.

The time requires executing the 0-1-knapsack algorithm of dynamic programming is Θ(nW)

which is taken in two forms as:

1) Firstly Θ(nw) time is needed to fill the knapsack which has (n + 1)!‘A´(w + 1) entries in

it and each requiring Θ(1) time to compute.

2) The O(n) time is used to analyze the solution and it is because the thief checks his bag

if it has already filled with the item of its capacity or it can still take some items.

Hence, the 0-1 knapsack problem of dynamic programming fakes time in the polynomial form

which is Θ(nW) for n items and W capacity of knapsack.

13. Prove that P ∈co-NP.

Answer:A language is in coNP if there is a procedure that can verify that an input is not in the

language in polynomial time given some certificate. Suppose that for that language we a

procedure that could compute whether an input was in the language in polynomial time receiving

no certificate. This is exactly what the case is if we have that the language is in P. Then, we can

pick our procedure to verify that an element is not in the set to be running the polynomial time

procedure and just looking at the result of that, disregarding the certificate that is given. This then

shows

that any language that is in P is L ∈ Co − NP., giving us the inclusion that we wanted.

14. Prove that if NP ≠co-NP, then P ≠ NP.

Answer:Suppose NP ≠ CO − NP. let L ∈ NP\co − NP. Since P ⊂ NP ∩ co − NP, and L /∈ NP ∩ co

− NP, we have L /∈ P. Thus, P ̸= NP

15: Give a rigorous analysis of algorithm (by Mou Guiqiang) for 2-SAT

16

The answer of the Question 2: Assumin the given logical expression is: L1 = (x2 _ x1) ^ (x1 _ x2) ^

(x1 _ x3) ^ (x2 _ x3) ^ (x1 _ x4), L2 = (x1 _ x2) ^ (x1 _ x2) ^ (x1 _ x3) ^ (x1 _ x3) The logical diagram

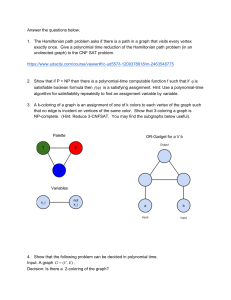

of L1 is shown in the following figure

The logical diagram of L2 is shown in the following figure:

A sufficient condition for its solution to exist is that xi and xi do not lie in the same strongly

connected component. If xi and xi are in the same strongly connected component, then both of

them cannot be True at the same time, so the solution naturally does not exist. L1 satisfies this

condition, so the solution of L1 exists. L2 does not satisfy this condition, so the solution of L2 does

not exist. After determining the existence or non-existence of a solution, we have to construct a

feasible solution. We find that if there exists xi → xi in the component diagram after the reduction

point, then xi must be True. Conversely, if xi → xi exists, then xi must be False. Then we can choose

the topological order of xi and xi to be True for whose topological sequence is later. this way a set

of feasible solutions can be constructed.

16: Give a formal encoding for the problems set covering and hitting set. (You may find the

meaning of ¡°encoding¡± on Page 1055.) write the form reductions between them (two ways)

The answer of the Question 3: For the graph G of the vertex coverage problem, a set Si is created

for each edge in G. The elements of Si are the two vertices of that edge. Reducte G to a set S1, S2,

S3 as shown in the following figure

17

S1 = {1,2} = 11000, S2 = {1,5} = 10001, S2 = {3,4} = 00110.

Method 1: When there is a solution to the collision set problem, there is a solution to the vertex

coverage problem, and it is only necessary to select all the points corresponding to the solution

H of the collision set, which is the solution to the corresponding vertex coverage problem. For

example, for the collision set problem in the figure, suppose b is 2, then a solution is H = {1,3}, at

this time, select 1 and 3 points to form the vertex set V, which is the solution of the vertex

coverage problem. Finally, when the collision set has no solution, the vertex covering problem has

no solution.

Method 2: Prove by the converse of the proposition. When the vertex-covering problem has a

solution, the set H obtained by using each vertex in the corresponding solution V corresponding

to the elements of the generated set is the solution of the collision set problem. For example, for

the vertex coverage problem in the figure, one of the solutions is V = {1,3}, and one of the

solutions of the collision set is H = {1,3}.

17: Read this question and answer, and give a quick summary (between 30 and 60

words). Why Turing machine is still relevant in the 21st century.

The answer of the the Question 4:

Turing machine proved the general computing theory, affirms the possibility of computer

implementation, and gives the main architecture of computer. Turing machine model theory is

the core theory of computing discipline, because the ultimate computing power of computer is

the computing power of general Turing machine. Many problems can be transformed into the

simple model of Turing machine.

18. Analyze the running time of the frst greedy approximation algorithm for vertex cover and

the greedy approximation algorithm for set covering.

18

Answer:Vertex Covering

Algorithm 1 APPROX-GREEDY-VERTEX-COVER

E0 = E,U ← X

while E0 6= ∅ do

Pick e ∈ E0,say e = (u,v)

S = S ∪ u,v

E0 = E0 − (u,v) ∪ edges

incident

end while return

S

to u,v

APPROX-GREEDY-VERTEX-COVER is a poly-time 2-approximation algorithm.

0

Running time is O(V + E) (using adjacency lists to represent E )

Let A ⊆ E denote the set of edges picked in line 4

Every optimal cover C∗ must include at least one endpoint of edges in A, and edges in A do not

share acommon endpoint:|C∗| ≥ |A|

Every edge in A contributes 2 vertices to |C|:|C| = 2|A| ≤ |C∗|

Set Covering

Algorithm 2 GREEDY-SET-COVER

U←XC

←∅

while U 6= ∅ do select an S ∈ F that

maximizes |S ∩ U|

U←U−S

C ← C ∪ {S}

19

end while

return C

Let OPT be the cost of optimal solution. Say (k−1) elements are covered before an iteration of

above greedy algorithm. The cost of the k’th element ≤ OPT/(n−k +1) (Note that cost of an

element is evaluated by cost of its set divided by number of elements added by its set). How did

we get this result? Since k’th element is not covered yet, there is a Si that has not been covered

before the current step of greedy algorithm and it is there in OPT. Since greedy algorithm picks

the most cost effective Si, per-element-cost in the picked set must be smaller than OPT divided

by remaining elements. Therefore cost of k’th element ≤ OPT/|U−I| (Note that U−I is set of not

yet covered elements in Greedy Algorithm). The value of |U−I| is n−(k−1) which is n−k+1.

Cost

of

Greedy

Algorithm = Sum

of

costs

of

n

elements

≤ (OPT/n + OPT(n − 1) + ... + OPT/n)

≤ OPT(1 + 1/2 + ......1/n)

≤ OPT ∗ Logn

19. Analyze the second greedy algorithm for vertex cover and give tight examples. Does this

algorithm implies an approximation algorithm for the independent set problem of the same

ratio?

Answer:We can see the algorithm1 APPROX-GREEDY-VERTEX-COVER is a 2-approximation algorithm, For a detailed analysis, see Algorithm 1 and the proof. The same algorithm can gives the

same radio for the independent set problem.

20. Analyze the local-search algorithm for maximum cut (running time and expected

approximation ratio).

The answer :

Algorithm 3 Local Search Algorithm for Max-Cut

20

INPUT: A graph G = (V,E) OUTPUT: A set

S ⊆ V Initialize S arbitrarily

while ∃u ∈ V with more edges to the same side than across do

Emove u to the other side

end while return S

Clearly each iteration takes polynomial time, so the question is how many iterations there are

(this is typical in local search algorithms). We can bound this through some very simple

observations.

1. Initially |E(S,S)| ≥ 0,

2. In every iteration, |E(S,S)| increases by at least 1, and

3. |E(S,S)|is always at most m ≤ n2.

These facts clearly imply that the number of iterations is at most m ≤ n2 and thus the total running

time is polynomial.

We say that S is a local optimum if there is no improving step: for all u ∈ V , there are more {u,v}

edges across the cut than connecting the same side. Note that the algorithm outputs a local

optimum by definition.

Suppose S is a local OPT. Let dacross(u) denote the number of edges incident on u that cross the

cut. The since S is a local optimum, we know that

where m = |E|. We know OPT ≤ m, and hence the algorithm is a 2-approximation.

21. This problem examines three algorithms for searching for a value x in an unsorted array A

consisting of n elements. Consider the following randomized strategy: pick a random index i

into A. If AŒi D x, then we terminate; otherwise, we continue the search by picking a new

random index into A. We continue picking random indices into A until we find an index j such

21

that AŒj D x or until we have checked every element of A. Note that we pick from the whole

set of indices each time, so that we may examine a given element more than once. a. Write

pseudocode for a procedure RANDOM-SEARCH to implement the strategy above. Be sure that

your algorithm terminates when all indices into A have been picked. 144 Chapter 5 Probabilistic

Analysis and Randomized Algorithms b. Suppose that there is exactly one index i such that AŒi

D x. What is the expected number of indices into A that we must pick before we find x and

RANDOM-SEARCH terminates? c. Generalizing your solution to part (b), suppose that there are

k 1 indices i such that AŒiD x. What is the expected number of indices into A that we must pick

before we find x and RANDOM-SEARCH terminates? Your answer should be a function of n and

k. d. Suppose that there are no indices i such that AŒiD x. What is the expected number of

indices into A that we must pick before we have checked all elements of A and RANDOMSEARCH terminates? Now consider a deterministic linear search algorithm, which we refer to

as DETERMINISTIC-SEARCH. Specifically, the algorithm searches A for x in order, considering

AŒ1; AŒ2; AŒ3; : : : ; AŒnuntil either it finds AŒiD x or it reaches the end of the array. Assume

that all possible permutations of the input array are equally likely. e. Suppose that there is

exactly one index i such that AŒiD x. What is the average-case running time of DETERMINISTICSEARCH? What is the worstcase running time of DETERMINISTIC-SEARCH? f. Generalizing your

solution to part (e), suppose that there are k 1 indices i such that AŒiD x. What is the averagecase running time of DETERMINISTICSEARCH? What is the worst-case running time of

DETERMINISTIC-SEARCH? Your answer should be a function of n and k. g. Suppose that there

are no indices i such that AŒi D x. What is the average-case running time of DETERMINISTICSEARCH? What is the worst-case running time of DETERMINISTIC-SEARCH? Finally, consider a

randomized algorithm SCRAMBLE-SEARCH that works by first randomly permuting the input

array and then running the deterministic linear search given above on the resulting permuted

array. h. Letting k be the number of indices i such that AŒi D x, give the worst-case and expected

running times of SCRAMBLE-SEARCH for the cases in which k D 0 and k D 1. Generalize your

solution to handle the case in which k 1. i. Which of the three searching algorithms would you

use? Explain your answer.

The answer of the 5-2.a: Assume that A has n elements. Algorithm 1 will use an array P to track

the elements which have been seen, and add to a counter c each time a new element is checked.

Once this counter reaches n, we will know that every element has been checked. Let RI(A) be the

function that returns a random index of A.

The answer of the 5-2.b: Let N be the random variable for the number of searches required.

Algorithm 4 RANDOM-SEARCH

1: Initialize

an array P of size n containing all zeros.

2: Initialize

integers c and i to 0

22

3: while

c 6= n do

4:

i = RI(A)

5:

if A[i] == x then

return i

6:

end if

7:

if P[i] == 0 then

8:

P[i] = 1, c = c + 1.

9:

10:

end if

11: end

while

12: return

A does not contain x.

Then

=n

The answer of the 5-2.c: Let N be the random variable for the number of searches required.

Then

The answer of the 5-2.d: This is identical to the “How many balls must we toss until every bin

contains at least one ball?” problem solved in section 5.4.2, whose solution is b(lnb+O(1)).

23

The answer of the 5-2.e: The average case running time is (n + 1)/2 and the worst case running

time is n.

The answer of the 5-2.f: Let X be the random variable which gives the number of elements

examined before the algorithm terminates. Let Xi be the indicator variable that the ith element

of the array is examined. If i is an index such that A[i] 6= x then

since we examine

it only if it occurs before every one of the k indices that contains x. If i is an index such that A[i] =

x then

since only one of the indices corresponding to a solution will be examined. Let

S = {i|A[i] = x} and S0 = {i|A[i] 6= x}. Then we have

The answer of the 5-2.g: The average and worst case running times are both n.

The answer of the 5-2.h: SCRAMBLE-SEARCH works identically to DETERMINISTICSEARCH,

except that we add to the running time the time it takes to randomize the input

array.

The answer of the 5-2.i: I would use DETERMINISTIC-SEARCH since it has the best expected

runtime and is guaranteed to terminate after n steps, unlike RANDOM-SEARCH. Moreover, in the

time it takes SCRAMBLE-SEARCH to randomly permute the input array we could have performed

a linear search anyway.

22. Design a randomized

-approximation algorithm for the following problem:

The answer:Let wuvi = Σv∈Vi,v6=uw(u,v) denote the sum of the weight of edges from vertex u

to vertices in set Vi.

(1)Initialization. Partition the vertices into k sets, V1,V2,...,Vk, arbitrary assigning;

(2)Iterate. If there is a pair of vertices u ∈ Vi and v ∈ Vl,i 6= l, and |Vi|wuvi + |Vl|wvvl >

|Vi|wuvl + |Vl|wvvi, reassign vertex u to Vl, and vertex v to Vi;

(3)|Vi|wuvi + |Vl|wvvl ≤ |Vi|wuvl + |Vl|wvvi, stop.

24

Next, Proof of the ratio of this algorithm. Let wii denote the sum of the weights of the edges

with both the vertices in Vi. wil denotes the sum of the weights of the edges that connect a vertex

in Vi to a vertex in Vl. Sol denote the value of the solution V1,V2,...,Vk. Then,

and

. The derivation process is as follows:

(1) As the solution returned by the local-search algorithm is locally optimal |Vi|wuvi +

|Vl|wvvl ≤ |Vi|wuvl + |Vl|wvvi.

(2) Sum both side of the inequality and obtain |Vi|Σu∈Viwuvi +|Vi||Vl|wvvl ≤ |Vi|Σu∈Viwuvl +

|Vi||Vl|wvvi.

(3) Next, sum both sides of the above expression over all v ∈ Vl and get |Vl||Vi|Σu∈Viwuvi +

|Vi||Vl|wvvl ≤ |Vl||Vi|Σu∈Viwuvl + |Vi||Vl|wvvi.

(4) Setting Σu∈Viwuvi = 2wii,Σu∈Vlwuvl = 2wil,Σu∈Vlwvvi = 2wli. Then, 2|Vi||Vl|wii +wll ≤

|Vi||Vl|wilwli. So, wii + wll ≤ wil.

(5) Then,

setting

2Sol.

(6) Therefore,

. So,

.

(7) The optimal solution obtains at most all of the edges. Therefore, OPT ≤ Ws + Sol ≤

. So,

.

SO, this is a randomized

-approximation algorithm.

23. Design a randomized approximation algorithm for the following problem, and

analyze its expected approximation ratio:

Answer:Algorithm: Initialize two sets A = V,B = ∅. If there exists a vertex v such that moving

it from one set to the other would strictly increase the cut size of A plus the cut size of B, move it,

and continue doing this until no such vertex v can be found. Compute the cuts sizes of A and B,

and return the larger of the two.

25

The algorithm costs m times to compute the value of a given cut. Do this at most n times to

find a satisfactory vertex v, and since the maximum cut value is m and each swap we perform is

guaranteed to increase the cut size by at least 1, we do at most m swaps before returning our

approximate max cut. So, the algorithm runs time is O(nm2).

Ratio: The OPT ≤ m for the algorithm. Let δA,B,B = V − A be the number of edges

crossing out of A into B, and δinA (v) be the number of edges pointing into v from some set A and

be the number of edges pointing out of v into A. Thus, moving v will change the

sum of the cut sizes by δinA (v) + δoutA (v) − δinB (v) − δoutB (v).

Moving single nodes across the cut cannot increase this sum of cut sizes, it has δinA (v) +

. It can be seen that the first two terms will each count the number

of

edges internal to A, that is δA,A. The third term counts the number of edges crossing from A to B,

and the fourth term counts the number of edges crossing from B to A. With this it can be seen

that the sets A and B found by the algorithm must satisfy 2δA,A ≤ δA,B+δB,A. By the same reasoning,

2δB,B ≤ δA,B+δB,A. Since every edge in the graph is directed, thus, δA,A+δA,B+δB,A+δB,B = m.

The Sol = max(δA,B,δB,A) ≥ (δA,B,δB,A)/2 ≥ (δA,A+δA,B +δB,A+δB,B)/4 = m/4. Therefore, the Sol ≥

OPT/4. That is, the ratio is .

24.. Give a formal proof that the reduction from SAT to 3-SAT is polynomial.

Answer:Given an instance of SAT: X = {x1,x2,...,xn}, C = {C1,C2,...,Cm}, Ci = zi,1 ∨ zi,2 ∨

zi,3 ···zi,k, We turn an instance of this problem into an instance of 3SAT (X′,C′). For each Ci, four

scenarios are as follow:

1) If |Ci| = 1, then create two variables yi,1 and yi,2, which make

(zi,1 ∨ yi,1 ∨ yi,2) ∧ (zi,1 ∨ yi,1 ∨ yi,2) ∧ (zi,1 ∨ yi,1 ∨ yi,2)

2) If |Ci| = 2, then create one variable yi,1, which makes Ci′ = (zi,1∨zi,2∨yi,1)∧(zi,1∨zi,2∨yi,1) 3) If

|Ci| = 3, then

remains unchanged.

4) If |Ci| = 4, it is assumed to be k, then add k−3 variables so that Ci′ = (zi,1∨zi,2∨yi,1)∧

(yi,1 ∨ zi,3 ∨ yi,2) ∧ (yi,2 ∨ zi,4 ∨ yi,3) ∧ ···(yi,k−3 ∨ zi,k−1 ∨ zi,k)

26

For each clause Ci, Ci′ has up to k − 3 more variables than Ci, so 3 SAT has up to m(k − 3)

variables and m(k −3) clauses than SAT. It’s clear that C′ satisfies C. Therefore, the reduction from

SAT to 3-SAT is polynomial.

25. Professor Jagger proposes to show that SAT P 3-CNF-SAT by using only the truth-table

technique in the proof of Theorem 34.10, and not the other steps. That is, the professor

proposes to take the boolean formula , form a truth table for its variables, derive from the

truth table a formula in 3-DNF that is equivalent to :, and then negate and apply DeMorgan’s

laws to produce a 3-CNF formula equivalent to . Show that this strategy does not yield a

polynomial-time reduction

Answer:The 3-SAT is a satisfiability problem where the Boolean formula has only 3 literals or

variables ORed to each other in each clause that are ANDed. The output of each of the clauses

should give the output of the whole problem as 1. lt means that we should choose the values of

the

literals or variables used in the formula so that the output is 1 then only the formula is satisfiable.

The 3-CNF SAT is a SAT problem with 3 such literals as variables, and 2 CNF SAT is a SAT

problem with two literals as variables.

For the proof of 3-CNF SAT to be NPC,consider the following 3-CNF SAT given in equation form

by using3 literals x1,x2,x3 as:

ϕ = ((x1 → x2) ∨ ¬((¬x1 ↔ x3) ∨ x4) ∧ ¬x2)

Corresponding to the related parse tree the result ϕ′ of the expression ϕ is derived and

represented as:

ϕ′ = y1 ∧ (y1 ↔ (y2 ∧ ¬x2)) ∧ (y2 ↔ (y2 ∨ y4)) ∧ (y3 ↔ (x1 → x2))

∧ (y4 ↔ ¬y5) ∧ (y5 ↔ (y6 ∨ x4)) ∧ (y6 ↔ (¬x1 ↔ x3))

We know that the length of the related parse tree is the total height traversed, that is, of the

form h = 2logn where n is the number of leaves. Now this is of the form O(2k) which is a

non-polynomial form. So to find out the total value of the formula we need to perform the same

27

task for every clause. This results in a non-polynomial degree. The 3 CNF form is:

ϕ = (¬y1 ∨ ¬y2 ∨ x2) ∧ (¬y1 ∨ y2 ∨ ¬x2) ∧ (¬y1 ∨ y2 ∨ x2) ∧ (y1 ∨ ¬y2 ∨ x2)

Hence by taking the values of the truth table the number of values increases creating a non

polynomial reduction. So this strategy does not yield a polynomial time reduction.

26. The set-partition problem takes as input a set S of numbers. The question is whether the

numbers can be partitioned into two sets A and A D S A such that P x2A x D P x2A x. Show that

the

set-partition

problem

is

NP-complete

Answer:Using the portion of the set as certificate shows that the problem is in NP. For NPhardness we give reduction from subset sum. Given an instance S = {x1,x2,...,xn} and t compute r

such the r + t = (Px∈A x + r)/2(r = Px∈A x − 2t). The instance for set portioned assume S has a

subset S′ summing to t. Then the set S′ sums to r +t and thus portions R. Conversly, if R can be

portioned then the set containing the element r sum to r + t. All other elements in this set sums

to t thus providing to the subset S′.

27. In the half 3-CNF satisfiability problem, we are given a 3-CNF formula ϕ with n variables

and m clauses, where m is even. We wish to determine whether there exists a truth

assignment to the variables of ϕ such that exactly half the clauses evaluate to 0 and exactly

half the clauses evaluate to 1. Prove that the half 3-CNF satisfiability problem is NP-complete。

Answer:A certificate would be an assignment to input variables which causes exactly half the

clauses to evaluate to 1, and the other half to evaluate to 0. Since we can check this in polynomial

time, half 3-CNF is in NP. To prove that it’s NP-hard, we’ll show that 3-CNF-SAT ≤p HALF-3-CNF.

Let ϕ be any 3-CNF formula with m clauses and input variables x1,x2,...,xn. Let T be the formula

(y∨y∨¬y), and let F be the formula (y∨y∨y). Let ϕ′ = ϕ∧T ∧...∧T ∧F ∧...∧F where there are m

copies of T and 2m copies of F. Then ϕ has 4m clauses and can be constructed from ϕ in

28

polynomial time. Suppose that ϕ has a satisfying assignment. Then by setting y = 0 and the x′is

to the satisfying assignment, we satisfy the m clauses of ϕ and the mT clauses, but none of the

F clauses. Thus, ϕ′ has an assignment which satisfies exactly half of its clauses. On the other hand,

suppose there is no satisfying assignment to ϕ. The mT clauses are always satisfied. If we set y

= 0 then the total number of clauses satisfies in ϕ′ is strictly less than 2m, since each of the 2m

F clauses is false, and at least one of the ϕ clauses is false. If we set y = 1, then strictly more than

half the clauses of ϕ′ are satisfied, since the 3m T and F clauses are all satisfied. Thus, ϕ has a

satisfying assignment if and only if ϕ′ has an assignment which satisfies exactly half of its clauses.

We conclude that HALF-3-CNF is NP-hard, and hence NP-complete.

28.Bonnie and Clyde have just robbed a bank. They have a bag of money and want to divide it

up. For each of the following scenarios, either give a polynomial-time algorithm, or prove that

the problem is NP-complete. The input in each case is a list of the n items in the bag, along

with the value of each. a. The bag contains n coins, but only 2 different denominations: some

coins are worth x dollars, and some are worth y dollars. Bonnie and Clyde wish to divide the

money exactly evenly. b. The bag contains n coins, with an arbitrary number of different

denominations, but each denomination is a nonnegative integer power of 2, i.e., the possible

denominations are 1 dollar, 2 dollars, 4 dollars, etc. Bonnie and Clyde wish to divide the money

exactly evenly. c. The bag contains n checks, which are, in an amazing coincidence, made out

to “Bonnie or Clyde.” They wish to divide the checks so that they each get the exact same

amount of money. d. The bag contains n checks as in part (c), but this time Bonnie and Clyde

are willing to accept a split in which the difference is no larger than 100 dollars

The answer:

A problem is said to be in a set of NP-Complete if that can be solved in polynomial time, here NP

is abbreviation of non-deterministic polynomial time. For example, if a language named L is

contained in the set NP, then there exists an algorithm A for a constant c and L = {x ∈ 0,1 : there

exists a certificate y with |y| = O(|x|c) such that A(x,y) = 1}. Any problem that is convertible to

the decision problem will surely be the polynomial time.

29

a.Consider that there are m coins whose worth is X dollars each and rest n−m coins worth y

dollars each. So the sum total of the worth of all the coins is S = mx+(n−m)y dollars. So for equal

division of the total worth Bonnie and Clyde must take S/2 dollars each. The division of coins can

be done in a manner so that each of them have equal amount so for division assume that either

Bonnie or Clyde start taking the coins and take exactly half worth coins.

Assume that Bonnie first start to take the coins and he is free to take any of the coin that is

either x worth or y worth ad any number of coins whose worth is exactly S/2 dollars. lf Bonnie

takes p number of coins of dollar x where 0 ≤ p ≤ m then to check how many coins of y dollars

Bonnie should take that is to check if px ≤ S/2 and

. Bonnie can

take 0,1,2,...,m coins of value x dollars so there are maximum m + 1 possibilities to check the

worth of remaining coins, because Bonnie can take at most m coins. For every possibility, we will

perform a fixed number of operations, that’s why this is a polynomial time solvable

algorithm.

b.

The process of division of coins between Bonnie and Clyde is as when the nomenclature

of the coins are represented as 2’s power.

1) Arrange all the n coins in the non increasing order of their denominations that is 20,21,22,....

Firstly, give Bonnie the coin with greatest worth or denomination after that give Clyde the

coins the sum of whose worth is same as the coin given to Bonnie.

2) Now the worth of remaining coins will be greater than or equal to the sum of worth of coins

which are with Bonnie and Clyde because the denominations of coins are raised to

power of two.

3) Now from remaining coins give the coin which has maximum worth to Bonnie and again

give same worth coins to Clyde and repeat this process until all the coins are divided.

4) In this process if we give a coin to Bonnie which is of cost p and the sum of worth of rest of

the coins is less than p then coins can’t be divided equally between them else it is

possible.

c. In the check division between Bonnie and Clyde the partition of checks is as:

First calculate the total worth of checks given to the Bonnie check whether its total is S/2 and

if it is then it is correct.Here S is the sum of total worth of the checks of Bonnie and Clyde. This

check division problem can be converted to Subset-Sum problem which is a polynomial time

30

problem.For its reduction consider a set P which contains numbers in it. lf a set S′ used to

represent the sum total of all the numbers contained in P and t denotes the target number then

from set P ′ = P ∪{S′ −2t}, the numbers in the set P are summed up to t, only in the condition

when the set P ′ can be divided into two sets with same sum. The reduction is polynomial which

is clear. Since we know that the Subset-Sum problem is NPC, and hence this check division

problem is also non polynomial.

d.

In the case when Bonnie and Clyde are ready to have unequal division of check worth

of difference at most 100 then in this first of all we simply check if the sum of checks given to

Bonnie and Clyde have a difference less than 100. And it is polynomial time verifiable. We can

convert this conditional check division problem to the simple check division problem defined in

part problem which is a NPC problem. Now for conversion of problem consider that the most

basic unit of worth on check is 1$ without any loss in generality.

ln simple check division problem a set of checks C = {C1,C2,...,Cn}, is given and it is to be decide

that they can be divided into two equal parts of same worth.This problem is NPC problem. Now

for the problem in which there can be a difference of 100 dollars between the worth of money

divided a set is created as {C1.1000,C2.1000,...,Cn.1000}. The set C will be polynomial time only

if this new created set is polynomial time can be divided into two parts with maximum difference

of 100 dollars. This is polynomial time because the division of set C is polynomial time.

29. Given a graph, we take all the edge sets of complete subgraphs, and consider them as the

input of the set covering problem. In the following graph, e.g., the four vertices and six edges

on each face make a complete subgraph.

a)

Find a minimum set covering of the graph below.

b)

Somebody argues that this problem is NP-complete because it is a special case of set

overing problem. Is he correct? If not, is the problem in P, in NP-complete, or neither?

31

The answer:

a)

Assume that the set of each face in the following figure is Gi = (V,E)(i = 1,2,3,4,5,6),

the minimum set covering of the graph below may be G1 ∨ G2 ∨ G3 ∨ G4 ∨ G5 ∧ G6.

b)

Yes, I agree that this problem is NP-complete.

30. Give a polynomial-time reduction from isomorphism to the new problem.

Answer:In the Isomorphism, it is clear that there is an n×n permutation matrix P such that PT

AP = B.

We assume that

matrix which holds

. If there exist n × n permutation

and

, then there is a matrix P = P3 which make that

isomorphism polynomial is reduced to the new problem.

31. Both SAT (with n variables) and vertex cover (with n vertices) can be solved in

O(2n) time. Design O(cn)−time algorithms with c < 1.9 for 4-SAT and vertex cover.

The answe:

We design an efficient binary search algorithm: use some simple bookkeeping. As we pass

through the tree we keep track of which clauses are satisfied and which are not. As soon as we

find one that is not satisfied, we do not need to explore further and we can backtrack. Similarly,

if we find a situation where all of the clauses are already satisfied before we reach a leaf then

we can return SAT without exploring further. This means that the variables below this point can

take any value.

32

For example: ϕ := (x1 ∨ x2) ∧ (x1 ∨ x2 ∨ x3 ∨ x4) ∧ (x1 ∨ x3 ∨ x4) ∧ (x1 ∨ x2 ∨ x3) ∧ (x1 ∨ x2 ∨ x4)

∧ (x1 ∨ x3 ∨ x4) ∧ (x2 ∨ x3) , when we pass down the first branch and set x1 to false and x2 to false

we have already contradicted clause 1 which was (x1 ∨ x2), and so there is no reason to proceed

further. Continuing in this way we only need to search a subset of the full tree. We find the first

satisfying solution when x1,x2,x3 =true, true, true and need not continue to the leaf. The setting

of a4 is immaterial.

32. Analyze the ratio of the greedy algorithm for vertex cover and give tight examples

The answer :

The greedy method works by picking, at each stage, the set S that covers the greatest number of

remaining elements that are uncovered. Firstly, we give the GREEDY-VERTEX-COVER as

follow.

Algorithm 1 GREEDY-VERTEX-COVER (G)

C := ∅ while E ̸=

∅ do

Choose v ∈ V of maximum degree

C := C ∪ {u}

Remove edges incident to u from E end

while

return C

In each run, the greedy algriothm always choose the highest degree vertices. Thus, the ratio is

O(logn) Let C∗ be an optimal cover. C is the output of the GREEDY-VERTEX-COVER. The

ratio is

, where

Now, we give an example.

33

W8-3.png

For greedy algorithm, the estimate of n ≤ H(d) seems to be not only n ≤ 2 estimate better.

theoretically, when d ≤ 3, the performance is improved, especially when d is quite large, seems

to be meaningless, but from the above analysis shows that the upper bound is very conservative

estimate. In actual application of greedy algorithm, often can get satisfactory result, especially

in the worst case, as shown in figure 1. The results of greedy algorithm are consistent with the

exact solution. For some practical problems, therefore, the greedy algorithm can be used.

33. Show how in polynomial time we can transform one instance of the travelingsalesman

problem into another instance whose cost function satisfies the triangle inequality. The two

instances must have the same set of optimal tours. Explain why such a polynomial-time

transformation does not contradict Theorem 35.3, assuming that P ≠ NP.

Answer:Let m be some value which is larger than any edge weight. If c(u,v) denotes the cost

of the edge from u to v, then modify the weight of the edge to be H(u,v) = c(u,v)+m. (In other

words, H

is our new cost function). First we show that this forces the triangle inequality to hold. Let u,v,

and w be vertices. Then we have H(u,v) = c(u,v) + m ≤ 2m ≤ c(u,w) + m + c(w,v) + m =

H(u,w) + H(w,v). Note: it’s important that the weights of edges are nonnegative.

Next we must consider how this affects the weight of an optimal tour. First, any optimal tour

has exactly n edges (assuming that the input graph has exactly n vertices). Thus, the total cost

added to any tour in the original setup is nm. Since the change in cost is constant for every tour,

the set of optimal tours must remain the same.

34

To see why this doesn’t contradict Theorem 35.3, let H denote the cost of a solution to the

transformed problem found by a ρ -approximation algorithm and H∗ denote the cost of an

optimal solution to the transformed problem. Then we have H ≤ ρH∗. We can now transform

back to the original problem, and we have a solution of weight C = H − nm, and the optimal

solution has weight C∗ = H∗−nm. This tells us that C+nm ≤ ρ(C∗ + nm), so that C ≤ ρ(C∗)+(ρ−1)nm.

Since ρ > 1, we don’t have a constant approximation ratio, so there is no contradiction.

34. Suppose that the vertices for an instance of the traveling-salesman problem are points in

the plane and that the cost c.u;

/ is the euclidean distance

between points u and

. Show that an optimal tour never crosses

itself.

Answer:To show that the optimal tour never crosses itself, we will suppose that it did cross

itself, and then show that we could produce a new tour that had lower cost, obtaining our

contradiction because we had said that the tour we had started with was optimal, and so, was

of minimal

cost. If the tour crosses itself, there must be two pairs of vertices that are both adjacent in the

tour, so that the edge between the two pairs are crossing each other. Suppose that our tour is

S1x1x2S2y1y2S3 where S1,S2,S3 are arbitrary sequences of vertices, and {x1,x2} and {y1,y2} are the

two crossing pairs. Then, We claim that the tour given by S1x1y2 Reverse (S2)y1x2S3 has lower

cost. Since {x1,x2,y1,y2} form a quadrilateral, and the original cost was the sum of the two

diagonal lengths, and the new cost is the sums of the lengths of two opposite sides, this problem

comes down to a simple geometry problem.

Now that we have it down to a geometry problem, we just exercise our grade school geometry

muscles. Let P be the point that diagonals of the quadrilateral intersect. Then, we have that the

vertices {x1,P,y2} and {x2,P,y1} form triangles. Then, we recall that the longest that one side of a

triangle can be is the sum of the other two sides, and the inequality is strict if the triangles are

non-degenerate, as they are in our case. This means that |x1y2| | +∥x2y1∥ < ∥x1P∥ + ∥Py2∥ + ∥x2P∥

+ ∥Py1∥ = ∥x1x2∥ + ∥y1y2∥. The right hand side was the original contribution to the cost from the

35

old two edges, while the left is the new contribution. This means that this part of the cost has

decreased, while the rest of the costs in the path have remained the same. This gets us that the

new path that we constructed has strictly better cross, so we must not of had an optimal tour

that had a crossing in it.

35. Show how to implement GREEDY-SET-COVER in such a way that it runs in time O ∑S⊂F |S|

Answer:

See the algorithm LINEAR-GREEDY-SET-COVER. Note that everything in the inner most for loop

takes constant time and will only run once for each pair of letter and a set containing that letter.

However, if we sum up the number of such pairs, we get PS⊂F |S| which is what we wanted to be

linear in.

Algorithm 2 LINEAR-GREEDY-SET-COVER (F)

compute thsizes of every S ∈ F, storing them in S.size. let A

be an array of length

for S ∈ F

, consisting of empty lists

do

add S to A[S.size]

end for let A.max be the index of the largest nonempty list in

A. let L be an array of length |US∈F S| consisting of empty lists

for S ∈ F do for l

∈ S do

add S to L[l] end for end for let C be the set cover that we

will be selecting, initially empty. let T be the set of letters that

have been covered, initially empty.

while A.max¿0 do

Let S0 be any element of A[A.max].

36

add S0 to C remove S0

from A[A.max] for l ∈ S0\T

do for S ∈ L[l] do

Remove S from A[A.size]

S.size = S.size-1 Add S to

A[S.size] if A[A.max] is

empty then

A.max=A.max1 end if end for add

l to T

end for

end while

36.How would you modify the approximation scheme presented in this section to find a good

approximation to the smallest value not less than t that is a sum of some subset of the given

input list?

We’ll rewrite the relevant algorithms for finding the smallest value, modifying both TRIM and

APPROX-SUBSET-SUM. The analysis is the same as that for finding a largest sum which doesn’t

exceed t, but with inequalities reversed. Also note that in TRIM, we guarantee instead that for

each y removed, there exists z which remains on the list such that y ≤ z ≤ y(1 + δ).

Algorithm 3 TRIM(L,δ)

let m be the length of L

L′ =< ym >

last = ym for i = m − 1downto1

do if yx < last /(1 + δ) then

append yi to the end of L’ last

= ym

37

end if

end for

return L’

37. Approximating the size of a maximum clique

Let G D .V; E/ be an undirected graph. For any k 1, define G.k/ to be the undirected graph .V .k/;

E.k//, where V .k/ is the set of all ordered k-tuples of vertices from V and E.k/ is defined so

that

.

1;

2;:::;

k/ is adjacent to .w1;

w2;:::;wk/ if and only if for i D 1; 2; : : : ; k, either vertex

i is

adjacent to wi in G, or else

i D wi. Problems for Chapter 35 1135

a. Prove that the size of the maximum clique in G.k/ is equal to the kth power of the size of

the maximum clique in G. b. Argue that if there is an approximation algorithm that has a

constant approximation ratio for finding a maximum-size clique, then there is a polynomialtime approximation scheme for the problem。

a.

Answer:Given a clique D of m vertices in G, let Dk be the set of all k -tuples of vertices in

D. Then Dk is a clique in G(k), so the size of a maximum clique in G(k) is at least that of the size of

a maximum clique in G. We’ll show that the size cannot exceed that.

Let m denote the size of the maximum clique in G. We proceed by induction on k. When k =

1, we have V (1) = V and E(1) = E, so the claim is trivial. Now suppose that for k ≥ 1, the size of the

maximum clique in G(k) is equal to the kth power of the size of the maximum clique in G. Let u be

a (k+1) tuple and u′ denote the restriction of u to a k -tuple consisting of its first k entries. If {u,v}

∈ E(k+1) then {u′,v′} ∈ E(k). Suppose that the size of the maximum clique C in G(k+1) = n > mk+1. Let

C′ = {u′ | u ∈ C}. By our induction hypothesis, the size of a maximum clique in G(k) is mk, so since

C′ is a clique we must have |C′| ≤ mk < n/m. A vertex u is only removed from C′ when it is a

duplicate, which happens only when there is a v such that u,v ∈ C,u = v, and u′ = v′. If there are

only m choices for the last entry of a vertex in C, then the size can decrease by at most a factor

of m. Since the size decreases by a factor of strictly greater than m, there must be more than m

vertices which appear among the last entries of vertices in C′. Since C′ is a clique, all of its vertices

38

are connected by edges, which implies all of these vertices in G are connected by edges, implying

that G contains a

clique of size strictly greater than m, a contradiction.

b. Suppose there is an approximation algorithm that has a constant approximation ratio c for

finding a maximum size clique. Given G, form G(k), where k will be chosen shortly. Perform the

approximation algorithm on G(k). If n is the maximum size clique of G(k) returned by the

approximation algorithm and m is the actual maximum size clique of G, then we have mk/n ≤ c.

If there is a clique of size at least n in G(k), we know there is a clique of size at least n1/k in G, and

we have m/n1/k ≤ c1/k. Choosing k > 1/logc(1 + ε), this gives m/n1/k ≤ 1 + ε. Since we can form

G(k) in time polynomial in k, we just need to verify that k is a polynomial in 1/ε. To this end,

k > 1/logc(1 + ε) = lnc/ln(1 + ε) ≥ lnc/ε

where the last inequality follows from (3.17). Thus, the (1 + ε) approximation algorithm is

polynomial in the input size, and 1/ε, so it is a polynomial time approximation scheme.

37. An approximation algorithm for the 0-1 knapsack problem

Recall the knapsack problem from Section 16.2. There are n items, where the ith item is worth

i dollars and weighs wi pounds. We are also given a knapsack

that can hold at most W pounds. Here, we add the further assumptions that each weight wi

is at most W and that the items are indexed in monotonically decreasing order of their values:

1

2

n. In the 0-1 knapsack problem, we wish to find a subset of the

items whose total weight is at most W and whose total value is maximum. The fractional

knapsack problem is like the 0-1 knapsack problem, except that we are allowed to take a

fraction of each item, rather than being restricted to taking either all or none of 1138 Chapter

35 Approximation Algorithms each item. If we take a fraction xi of item i, where 0 xi 1, we

contribute xiwi to the weight of the knapsack and receive value

xi

i. Our goal is to develop a polynomial-time 2-approximation

algorithm for the 0-1 knapsack problem. In order to design a polynomial-time algorithm, we

consider restricted instances of the 0-1 knapsack problem. Given an instance I of the knapsack

problem, we form restricted instances Ij , for j D 1; 2; : : : ; n, by removing items 1; 2; : : : ; j 1

and requiring the solution to include item j (all of item j in both the fractional and 0-1

39

knapsack problems). No items are removed in instance I1. For instance Ij , let Pj denote an

optimal solution to the 0-1 problem and Qj denote an optimal solution to the fractional

problem. a. Argue that an optimal solution to instance I of the 0-1 knapsack problem is one

of fP1; P2;:::;Png. b. Prove that we can find an optimal solution Qj to the fractional problem

for instance Ij by including item j and then using the greedy algorithm in which at each step,

we take as much as possible of the unchosen item in the set fj C 1; j C 2;:::; ng with maximum

value per pound

i=wi . c. Prove that we can always construct an

optimal solution Qj to the fractional problem for instance Ij that includes at most one item

fractionally. That is, for all items except possibly one, we either include all of the item or none

of the item in the knapsack. d. Given an optimal solution Qj to the fractional problem for

instance Ij , form solution Rj from Qj by deleting any fractional items from Qj . Let

.S / denote the total value of items taken in a solution S. Prove

that

.Rj /

.Qj /=2

.Pj /=2. e. Give a polynomial-time algorithm that returns a

maximum-value solution from the set fR1; R2;:::;Rng, and prove that your algorithm is a

polynomial-time 2-approximation algorithm for the 0-1 knapsack problem.

a.Answer:Though not stated, this conclusion requires the assumption that at least one of

the values isnon-negative. Since any individual item will fit, selecting that one item would be a

solution that is better then the solution of taking no items. We know then that the optimal

solution contains at least one item. Suppose the item of largest index that it contains is item i,

then, that solution would be in Pi, since the only restriction we placed when we changed the

instance to Ii was

that it contained item i.

b.

We clearly need to include all of item j, as that is a condition of instance Ij. Then, since

we know that we will be using up all of the remaining space somehow, we want to use it up in

such a way that the average value of that space is maximized. This is clearly achieved by

maximizing the value density of all the items going in, since the final value density will be an

average of the value densities that went into it weighted by the amount of space that was used

up by items with that value density.

c. Since we are greedily selecting items based off of the amount of value per space, we

onlystop once we can no longer fit any more, and each time before putting some faction of the

item with the next least value per space, we complete putting in the item we currently are. This

means that there is at most one item fractionally.

40

d.

First, it is trivial that v (Qj)/2 ≥ v (Pj)/2 since we are trying to maximize a quantity, and

there are strictly fewer restrictions on what we can do in the case of the fractional packing than

in the 1 − 0 packing. To see that v (Rj) ≥ v (Qj)/2, note that among the items {1,2,...,j}, we have

the highest value item is vj because of our ordering of the items. We know that the fractional

solution must have all of item j, and there is some item that it may not have all of that is indexed

by less than j. This part of an item is all that is thrown out when going from Qj to Rj. Even if we

threw all of that item out, it still wouldn’t be as valuable as item j which we are keeping, so, we

have retained more than half of the value. So, we have proved the slightly stronger statement

that v (Rj) > v (Qj)/2 ≥ v (Pj)/2

Algorithm 4 APPROX-MAX-SPANNING-TREE(V,E)

T = ∅ for v ∈ V

do max = ∞

best = v

for u ∈ N(v) do

if w(v,u) ≥ max then

v=u

max = w(v,u)

end if

end for

T = T ∪ {v,u}

end for

return T

41