We have seen that we can do machine learning on data that is in the nice “flat

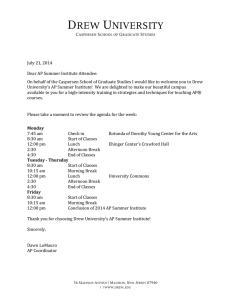

file” format

• Rows are objects

• Columns are features

Taking a real problem and “massaging” it into this format is domain dependent,

but often the most fun part of machine learning.

Let us see just one example…

| Insect ID | Abdomen Length | Antennae Length | Insect Class |
|---|---|---|---|
| 1 | 2.7 | 5.5 | Grasshopper |
| 2 | 8.0 | 9.1 | Katydid |
| 3 | 0.9 | 4.7 | Grasshopper |
| 4 | 1.1 | 3.1 | Grasshopper |
| 5 | 5.4 | 8.5 | Katydid |
| 6 | 2.9 | 1.9 | Grasshopper |
| 7 | 6.1 | 6.6 | Katydid |
| 8 | 0.5 | 1.0 | Grasshopper |
| 9 | 8.3 | 6.6 | Katydid |
| 10 | 8.1 | 4.7 | Katydid |

Western Pipistrelle (Parastrellus hesperus)

Photo by Michael Durham

A spectrogram of a bat call.

Western pipistrelle calls

We can easily measure two features of bat calls: their characteristic frequency and their call duration.

| Bat ID | Characteristic frequency | Call duration (ms) | Bat Species |
|---|---|---|---|
| 1 | 49.7 | 5.5 | Western pipistrelle |

Classification

We have seen 2 classification techniques:

• Simple linear classifier, Nearest neighbor.

Let us see two more techniques:

• Decision tree, Naïve Bayes

There are other techniques:

• Neural Networks, Support Vector Machines, … that we will not consider.

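I have a box of apples…

If Pr(X = good) = p, then Pr(X = bad) = 1 − p, and the entropy of X is given by

$$H(X) = -p \log_2 p - (1 - p) \log_2 (1 - p)$$

[Figure: H(X), from 0 to 1, plotted against p, from 0 to 1.]

The binary entropy function attains its maximum value when p = 0.5.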
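The binary entropy function can be sketched in a few lines of Python. This is a minimal illustration; the name `binary_entropy` is made up for this example, and 0 log(0) is treated as 0.

```python
import math


def binary_entropy(p: float) -> float:
    """Entropy, in bits, of a two-outcome variable with Pr(X = good) = p."""
    if p in (0.0, 1.0):
        # 0 log(0) is taken to be 0, so a certain outcome has zero entropy.
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


# Entropy rises from 0 at p = 0, peaks at p = 0.5, and falls back to 0 at p = 1.
for p in (0.0, 0.1, 0.5, 0.9, 1.0):
    print(f"p = {p:.1f}  H(X) = {binary_entropy(p):.4f}")
```

A box that is all good or all bad apples has no uncertainty; a half-and-half box has the most.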

Decision Tree Classifier

Ross Quinlan

[Figure: scatter plot of Antenna Length against Abdomen Length (both 1 to 10), partitioned by the decision tree below.]

Abdomen Length > 7.1?
    yes: Katydid
    no: Antenna Length > 6.0?
        yes: Katydid
        no: Grasshopper

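[Figure: an insect identification key drawn as a decision tree, with the Yes/No tests “Antennae shorter than body?”, “3 Tarsi?” and “Foretibia has ears?”, and the leaves Grasshopper, Cricket, Katydids and Camel Cricket.]

Decision trees predate computers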

Decision Tree Classification

• Decision tree

– A flow-chart-like tree structure

– Internal node denotes a test on an attribute

– Branch represents an outcome of the test

– Leaf nodes represent class labels or class distribution

• Decision tree generation consists of two phases

– Tree construction

• At start, all the training examples are at the root

• Partition examples recursively based on selected attributes

– Tree pruning

• Identify and remove branches that reflect noise or outliers

• Use of decision tree: Classifying an unknown sample

– Test the attribute values of the sample against the decision tree

How do we construct the decision tree?

• Basic algorithm (a greedy algorithm)

– Tree is constructed in a top-down recursive divide-and-conquer manner

– At start, all the training examples are at the root

– Attributes are categorical (if continuous-valued, they can be discretized

in advance)

– Examples are partitioned recursively based on selected attributes.

– Test attributes are selected on the basis of a heuristic or statistical

measure (e.g., information gain)

• Conditions for stopping partitioning

– All samples for a given node belong to the same class

– There are no remaining attributes for further partitioning – majority

voting is employed for classifying the leaf

– There are no samples left
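The greedy, top-down construction and its stopping conditions can be sketched as below. This is an illustrative sketch, not a specific published implementation: the function names, the nested-tuple tree representation, and the four-row toy dataset are all assumptions made for the example. It handles categorical attributes only.

```python
import math
from collections import Counter


def entropy(labels):
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in Counter(labels).values())


def by_information_gain(rows, labels, attributes):
    """Pick the attribute whose children have the lowest weighted entropy."""
    def remainder(attribute):
        total = 0.0
        for value in {row[attribute] for row in rows}:
            subset = [l for r, l in zip(rows, labels) if r[attribute] == value]
            total += len(subset) / len(labels) * entropy(subset)
        return total
    # Lowest remaining entropy is the same as highest information gain.
    return min(attributes, key=remainder)


def build_tree(rows, labels, attributes):
    """Return a class label (leaf) or a pair (attribute, {value: subtree})."""
    if len(set(labels)) == 1:      # all samples belong to the same class
        return labels[0]
    if not attributes:             # no attributes left: majority voting
        return Counter(labels).most_common(1)[0][0]
    best = by_information_gain(rows, labels, attributes)
    remaining = [a for a in attributes if a != best]
    children = {}
    # Only values that occur are branched on, so no child is ever empty.
    for value in sorted({row[best] for row in rows}):
        pairs = [(r, l) for r, l in zip(rows, labels) if r[best] == value]
        children[value] = build_tree(
            [r for r, _ in pairs], [l for _, l in pairs], remaining
        )
    return (best, children)


# Hypothetical toy data, for illustration only.
rows = [
    {"hair": "short", "tall": "yes"},
    {"hair": "short", "tall": "no"},
    {"hair": "long", "tall": "yes"},
    {"hair": "long", "tall": "no"},
]
labels = ["M", "M", "F", "F"]
tree = build_tree(rows, labels, ["hair", "tall"])
print(tree)
```

Pruning is left out of this sketch; it covers the tree construction phase only.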

Information Gain as a Splitting Criterion

• Select the attribute with the highest information gain (information

gain is the expected reduction in entropy).

• Assume there are two classes, P and N

– Let the set of examples S contain p elements of class P and n elements of

class N

– The amount of information needed to decide if an arbitrary example in S belongs to P or N is defined as

$$E(S) = -\frac{p}{p+n} \log_2 \frac{p}{p+n} - \frac{n}{p+n} \log_2 \frac{n}{p+n}$$

0 log(0) is defined as 0

Information Gain in Decision Tree Induction

• Assume that using attribute A, a current set will be

partitioned into some number of child sets

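• The encoding information that would be gained by branching on A is

$$\mathrm{Gain}(A) = E(\text{current set}) - \sum E(\text{all child sets})$$

where the entropy of each child set is weighted by the fraction of the current set that falls into it.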

Note: entropy is at its minimum if the collection of objects is completely uniform
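As a rough sketch, the two definitions translate directly into code. The function names and the example counts below are chosen for illustration; each set is described only by its (p, n) class counts.

```python
import math


def entropy(p: int, n: int) -> float:
    """E(S) for a set with p examples of class P and n examples of class N."""
    total = p + n
    result = 0.0
    for count in (p, n):
        if count:  # 0 log(0) is defined as 0
            fraction = count / total
            result -= fraction * math.log2(fraction)
    return result


def gain(parent, children):
    """Information gain of a split; parent and each child are (p, n) pairs."""
    total = sum(parent)
    weighted = sum((p + n) / total * entropy(p, n) for p, n in children)
    return entropy(*parent) - weighted


# A set of 4 P and 5 N examples, split into children (4P, 1N) and (0P, 4N).
print(f"E(S)  = {entropy(4, 5):.4f}")
print(f"Gain  = {gain((4, 5), [(4, 1), (0, 4)]):.4f}")
```

A completely uniform child such as (0P, 4N) contributes zero entropy, which is why splits that isolate a pure group score well.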

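| Person | Hair Length | Weight | Age | Class |
|---|---|---|---|---|
| Homer | 0” | 250 | 36 | M |
| Marge | 10” | 150 | 34 | F |
| Bart | 2” | 90 | 10 | M |
| Lisa | 6” | 78 | 8 | F |
| Maggie | 4” | 20 | 1 | F |
| Abe | 1” | 170 | 70 | M |
| Selma | 8” | 160 | 41 | F |
| Otto | 10” | 180 | 38 | M |
| Krusty | 6” | 200 | 45 | M |
| Comic | 8” | 290 | 38 | ? |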

$$\mathrm{Entropy}(S) = -\frac{p}{p+n} \log_2 \frac{p}{p+n} - \frac{n}{p+n} \log_2 \frac{n}{p+n}$$

Entropy(4F,5M) = -(4/9)log2(4/9) - (5/9)log2(5/9) = 0.9911

Let us try splitting on Hair length: Hair Length <= 5?

yes: 1F, 3M (entropy 0.8113); no: 3F, 2M (entropy 0.9710)

Gain(A) = E(Current set) − Σ E(all child sets)

Gain(Hair Length <= 5) = 0.9911 – (4/9 * 0.8113 + 5/9 * 0.9710) = 0.0911

Entropy(4F,5M) = -(4/9)log2(4/9) - (5/9)log2(5/9) = 0.9911

Let us try splitting on Weight: Weight <= 160?

yes: 4F, 1M (entropy 0.7219); no: 0F, 4M (entropy 0)

Gain(A) = E(Current set) − Σ E(all child sets)

Gain(Weight <= 160) = 0.9911 – (5/9 * 0.7219 + 4/9 * 0) = 0.5900

Entropy(4F,5M) = -(4/9)log2(4/9) - (5/9)log2(5/9) = 0.9911

Let us try splitting on Age: Age <= 40?

yes: 3F, 3M (entropy 1); no: 1F, 2M (entropy 0.9183)

Gain(A) = E(Current set) − Σ E(all child sets)

Gain(Age <= 40) = 0.9911 – (6/9 * 1 + 3/9 * 0.9183) = 0.0183

Of the 3 features we had, Weight

was best. But while people who

weigh over 160 are perfectly

classified (as males), the under 160

people are not perfectly

classified… So we simply recurse!

This time we find that we

can split on Hair length, and

we are done!
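The three candidate splits, and the recursive step on the lighter group, can be checked with a short script. This is a sketch: the tuple layout and helper names are assumptions for the example, the thresholds are the ones used in the slides, and the unlabeled “Comic” row is left out because it has no class.

```python
import math
from collections import Counter

# (name, hair length in inches, weight, age, class) from the Person table.
PEOPLE = [
    ("Homer", 0, 250, 36, "M"), ("Marge", 10, 150, 34, "F"),
    ("Bart", 2, 90, 10, "M"), ("Lisa", 6, 78, 8, "F"),
    ("Maggie", 4, 20, 1, "F"), ("Abe", 1, 170, 70, "M"),
    ("Selma", 8, 160, 41, "F"), ("Otto", 10, 180, 38, "M"),
    ("Krusty", 6, 200, 45, "M"),
]
HAIR, WEIGHT, AGE, CLASS = 1, 2, 3, 4


def entropy(labels):
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in Counter(labels).values())


def gain(rows, column, threshold):
    """Information gain of the test rows[column] <= threshold."""
    yes = [r[CLASS] for r in rows if r[column] <= threshold]
    no = [r[CLASS] for r in rows if r[column] > threshold]
    children = sum(len(s) / len(rows) * entropy(s) for s in (yes, no) if s)
    return entropy([r[CLASS] for r in rows]) - children


for name, column, threshold in [
    ("Hair Length", HAIR, 5), ("Weight", WEIGHT, 160), ("Age", AGE, 40)
]:
    print(f"Gain({name} <= {threshold}) = {gain(PEOPLE, column, threshold):.4f}")

# Recurse on the group that Weight <= 160 did not classify perfectly.
light = [r for r in PEOPLE if r[WEIGHT] <= 160]
print(f"Within Weight <= 160: Gain(Hair Length <= 2) = {gain(light, HAIR, 2):.4f}")
```

Within the lighter group, the hair-length test removes all remaining entropy, which is why the recursion stops there.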

We don’t need to keep the data around, just the test conditions.

Weight <= 160?
    no: Male
    yes: Hair Length <= 2?
        yes: Male
        no: Female

How would these people be classified?

It is trivial to convert Decision

Trees to rules…

Rules to Classify Males/Females

If Weight greater than 160, classify as Male

Elseif Hair Length less than or equal to 2, classify as Male

Else classify as Female
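Written as code, the rules are a plain if/elif/else chain. A minimal sketch (the function name `classify` is chosen for illustration):

```python
def classify(weight: float, hair_length: float) -> str:
    """Apply the three rules read off the learned decision tree."""
    if weight > 160:
        return "Male"
    elif hair_length <= 2:
        return "Male"
    else:
        return "Female"


# The unlabeled "Comic" row from the table: hair length 8, weight 290.
print(classify(weight=290, hair_length=8))
```

Note that the training data is not needed at classification time, only the two thresholds.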

Once we have learned the decision tree, we don’t even need a computer!

This decision tree is attached to a medical machine, and is designed to help

nurses make decisions about what type of doctor to call.

Decision tree for a typical shared-care setting applying

the system for the diagnosis of prostatic obstructions.

PSA

= serum prostate-specific antigen levels

PSAD = PSA density

TRUS = transrectal ultrasound

Garzotto M et al. JCO 2005;23:4322-4329

The worked examples we have

seen were performed on small

datasets. However with small

datasets there is a great danger of

overfitting the data…

When you have few datapoints,

there are many possible splitting

rules that perfectly classify the

data, but will not generalize to

future datasets.

Yes

No

Wears green?

Female

Male

For example, the rule “Wears green?” perfectly classifies the data, so does “Mother’s name is Jacqueline?”, so does “Has blue shoes”…

Avoid Overfitting in Classification

• The generated tree may overfit the training data

– Too many branches, some may reflect anomalies due to

noise or outliers

– The result is poor accuracy for unseen samples

• Two approaches to avoid overfitting

– Prepruning: Halt tree construction early—do not split a

node if this would result in the goodness measure falling

below a threshold

• Difficult to choose an appropriate threshold

– Postpruning: Remove branches from a “fully grown”

tree—get a sequence of progressively pruned trees

• Use a set of data different from the training data to

decide which is the “best pruned tree”

Which of the “Pigeon Problems” can be

solved by a Decision Tree?

1) Deep Bushy Tree

2) Useless

3) Deep Bushy Tree

[Figure: the three “Pigeon Problem” scatter plots, two with axes from 1 to 10 and one with axes from 10 to 100.]

The Decision Tree has a hard time with correlated attributes

Advantages/Disadvantages of Decision Trees

• Advantages:

– Easy to understand (Doctors love them!)

– Easy to generate rules

• Disadvantages:

– May suffer from overfitting.

– Classifies by rectangular partitioning (so does

not handle correlated features very well).

– Can be quite large – pruning is necessary.

– Does not handle streaming data easily

How would we go about building a

classifier for projectile points?

[Figure: an unclassified projectile point, with its length and width marked.]

I. Location of maximum blade width
1. Proximal quarter
2. Secondmost proximal quarter
3. Secondmost distal quarter
4. Distal quarter

II. Base shape
1. Arc-shaped
2. Normal curve
3. Triangular
4. Folsomoid

III. Basal indentation ratio
1. No basal indentation
2. 0·90–0·99 (shallow)
3. 0·80–0·89 (deep)

IV. Constriction ratio
1. 1·00
2. 0·90–0·99
3. 0·80–0·89
4. 0·70–0·79
5. 0·60–0·69
6. 0·50–0·59

V. Outer tang angle
1. 93–115
2. 88–92
3. 81–87
4. 66–88
5. 51–65
6. <50

VI. Tang-tip shape
1. Pointed
2. Round
3. Blunt

VII. Fluting
1. Absent
2. Present

VIII. Length/width ratio
1. 1·00–1·99
2. 2·00–2·99
3. 3·00–3·99
4. 4·00–4·99
5. 5·00–5·99
6. ≥6·00

length = 3.10

width = 1.45

length/width ratio = 2.13

Coding the point on attributes I to VIII in order gives 21225212.

[Figure: a decision tree over these attributes, with the yes/no tests “Fluting? = TRUE?”, “Base Shape = 4” and “Length/width ratio = 2”, and leaves including Mississippian and Late Archaic.]

We could also use the Nearest Neighbor Algorithm

21225212 - ?

21265122 - Late Archaic

14114214 - Transitional Paleo

24225124 - Transitional Paleo

41161212 - Late Archaic
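A minimal sketch of nearest neighbor on these attribute codes follows. The slides do not say which distance measure is used; counting the positions where two codes differ (Hamming distance) is an assumption made here for illustration, as are the function names.

```python
# Labeled codes from the slide; each digit is the category of one attribute.
TRAINING = {
    "21265122": "Late Archaic",
    "14114214": "Transitional Paleo",
    "24225124": "Transitional Paleo",
    "41161212": "Late Archaic",
}


def hamming(a: str, b: str) -> int:
    """Number of attribute positions where the two codes disagree (assumed measure)."""
    return sum(x != y for x, y in zip(a, b))


def nearest(query: str):
    """Return (closest code, its label, distance) among the training codes."""
    best = min(TRAINING, key=lambda code: hamming(query, code))
    return best, TRAINING[best], hamming(query, best)


for code, label in TRAINING.items():
    print(code, label, "distance", hamming("21225212", code))
print("nearest:", nearest("21225212"))
```

Under this measure the query differs from 21265122 in three attributes and from every other example in at least four. A measure that respects the ordering of the categories could give a different answer.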

It might be better to use the shape

directly in the decision tree…

Lexiang Ye and Eamonn Keogh (2009) Time

Series Shapelets: A New Primitive for Data

Mining. SIGKDD 2009

Decision Tree for Arrowheads

[Figure: training data (subset) for the classes Clovis, Avonlea and Mix; a Shapelet Dictionary with shapelet I (Clovis, 11.24) and shapelet II (Avonlea, 85.47); and the Arrowhead Decision Tree, which tests shapelets I and II and has leaves 0, 1 and 2.]

The shapelet decision tree classifier achieves an accuracy of 80.0%; the accuracy of the rotation-invariant one-nearest-neighbor classifier is 68.0%.

Naïve Bayes Classifier

Thomas Bayes

1702 - 1761

We will start off with a visual intuition, before looking at the math…

[Figure: scatter plot of Grasshoppers and Katydids, Antenna Length against Abdomen Length (both 1 to 10).]

Remember this example?

Let’s get lots more data…

Remember this example?

Let’s get lots more data…

With a lot of data, we can build a histogram. Let us

just build one for “Antenna Length” for now…

[Figure: histograms of Antenna Length (1 to 10) for Katydids and Grasshoppers.]

We can leave the

histograms as they are,

or we can summarize

them with two normal

distributions.

Let us use two normal

distributions for ease

of visualization in the

following slides…

• We want to classify an insect we have found. Its antennae are 3 units long.

How can we classify it?

• We can just ask ourselves, given the distributions of antennae lengths we have seen, is it more probable that our insect is a Grasshopper or a Katydid?

• There is a formal way to discuss the most probable classification…

p(cj | d) = probability of class cj, given that we have observed d

Antennae length is 3 (10 Grasshoppers and 2 Katydids at this length):

P(Grasshopper | 3) = 10 / (10 + 2) = 0.833

P(Katydid | 3) = 2 / (10 + 2) = 0.167

Antennae length is 7 (3 Grasshoppers and 9 Katydids at this length):

P(Grasshopper | 7) = 3 / (3 + 9) = 0.250

P(Katydid | 7) = 9 / (3 + 9) = 0.750

Antennae length is 5 (6 Grasshoppers and 6 Katydids at this length):

P(Grasshopper | 5) = 6 / (6 + 6) = 0.500

P(Katydid | 5) = 6 / (6 + 6) = 0.500
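The three cases above all follow one pattern: divide each class count at the observed length by the total count at that length. A small sketch, using the counts from the slides (the names `BINS` and `posterior_from_counts` are made up for the example):

```python
# Counts of each class at three antenna lengths, as read off the slides.
BINS = {
    3: {"Grasshopper": 10, "Katydid": 2},
    7: {"Grasshopper": 3, "Katydid": 9},
    5: {"Grasshopper": 6, "Katydid": 6},
}


def posterior_from_counts(counts):
    """p(class | observed value), estimated as each class's share of the counts."""
    total = sum(counts.values())
    return {cls: count / total for cls, count in counts.items()}


for length, counts in BINS.items():
    post = posterior_from_counts(counts)
    summary = ", ".join(f"P({cls} | {length}) = {p:.3f}" for cls, p in post.items())
    print(summary)
```

At length 5 the two classes are tied, so the observation alone cannot decide between them.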

Bayes Classifiers

That was a visual intuition for a simple case of the Bayes classifier,

also called:

• Idiot Bayes

• Naïve Bayes

• Simple Bayes

We are about to see some of the mathematical formalisms, and

more examples, but keep in mind the basic idea.

Find out the probability of the previously unseen instance

belonging to each class, then simply pick the most probable class.

Bayes Classifiers

• Bayesian classifiers use Bayes theorem, which says

p(cj | d) = p(d | cj) p(cj) / p(d)

• p(cj | d) = probability of instance d being in class cj. This is what we are trying to compute.

• p(d | cj) = probability of generating instance d given class cj. We can imagine that being in class cj causes you to have feature d with some probability.

• p(cj) = probability of occurrence of class cj. This is just how frequent the class cj is in our database.

• p(d) = probability of instance d occurring. This can actually be ignored, since it is the same for all classes.

Assume that we have two classes c1 = male, and c2 = female.

We have a person whose sex we do not know, say “drew” or d. (Note: “Drew” can be a male or female name: Drew Barrymore, Drew Carey.)

Classifying drew as male or female is equivalent to asking: is it more probable that drew is male or female? I.e., which is greater, p(male | drew) or p(female | drew)?

p(male | drew) = p(drew | male) p(male) / p(drew)

• p(drew | male): What is the probability of being called “drew” given that you are a male?

• p(male): What is the probability of being a male?

• p(drew): What is the probability of being named “drew”? (Actually irrelevant, since it is the same for all classes.)

This is Officer Drew (who arrested me in

1997). Is Officer Drew a Male or Female?

Luckily, we have a small

database with names and sex.

We can use it to apply Bayes

rule…

Officer Drew

p(cj | d) = p(d | cj) p(cj) / p(d)

| Name | Sex |
|---|---|
| Drew | Male |
| Claudia | Female |
| Drew | Female |
| Drew | Female |
| Alberto | Male |
| Karin | Female |
| Nina | Female |
| Sergio | Male |

p(male | drew) = (1/3 * 3/8) / (3/8) = 0.125 / (3/8) = 0.333

p(female | drew) = (2/5 * 5/8) / (3/8) = 0.250 / (3/8) = 0.667

Officer Drew is more likely to be a Female.

Officer Drew IS a female!
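The same calculation can be run directly against the small database. This sketch uses exact fractions so the intermediate values match the slides; the name `posterior` and the list layout are assumptions for the example.

```python
from fractions import Fraction

# The name/sex database from the slide.
DATABASE = [
    ("Drew", "Male"), ("Claudia", "Female"), ("Drew", "Female"),
    ("Drew", "Female"), ("Alberto", "Male"), ("Karin", "Female"),
    ("Nina", "Female"), ("Sergio", "Male"),
]


def posterior(name: str, sex: str) -> Fraction:
    """p(sex | name) = p(name | sex) * p(sex) / p(name), estimated by counting."""
    n = len(DATABASE)
    names_in_class = [nm for nm, s in DATABASE if s == sex]
    p_name_given_sex = Fraction(names_in_class.count(name), len(names_in_class))
    p_sex = Fraction(len(names_in_class), n)
    p_name = Fraction(sum(nm == name for nm, _ in DATABASE), n)
    return p_name_given_sex * p_sex / p_name


for sex in ("Male", "Female"):
    p = posterior("Drew", sex)
    print(f"p({sex.lower()} | drew) = {p} = {float(p):.3f}")
```

Because p(drew) is the same for both classes, comparing the numerators 0.125 and 0.250 gives the same decision as comparing the full posteriors.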

So far we have only considered Bayes

Classification when we have one

attribute (the “antennae length”, or the

“name”). But we may have many

features.

How do we use all the features?

p(cj | d) = p(d | cj) p(cj) / p(d)

| Name | Over 170CM | Eye | Hair length | Sex |
|---|---|---|---|---|
| Drew | No | Blue | Short | Male |
| Claudia | Yes | Brown | Long | Female |
| Drew | No | Blue | Long | Female |
| Drew | No | Blue | Long | Female |
| Alberto | Yes | Brown | Short | Male |
| Karin | No | Blue | Long | Female |
| Nina | Yes | Brown | Short | Female |
| Sergio | Yes | Blue | Long | Male |

• To simplify the task, naïve Bayesian classifiers assume attributes have independent distributions, and thereby estimate

p(d|cj) = p(d1|cj) * p(d2|cj) * … * p(dn|cj)

That is, the probability of class cj generating instance d equals the probability of class cj generating the observed value for feature 1, multiplied by the probability of class cj generating the observed value for feature 2, multiplied by…

Officer Drew is blue-eyed, over 170cm tall, and has long hair:

p(officer drew|cj) = p(over_170cm = yes|cj) * p(eye = blue|cj) * …

p(officer drew| Female) = 2/5 * 3/5 * …

p(officer drew| Male) = 2/3 * 2/3 * …
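A sketch of the full product for Officer Drew follows, using the three features named above and the class frequencies from the same table. Leaving the name out of the product and multiplying by the class prior are choices made for this example; the slides elide the remaining terms.

```python
from fractions import Fraction

# (over 170cm, eye color, hair length, sex) for the eight people in the table.
ROWS = [
    ("No", "Blue", "Short", "Male"), ("Yes", "Brown", "Long", "Female"),
    ("No", "Blue", "Long", "Female"), ("No", "Blue", "Long", "Female"),
    ("Yes", "Brown", "Short", "Male"), ("No", "Blue", "Long", "Female"),
    ("Yes", "Brown", "Short", "Female"), ("Yes", "Blue", "Long", "Male"),
]
SEX = 3


def likelihood(observed, sex) -> Fraction:
    """p(d | cj) under the naive assumption: a product of per-feature fractions."""
    members = [r for r in ROWS if r[SEX] == sex]
    result = Fraction(1)
    for i, value in enumerate(observed):
        result *= Fraction(sum(r[i] == value for r in members), len(members))
    return result


def score(observed, sex) -> Fraction:
    """p(d | cj) * p(cj); p(d) is omitted because it is the same for all classes."""
    prior = Fraction(sum(r[SEX] == sex for r in ROWS), len(ROWS))
    return likelihood(observed, sex) * prior


officer_drew = ("Yes", "Blue", "Long")  # over 170cm, blue-eyed, long hair
for sex in ("Female", "Male"):
    print(sex, likelihood(officer_drew, sex), score(officer_drew, sex))
```

With only eight rows these fractions are very coarse estimates, so this shows the mechanics rather than a reliable classifier.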

The Naive Bayes classifier is often represented as this type of graph…

[Figure: a node cj with arrows to the nodes p(d1|cj), p(d2|cj), …, p(dn|cj).]

Note the direction of the arrows, which state that each class causes certain features, with a certain probability.

Naïve Bayes is fast and

space efficient

We can look up all the probabilities

with a single scan of the database and

store them in a (small) table…

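p(d1|cj)

| Sex | Over190cm | Probability |
|---|---|---|
| Male | Yes | 0.15 |
| Male | No | 0.85 |
| Female | Yes | 0.01 |
| Female | No | 0.99 |

p(d2|cj)

| Sex | Long Hair | Probability |
|---|---|---|
| Male | Yes | 0.05 |
| Male | No | 0.95 |
| Female | Yes | 0.70 |
| Female | No | 0.30 |

… p(dn|cj), with one table per feature, each with rows for Male and Female.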

Naïve Bayes is NOT sensitive to irrelevant features...

Suppose we are trying to classify a person’s sex based on several features, including eye color. (Of course, eye color is completely irrelevant to a person’s gender.)

p(Jessica | cj) = p(eye = brown | cj) * p(wears_dress = yes | cj) * …

p(Jessica | Female) = 9,000/10,000 * 9,975/10,000 * …

p(Jessica | Male) = 9,001/10,000 * 2/10,000 * …

The eye color terms are almost the same!

However, this assumes that we have good enough estimates of

the probabilities, so the more data the better.

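An obvious point. I have used a simple two class problem, and two possible values for each feature, for my previous examples. However we can have an arbitrary number of classes, or feature values.

[Figure: a node cj with arrows to the nodes p(d1|cj), p(d2|cj), …, p(dn|cj).]

p(d1|cj)

| Animal | Mass >10kg | Probability |
|---|---|---|
| Cat | Yes | 0.15 |
| Cat | No | 0.85 |
| Dog | Yes | 0.91 |
| Dog | No | 0.09 |
| Pig | Yes | 0.99 |
| Pig | No | 0.01 |

p(d2|cj)

| Animal | Color | Probability |
|---|---|---|
| Cat | Black | 0.33 |
| Cat | White | 0.23 |
| Cat | Brown | 0.44 |
| Dog | Black | 0.97 |
| Dog | White | 0.03 |
| Dog | Brown | 0.90 |
| Pig | Black | 0.04 |
| Pig | White | 0.01 |

… p(dn|cj), with rows for Cat, Dog and Pig.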

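Problem!

Naïve Bayes assumes independence of features…

[Figure: the Naïve Bayesian Classifier graph for p(d|cj), with nodes p(d1|cj), p(d2|cj), …, p(dn|cj).]

p(d1|cj)

| Sex | Over 6 foot | Probability |
|---|---|---|
| Male | Yes | 0.15 |
| Male | No | 0.85 |
| Female | Yes | 0.01 |
| Female | No | 0.99 |

p(d2|cj)

| Sex | Over 200 pounds | Probability |
|---|---|---|
| Male | Yes | 0.11 |
| Male | No | 0.80 |
| Female | Yes | 0.05 |
| Female | No | 0.95 |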

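Solution

Consider the relationships between attributes…

[Figure: the Naïve Bayesian Classifier graph for p(d|cj), now with an arc connecting p(d1|cj) and p(d2|cj).]

p(d1|cj)

| Sex | Over 6 foot | Probability |
|---|---|---|
| Male | Yes | 0.15 |
| Male | No | 0.85 |
| Female | Yes | 0.01 |
| Female | No | 0.99 |

p(d2|cj)

| Sex | Over 200 pounds | Probability |
|---|---|---|
| Male | Yes and Over 6 foot | 0.11 |
| Male | No and Over 6 foot | 0.59 |
| Male | Yes and NOT Over 6 foot | 0.05 |
| Male | No and NOT Over 6 foot | 0.35 |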

But how do we find the set of connecting arcs?

The Naïve Bayesian Classifier has a piecewise quadratic decision boundary

Katydids

Grasshoppers

Ants

Adapted from slide by Ricardo Gutierrez-Osuna

Which of the “Pigeon Problems” can be

solved by a decision tree?

[Figure: the three “Pigeon Problem” scatter plots, two with axes from 1 to 10 and one with axes from 10 to 100.]

Advantages/Disadvantages of Naïve Bayes

• Advantages:

– Fast to train (single scan). Fast to classify

– Not sensitive to irrelevant features

– Handles real and discrete data

– Handles streaming data well

• Disadvantages:

– Assumes independence of features

Summary

• We have seen the four most common algorithms used for classification.

• We have seen there is no one “best” algorithm.

• We have seen that issues like normalizing, cleaning, converting the data can make

a huge difference.

• We have only scratched the surface!

• How do we learn with no class labels? (clustering)

• How do we learn with expensive class labels? (active learning)

• How do we spot outliers? (anomaly detection)

• How do we…..

Popular Science Book

The Master Algorithm

by Pedro Domingos

Textbook

Data Mining:

by Charu C. Aggarwal

Malaria

Malaria afflicts about

4% of all humans,

killing one million of

them each year.

Malaria Deaths (2003)

www.worldmapper.org

There are interventions to mitigate the problem

A recent meta-review of randomized controlled trials

of Insecticide Treated Nets (ITNs) found that ITNs

can reduce malaria-related deaths in children by one

fifth and episodes of malaria by half.

Mosquito nets work!

How do we know where to do the

interventions, given that we have

finite resources?

One second of audio from our sensor. The Common Eastern Bumble Bee (Bombus impatiens) takes about one tenth of a second to pass the laser.

[Figure: one second of audio, amplitude (−0.2 to 0.2) against sample number (0 to 4.5 × 10^4), annotated “Background noise”, “Bee begins to cross laser” and “Bee has passed through the laser”.]

http://www.youtube.com/watch?v=WT0UAGRH0T8

One second of audio from the laser sensor. Only

Bombus impatiens (Common Eastern Bumble

Bee) is in the insectary.

[Figure: the audio clip, annotated “Background noise” and “Bee begins to cross laser”, above its Single-Sided Amplitude Spectrum of Y(t): |Y(f)| (× 10^-3) against Frequency (Hz) from 0 to 1000, with a peak at 197Hz, harmonics, and 60Hz interference. Further |Y(f)| spectra over the same frequency range follow.]

[Figure: histograms over Wing Beat Frequency (Hz), 0 to 700.]

Anopheles stephensi is a primary mosquito vector of malaria.

The yellow fever mosquito (Aedes aegypti) is a mosquito that can spread dengue fever, chikungunya, and yellow fever viruses.

[Figure: two normal curves over Wing Beat Frequency (Hz), 400 to 700, with the value 517 marked.]

Anopheles stephensi: Female, mean = 475, Std = 30

Aedes aegypti: Female, mean = 567, Std = 43

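If I see an insect with a wingbeat frequency of 500, what is it?

$$P(\text{Anopheles} \mid \text{wingbeat} = 500) = \frac{1}{\sqrt{2\pi}\,30}\, e^{-\frac{(500-475)^2}{2 \times 30^2}}$$

[Figure: the two normal curves over wingbeat frequency, 400 to 700, with the value 517 marked; 12.2% of the area under the pink curve and 8.02% of the area under the red curve are highlighted.]

What is the error rate?

Can we get more features?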
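The comparison at 500Hz can be sketched by evaluating both normal densities and picking the larger. The means and standard deviations are the ones given above; treating the two species as equally likely beforehand is an assumption made for this example, and the function names are illustrative.

```python
import math

# (mean, standard deviation) of female wingbeat frequency in Hz, from the slides.
SPECIES = {
    "Anopheles stephensi": (475.0, 30.0),
    "Aedes aegypti": (567.0, 43.0),
}


def normal_pdf(x: float, mean: float, std: float) -> float:
    """Density of a normal distribution with the given mean and std at x."""
    exponent = -((x - mean) ** 2) / (2 * std ** 2)
    return math.exp(exponent) / (math.sqrt(2 * math.pi) * std)


def classify(wingbeat: float):
    """Return the species with the higher density (equal priors assumed)."""
    densities = {
        name: normal_pdf(wingbeat, mean, std)
        for name, (mean, std) in SPECIES.items()
    }
    return max(densities, key=densities.get), densities


label, densities = classify(500.0)
for name, value in densities.items():
    print(f"{name}: density at 500Hz = {value:.5f}")
print("classified as:", label)
```

If one species were known to be much more common, each density would be multiplied by that species' prior before comparing.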

Circadian Features

[Figure: circadian plot for Aedes aegypti (yellow fever mosquito), with a time axis from 0 (midnight) through dawn, 12 (noon) and dusk to 24 (midnight), shown alongside the wingbeat frequency distributions (400 to 700).]

Suppose I observe an insect with a wingbeat frequency of 420Hz. What is it?

Suppose I observe an insect with a wingbeat frequency of 420Hz at 11:00am. What is it?

(Culex | [420Hz,11:00am]) = (6 / (6 + 6 + 0)) * (2 / (2 + 4 + 3)) = 0.111

(Anopheles | [420Hz,11:00am]) = (6 / (6 + 6 + 0)) * (4 / (2 + 4 + 3)) = 0.222

(Aedes | [420Hz,11:00am]) = (0 / (6 + 6 + 0)) * (3 / (2 + 4 + 3)) = 0.000
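The two-feature calculation multiplies each species' share of the evidence for one feature by its share for the other. A short sketch with the counts from the slide (the dictionary and function names are made up for the example):

```python
from fractions import Fraction

# Per-species counts read off the slide for each observed feature value.
WINGBEAT_420HZ = {"Culex": 6, "Anopheles": 6, "Aedes": 0}
TIME_11AM = {"Culex": 2, "Anopheles": 4, "Aedes": 3}


def share(counts, species) -> Fraction:
    """This species' fraction of the total count for one feature."""
    return Fraction(counts[species], sum(counts.values()))


scores = {
    species: share(WINGBEAT_420HZ, species) * share(TIME_11AM, species)
    for species in WINGBEAT_420HZ
}

for species, value in scores.items():
    print(f"({species} | [420Hz, 11:00am]) = {value} = {float(value):.3f}")
print("most probable:", max(scores, key=scores.get))
```

Wingbeat frequency alone cannot separate Culex from Anopheles here; the time of day is what breaks the tie.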

Blue Sky Ideas

Once you have a classifier working, you begin

to see new uses for them…

Let us see some examples..

Capturing or killing individually targeted insects

• Most efforts to capture or kill insects are “shotgun”. Many non-targeted insects (including beneficial ones) are killed/captured.

• In some cases, the ratios are 1,000 to 1 (i.e. 1,000 non-targeted insects are affected for each one that was targeted).

• We believe our sensors allow an ultra precise approach, with a ratio

approaching 1 to 1.

• This has obvious implications for SIT/metagenomics

Kill

It seems obvious you could kill a

mosquito with a powerful enough

laser and with enough time.

But we need to do it fast, with as little

power as possible.

We have gotten this down to 1/20th of

a second, and just 1 watt. (and falling)

The mosquitoes may survive the laser strike, but they cannot fly away (as was the case in the photo shown at right).

We are building a SIT Hotel

California for female

mosquitoes (you can check

out anytime you like, but

you can never leave)

Collaboration with UCR

mechanical engineers Amir Rose

and Dr. Guillermo Aguilar

Culex tarsalis

Zoom-in (after removing the wing)

Capture

We envision building robotic traps that can be left in the field, and programmed with different sampling missions.

Such traps could be placed and retrieved by drones.

Capturing live insects is important if you want to do metagenomics.

Some examples of sampling missions…

Capture examples of gravid{Aedes aegypti}

Capture insects marked{ Cripple(left-C|right-S) }

Capture examples of insects that are NOT Anopheles AND have a wingbeat frequency > 400

Capture examples of any insects with a wingbeat frequency > 500, encountered between 4:00am and 4:10am

Capture examples of fed{Anopheles gambiae} OR fed{Anopheles quadriannulatus} OR fed{Anopheles melas}

(to exclude bees, etc.)

Capture

About 10% of the insects captured

by Venus fly traps are flying insects

We believe that we can build

inexpensive mechanical traps that

can capture sex/species targeted

insects.


Classification Problem: Fourth Amendment Cases before the Supreme Court II

The Supreme Court’s search and seizure decisions, 1962–1984 terms.

Keogh vs. State of California = {0,1,1,0,0,0,1,0}

U = Unreasonable

R = Reasonable

We can also learn decision trees for

individual Supreme Court Members.

Using similar decision trees for the

other eight justices, these models

correctly predicted the majority

opinion in 75 percent of the cases,

substantially outperforming the

experts' 59 percent.

Decision Tree for Supreme Court

Justice Sandra Day O'Connor